Haiyang Xiong

Motif-Backdoor: Rethinking the Backdoor Attack on Graph Neural Networks via Motifs

Oct 25, 2022

Abstract:Graph neural networks (GNNs), with their powerful representation capability, have been widely applied in areas such as biological gene prediction and social recommendation. Recent work has shown that GNNs are vulnerable to backdoor attacks, i.e., models trained with maliciously crafted training samples are easily fooled by patched samples. Most existing studies launch the backdoor attack using a trigger that is either a randomly generated subgraph (e.g., the Erdős-Rényi backdoor), for a lower computational burden, or a gradient-based generated subgraph (e.g., the graph trojaning attack), for a more effective attack. However, how the trigger structure relates to the effect of the backdoor attack has been overlooked in the current literature. Motifs, recurrent and statistically significant subgraphs in graphs, contain rich structural information. In this paper, we rethink the trigger from the perspective of motifs and propose a motif-based backdoor attack, denoted as Motif-Backdoor. It contributes in three respects. (i) Interpretation: it provides an in-depth explanation of backdoor effectiveness by analyzing the validity of the trigger structure in terms of motifs, leading to some novel insights, e.g., using subgraphs that appear less frequently in the graph as the trigger can achieve better attack performance. (ii) Effectiveness: Motif-Backdoor reaches state-of-the-art (SOTA) attack performance in both black-box and defensive scenarios. (iii) Efficiency: based on the graph motif distribution, Motif-Backdoor can quickly obtain an effective trigger structure without target model feedback or subgraph model generation. Extensive experimental results show that Motif-Backdoor achieves SOTA performance on three popular models and four public datasets compared with five baselines.
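The insight that less frequent subgraphs make better triggers can be illustrated with a minimal sketch. It is not the paper's algorithm: it assumes undirected graphs given as edge lists, considers only the two connected 3-node motifs (open path and triangle), and the function names `count_three_node_motifs` and `rarest_motif` are hypothetical.

```python
from itertools import combinations


def count_three_node_motifs(edges):
    """Count connected induced 3-node subgraphs in one undirected graph."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    counts = {"path": 0, "triangle": 0}
    # Brute force over node triples; fine for small illustrative graphs only.
    for a, b, c in combinations(sorted(adj), 3):
        n_edges = (b in adj[a]) + (c in adj[a]) + (c in adj[b])
        if n_edges == 3:
            counts["triangle"] += 1
        elif n_edges == 2:
            counts["path"] += 1
    return counts


def rarest_motif(dataset):
    """Return the motif with the lowest total count over all graphs (assumed selection rule)."""
    total = {"path": 0, "triangle": 0}
    for edges in dataset:
        for name, n in count_three_node_motifs(edges).items():
            total[name] += n
    return min(total, key=total.get), total


dataset = [
    [(0, 1), (1, 2), (2, 3)],          # a simple path graph
    [(0, 1), (1, 2), (0, 2), (2, 3)],  # a triangle with a pendant node
]
candidate, totals = rarest_motif(dataset)
print(candidate, totals)
```

Here the motif distribution alone picks the candidate trigger structure, with no feedback from a target model, which mirrors the efficiency argument in the abstract.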

Link-Backdoor: Backdoor Attack on Link Prediction via Node Injection

Aug 14, 2022

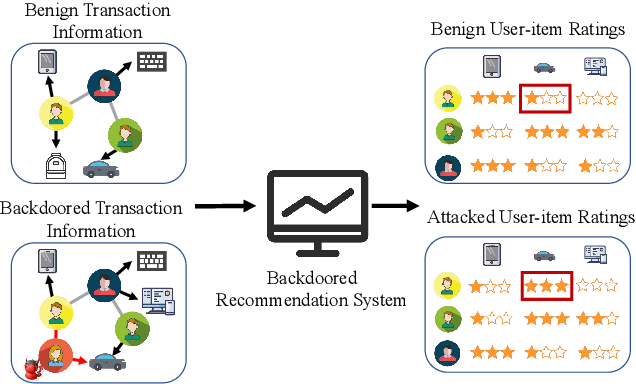

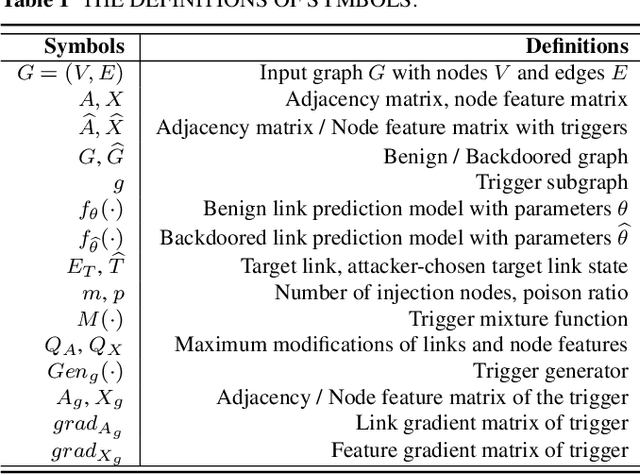

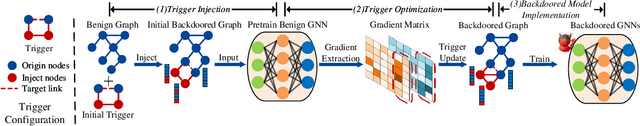

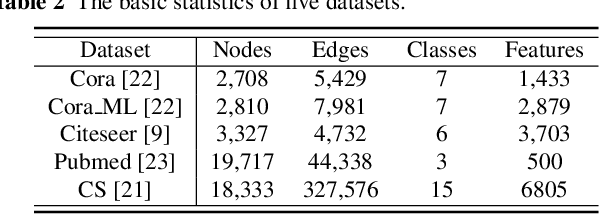

Abstract:Link prediction, which infers the undiscovered or potential links of a graph, is widely applied in the real world. Using the labeled links of the graph as training data, numerous deep learning based link prediction methods have been studied, and they achieve dominant prediction accuracy compared with non-deep methods. However, a maliciously crafted training graph can leave a specific backdoor in the deep model, so that when certain examples are fed into the model it makes wrong predictions; this is defined as a backdoor attack. It is an important aspect that has been overlooked in the current literature. In this paper, we introduce the concept of backdoor attack on link prediction and propose Link-Backdoor to reveal the training vulnerability of existing link prediction methods. Specifically, Link-Backdoor combines fake nodes with the nodes of the target link to form a trigger. Moreover, it optimizes the trigger using gradient information from the target model. Consequently, a link prediction model trained on the backdoored dataset will predict a link carrying the trigger as the target state. Extensive experiments on five benchmark datasets and five well-performing link prediction models demonstrate that Link-Backdoor achieves state-of-the-art attack success rates under both white-box (i.e., the target model parameters are available) and black-box (i.e., the target model parameters are unavailable) scenarios. Additionally, we test the attack under defensive settings, and the results indicate that Link-Backdoor can still mount successful attacks on well-performing link prediction methods. The code and data are available at https://github.com/Seaocn/Link-Backdoor.
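The node-injection idea can be sketched as follows. This is only an illustration under stated assumptions, not the released Link-Backdoor code: the graph is an undirected edge list over nodes `0..num_nodes-1`, the trigger is a fixed dense pattern (every fake node is linked to both endpoints of the target link and to every other fake node), and the gradient-based optimization of the trigger is omitted. The name `inject_trigger` is hypothetical.

```python
def inject_trigger(edges, num_nodes, target_link, num_fake=2):
    """Attach fake nodes to both endpoints of the target link (fixed, unoptimized trigger)."""
    u, v = target_link
    fake = list(range(num_nodes, num_nodes + num_fake))  # new node ids
    new_edges = set(edges)
    for f in fake:
        new_edges.add((u, f))
        new_edges.add((v, f))
    # Fully connect the fake nodes among themselves (an assumption of this sketch).
    for i in range(len(fake)):
        for j in range(i + 1, len(fake)):
            new_edges.add((fake[i], fake[j]))
    return sorted(new_edges), fake


edges = [(0, 1), (1, 2)]
poisoned, fake_nodes = inject_trigger(edges, num_nodes=3, target_link=(0, 2))
print(fake_nodes)
print(poisoned)
```

In a real attack the poisoned graph would also carry the attacker-chosen label for the target link, and which trigger links to keep would be decided from model gradients rather than fixed in advance.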

TEGDetector: A Phishing Detector that Knows Evolving Transaction Behaviors

Nov 26, 2021

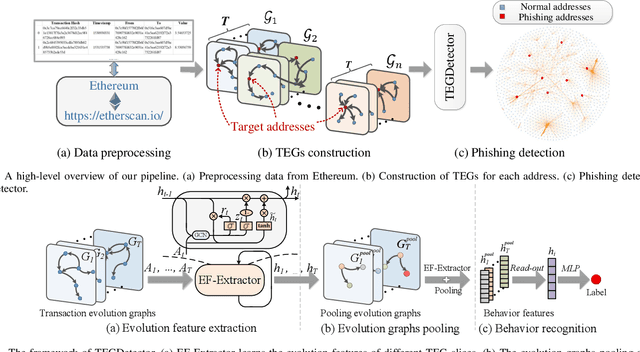

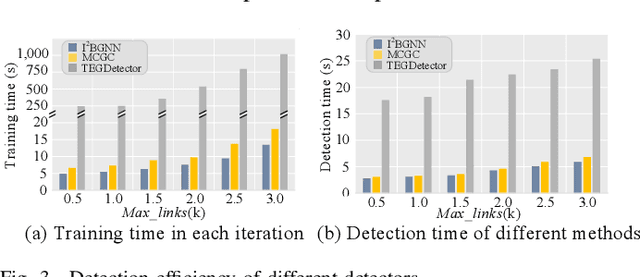

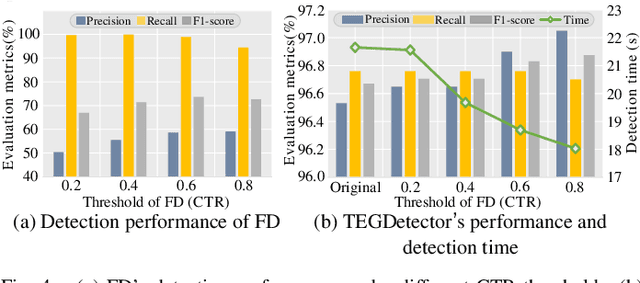

Abstract:Recently, phishing scams have posed a significant threat to blockchains. Phishing detectors focus on hunting phishing addresses. Most detectors extract the transaction behavior features of target addresses by random walks or by constructing static subgraphs. Unfortunately, random walk methods usually miss structural information due to the limited length of the sampled sequences, while static subgraph methods tend to ignore the temporal features of evolving transaction behaviors. More importantly, their performance degrades severely when malicious users intentionally hide phishing behaviors. To address these challenges, we propose TEGDetector, a dynamic graph classifier that learns evolving behavior features from transaction evolution graphs (TEGs). First, we cast the transaction series into multiple time slices, capturing the target address's transaction behaviors in different periods. Then, we provide a fast non-parametric phishing detector to narrow down the search space of suspicious addresses. Finally, TEGDetector considers both spatial and temporal evolution to fully characterize the evolving transaction behaviors. Moreover, TEGDetector uses adaptively learnt time coefficients to pay distinct attention to different periods, which provides several novel insights. Extensive experiments on a large-scale Ethereum transaction dataset demonstrate that the proposed method achieves state-of-the-art detection performance.
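The first step, casting a transaction series into time slices, might look like the sketch below. It assumes transactions are `(sender, receiver, timestamp)` tuples and that slices are equal-width intervals over the observed time range; the abstract does not specify the slicing rule, and `slice_transactions` is a hypothetical name.

```python
def slice_transactions(transactions, num_slices):
    """Split (sender, receiver, timestamp) records into equal-width time slices."""
    slices = [[] for _ in range(num_slices)]
    if not transactions:
        return slices
    t_min = min(t for _, _, t in transactions)
    t_max = max(t for _, _, t in transactions)
    span = (t_max - t_min) or 1  # avoid division by zero when all timestamps match
    for sender, receiver, t in transactions:
        # Clamp so the latest transaction falls into the last slice.
        idx = min(int((t - t_min) / span * num_slices), num_slices - 1)
        slices[idx].append((sender, receiver))
    return slices


txs = [("a", "b", 0), ("b", "c", 5), ("a", "c", 10), ("c", "d", 9)]
teg = slice_transactions(txs, num_slices=2)
print(teg)
```

Each slice is an edge list for one period, so the sequence of slices forms a simple stand-in for a transaction evolution graph.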

Dyn-Backdoor: Backdoor Attack on Dynamic Link Prediction

Oct 08, 2021

Abstract:Dynamic link prediction (DLP) makes graph predictions based on historical information. Since most DLP methods depend heavily on the training data to achieve satisfactory prediction performance, the quality of the training data is crucial. Backdoor attacks induce DLP methods to make wrong predictions through malicious training data, i.e., by generating a subgraph sequence as the trigger and embedding it in the training data. However, the vulnerability of DLP to backdoor attacks has not been studied yet. To address this issue, we propose a novel backdoor attack framework on DLP, denoted as Dyn-Backdoor. Specifically, Dyn-Backdoor generates diverse initial triggers with a generative adversarial network (GAN). Then, a subset of the links of the initial triggers is selected to form a trigger set, according to the gradient information of the attack discriminator in the GAN, so as to reduce the size of the triggers and improve the concealment of the attack. Experimental results show that Dyn-Backdoor launches successful backdoor attacks on state-of-the-art DLP models with a success rate of more than 90%. Additionally, we apply a possible defense against Dyn-Backdoor to test its resistance in defensive settings, highlighting the need for defenses against backdoor attacks on DLP.
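The link-selection step can be pictured with a small sketch. It assumes the gradient information is already available as one scalar per candidate link and that links are ranked by absolute gradient value; the abstract does not state the exact ranking rule, and `select_trigger_links` is a hypothetical name.

```python
def select_trigger_links(link_grads, k):
    """Keep the k links with the largest absolute gradient (assumed ranking rule)."""
    ranked = sorted(link_grads.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [link for link, _ in ranked[:k]]


# Illustrative gradient values, not results from the paper.
grads = {(0, 1): 0.1, (1, 2): -0.9, (2, 3): 0.5, (0, 3): 0.05}
trigger = select_trigger_links(grads, k=2)
print(trigger)
```

Keeping only a few high-impact links is what shrinks the trigger and makes the modification harder to notice.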
