Haixia Long

MHAF-YOLO: Multi-Branch Heterogeneous Auxiliary Fusion YOLO for accurate object detection

Feb 07, 2025

Abstract: Due to the effective multi-scale feature fusion capabilities of the Path Aggregation FPN (PAFPN), it has become a widely adopted component in YOLO-based detectors. However, PAFPN struggles to integrate high-level semantic cues with low-level spatial details, limiting its performance in real-world applications, especially with significant scale variations. In this paper, we propose MHAF-YOLO, a novel detection framework featuring a versatile neck design called the Multi-Branch Auxiliary FPN (MAFPN), which consists of two key modules: the Superficial Assisted Fusion (SAF) and Advanced Assisted Fusion (AAF). The SAF bridges the backbone and the neck by fusing shallow features, effectively transferring crucial low-level spatial information with high fidelity. Meanwhile, the AAF integrates multi-scale feature information at deeper neck layers, delivering richer gradient information to the output layer and further enhancing the model learning capacity. To complement MAFPN, we introduce the Global Heterogeneous Flexible Kernel Selection (GHFKS) mechanism and the Reparameterized Heterogeneous Multi-Scale (RepHMS) module to enhance feature fusion. RepHMS is globally integrated into the network, utilizing GHFKS to select larger convolutional kernels for various feature layers, expanding the vertical receptive field and capturing contextual information across spatial hierarchies. Locally, it optimizes convolution by processing both large and small kernels within the same layer, broadening the lateral receptive field and preserving crucial details for detecting smaller targets. The source code of this work is available at: https://github.com/yang0201/MHAF-YOLO.
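
The abstract describes processing large and small kernels within the same layer in a reparameterized module, but it does not give the RepHMS internals. The sketch below therefore illustrates only the generic structural re-parameterization idea: a small-kernel branch can be folded into a large-kernel branch by zero-padding the small kernel and adding the weights. The helper names (`conv2d_same`, `pad_kernel`, `merge_kernels`), the single-channel setting, and the 5x5/3x3 sizes are assumptions for illustration, not the authors' implementation.

```python
def conv2d_same(image, kernel):
    """Stride-1 cross-correlation with zero padding (output size equals input size)."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def pad_kernel(kernel, size):
    """Zero-pad a small odd-sized kernel to size x size, keeping it centred."""
    k = len(kernel)
    off = (size - k) // 2
    padded = [[0.0] * size for _ in range(size)]
    for i in range(k):
        for j in range(k):
            padded[i + off][j + off] = kernel[i][j]
    return padded


def merge_kernels(large, small):
    """Fold the small-kernel branch into the large kernel by weight addition."""
    padded = pad_kernel(small, len(large))
    return [[a + b for a, b in zip(rl, rp)] for rl, rp in zip(large, padded)]


# Hypothetical single-channel input and kernels (illustrative values only).
image = [[float((3 * r + 5 * c) % 7) for c in range(6)] for r in range(6)]
large = [[0.1 * (r - c) for c in range(5)] for r in range(5)]
small = [[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]

# Training-time view: two parallel branches whose outputs are summed.
out_large = conv2d_same(image, large)
out_small = conv2d_same(image, small)
two_branch = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(out_large, out_small)]

# Inference-time view: one convolution with the merged kernel.
merged = conv2d_same(image, merge_kernels(large, small))

max_diff = max(abs(a - b) for ra, rb in zip(two_branch, merged) for a, b in zip(ra, rb))
print(max_diff)
```

Because convolution is linear in its weights, the two views agree up to floating-point rounding, which is why such a block can keep multi-branch behaviour during training while running as a single convolution at inference.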

Multi-Branch Auxiliary Fusion YOLO with Re-parameterization Heterogeneous Convolutional for accurate object detection

Jul 05, 2024

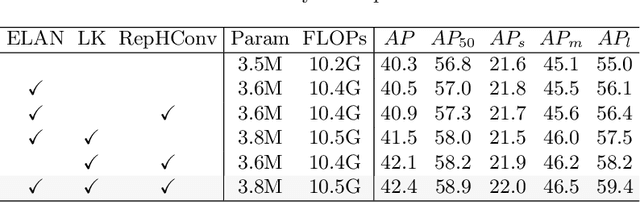

Abstract: Owing to its effective multi-scale feature fusion, the Path Aggregation FPN (PAFPN) is widely employed in YOLO detectors. However, it cannot efficiently and adaptively integrate high-level semantic information with low-level spatial information at the same time. In this paper, we propose MAF-YOLO, a novel object detection framework with a versatile neck named Multi-Branch Auxiliary FPN (MAFPN). Within MAFPN, the Superficial Assisted Fusion (SAF) module is designed to combine the output of the backbone with the neck, preserving an optimal level of shallow information to facilitate subsequent learning. Meanwhile, the Advanced Assisted Fusion (AAF) module, deeply embedded within the neck, conveys a more diverse range of gradient information to the output layer. Furthermore, our proposed Re-parameterized Heterogeneous Efficient Layer Aggregation Network (RepHELAN) module ensures that both the overall model architecture and the convolutional design use heterogeneous large convolution kernels. This preserves information related to small targets while achieving a multi-scale receptive field. Finally, the nano version of MAF-YOLO achieves 42.4% AP on COCO with only 3.76M learnable parameters and 10.51G FLOPs, outperforming YOLOv8n by about 5.1%. The source code of this work is available at: https://github.com/yang-0201/MAF-YOLO.

DuEDL: Dual-Branch Evidential Deep Learning for Scribble-Supervised Medical Image Segmentation

May 23, 2024

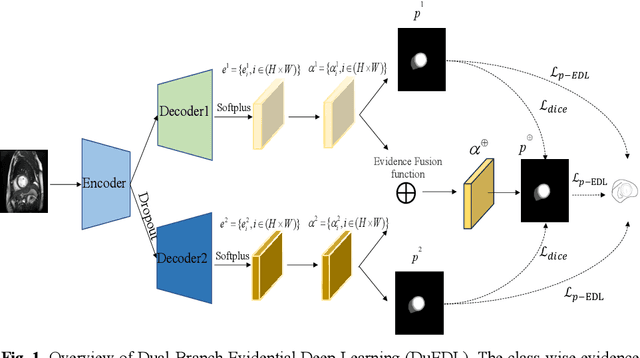

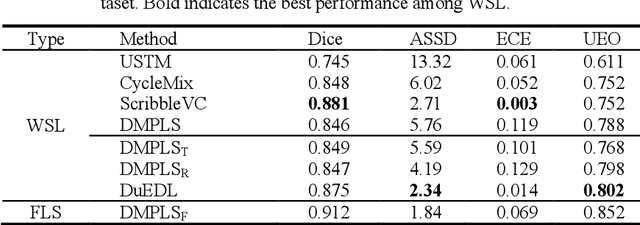

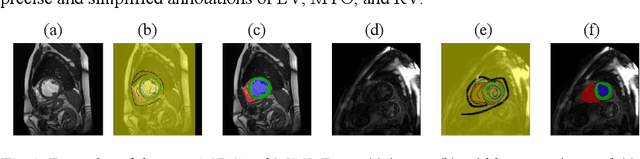

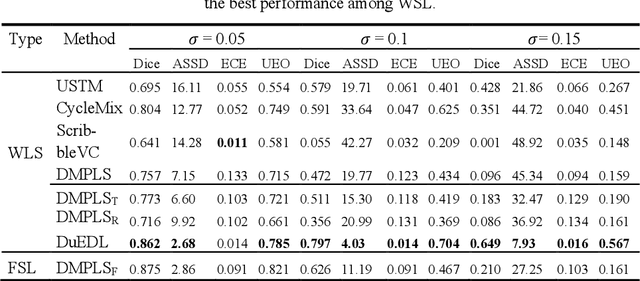

Abstract: Despite the recent progress in medical image segmentation with scribble-based annotations, the segmentation results of most models are still not robust and generalizable enough in open environments. Evidential deep learning (EDL) has recently been proposed as a promising solution to model predictive uncertainty and improve the reliability of medical image segmentation. However, directly applying EDL to scribble-supervised medical image segmentation faces a tradeoff between accuracy and reliability. To address this challenge, we propose a novel framework called Dual-Branch Evidential Deep Learning (DuEDL). First, the decoder of the segmentation network is changed to two different branches, and the evidence of the two branches is fused to generate high-quality pseudo-labels. Then the framework applies a partial evidence loss and a two-branch consistency loss for joint training of the model to adapt it to scribble-supervised learning. The proposed method was tested on two cardiac datasets: ACDC and MSCMRseg. The results show that our method significantly enhances the reliability and generalization ability of the model without sacrificing accuracy, outperforming state-of-the-art baselines. The code is available at https://github.com/Gardnery/DuEDL.
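
The abstract says that the evidence of two decoder branches is fused to produce pseudo-labels, without stating the fusion rule. As a rough illustration of the standard evidential formulation only, the sketch below turns per-pixel logits into non-negative evidence, forms Dirichlet parameters, and reads off belief, uncertainty, and expected class probabilities. The softplus evidence function, the simple averaging in `fuse_by_average`, and all example values are assumptions, not the DuEDL method itself.

```python
import math


def softplus(x):
    """Numerically stable softplus, used here as an assumed evidence function."""
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def opinion(evidence):
    """Map class evidence to (belief, uncertainty, expected probabilities)."""
    k = len(evidence)
    alpha = [e + 1.0 for e in evidence]       # Dirichlet parameters
    strength = sum(alpha)                     # Dirichlet strength S
    belief = [e / strength for e in evidence]
    uncertainty = k / strength                # belief mass + uncertainty = 1
    prob = [a / strength for a in alpha]
    return belief, uncertainty, prob


def fuse_by_average(ev_a, ev_b):
    """Hypothetical fusion rule: average the two branches' evidence."""
    return [(a + b) / 2.0 for a, b in zip(ev_a, ev_b)]


# Illustrative logits for one pixel from two decoder branches (3 classes).
logits_a = [4.0, -2.0, -2.0]
logits_b = [3.0, -1.0, -3.0]
fused = fuse_by_average([softplus(v) for v in logits_a],
                        [softplus(v) for v in logits_b])
belief, uncertainty, prob = opinion(fused)
pseudo_label = max(range(len(prob)), key=prob.__getitem__)

# A pixel where both branches are undecided carries less evidence overall.
flat = fuse_by_average([softplus(0.0)] * 3, [softplus(0.0)] * 3)
_, flat_uncertainty, _ = opinion(flat)

print(pseudo_label, round(uncertainty, 3), round(flat_uncertainty, 3))
```

In this formulation the uncertainty shrinks as total evidence grows, so a pseudo-label could be kept only where the fused uncertainty is low; any such threshold would be a further design choice not given in the abstract.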
