Gregor Cerar

Data Model Design for Explainable Machine Learning-based Electricity Applications

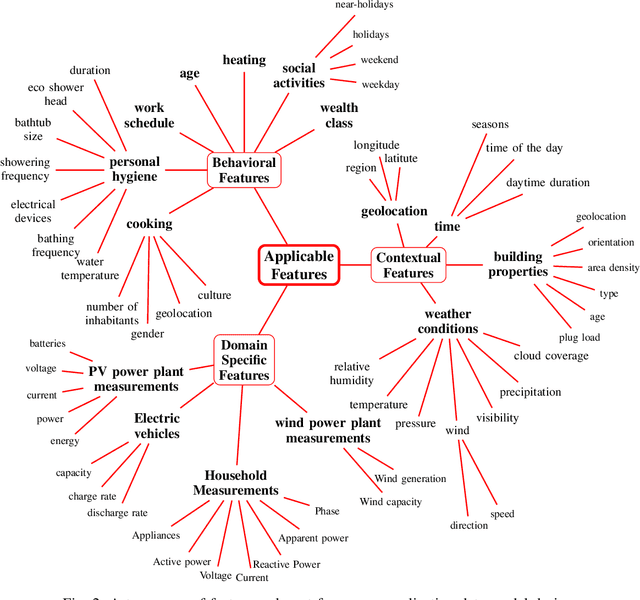

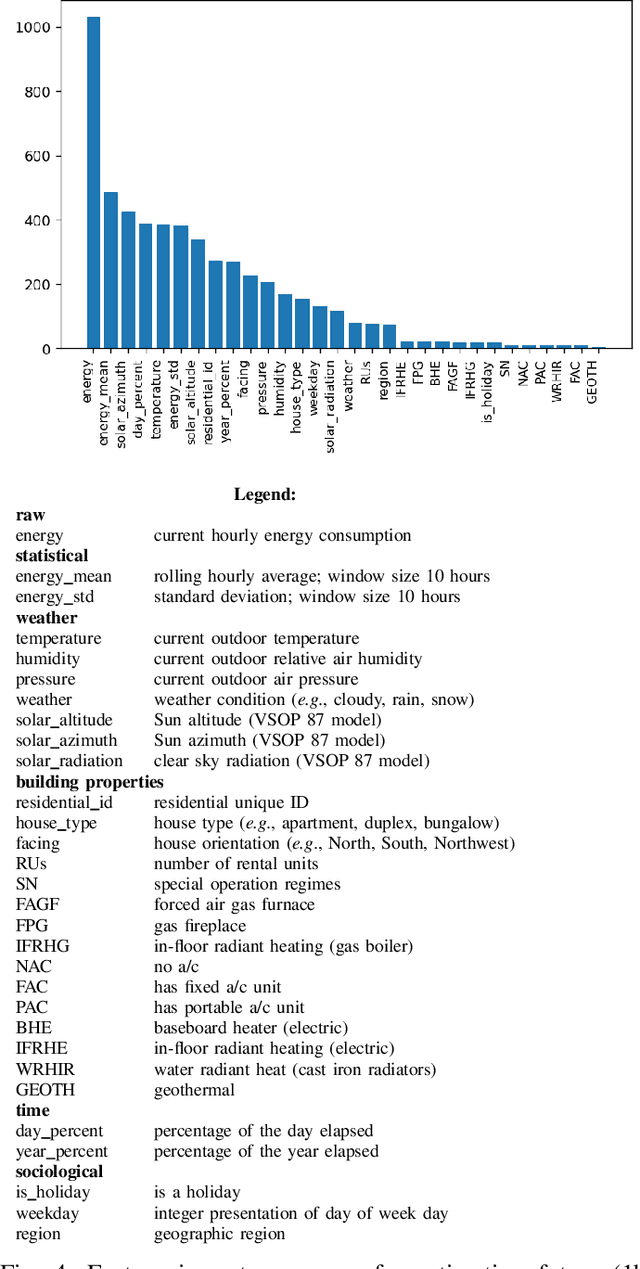

May 29, 2025

Abstract: The transition from traditional power grids to smart grids, a significant increase in the use of renewable energy sources, and soaring electricity prices have triggered a digital transformation of the energy infrastructure that enables new, data-driven applications, often supported by machine learning models. However, the majority of the developed machine learning models rely on univariate data. To date, a structured study considering the role of metadata and additional measurements resulting in multivariate data is missing. In this paper, we propose a taxonomy that identifies and structures various types of data related to energy applications. The taxonomy can be used to guide application-specific data model development for training machine learning models. Focusing on a household electricity forecasting application, we validate the effectiveness of the proposed taxonomy in guiding the selection of the features for various types of models. As such, we study the effect of domain, contextual and behavioral features on the forecasting accuracy of four interpretable machine learning techniques and three openly available datasets. Finally, using feature importance techniques, we explain individual feature contributions to the forecasting accuracy.

Dealing with zero-inflated data: achieving SOTA with a two-fold machine learning approach

Oct 12, 2023

Abstract: In many cases, a machine learning model must learn to correctly predict a few data points with particular values of interest in a broader range of data where many target values are zero. Zero-inflated data can be found in diverse scenarios, such as lumpy and intermittent demands, power consumption for home appliances being turned on and off, impurity measurements in distillation processes, and even airport shuttle demand prediction. The presence of zeroes affects the models' learning and may result in poor performance. Furthermore, zeroes also distort the metrics used to compute the model's prediction quality. This paper showcases two real-world use cases (home appliances classification and airport shuttle demand prediction) where a hierarchical model applied in the context of zero-inflated data leads to excellent results. In particular, for home appliances classification, the weighted average of Precision, Recall, F1, and AUC ROC was increased by 27%, 34%, 49%, and 27%, respectively. Furthermore, it is estimated that the proposed approach is also four times more energy efficient than the SOTA approach against which it was compared. Two-fold models performed best in all cases when predicting airport shuttle demand, and the difference against other models has been proven to be statistically significant.
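The two-fold (hierarchical) idea in the abstract above can be sketched as a first stage that decides whether the target is zero and a second stage that predicts the value only for the non-zero cases. The abstract does not say which models the authors use, so the decision stump, the least-squares line, the function names and the toy data below are all assumptions chosen purely for illustration.

```python
from statistics import mean

def fit_stump(xs, is_nonzero):
    """Stage 1 (assumed): threshold on x that best separates zero from non-zero targets."""
    best_t, best_err = None, None
    for t in sorted(set(xs)):
        # Count samples where the rule "x >= t means non-zero" disagrees with the label.
        err = sum((x >= t) != nz for x, nz in zip(xs, is_nonzero))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t

def fit_line(xs, ys):
    """Stage 2 (assumed): ordinary least squares y = a*x + b."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var
    return a, my - a * mx

def fit_two_fold(xs, ys):
    """Train the zero/non-zero stage on all samples and the regressor on non-zero samples only."""
    threshold = fit_stump(xs, [y != 0 for y in ys])
    nonzero = [(x, y) for x, y in zip(xs, ys) if y != 0]
    a, b = fit_line([x for x, _ in nonzero], [y for _, y in nonzero])
    return threshold, a, b

def predict_two_fold(model, x):
    """Return 0.0 when stage 1 says 'zero', otherwise the stage 2 regression value."""
    threshold, a, b = model
    return a * x + b if x >= threshold else 0.0

# Hypothetical zero-inflated toy data: the target is zero for most inputs.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [0, 0, 0, 0, 0, 0, 0, 14, 16, 18]
model = fit_two_fold(xs, ys)
print(model, predict_two_fold(model, 3), predict_two_fold(model, 8))
```

Training the second stage only on non-zero samples keeps the many zeroes from pulling the regression toward zero, which is one plausible reading of why a hierarchical model helps on such data.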

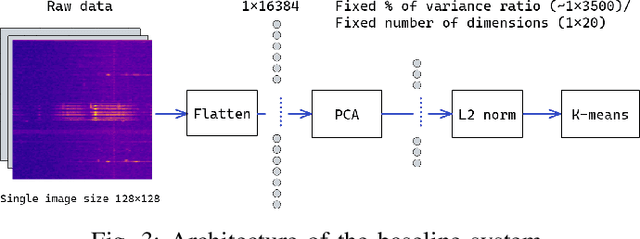

Deep Feature Learning for Wireless Spectrum Data

Aug 07, 2023

Abstract: In recent years, the traditional feature engineering process for training machine learning models is being automated by the feature extraction layers integrated in deep learning architectures. In wireless networks, many studies have been conducted on automatic learning of feature representations for domain-related challenges. However, most of the existing works assume some supervision along the learning process by using labels to optimize the model. In this paper, we investigate an approach to learning feature representations for wireless transmission clustering in a completely unsupervised manner, i.e. requiring no labels in the process. We propose a model based on convolutional neural networks that automatically learns a reduced dimensionality representation of the input data with 99.3% fewer components compared to a baseline principal component analysis (PCA). We show that the automatic representation learning is able to extract fine-grained clusters containing the shapes of the wireless transmission bursts, while the baseline enables only general separability of the data based on the background noise.

Towards Sustainable Deep Learning for Multi-Label Classification on NILM

Jul 18, 2023

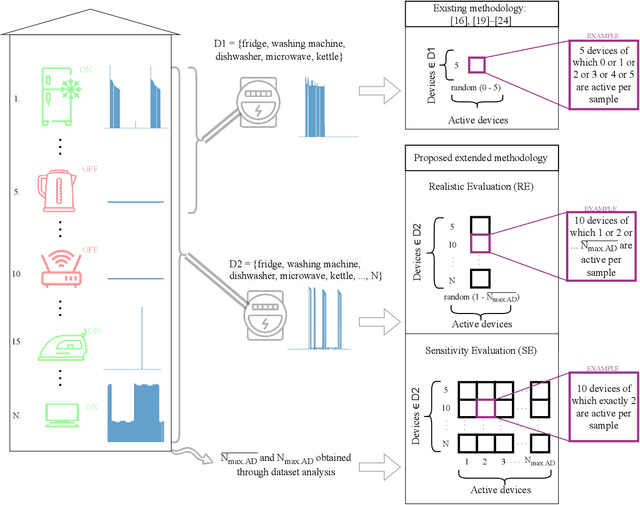

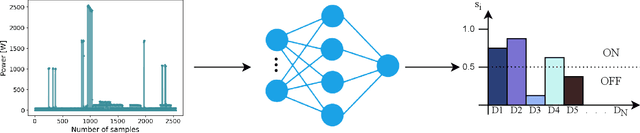

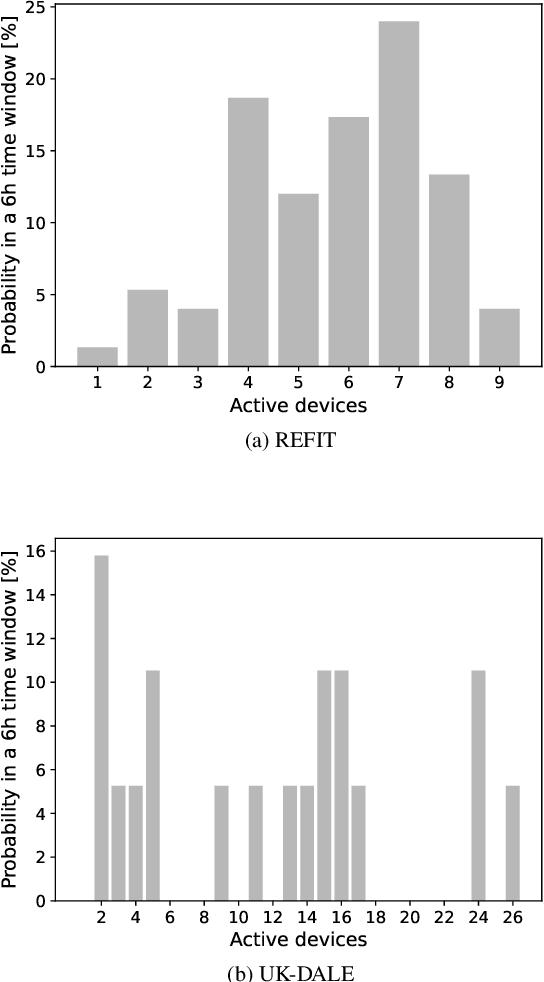

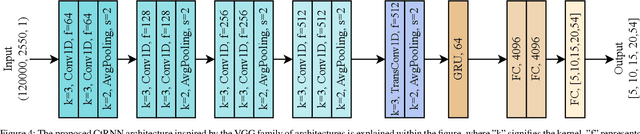

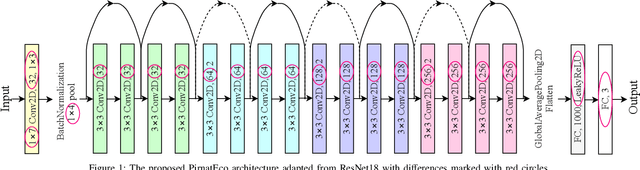

Abstract: Non-intrusive load monitoring (NILM) is the process of obtaining appliance-level data from a single metering point, measuring total electricity consumption of a household or a business. Appliance-level data can be directly used for demand response applications and energy management systems as well as for awareness raising and motivation for improvements in energy efficiency and reduction in the carbon footprint. Recently, classical machine learning and deep learning (DL) techniques have become very popular and proved highly effective for NILM classification, but with growing complexity these methods face significant computational and energy demands during both their training and operation. In this paper, we introduce a novel DL model aimed at enhanced multi-label classification of NILM with improved computation and energy efficiency. We also propose a testing methodology for comparison of different models using data synthesized from the measurement datasets so as to better represent real-world scenarios. Compared to the state-of-the-art, the proposed model has its carbon footprint reduced by more than 23% while providing on average approximately 8 percentage points in performance improvement when testing on data derived from REFIT and UK-DALE datasets.

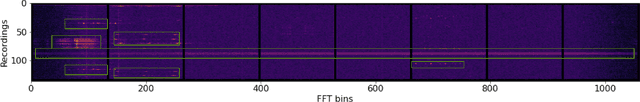

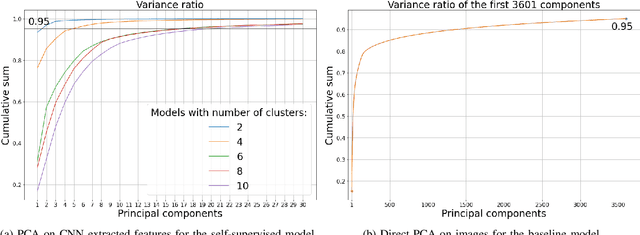

XAI for Self-supervised Clustering of Wireless Spectrum Activity

May 17, 2023

Abstract: The so-called black-box deep learning (DL) models are increasingly used in classification tasks across many scientific disciplines, including the wireless communications domain. In this trend, supervised DL models appear as the most commonly proposed solutions to domain-related classification problems. Although they are proven to have unmatched performance, the necessity for large labeled training data and their intractable reasoning, as two major drawbacks, are constraining their usage. The self-supervised architectures emerged as a promising solution that reduces the size of the needed labeled data, but the explainability problem remains. In this paper, we propose a methodology for explaining deep clustering, self-supervised learning architectures comprised of a representation learning part based on a Convolutional Neural Network (CNN) and a clustering part. For the state-of-the-art representation learning part, our methodology employs Guided Backpropagation to interpret the regions of interest of the input data. For the clustering part, the methodology relies on Shallow Trees to explain the clustering result using an optimized-depth decision tree. Finally, a data-specific visualization part enables connection of each of the clusters to the input data through the relevant features. We explain on a use case of wireless spectrum activity clustering how the CNN-based, deep clustering architecture reasons.

On-Premise Artificial Intelligence as a Service for Small and Medium Size Setups

Oct 12, 2022

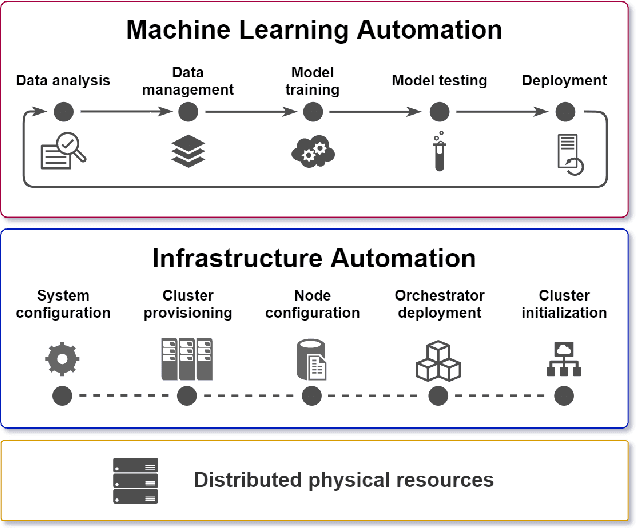

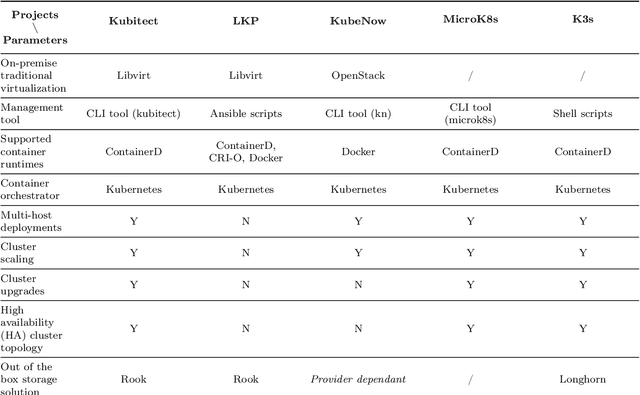

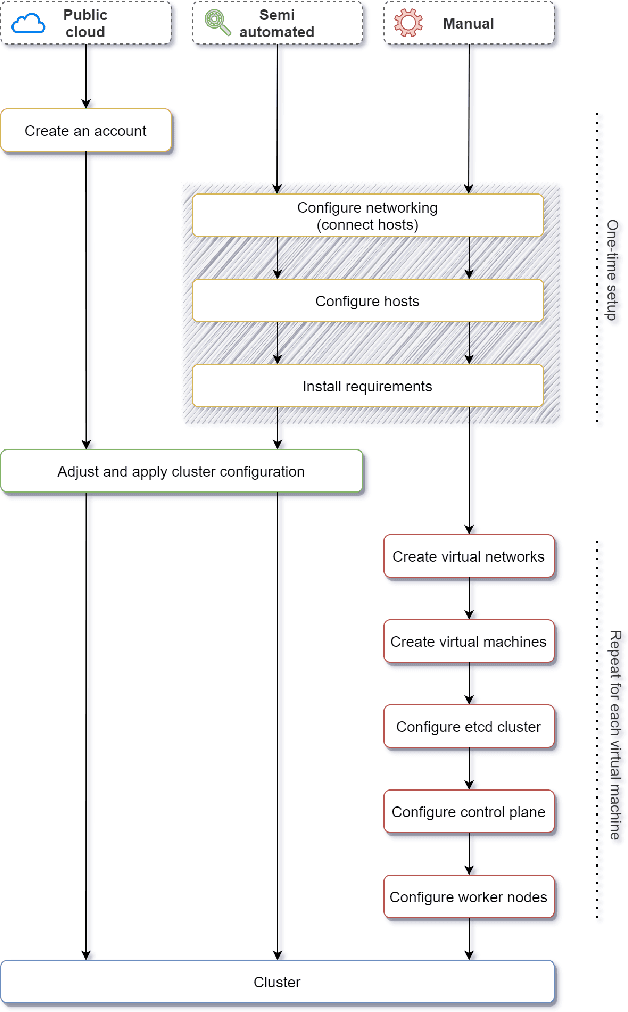

Abstract: Artificial Intelligence (AI) technologies are moving from customized deployments in specific domains towards generic solutions horizontally permeating vertical domains and industries. For instance, decisions on when to perform maintenance of roads or bridges or how to optimize public lighting in view of costs and safety in smart cities are increasingly informed by AI models. While various commercial solutions offer user-friendly and easy-to-use AI as a Service (AIaaS), functionality-wise enabling the democratization of such ecosystems, open-source equivalent ecosystems are lagging behind. In this chapter, we discuss AIaaS functionality and corresponding technology stack and analyze possible realizations using open-source, user-friendly technologies that are suitable for on-premise set-ups of small and medium sized users allowing full control over the data and technological platform without any third-party dependence or vendor lock-in.

Self-supervised Learning for Clustering of Wireless Spectrum Activity

Sep 22, 2022

Abstract: In recent years, much work has been done on processing of wireless spectral data involving machine learning techniques in domain-related problems for cognitive radio networks, such as anomaly detection, modulation classification, technology classification and device fingerprinting. Most of the solutions are based on labeled data, created in a controlled manner and processed with supervised learning approaches. Labeling spectral data is a laborious and expensive process, which is one of the main drawbacks of using supervised approaches. In this paper, we introduce self-supervised learning for exploring spectral activities using real-world, unlabeled data. We show that the proposed model achieves superior performance regarding the quality of extracted features and clustering performance. We reduce the feature vector size by 2 orders of magnitude (from 3601 to 20), while improving performance by 2 to 2.5 times across the evaluation metrics, supported by visual assessment. Using 15 days of continuous narrowband spectrum sensing data, we found that 17% of the spectrogram slices contain no or very weak transmissions, 36% contain mostly IEEE 802.15.4, 26% contain coexisting IEEE 802.15.4 with LoRA and proprietary activity, 12% contain LoRA with variable background noise and 9% contain only dotted activity, representing LoRA and proprietary transmissions.

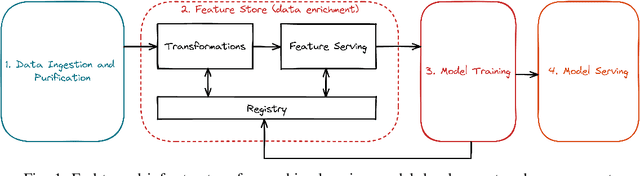

On Designing Data Models for Energy Feature Stores

May 09, 2022

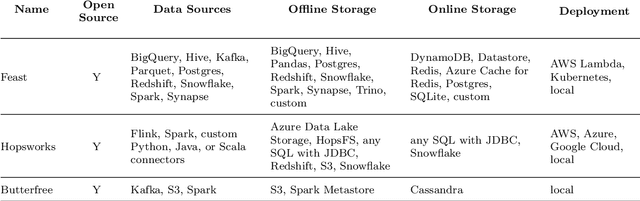

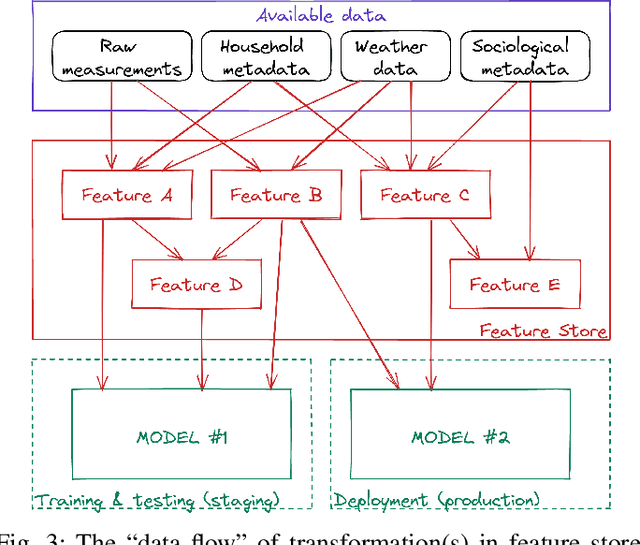

Abstract: The digitization of the energy infrastructure enables new, data-driven applications, often supported by machine learning models. However, domain-specific data transformations, pre-processing and management in modern data-driven pipelines are yet to be addressed. In this paper, we perform a first-time study on data models, energy feature engineering and feature management solutions for developing ML-based energy applications. We first propose a taxonomy for designing data models suitable for energy applications, then analyze feature engineering techniques able to transform the data model into features suitable for ML model training, and finally analyze available designs for feature stores. Using a short-term forecasting dataset, we show the benefits of designing richer data models and engineering the features on the performance of the resulting models. Finally, we benchmark three complementary feature management solutions, including an open-source feature store.

Towards Sustainable Deep Learning for Wireless Fingerprinting Localization

Jan 22, 2022

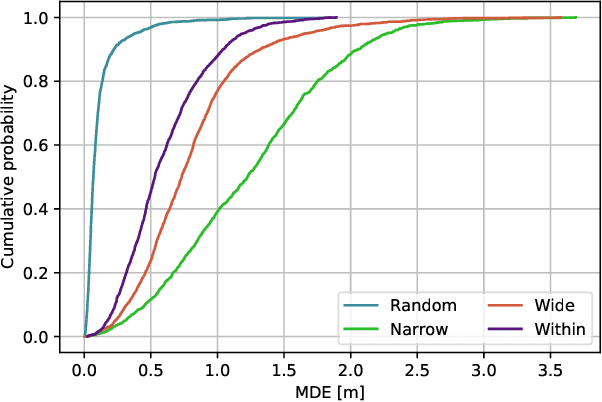

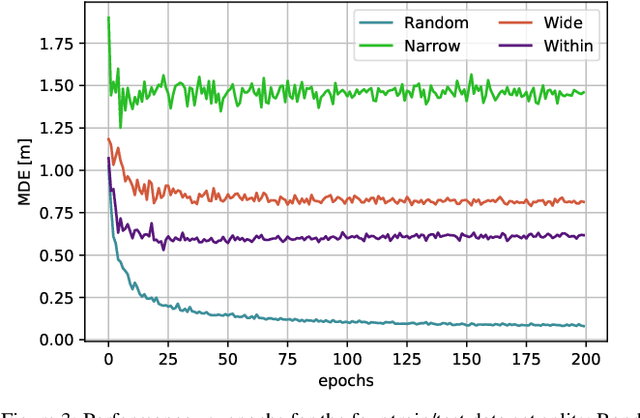

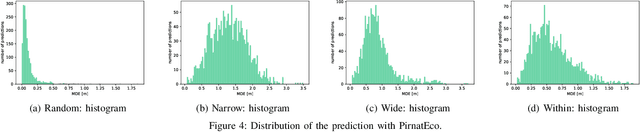

Abstract: Location-based services, already popular with end users, are now inevitably becoming part of new wireless infrastructures and emerging business processes. The increasingly popular Deep Learning (DL) artificial intelligence methods perform very well in wireless fingerprinting localization based on extensive indoor radio measurement data. However, with increasing complexity these methods become computationally very intensive and energy hungry, both for their training and subsequent operation. Considering only mobile users, estimated to exceed 7.4 billion by the end of 2025, and assuming that the networks serving these users will need to perform only one localization per user per hour on average, the machine learning models used for the calculation would need to perform 65 × 10^12 predictions per year. Add to this equation tens of billions of other connected devices and applications that rely heavily on more frequent location updates, and it becomes apparent that localization will contribute significantly to carbon emissions unless more energy-efficient models are developed and used. This motivated our work on a new DL-based architecture for indoor localization that is more energy efficient compared to related state-of-the-art approaches while showing only marginal performance degradation. A detailed performance evaluation shows that the proposed model produces only 58% of the carbon footprint while maintaining 98.7% of the overall performance compared to a state-of-the-art model external to our group. Additionally, we elaborate on a methodology to calculate the complexity of the DL model and thus the CO2 footprint during its training and operation.
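The yearly prediction count quoted in the abstract above can be checked with a few lines of arithmetic. The user count and the one-localization-per-hour rate come from the abstract; the 365-day year and the names used here are assumptions of this sketch.

```python
# Back-of-the-envelope check of the prediction count quoted in the abstract.
MOBILE_USERS = 7.4e9          # mobile users, as stated in the abstract
LOCALIZATIONS_PER_HOUR = 1    # one localization per user per hour (abstract's assumption)
HOURS_PER_YEAR = 24 * 365     # non-leap year; an assumption of this sketch

def predictions_per_year(users, per_hour, hours=HOURS_PER_YEAR):
    """Total number of model predictions needed in one year."""
    return users * per_hour * hours

total = predictions_per_year(MOBILE_USERS, LOCALIZATIONS_PER_HOUR)
print(f"{total:.3e} predictions per year")
```

The result is about 6.48 × 10^13, which rounds to the 65 × 10^12 figure given in the abstract.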

Learning to Fairly Classify the Quality of Wireless Links

Feb 24, 2021

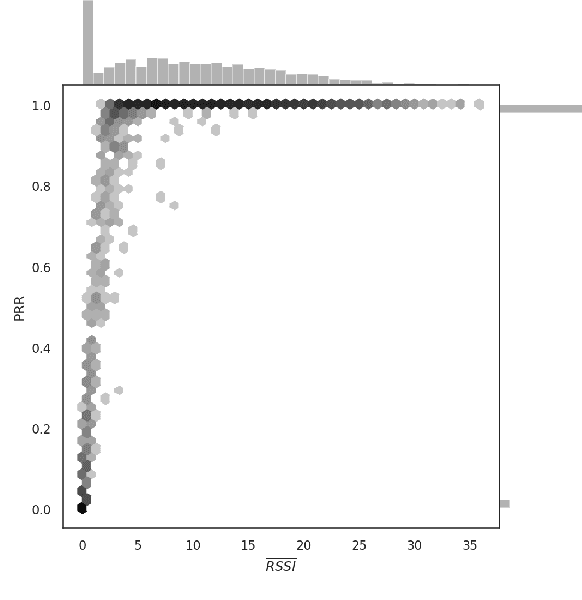

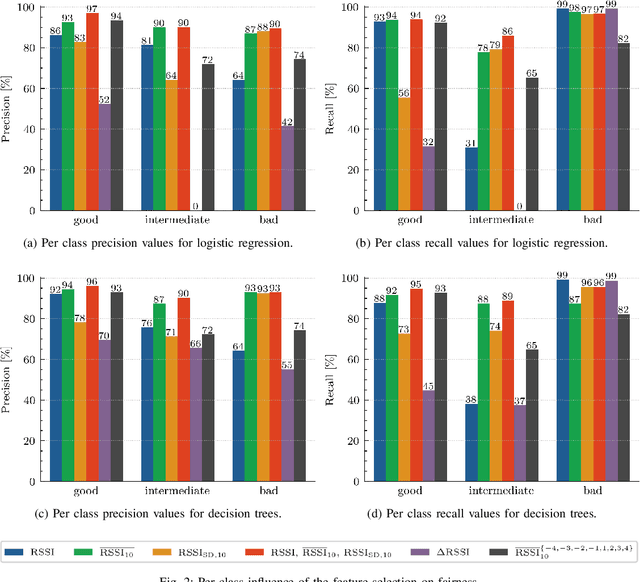

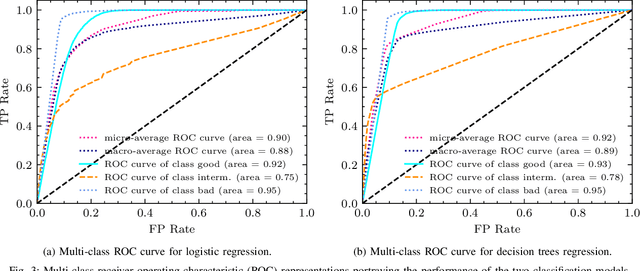

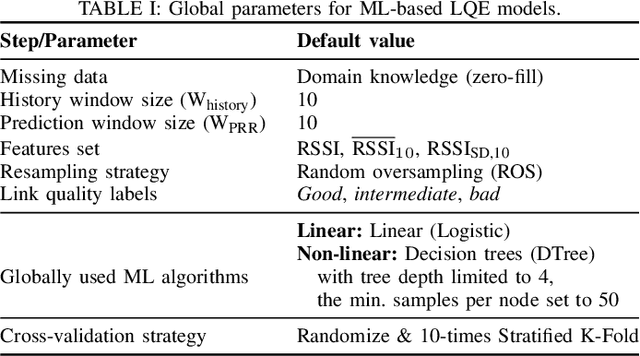

Abstract: Machine learning (ML) has been used to develop increasingly accurate link quality estimators for wireless networks. However, more in-depth questions regarding the most suitable class of models, most suitable metrics and model performance on imbalanced datasets remain open. In this paper, we propose a new tree-based link quality classifier that achieves high performance, fairly classifies the minority class and, at the same time, incurs low training cost. We compare the tree-based model to a multilayer perceptron (MLP) non-linear model and two linear models, namely logistic regression (LR) and SVM, on a selected imbalanced dataset and evaluate their results using five different performance metrics. Our study shows that 1) non-linear models perform slightly better than linear models in general, 2) the proposed non-linear tree-based model yields the best performance trade-off considering F1, training time and fairness, 3) single-metric aggregated evaluations based only on accuracy can hide poor, unfair performance, especially on minority classes, and 4) it is possible to improve the performance on minority classes by over 40% through feature selection and by over 20% through resampling, therefore leading to fairer classification results.
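Finding 3 in the abstract above, that accuracy alone can hide poor minority-class performance, is easy to reproduce on a toy example. The class labels, the 95/5 split and the always-"good" classifier below are hypothetical and are not taken from the paper's dataset.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true, y_pred):
    """Recall for each class: correctly predicted samples divided by class support."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / n for label, n in support.items()}

# Hypothetical imbalanced link-quality labels: 95 "good" links, 5 "bad" links.
y_true = ["good"] * 95 + ["bad"] * 5
# A degenerate classifier that always predicts the majority class.
y_pred = ["good"] * 100

acc = accuracy(y_true, y_pred)
recall = per_class_recall(y_true, y_pred)
print(acc, recall)
```

Here the aggregate accuracy is 0.95 even though no "bad" link is ever detected, which is why per-class metrics are needed to judge fairness on imbalanced data.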
