Goutam Das

AI-assisted Improved Service Provisioning for Low-latency XR over 5G NR

Jul 18, 2023

Abstract: Extended Reality (XR) is one of the most important 5G/6G media applications and will fundamentally transform human interactions. However, ensuring the low latency, high data rate, and reliability needed to support XR services poses significant challenges. This letter presents a novel AI-assisted service provisioning scheme that processes predicted frames rather than relying solely on actual frames. This method virtually increases the network delay budget and consequently improves service provisioning, at the expense of minor prediction errors. The proposed scheme is validated through extensive simulations, which demonstrate a multi-fold increase in the number of supported XR users and provide crucial network design insights.
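
As a back-of-the-envelope illustration (our own toy model, not the letter's), assume the network must deliver each frame within a delay budget, frames arrive at a fixed frame rate, and the server processes a frame predicted a given number of frame periods ahead. The network then gains that many frame periods of slack. The budget, frame rate, horizon, per-frame delays, and the function names `effective_budget_ms` and `on_time_fraction` are all hypothetical.

```python
from typing import Sequence


def effective_budget_ms(budget_ms: float, fps: float, horizon_frames: int) -> float:
    """Delay budget the network sees when a frame predicted `horizon_frames`
    frame periods ahead is processed instead of the actual frame (toy model)."""
    frame_period_ms = 1000.0 / fps
    return budget_ms + horizon_frames * frame_period_ms


def on_time_fraction(delays_ms: Sequence[float], budget_ms: float) -> float:
    """Fraction of frames whose network delay fits within the budget."""
    return sum(d <= budget_ms for d in delays_ms) / len(delays_ms)


if __name__ == "__main__":
    delays = [8.0, 12.0, 15.0, 22.0, 30.0]  # hypothetical per-frame delays (ms)
    budget = 10.0                            # hypothetical delay budget (ms)
    extended = effective_budget_ms(budget, fps=60, horizon_frames=1)
    print(f"budget {budget:.1f} ms -> {extended:.1f} ms")  # budget 10.0 ms -> 26.7 ms
    print(on_time_fraction(delays, budget), on_time_fraction(delays, extended))  # 0.2 0.8
```

The sketch captures only the "virtual" budget increase. The cost side, prediction error, is not modeled here.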

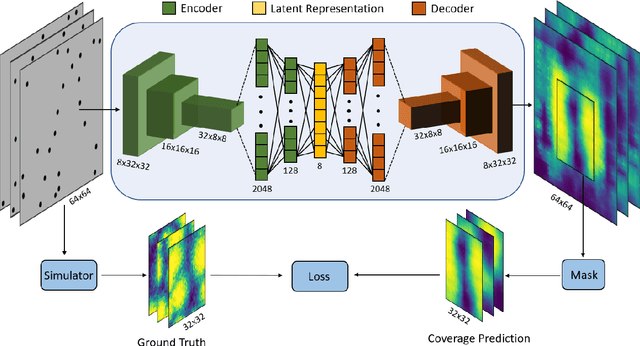

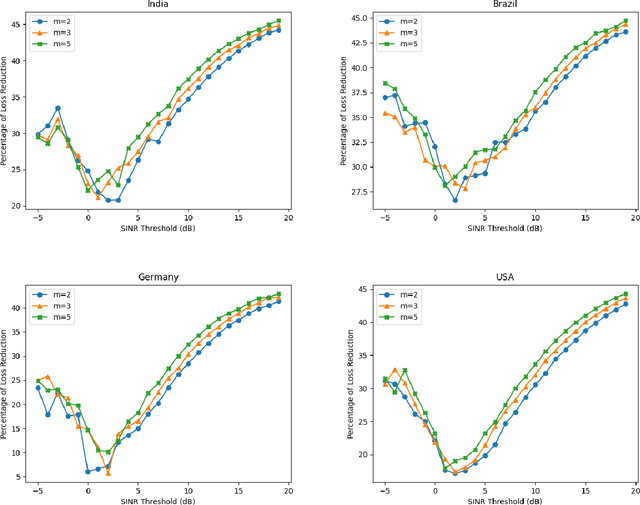

Deep Learning based Coverage and Rate Manifold Estimation in Cellular Networks

Feb 13, 2022

Abstract: This article proposes a Convolutional Neural Network-based Auto Encoder (CNN-AE) to predict the location-dependent rate and coverage probability of a network from its topology. We train the CNN-AE using base station (BS) location data from India, Brazil, Germany, and the USA, and compare its performance with stochastic geometry (SG) based analytical models. Compared with the best-fitted SG-based model, CNN-AE reduces the coverage and rate prediction errors by margins as large as $40\%$ and $25\%$, respectively. As an application, we propose a low-complexity, provably convergent algorithm that uses the trained CNN-AE to compute the locations of new BSs that must be deployed in a network to satisfy predefined, spatially heterogeneous performance goals.
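
SG baselines of the kind mentioned above usually yield closed-form coverage expressions. One well-known example, not necessarily the model fitted in the article, assumes BSs form a homogeneous Poisson point process with nearest-BS association, Rayleigh fading, path-loss exponent 4, and no noise; the SIR coverage probability is then $p_c(\theta) = 1/\left(1 + \sqrt{\theta}\,(\pi/2 - \arctan(1/\sqrt{\theta}))\right)$. This value does not depend on BS density or on where the user is, which is the spatially uniform behavior a location-dependent estimator such as CNN-AE can refine. The function name `ppp_sir_coverage` is illustrative.

```python
import math


def ppp_sir_coverage(theta_db: float) -> float:
    """P(SIR > theta) at a typical user: Poisson BSs, nearest-BS association,
    Rayleigh fading, path-loss exponent 4, interference-limited (no noise)."""
    theta = 10 ** (theta_db / 10)  # convert dB threshold to linear scale
    s = math.sqrt(theta)
    return 1.0 / (1.0 + s * (math.pi / 2 - math.atan(1.0 / s)))


if __name__ == "__main__":
    for t_db in (-5, 0, 5, 10):
        print(f"theta = {t_db:>3} dB -> coverage {ppp_sir_coverage(t_db):.3f}")
```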

Adaptive Rate NOMA for Cellular IoT Networks

Aug 18, 2021

Abstract: Internet-of-Things (IoT) technology is envisioned to enable a variety of real-time applications by interconnecting billions of sensors/devices deployed to observe random physical processes. These IoT devices rely on low-power wide-area wireless connectivity to transmit status updates about their associated random processes, and these updates are mostly small and of fixed size. Cellular networks are seen as a natural candidate for providing reliable wireless connectivity to IoT devices. However, serving such a massive number of devices with conventional orthogonal multiple access (OMA) is expected to degrade spectral efficiency. As a promising alternative to OMA, cellular base stations (BSs) can employ non-orthogonal multiple access (NOMA) for the uplink transmissions of mobile users and IoT devices. In particular, uplink NOMA can be configured so that the mobile user adapts its transmission rate to its channel condition while the IoT device transmits at a fixed rate. For this setting, we analyze the ergodic capacity of mobile users and the mean local delay of IoT devices using stochastic geometry. Our analysis demonstrates that this NOMA configuration can provide better ergodic capacity for mobile users than OMA when the IoT devices' delay constraint is strict. Furthermore, we show that NOMA can support a larger packet size for IoT devices than OMA under the same delay constraint.
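
The configuration above can be explored with a single-cell Monte Carlo sketch. This is a deliberate simplification of the stochastic-geometry analysis: there is no inter-cell interference, fading is Rayleigh and independent across slots, and the BS decodes the fixed-rate IoT packet first while treating the mobile user as interference, then applies successive interference cancellation (SIC) before decoding the mobile user. The decoding order, transmit powers, noise level, and function names are assumptions. Under independent per-slot retries, the mean local delay is 1/p_s slots. In a full stochastic-geometry model, static interferers correlate successes across slots, so that value can differ. For this toy model the IoT success probability has the closed form $p_s = e^{-\theta N/P_{\mathrm{iot}}} / (1 + \theta P_{\mathrm{mob}}/P_{\mathrm{iot}})$ with $\theta = 2^{R} - 1$, which gives a check on the simulation.

```python
import math
import random


def simulate_uplink_noma(p_iot, p_mob, noise, rate_iot, n=100_000, seed=1):
    """Toy single-cell uplink NOMA: returns (IoT success prob., mean local
    delay in slots assuming independent retries, mobile ergodic rate)."""
    rng = random.Random(seed)
    theta = 2 ** rate_iot - 1              # SINR threshold for the fixed IoT rate
    successes = 0
    rate_sum = 0.0
    for _ in range(n):
        h_iot = rng.expovariate(1.0)       # |h|^2 ~ Exp(1) under Rayleigh fading
        h_mob = rng.expovariate(1.0)
        sinr_iot = p_iot * h_iot / (p_mob * h_mob + noise)
        if sinr_iot >= theta:
            successes += 1
            # IoT packet decoded and cancelled: mobile user sees noise only
            rate_sum += math.log2(1 + p_mob * h_mob / noise)
        else:
            # No cancellation: the IoT signal remains as interference
            rate_sum += math.log2(1 + p_mob * h_mob / (p_iot * h_iot + noise))
    p_s = successes / n
    mean_delay_slots = math.inf if p_s == 0 else 1.0 / p_s
    return p_s, mean_delay_slots, rate_sum / n


def analytic_success(p_iot, p_mob, noise, rate_iot):
    """Closed-form IoT success probability for the same toy model."""
    theta = 2 ** rate_iot - 1
    return math.exp(-theta * noise / p_iot) / (1 + theta * p_mob / p_iot)


if __name__ == "__main__":
    p_s, delay, cap = simulate_uplink_noma(p_iot=10.0, p_mob=1.0, noise=1.0, rate_iot=1.0)
    print(f"p_s={p_s:.3f} (analytic {analytic_success(10.0, 1.0, 1.0, 1.0):.3f}), "
          f"delay={delay:.2f} slots, mobile rate={cap:.2f} bit/s/Hz")
```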

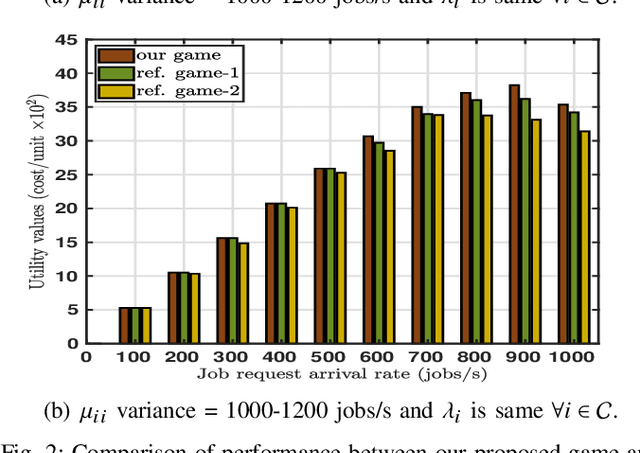

Centralized and Decentralized Non-Cooperative Load-Balancing Games among Competing Cloudlets

May 31, 2020

Abstract: Edge computing servers such as cloudlets, deployed by different service providers to compensate for the scarce computational, memory, and energy resources of mobile devices, are distributed across access networks. However, depending on the mobility patterns and dynamically varying computational requirements of the associated mobile devices, cloudlets in different parts of the network become either overloaded or under-loaded. Hence, load balancing among neighboring cloudlets is an essential research problem. Nonetheless, existing load-balancing frameworks are unsuitable for low-latency applications. Thus, in this paper, we propose an economic, non-cooperative load-balancing game for low-latency applications among neighboring cloudlets from the same as well as different service providers. First, we propose a centralized incentive mechanism that computes the unique Nash equilibrium load-balancing strategies of the cloudlets under the supervision of a neutral mediator. This mechanism ensures that truthful revelation of private information to the mediator is a weakly dominant strategy for both under-loaded and overloaded cloudlets. Second, we propose a continuous-action reinforcement learning automata-based algorithm that allows each cloudlet to independently compute the Nash equilibrium in a completely distributed network setting. We critically study the convergence properties of the designed learning algorithm, leveraging our understanding of the underlying load-balancing game to achieve faster convergence. Furthermore, through extensive simulations, we study the impact of exploration and exploitation on learning accuracy. This is the first study to show the effectiveness of reinforcement learning algorithms for load-balancing games among neighboring cloudlets.
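
The abstract does not detail the continuous-action learning automata. The sketch below therefore implements a simpler and clearly different stand-in: the classic discrete linear reward-inaction (L_R-I) automaton. A cloudlet keeps a probability vector over a few candidate offload fractions, samples one, and shifts probability toward it only when the environment returns a reward. The action set, learning rate, and reward function passed as `reward_prob` are hypothetical placeholders for a cloudlet's real feedback.

```python
import random


def lri_automaton(actions, reward_prob, lr=0.02, steps=20_000, seed=0):
    """Discrete linear reward-inaction (L_R-I) learning automaton.

    actions     -- candidate offload fractions (hypothetical action set)
    reward_prob -- hypothetical environment: probability that choosing an
                   action yields a reward (e.g. the cloudlet's delay dropped)
    """
    rng = random.Random(seed)
    k = len(actions)
    p = [1.0 / k] * k                      # start with uniform exploration
    for _ in range(steps):
        i = rng.choices(range(k), weights=p)[0]
        if rng.random() < reward_prob(actions[i]):
            # Reward: p_i += lr * (1 - p_i); every other p_j shrinks by (1 - lr)
            p = [pj + lr * (1 - pj) if j == i else pj * (1 - lr)
                 for j, pj in enumerate(p)]
        # Penalty ("inaction"): the probability vector is left unchanged.
    return p


if __name__ == "__main__":
    fractions = [0.0, 0.25, 0.5, 0.75, 1.0]
    probs = lri_automaton(fractions, lambda a: 1.0 - abs(a - 0.5))
    best = max(range(len(fractions)), key=probs.__getitem__)
    print("most likely offload fraction:", fractions[best])
```

The learning rate `lr` controls the exploration/exploitation trade-off. A smaller value keeps exploring longer and is more likely to settle on the best action, while a larger value converges faster but can lock onto a suboptimal one.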
