Centralized and Decentralized Non-Cooperative Load-Balancing Games among Competing Cloudlets

Paper and Code

May 31, 2020

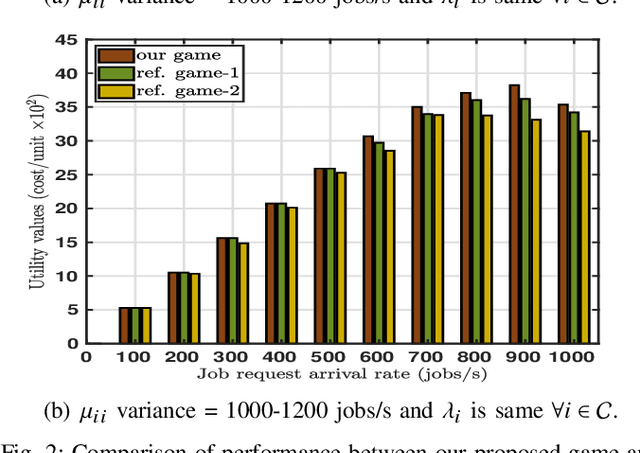

Edge computing servers such as cloudlets, operated by different service providers, compensate for the scarce computational, memory, and energy resources of mobile devices and are distributed across access networks. However, depending on the mobility patterns and dynamically varying computational requirements of the associated mobile devices, cloudlets in different parts of the network become either overloaded or under-loaded. Hence, load balancing among neighboring cloudlets is an essential research problem. Nonetheless, the existing load balancing frameworks are unsuitable for low-latency applications. Thus, in this paper, we propose an economic and non-cooperative load balancing game for low-latency applications among neighboring cloudlets from the same as well as different service providers. First, we propose a centralized incentive mechanism to compute the unique Nash equilibrium load balancing strategies of the cloudlets under the supervision of a neutral mediator. With this mechanism, we ensure that truthful revelation of private information to the mediator is a weakly dominant strategy for both the under-loaded and overloaded cloudlets. Second, we propose a continuous-action reinforcement learning automata-based algorithm, which allows each cloudlet to independently compute the Nash equilibrium in a completely distributed network setting. We critically study the convergence properties of the designed learning algorithm, building on our understanding of the underlying load balancing game to achieve faster convergence. Furthermore, through extensive simulations, we study the impacts of exploration and exploitation on learning accuracy. This is the first study to show the effectiveness of reinforcement learning algorithms for load balancing games among neighboring cloudlets.
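To make the idea of a continuous-action reinforcement learning automaton more concrete, here is a minimal single-learner sketch in Python. It is not the algorithm from the paper, whose details are not given in this abstract. It follows the generic textbook scheme: keep a probability density over a continuous action interval, sample an action, observe a reward in [0, 1], and reinforce the density around the sampled action with a Gaussian bump. The names `carla_learn` and `toy_reward`, the action meaning (fraction of load offloaded to a neighbor), the reward shape, and all parameter values are assumptions chosen for illustration only.

```python
import math
import random


def toy_reward(action):
    """Hypothetical payoff in [0, 1], peaked at an offload fraction of 0.3.

    This stands in for a cloudlet's utility; it is NOT the paper's utility.
    """
    return math.exp(-((action - 0.3) / 0.1) ** 2)


def carla_learn(reward_fn, lo=0.0, hi=1.0, bins=200, steps=2000,
                height=0.3, width=0.05, seed=0):
    """Sketch of a continuous-action learning automaton on a discretized grid.

    Returns the grid points and the learned density over [lo, hi].
    """
    rng = random.Random(seed)
    dx = (hi - lo) / bins
    xs = [lo + (i + 0.5) * dx for i in range(bins)]  # bin midpoints
    density = [1.0 / (hi - lo)] * bins               # start from uniform

    for _ in range(steps):
        # Exploration: draw an action according to the current density.
        action = rng.choices(xs, weights=density, k=1)[0]
        beta = reward_fn(action)  # reinforcement signal in [0, 1]

        # Exploitation: add a Gaussian bump around the sampled action,
        # scaled by the reward, so well-rewarded actions become likelier.
        density = [
            d + beta * height * math.exp(-0.5 * ((x - action) / width) ** 2)
            for d, x in zip(density, xs)
        ]

        # Renormalize so the density still integrates to one.
        total = sum(density) * dx
        density = [d / total for d in density]

    return xs, density


if __name__ == "__main__":
    xs, density = carla_learn(toy_reward)
    dx = xs[1] - xs[0]
    mean_action = sum(x * d for x, d in zip(xs, density)) * dx
    print(f"mean of learned density: {mean_action:.3f}")
```

In this sketch, `height` and `width` control how strongly and how broadly a rewarded action is reinforced, which is one simple way to picture the exploration and exploitation trade-off mentioned above. In a multi-cloudlet game, each player would run its own automaton and its reward would depend on the other players' actions. That coupling is omitted here.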
