Giovanni Conforti

CMAP

Discrete Markov Probabilistic Models

Feb 11, 2025

Abstract: This paper introduces the Discrete Markov Probabilistic Model (DMPM), a novel algorithm for discrete data generation. The algorithm operates in the space of bits $\{0,1\}^d$, where the noising process is a continuous-time Markov chain that can be sampled exactly via a Poissonian clock that flips labels uniformly at random. The time-reversal process, like the forward noise process, is a jump process, with its intensity governed by a discrete analogue of the classical score function. Crucially, this intensity is proven to be the conditional expectation of a function of the forward process, strengthening its theoretical alignment with score-based generative models while ensuring robustness and efficiency. We further establish convergence bounds for the algorithm under minimal assumptions and demonstrate its effectiveness through experiments on low-dimensional Bernoulli-distributed datasets and high-dimensional binary MNIST data. The results highlight its strong performance in generating discrete structures. This work bridges theoretical foundations and practical applications, advancing the development of effective and theoretically grounded discrete generative modeling.
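A minimal Python sketch of the forward noising process, under an assumption the abstract does not fix: each of the $d$ bits flips independently at a hypothetical rate `lam`, which is the same as a single Poisson clock of rate $d \cdot$ `lam` whose every ring flips one uniformly chosen coordinate. Under that assumption a given bit has flipped by time $t$ with probability $(1 - e^{-2\lambda t})/2$. For small $d$ the sketch also computes exact time-reversal intensities through the standard Markov-chain reversal formula: at forward time $t$, flipping bit $i$ of $x$ happens at rate $\lambda\, p_t(x^{(i)})/p_t(x)$, where $x^{(i)}$ is $x$ with bit $i$ flipped, a ratio that plays the role of a discrete score. All helper names are hypothetical, and this brute-force enumeration is not the paper's learned, conditional-expectation-based estimator.

```python
import math
import random
from itertools import product


def forward_noise(x0, t, lam=1.0, rng=random):
    """Exact simulation of the forward chain on {0,1}^d up to time t.

    Assumption: every bit flips at rate `lam`, i.e. a Poisson clock of rate
    d * lam rings and each ring flips one coordinate chosen uniformly.
    """
    x = list(x0)
    d = len(x)
    clock = rng.expovariate(d * lam)       # time of the first ring
    while clock < t:
        i = rng.randrange(d)               # uniformly chosen coordinate
        x[i] ^= 1                          # flip that bit
        clock += rng.expovariate(d * lam)  # waiting time until the next ring
    return x


def flip_prob(t, lam=1.0):
    """Probability that a given bit has flipped an odd number of times by time t."""
    return 0.5 * (1.0 - math.exp(-2.0 * lam * t))


def marginal(p0, t, lam=1.0):
    """Exact law p_t on {0,1}^d; p0 maps bit tuples to probabilities (small d only)."""
    d = len(next(iter(p0)))
    q = flip_prob(t, lam)
    pt = {}
    for y in product((0, 1), repeat=d):
        total = 0.0
        for x, px in p0.items():
            k = sum(a != b for a, b in zip(x, y))  # Hamming distance between x and y
            total += px * q ** k * (1.0 - q) ** (d - k)
        pt[y] = total
    return pt


def reverse_rates(pt, y, lam=1.0):
    """Jump intensities of the time-reversed chain at state y (a tuple of bits)."""
    rates = []
    for i in range(len(y)):
        z = list(y)
        z[i] ^= 1                          # neighbour of y differing in bit i
        rates.append(lam * pt[tuple(z)] / pt[y])
    return rates


p0 = {(1, 1, 0): 0.7, (0, 1, 1): 0.3}      # toy data distribution
pt = marginal(p0, t=0.5)
rates = reverse_rates(pt, (1, 1, 1))
```

Because `marginal` loops over all $2^d$ states for every support point of `p0`, it is only meant for checking intuition on toy examples; as $t$ grows, $p_t$ approaches the uniform law on $\{0,1\}^d$.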

A semiconcavity approach to stability of entropic plans and exponential convergence of Sinkhorn's algorithm

Dec 12, 2024

Abstract: We study the stability of optimizers and the convergence of Sinkhorn's algorithm in the framework of entropic optimal transport. We show entropic stability of optimal plans in terms of the Wasserstein distance between their marginals, under a semiconcavity assumption on the sum of the cost and one of the two entropic potentials. Applied to the analysis of Sinkhorn's algorithm, this result gives a natural sufficient condition for its exponential convergence that does not require the ground cost to be bounded. By bounding from above the Hessians of Sinkhorn potentials in examples of interest, we obtain new exponential convergence results. For instance, we obtain for the first time exponential convergence for log-concave marginals and quadratic costs for all values of the regularization parameter. Moreover, the convergence rate depends linearly on the regularization parameter: this behavior is sharp and had previously been obtained only for compact distributions arXiv:2407.01202. Other new applications include subspace elastic costs [Cuturi et al. PMLR 202(2023)], weakly log-concave marginals, marginals with light tails (where, under reinforced assumptions, we improve the rates obtained in arXiv:2311.04041), unbounded Lipschitz costs, and compact Riemannian manifolds.
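To make the object of study concrete, here is a hedged log-domain sketch of Sinkhorn's algorithm for discretized marginals. The names (`sinkhorn`, `discretized_gaussian`) and the setup are illustrative assumptions: two Gaussian (hence log-concave) marginals restricted to a 15-point grid, the quadratic cost $|x-y|^2/2$, and regularization parameter $\varepsilon = 1$. It records the $L^1$ violation of the first marginal after each iteration, which is where exponential convergence would show up; it does not reproduce the paper's semiconcavity analysis.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))


def sinkhorn(mu, nu, cost, eps, n_iter=150):
    """Log-domain Sinkhorn for discrete marginals mu, nu and a cost matrix.

    The plan is pi[i][j] = mu[i] * nu[j] * exp((f[i] + g[j] - cost[i][j]) / eps).
    errors[k] is the L1 violation of the first marginal after iteration k;
    the second marginal is matched exactly by each g-update.
    """
    n, m = len(mu), len(nu)
    f, g = [0.0] * n, [0.0] * m
    errors = []
    for _ in range(n_iter):
        # f-update: the first marginal of the plan becomes exactly mu
        f = [-eps * logsumexp([math.log(nu[j]) + (g[j] - cost[i][j]) / eps for j in range(m)])
             for i in range(n)]
        # g-update: the second marginal becomes exactly nu, perturbing the first
        g = [-eps * logsumexp([math.log(mu[i]) + (f[i] - cost[i][j]) / eps for i in range(n)])
             for j in range(m)]
        err = 0.0
        for i in range(n):
            row = sum(mu[i] * nu[j] * math.exp((f[i] + g[j] - cost[i][j]) / eps) for j in range(m))
            err += abs(row - mu[i])
        errors.append(err)
    return f, g, errors


def discretized_gaussian(grid, mean, std):
    """Normalized Gaussian weights on a grid (a log-concave marginal)."""
    weights = [math.exp(-0.5 * ((x - mean) / std) ** 2) for x in grid]
    total = sum(weights)
    return [w / total for w in weights]


grid = [-3.0 + 6.0 * k / 14 for k in range(15)]
mu = discretized_gaussian(grid, -1.0, 1.0)
nu = discretized_gaussian(grid, 1.0, 0.7)
cost = [[0.5 * (x - y) ** 2 for y in grid] for x in grid]  # quadratic cost
f, g, errors = sinkhorn(mu, nu, cost, eps=1.0)
```

On a log scale, `errors` should decay roughly linearly until it reaches floating-point precision; smaller values of `eps` typically slow this decay.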

Theoretical guarantees in KL for Diffusion Flow Matching

Sep 12, 2024

Abstract: Flow Matching (FM) (also referred to as stochastic interpolants or rectified flows) stands out as a class of generative models that aims to bridge, in finite time, the target distribution $\nu^\star$ and an auxiliary distribution $\mu$, leveraging a fixed coupling $\pi$ and a bridge which can be either deterministic or stochastic. These two ingredients define a path measure which can then be approximated by learning the drift of its Markovian projection. The main contribution of this paper is to provide relatively mild assumptions on $\nu^\star$, $\mu$ and $\pi$ under which we obtain non-asymptotic guarantees for Diffusion Flow Matching (DFM) models that use as bridge the conditional distribution associated with Brownian motion. More precisely, we establish bounds on the Kullback-Leibler divergence between the target distribution and the one generated by such DFM models under moment conditions on the scores of $\nu^\star$, $\mu$ and $\pi$, and a standard $L^2$-drift-approximation error assumption.
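A hedged sketch of how the Brownian-bridge construction turns into a regression problem. It assumes the independent coupling $\pi = \mu \otimes \nu^\star$ and a scalar diffusion coefficient `sigma`, neither of which is fixed by the abstract. Given $(X_0, X_1) \sim \pi$ and $t \in [0,1)$, the Brownian bridge satisfies $X_t \sim \mathcal{N}((1-t)X_0 + tX_1, \sigma^2 t(1-t) I)$ and has drift $(X_1 - X_t)/(1-t)$; the conditional expectation of that drift given $X_t$ is the drift of the Markovian projection. The helper names are hypothetical, and the cut-off at $t = 0.999$ is our choice to avoid the singular drift at $t = 1$.

```python
import math
import random


def brownian_bridge_sample(x0, x1, t, sigma, rng=random):
    """Draw X_t of a Brownian bridge (diffusion coefficient sigma) from x0 at t=0 to x1 at t=1."""
    std = sigma * math.sqrt(t * (1.0 - t))
    return [(1.0 - t) * a + t * b + std * rng.gauss(0.0, 1.0) for a, b in zip(x0, x1)]


def bridge_drift(xt, x1, t):
    """Drift of the Brownian bridge at (t, xt) when pinned at x1 at time 1."""
    return [(b - x) / (1.0 - t) for x, b in zip(xt, x1)]


def training_batch(sample_mu, sample_target, batch_size, sigma, rng=random):
    """Regression triples (t, X_t, target) for learning the Markovian projection's drift.

    Assumes the independent coupling; a model b_theta(t, x) would be fit to
    `target` by least squares.
    """
    batch = []
    for _ in range(batch_size):
        x0, x1 = sample_mu(), sample_target()   # (X_0, X_1) drawn from the coupling
        t = rng.uniform(0.0, 0.999)             # stay away from the singularity at t = 1
        xt = brownian_bridge_sample(x0, x1, t, sigma, rng)
        batch.append((t, xt, bridge_drift(xt, x1, t)))
    return batch
```

Minimizing the squared error between a model and `target` over such batches recovers, in the population limit, the conditional expectation of the bridge drift, which is why a plain regression loss suffices.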

Projected Langevin dynamics and a gradient flow for entropic optimal transport

Sep 15, 2023

Abstract: The classical (overdamped) Langevin dynamics provides a natural algorithm for sampling from its invariant measure, which uniquely minimizes an energy functional over the space of probability measures and concentrates around the minimizer(s) of the associated potential when the noise parameter is small. We introduce analogous diffusion dynamics that sample from an entropy-regularized optimal transport plan, which uniquely minimizes the same energy functional but constrained to the set $\Pi(\mu,\nu)$ of couplings of two given marginal probability measures $\mu$ and $\nu$ on $\mathbb{R}^d$, and which concentrates around the optimal transport coupling(s) for small values of the regularization parameter. More specifically, our process satisfies two key properties. First, the law of the solution at each time stays in $\Pi(\mu,\nu)$ if it is initialized there. Second, the long-time limit is the unique solution of an entropic optimal transport problem. In addition, we show by means of a new log-Sobolev-type inequality that the convergence holds exponentially fast for sufficiently large regularization parameter and for a class of marginals that strictly includes all strongly log-concave measures. By studying the induced Wasserstein geometry of the submanifold $\Pi(\mu,\nu)$, we argue that the SDE can be viewed as a Wasserstein gradient flow on this space of couplings, at least when $d=1$, and we identify a conjectural gradient flow for $d \ge 2$. The main technical difficulty stems from the appearance of conditional expectation terms, which serve to constrain the dynamics to $\Pi(\mu,\nu)$.
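The classical dynamics in the first sentence can be illustrated with the unadjusted Langevin algorithm, i.e. an Euler-Maruyama discretization of $dX_t = -\nabla V(X_t)\,dt + \sqrt{2\varepsilon}\,dB_t$, whose invariant density is proportional to $e^{-V/\varepsilon}$. The sketch below is one-dimensional, and the quadratic potential, step size and function name are hypothetical choices. It does not implement the projected dynamics of the paper, whose conditional-expectation terms keep the law inside $\Pi(\mu,\nu)$.

```python
import math
import random


def langevin_chains(grad_v, eps, step, n_steps, n_chains, x_init=0.0, seed=0):
    """Unadjusted Langevin algorithm for dX = -V'(X) dt + sqrt(2 eps) dB in one dimension.

    Runs n_chains independent chains; their final states approximately
    sample the density proportional to exp(-V / eps).
    """
    rng = random.Random(seed)
    xs = [x_init] * n_chains
    noise_scale = math.sqrt(2.0 * eps * step)
    for _ in range(n_steps):
        # one Euler-Maruyama step for every chain
        xs = [x - step * grad_v(x) + noise_scale * rng.gauss(0.0, 1.0) for x in xs]
    return xs


# V(x) = (x - 2)^2 / 2 and eps = 0.5, so the target law is N(2, 0.5).
samples = langevin_chains(lambda x: x - 2.0, eps=0.5, step=0.05, n_steps=300, n_chains=1000)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
```

The discretization slightly inflates the stationary variance: for this linear drift it equals $2\varepsilon/(2-h) \approx 0.513$ with step $h = 0.05$ instead of $0.5$. Shrinking `eps` concentrates the samples around the minimizer $x = 2$, matching the small-noise behavior described above.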

Score diffusion models without early stopping: finite Fisher information is all you need

Aug 23, 2023

Abstract: Diffusion models are a new class of generative models built around the estimation of the score function associated with a stochastic differential equation. Once learned, the approximate score function is used to simulate the corresponding time-reversal process, which in turn generates approximate data samples. Despite the evident practical significance of these models, comprehensive quantitative results are still lacking, especially for non-regular scores and estimators. Almost all reported bounds in Kullback-Leibler (KL) divergence assume that either the score function or its approximation is Lipschitz uniformly in time. However, this condition is very restrictive in practice or difficult to establish. To circumvent this issue, previous works mainly focused on establishing convergence bounds in KL for an early-stopped version of the diffusion model and a smoothed version of the data distribution, or on assuming that the data distribution is supported on a compact manifold. These explorations have led to interesting bounds in either Wasserstein or Fortet-Mourier metrics. However, the relevance of such early-stopping procedures or compactness conditions remains an open question; in particular, it is unclear whether there exists a natural and mild condition ensuring explicit and sharp convergence bounds in KL. In this article, we tackle these limitations by focusing on score diffusion models with fixed step size stemming from the Ornstein-Uhlenbeck semigroup and its kinetic counterpart. Our study provides a rigorous analysis, yielding simple, improved and sharp convergence bounds in KL that apply to any data distribution with finite Fisher information with respect to the standard Gaussian distribution.
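A hedged one-dimensional sketch of the kind of sampler analysed here. The forward process is the Ornstein-Uhlenbeck SDE $dX_t = -X_t\,dt + \sqrt{2}\,dB_t$, and its time reversal $dY_s = [Y_s + 2\,\partial_y \log p_{T-s}(Y_s)]\,ds + \sqrt{2}\,dB_s$ is discretized with a fixed step and run all the way to $s = T$, without early stopping. To keep the example self-contained we assume Gaussian data $\mathcal{N}(m, s^2)$, for which the score is available in closed form (and the Fisher information is finite); in practice the score is a learned approximation. The horizon, step count and helper names are illustrative assumptions.

```python
import math
import random


def ou_marginal(m, s2, t):
    """Mean and variance at time t of dX = -X dt + sqrt(2) dB started from N(m, s2)."""
    return m * math.exp(-t), s2 * math.exp(-2 * t) + 1.0 - math.exp(-2 * t)


def score(x, t, m, s2):
    """Exact score d/dx log p_t(x) when the data law is N(m, s2)."""
    mean, var = ou_marginal(m, s2, t)
    return -(x - mean) / var


def reverse_sampler(m, s2, horizon=5.0, n_steps=200, n_samples=1000, seed=0):
    """Euler-Maruyama for dY = [Y + 2 score(Y, T - s)] ds + sqrt(2) dB with a fixed step.

    Starts from the standard Gaussian (the stationary law of the forward
    process) and integrates up to the final time: no early stopping.
    """
    rng = random.Random(seed)
    h = horizon / n_steps
    ys = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    for k in range(n_steps):
        t_fwd = horizon - k * h          # forward time matching reverse time k * h
        ys = [y + h * (y + 2.0 * score(y, t_fwd, m, s2)) + math.sqrt(2.0 * h) * rng.gauss(0.0, 1.0)
              for y in ys]
    return ys


ys = reverse_sampler(3.0, 0.25)
mean = sum(ys) / len(ys)
var = sum((y - mean) ** 2 for y in ys) / len(ys)
```

With the exact score, the empirical mean and variance of `ys` should be close to $3$ and $0.25$; the remaining gap comes from the fixed step size and from starting at $\mathcal{N}(0,1)$ instead of $p_T$.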

Non-asymptotic convergence bounds for Sinkhorn iterates and their gradients: a coupling approach

Apr 13, 2023

Abstract: Computational optimal transport (OT) has recently emerged as a powerful framework with applications in various fields. In this paper we focus on a relaxation of the original OT problem, the entropic OT problem, which makes it possible to implement efficient and practical algorithmic solutions, even in high-dimensional settings. This formulation, also known as the Schrödinger Bridge problem, notably connects with Stochastic Optimal Control (SOC) and can be solved with the popular Sinkhorn algorithm. For discrete state spaces, this algorithm is known to converge exponentially fast; however, achieving a similar rate of convergence in more general settings is still an active area of research. In this work, we analyze the convergence of the Sinkhorn algorithm for probability measures defined on the $d$-dimensional torus $\mathbb{T}_L^d$ that admit densities with respect to the Haar measure of $\mathbb{T}_L^d$. In particular, we prove pointwise exponential convergence of Sinkhorn iterates and their gradients. Our proof relies on the connection between these iterates and the evolution, along the Hamilton-Jacobi-Bellman equations, of value functions obtained from SOC problems. Our approach is novel in that it is purely probabilistic and relies on coupling by reflection techniques for controlled diffusions on the torus.
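For intuition about the torus setting, here is a hedged numerical sketch on a discretized one-dimensional torus $\mathbb{T}_L$, a simplification of $\mathbb{T}_L^d$ with $d = 1$. Uniform grid points stand in for the Haar measure, the cost is half the squared geodesic distance, and the marginal densities $\propto e^{\cos(2\pi x/L)}$ and $\propto e^{\sin(2\pi x/L)}$, the grid size and $\varepsilon = 0.1$ are arbitrary choices. The log-domain updates record, at each iteration, the sup-norm change of the potential $f$ and of its periodic finite-difference gradient, discrete stand-ins for the quantities whose exponential convergence the paper proves. This is only an illustration, not the paper's probabilistic coupling argument.

```python
import math


def torus_dist2(x, y, length):
    """Squared geodesic distance on the circle R / (length * Z)."""
    d = abs(x - y) % length
    return min(d, length - d) ** 2


def logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))


def torus_sinkhorn(mu, nu, grid, length, eps, n_iter=60):
    """Log-domain Sinkhorn on a uniform grid of the one-dimensional torus.

    Returns potentials f, g and, for each iteration, the sup-norm change of f
    and of its periodic forward-difference gradient.
    """
    n = len(grid)
    h = length / n                                  # grid spacing
    cost = [[0.5 * torus_dist2(x, y, length) for y in grid] for x in grid]
    f, g = [0.0] * n, [0.0] * n
    history = []
    for _ in range(n_iter):
        f_old = f
        f = [-eps * logsumexp([math.log(nu[j]) + (g[j] - cost[i][j]) / eps for j in range(n)])
             for i in range(n)]
        g = [-eps * logsumexp([math.log(mu[i]) + (f[i] - cost[i][j]) / eps for i in range(n)])
             for j in range(n)]
        df = [a - b for a, b in zip(f, f_old)]
        grad_change = max(abs(df[(i + 1) % n] - df[i]) / h for i in range(n))
        history.append((max(abs(v) for v in df), grad_change))
    return f, g, history


# Smooth positive densities on the torus of length L = 1.
n_points, length, eps = 20, 1.0, 0.1
grid = [length * k / n_points for k in range(n_points)]
w_mu = [math.exp(math.cos(2 * math.pi * x / length)) for x in grid]
w_nu = [math.exp(math.sin(2 * math.pi * x / length)) for x in grid]
total_mu, total_nu = sum(w_mu), sum(w_nu)
mu = [w / total_mu for w in w_mu]
nu = [w / total_nu for w in w_nu]
f, g, history = torus_sinkhorn(mu, nu, grid, length, eps)
```

Both entries of `history` should shrink geometrically until they reach floating-point precision.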

Mean Field Optimization Problem Regularized by Fisher Information

Feb 12, 2023

Abstract: Recently there has been rising interest in mean field optimization, in particular because of its role in analyzing the training of neural networks. In this paper, by adding the Fisher information as a regularizer, we relate the regularized mean field optimization problem to a so-called mean field Schrödinger dynamics. We develop an energy-dissipation method to show that the marginal distributions of the mean field Schrödinger dynamics converge exponentially fast towards the unique minimizer of the regularized optimization problem. Remarkably, the mean field Schrödinger dynamics is proved to be a gradient flow on the space of probability measures with respect to the relative entropy. Finally, we propose a Monte Carlo method to sample the marginal distributions of the mean field Schrödinger dynamics.

Game on Random Environment, Mean-field Langevin System and Neural Networks

Apr 22, 2020

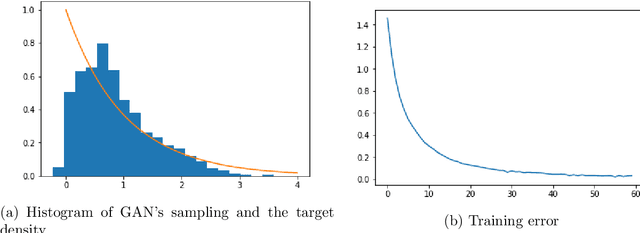

Abstract: In this paper we study a class of games regularized by the relative entropy, in which the players' strategies are coupled through a random environment variable. Besides proving the existence and uniqueness of equilibria of such games, we prove that the marginal laws of the corresponding mean-field Langevin systems converge towards the games' equilibria in different settings. As an application, dynamic games can be treated as games on a random environment by regarding the time horizon as the environment. In practice, our results can be applied to analysing the stochastic gradient descent algorithm for deep neural networks in the context of supervised learning, as well as for generative adversarial networks.
