Gadiel Sznaier Camps

Touch-GS: Visual-Tactile Supervised 3D Gaussian Splatting

Mar 18, 2024

Abstract: In this work, we propose a novel method to supervise 3D Gaussian Splatting (3DGS) scenes using optical tactile sensors. Optical tactile sensors are now widely used in robotics for manipulation and object representation; however, raw optical tactile sensor data is unsuitable for directly supervising a 3DGS scene. Our representation leverages a Gaussian Process Implicit Surface to implicitly represent the object, combining many touches into a unified representation with uncertainty. We merge this model with a monocular depth estimation network, which is aligned in a two-stage process: coarse alignment with a depth camera, followed by fine adjustment to match our touch data. For every training image, our method produces a corresponding fused depth and uncertainty map. Utilizing this additional information, we propose a new loss function, variance weighted depth supervised loss, for training the 3DGS scene model. We leverage the DenseTact optical tactile sensor and RealSense RGB-D camera to show that combining touch and vision in this manner leads to quantitatively and qualitatively better results than vision or touch alone in few-view scene synthesis on opaque as well as reflective and transparent objects. Please see our project page at http://armlabstanford.github.io/touch-gs
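The abstract does not give the formulas behind the fused depth map or the variance weighted loss, so the following is only a minimal sketch under stated assumptions: two per-pixel depth estimates (vision and touch) are combined by inverse-variance weighting, and the depth loss divides each squared residual by the fused variance. The function names `fuse_depth` and `variance_weighted_depth_loss`, the `eps` term, and the exact weighting are hypothetical illustrations, not the authors' implementation.

```python
def fuse_depth(d_vision, var_vision, d_touch, var_touch):
    """Fuse two per-pixel depth estimates by inverse-variance weighting.

    Assumption: each input is a flat list with one value per pixel, and
    all variances are strictly positive.
    """
    fused, fused_var = [], []
    for dv, vv, dt, vt in zip(d_vision, var_vision, d_touch, var_touch):
        w_v, w_t = 1.0 / vv, 1.0 / vt          # more certain source gets more weight
        fused.append((w_v * dv + w_t * dt) / (w_v + w_t))
        fused_var.append(1.0 / (w_v + w_t))    # fused variance is never larger than either input
    return fused, fused_var


def variance_weighted_depth_loss(d_rendered, d_fused, var_fused, eps=1e-6):
    """Mean squared depth error, down-weighted where the fused depth is uncertain.

    Assumption: the weight is 1 / (variance + eps); the paper may use a
    different form.
    """
    total = 0.0
    for dr, df, v in zip(d_rendered, d_fused, var_fused):
        total += (dr - df) ** 2 / (v + eps)
    return total / len(d_rendered)


if __name__ == "__main__":
    depth, var = fuse_depth([1.0], [1.0], [3.0], [1.0])
    print(depth, var)
    print(variance_weighted_depth_loss([2.5], depth, var))
```

Under this weighting, pixels near a touch (low variance) pull the rendered depth strongly toward the fused value, while pixels covered only by the monocular estimate contribute a softer constraint.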

Learning Deep SDF Maps Online for Robot Navigation and Exploration

Aug 02, 2022

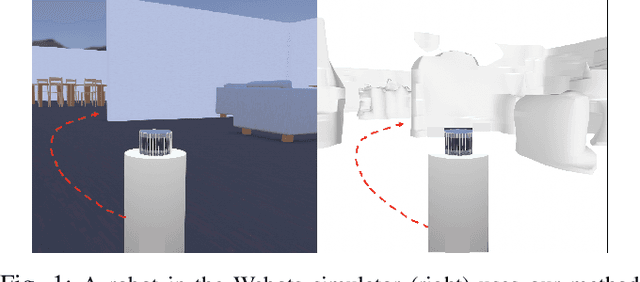

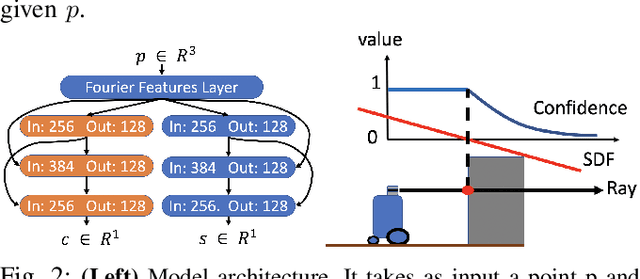

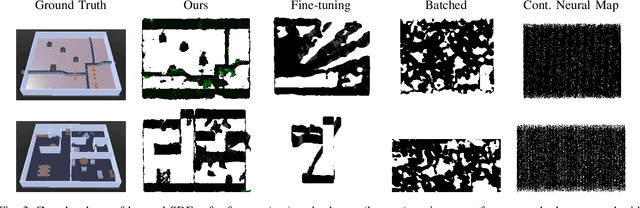

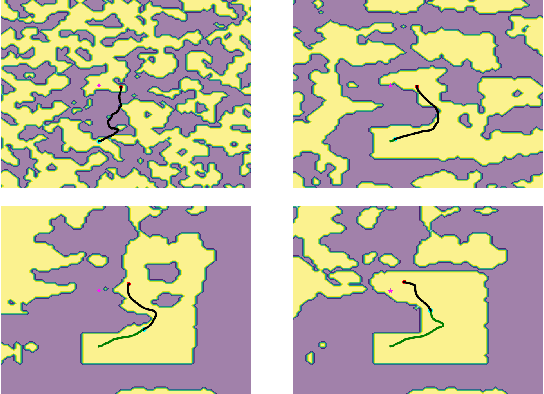

Abstract: We propose an algorithm to (i) learn online a deep signed distance function (SDF) with a LiDAR-equipped robot to represent the 3D environment geometry, and (ii) plan collision-free trajectories given this deep learned map. Our algorithm takes a stream of incoming LiDAR scans and continually optimizes a neural network to represent the SDF of the environment in the robot's current vicinity. When the SDF network quality saturates, we cache a copy of the network, along with a learned confidence metric, and initialize a new SDF network to continue mapping new regions of the environment. We then combine all the cached local SDFs through a confidence-weighted scheme to give a global SDF for planning. For planning, we make use of a sequential convex model predictive control (MPC) algorithm. The MPC planner optimizes a dynamically feasible trajectory for the robot while enforcing no collisions with obstacles mapped in the global SDF. We show that our online mapping algorithm produces higher-quality maps than existing methods for online SDF training. In the WeBots simulator, we further showcase the combined mapper and planner running online, navigating autonomously and without collisions in an unknown environment.
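The abstract does not specify the confidence-weighted scheme, so the following is only a minimal sketch of one plausible reading: the global SDF at a query point is the confidence-weighted average of the cached local SDFs. Analytic sphere SDFs stand in for the cached neural networks, and a Gaussian falloff stands in for the learned confidence metric; `sphere_sdf`, `gaussian_confidence`, `global_sdf`, and `eps` are all hypothetical names introduced for illustration.

```python
import math


def sphere_sdf(center, radius):
    """Stand-in for a cached local SDF network: signed distance to a sphere."""
    def sdf(p):
        return math.dist(p, center) - radius
    return sdf


def gaussian_confidence(center, scale):
    """Stand-in for a learned confidence: high near the region the map covers."""
    def conf(p):
        return math.exp(-(math.dist(p, center) / scale) ** 2)
    return conf


def global_sdf(p, local_maps, eps=1e-9):
    """Confidence-weighted average of local SDF values at point p.

    local_maps is a list of (sdf, confidence) callable pairs.
    Assumption: a normalized weighted average; the paper may blend differently.
    """
    num, den = 0.0, 0.0
    for sdf, conf in local_maps:
        c = conf(p)
        num += c * sdf(p)
        den += c
    return num / (den + eps)


if __name__ == "__main__":
    maps = [
        (sphere_sdf((0.0, 0.0, 0.0), 1.0), gaussian_confidence((0.0, 0.0, 0.0), 2.0)),
        (sphere_sdf((5.0, 0.0, 0.0), 1.0), gaussian_confidence((5.0, 0.0, 0.0), 2.0)),
    ]
    query = (2.0, 0.0, 0.0)
    print(global_sdf(query, maps))
```

A planner could then treat `global_sdf(p, maps) > margin` as a collision-avoidance constraint at each trajectory point, with `margin` chosen from the robot's footprint.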