G A Pershing

CROM: Continuous Reduced-Order Modeling of PDEs Using Implicit Neural Representations

Jun 06, 2022

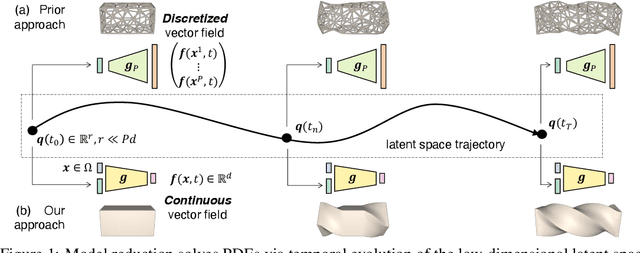

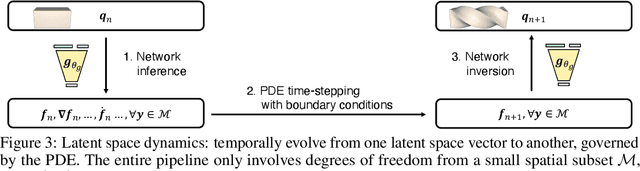

Abstract:The excessive runtime of high-fidelity partial differential equation (PDE) solvers makes them unsuitable for time-critical applications. We propose to accelerate PDE solvers using reduced-order modeling (ROM). Whereas prior ROM approaches reduce the dimensionality of discretized vector fields, our continuous reduced-order modeling (CROM) approach builds a smooth, low-dimensional manifold of the continuous vector fields themselves, not their discretization. We represent this reduced manifold using neural fields, relying on their continuous and differentiable nature to efficiently solve the PDEs. CROM may train on any and all available numerical solutions of the continuous system, even when they are obtained using diverse methods or discretizations. After the low-dimensional manifolds are built, solving PDEs requires significantly less computational resources. Since CROM is discretization-agnostic, CROM-based PDE solvers may optimally adapt discretization resolution over time to economize computation. We validate our approach on an extensive range of PDEs with training data from voxel grids, meshes, and point clouds. Large-scale experiments demonstrate that our approach obtains speed, memory, and accuracy advantages over prior ROM approaches while gaining 109$\times$ wall-clock speedup over full-order models on CPUs and 89$\times$ speedup on GPUs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge