Friedrich M. Rockenbauer

Traversing Mars: Cooperative Informative Path Planning to Efficiently Navigate Unknown Scenes

Jun 12, 2024

Abstract: The ability to traverse an unknown environment is crucial for autonomous robot operations. However, due to limited sensing capabilities and system constraints, approaching this problem with a single robot agent can be slow, costly, and unsafe. For example, in planetary exploration missions, the wear on a rover's wheels from abrasive terrain should be minimized at all costs, as repairs are infeasible. On the other hand, utilizing a scouting robot such as a micro aerial vehicle (MAV) has the potential to reduce wear and time costs and to increase the safety of a follower robot. This work proposes a novel cooperative informative path planning (IPP) framework that allows a scout (e.g., an MAV) to efficiently explore the minimum-cost path for a follower (e.g., a rover) to reach the goal. We derive theoretical guarantees for our algorithm, and prove that the algorithm always terminates, always finds the optimal path if it exists, and terminates early when the found path is shown to be optimal or infeasible. We show in a thorough experimental evaluation that the guarantees hold in practice, and that our algorithm is 22.5% quicker to find the optimal path and 15% quicker to terminate compared to existing methods.
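The early-termination idea in the abstract above can be illustrated with a generic optimistic-planning check. The following is only a minimal sketch under stated assumptions, not the paper's algorithm: the grid representation, the names `optimistic_path` and `termination_status`, and the lower bound `MIN_COST` are all hypothetical. Unknown cells are charged a lower bound on their true cost, so if the cheapest optimistic path crosses only observed cells it cannot be beaten, and if no optimistic path exists the goal is unreachable.

```python
import heapq

UNKNOWN = None            # hypothetical marker: cell not yet observed by the scout
BLOCKED = float("inf")    # hypothetical marker: observed and untraversable
MIN_COST = 1.0            # assumed lower bound on the true cost of any unknown cell


def optimistic_path(grid, start, goal):
    """Dijkstra over a 4-connected grid, charging MIN_COST for unknown cells.

    Returns (cost, path) or None if the goal is unreachable even optimistically.
    The cost of entering a cell is the cell's value; the start cell is free.
    """
    rows, cols = len(grid), len(grid[0])

    def cost(cell):
        value = grid[cell[0]][cell[1]]
        return MIN_COST if value is UNKNOWN else value

    dist = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if d > dist.get(cell, float("inf")):
            continue  # stale queue entry
        if cell == goal:
            path = [cell]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return d, path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nxt[0] < rows and 0 <= nxt[1] < cols:
                nd = d + cost(nxt)  # infinite for BLOCKED cells, so never accepted
                if nd < dist.get(nxt, float("inf")):
                    dist[nxt] = nd
                    parent[nxt] = cell
                    heapq.heappush(queue, (nd, nxt))
    return None


def termination_status(grid, start, goal):
    """Classify the current map as 'optimal', 'infeasible', or 'keep exploring'."""
    result = optimistic_path(grid, start, goal)
    if result is None:
        return "infeasible"        # no path exists even under optimistic costs
    _, path = result
    if all(grid[r][c] is not UNKNOWN for r, c in path):
        return "optimal"           # optimistic cost equals true cost on this path
    return "keep exploring"        # the scout should observe the unknown cells
```

In this sketch the check is conservative: when an optimistic path through unknown cells ties with a fully observed one, it may ask for more exploration than strictly necessary.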

Exploring Event Camera-based Odometry for Planetary Robots

Apr 12, 2022

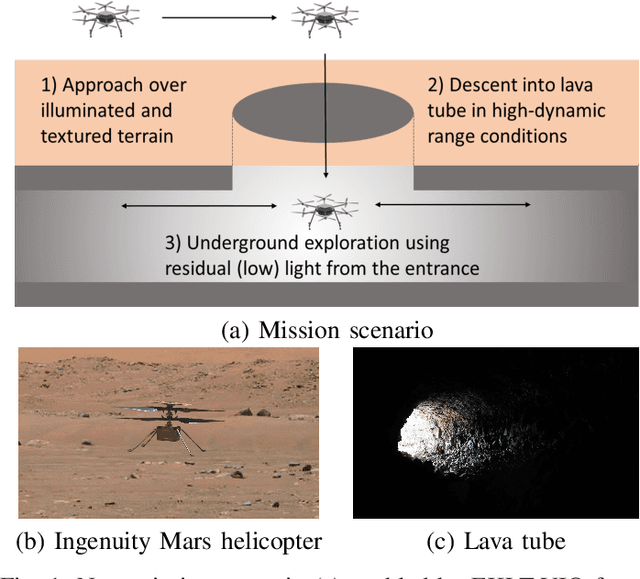

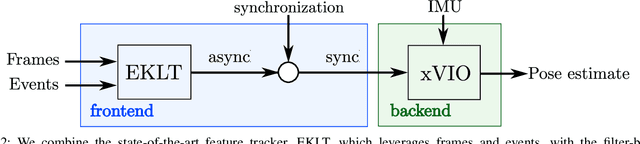

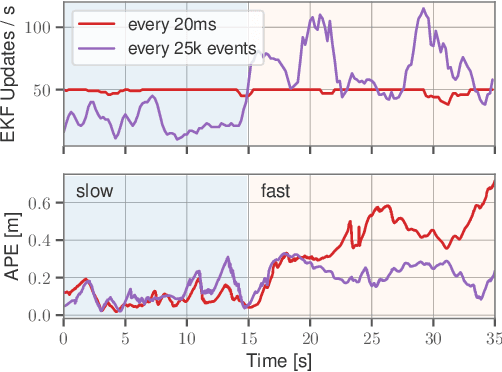

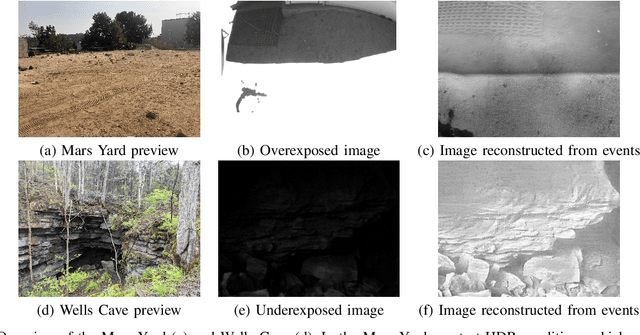

Abstract: Due to their resilience to motion blur and high robustness in low-light and high dynamic range conditions, event cameras are poised to become enabling sensors for vision-based exploration on future Mars helicopter missions. However, existing event-based visual-inertial odometry (VIO) algorithms either suffer from high tracking errors or are brittle, since they cannot cope with significant depth uncertainties caused by an unforeseen loss of tracking or other effects. In this work, we introduce EKLT-VIO, which addresses both limitations by combining a state-of-the-art event-based frontend with a filter-based backend. This makes it both accurate and robust to uncertainties, outperforming event- and frame-based VIO algorithms on challenging benchmarks by 32%. In addition, we demonstrate accurate performance in hover-like conditions (outperforming existing event-based methods) as well as high robustness in newly collected Mars-like and high-dynamic-range sequences, where existing frame-based methods fail. In doing so, we show that event-based VIO is the way forward for vision-based exploration on Mars.
