Franco Zambonelli

Space-fluid Adaptive Sampling by Self-Organisation

Oct 31, 2022

Abstract: A recurrent task in coordinated systems is managing (estimating, predicting, or controlling) signals that vary in space, such as distributed sensed data or computation outcomes. Especially in large-scale settings, the problem can be addressed through decentralised and situated computing systems: nodes can locally sense, process, and act upon signals, and coordinate with neighbours to implement collective strategies. Accordingly, in this work we devise distributed coordination strategies for the estimation of a spatial phenomenon through collaborative adaptive sampling. Our design is based on the idea of dynamically partitioning space into regions that compete and grow/shrink to provide accurate aggregate sampling. Such regions hence define a sort of virtualised space that is "fluid", since its structure adapts in response to pressure forces exerted by the underlying phenomenon. We provide an adaptive sampling algorithm in the field-based coordination framework, and prove it is self-stabilising and locally optimal. Finally, we verify by simulation that the proposed algorithm effectively carries out a spatially adaptive sampling while maintaining a tuneable trade-off between accuracy and efficiency.

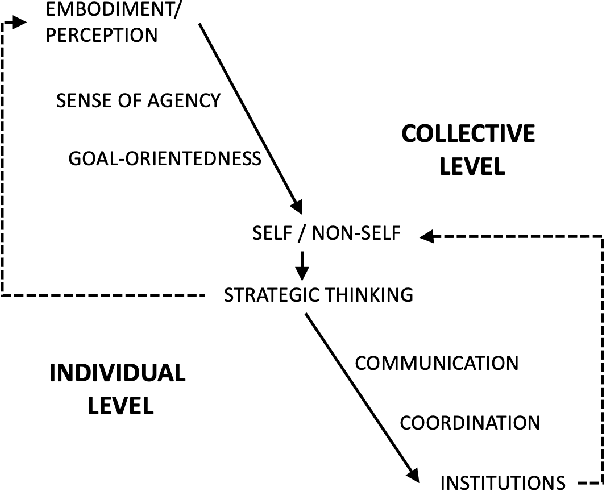

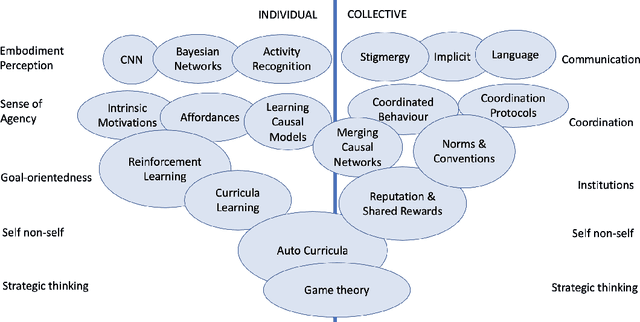

Individual and Collective Autonomous Development

Oct 03, 2021

Abstract: The increasing complexity and unpredictability of many ICT scenarios let us envision that future systems will have to dynamically learn how to act and adapt to face evolving situations with little or no a priori knowledge, both at the level of individual components and at the collective level. In other words, such systems should become able to autonomously develop models of themselves and of their environment. Autonomous development includes: learning models of their own capabilities; learning how to act purposefully towards the achievement of specific goals; and learning how to act collectively, i.e., accounting for the presence of others. In this paper, we introduce the vision of autonomous development in ICT systems, by framing its key concepts and by illustrating suitable application domains. Then, we overview the many research areas that are contributing or can potentially contribute to the realization of the vision, and identify some key research challenges.

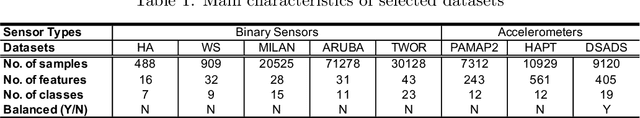

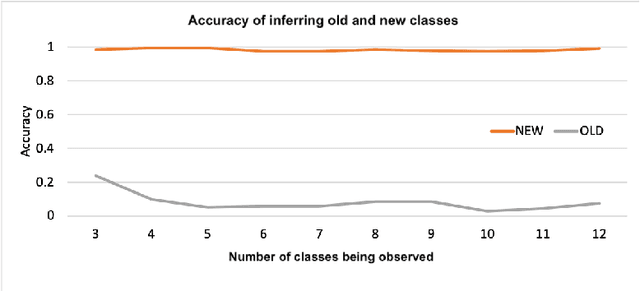

Continual Learning in Sensor-based Human Activity Recognition: an Empirical Benchmark Analysis

Apr 19, 2021

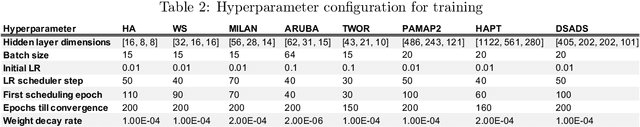

Abstract: Sensor-based human activity recognition (HAR), i.e., the ability to discover human daily activity patterns from wearable or embedded sensors, is a key enabler for many real-world applications in smart homes, personal healthcare, and urban planning. However, with an increasing number of applications being deployed, an important question arises: how can a HAR system autonomously learn new activities over a long period of time without being re-engineered from scratch? This problem is known as continual learning and has been particularly popular in the domain of computer vision, where several techniques to attack it have been developed. This paper aims to assess to what extent such continual learning techniques can be applied to the HAR domain. To this end, we propose a general framework to evaluate the performance of such techniques on various types of commonly used HAR datasets. We then present a comprehensive empirical analysis of their computational cost and of their effectiveness in tackling HAR-specific challenges (i.e., sensor noise and label scarcity). The presented results uncover useful insights on their applicability and suggest future research directions for HAR systems. Our code, models and data are available at https://github.com/srvCodes/continual-learning-benchmark.
