Martin Schiemer

Hadamard Domain Training with Integers for Class Incremental Quantized Learning

Oct 05, 2023

Abstract: Continual learning is a desirable feature in many modern machine learning applications: it allows in-field adaptation and updating, ranging from accommodating distribution shift, to fine-tuning, to learning new tasks. For applications with privacy and low-latency requirements, the compute and memory demands imposed by continual learning can be cost-prohibitive for resource-constrained edge platforms. Reducing computational precision through fully quantized training (FQT) simultaneously reduces memory footprint and increases compute efficiency for both training and inference. However, aggressive quantization, especially integer FQT, typically degrades model accuracy to unacceptable levels. In this paper, we propose a technique that leverages inexpensive Hadamard transforms to enable low-precision training with only integer matrix multiplications. We further determine which tensors need stochastic rounding and propose tiled matrix multiplication to enable low-bit-width accumulators. We demonstrate the effectiveness of our technique on several human activity recognition datasets and CIFAR100 in a class incremental learning setting. We achieve less than 0.5% and 3% accuracy degradation while we quantize all matrix multiplication inputs down to 4 bits with 8-bit accumulators.

Continual Learning in Sensor-based Human Activity Recognition: an Empirical Benchmark Analysis

Apr 19, 2021

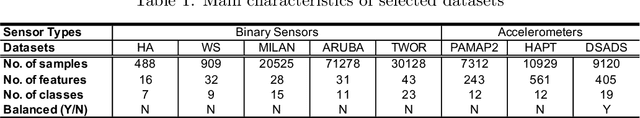

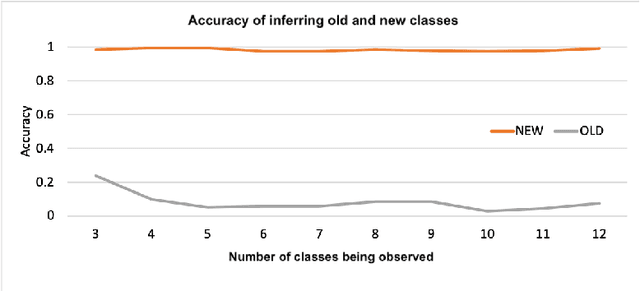

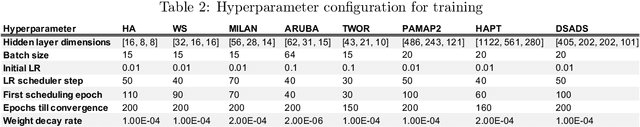

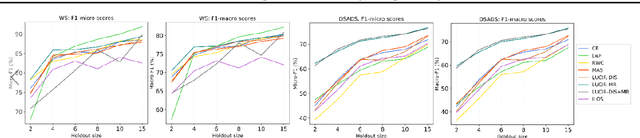

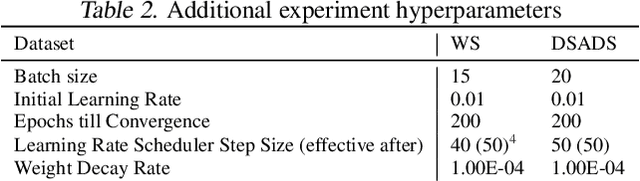

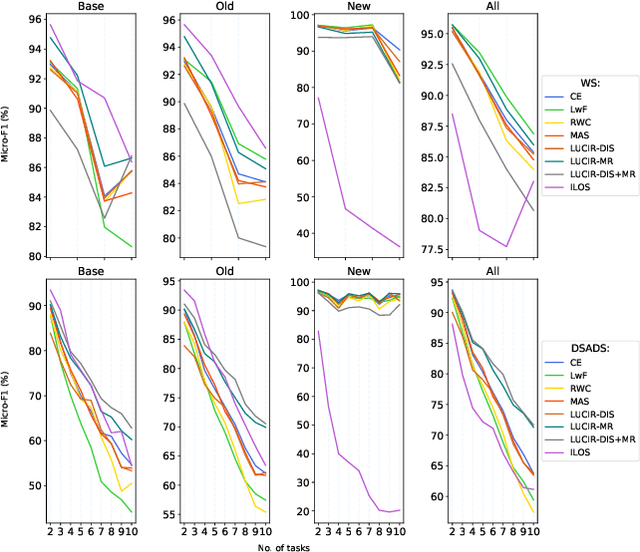

Abstract: Sensor-based human activity recognition (HAR), i.e., the ability to discover human daily activity patterns from wearable or embedded sensors, is a key enabler for many real-world applications in smart homes, personal healthcare, and urban planning. However, with an increasing number of applications being deployed, an important question arises: how can a HAR system autonomously learn new activities over a long period of time without being re-engineered from scratch? This problem is known as continual learning and has been particularly popular in the domain of computer vision, where several techniques to attack it have been developed. This paper aims to assess to what extent such continual learning techniques can be applied to the HAR domain. To this end, we propose a general framework to evaluate the performance of such techniques on various types of commonly used HAR datasets. We then present a comprehensive empirical analysis of their computational cost and their effectiveness in tackling HAR-specific challenges (i.e., sensor noise and label scarcity). The presented results uncover useful insights on their applicability and suggest future research directions for HAR systems. Our code, models and data are available at https://github.com/srvCodes/continual-learning-benchmark.

Continual Learning in Human Activity Recognition: an Empirical Analysis of Regularization

Jul 06, 2020

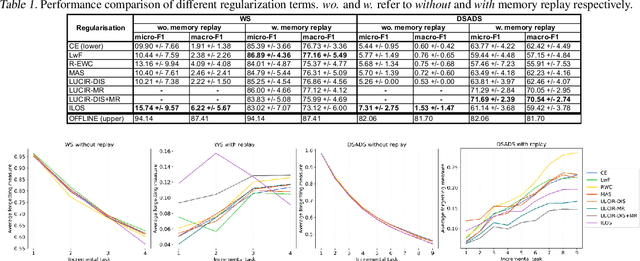

Abstract: Given the growing trend of continual learning techniques for deep neural networks focusing on the domain of computer vision, there is a need to identify which of these generalize well to other tasks such as human activity recognition (HAR). As recent methods have mostly been composed of loss regularization terms and memory replay, we provide a constituent-wise analysis of some prominent task-incremental learning techniques employing these on HAR datasets. We find that most regularization approaches lack substantial effect and provide an intuition of when they fail. Thus, we make the case that the development of continual learning algorithms should be motivated by rather diverse task domains.
