Francesco Regazzoni

Emerging Threats and Countermeasures in Neuromorphic Systems: A Survey

Jan 23, 2026

Abstract: Neuromorphic computing mimics brain-inspired mechanisms through spiking neurons and energy-efficient processing, offering a pathway to efficient in-memory computing (IMC). However, these advancements raise critical security and privacy concerns. As the adoption of bio-inspired architectures and memristive devices increases, so does the urgency to assess the vulnerability of these emerging technologies to hardware and software attacks. Emerging architectures introduce new attack surfaces, particularly due to asynchronous, event-driven processing and stochastic device behavior. The integration of memristors into neuromorphic hardware and software implementations in spiking neural networks offers diverse possibilities for advanced computing architectures, including their role in security-aware applications. This survey systematically analyzes the security landscape of neuromorphic systems, covering attack methodologies, side-channel vulnerabilities, and countermeasures. We focus on both hardware and software concerns relevant to spiking neural networks (SNNs) and hardware primitives, such as Physical Unclonable Functions (PUFs) and True Random Number Generators (TRNGs) for cryptographic and secure computation applications. We approach this analysis from diverse perspectives, from attack methodologies to countermeasure strategies that integrate efficiency and protection in brain-inspired hardware. This review not only maps the current landscape of security threats but also provides a foundation for developing secure and trustworthy neuromorphic architectures.

Emergence of Structure in Ensembles of Random Neural Networks

May 15, 2025

Abstract: Randomness is ubiquitous in many applications across data science and machine learning. Remarkably, systems composed of random components often display emergent global behaviors that appear deterministic, manifesting a transition from microscopic disorder to macroscopic organization. In this work, we introduce a theoretical model for studying the emergence of collective behaviors in ensembles of random classifiers. We argue that, if the ensemble is weighted through the Gibbs measure defined by adopting the classification loss as an energy, then there exists a finite temperature parameter for the distribution such that the classification is optimal with respect to the loss (or the energy). Interestingly, for the case in which samples are generated by a Gaussian distribution and labels are constructed by employing a teacher perceptron, we analytically prove and numerically confirm that this optimal temperature depends neither on the teacher classifier (which is, by construction of the learning problem, unknown) nor on the number of random classifiers, highlighting the universal nature of the observed behavior. Experiments on the MNIST dataset underline the relevance of this phenomenon in high-quality, noiseless datasets. Finally, a physical analogy allows us to shed light on the self-organizing nature of the studied phenomenon.
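The Gibbs-weighted ensemble described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' code: the function names, the choice of the 0-1 training error as the energy, the parameterization by an inverse temperature `beta`, and the use of untrained linear classifiers with Gaussian weights are all assumptions made for illustration.

```python
import numpy as np


def make_teacher_data(n_samples, dim, seed=0):
    """Gaussian samples labeled by a random teacher perceptron (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    teacher = rng.standard_normal(dim)
    X = rng.standard_normal((n_samples, dim))
    y = np.sign(X @ teacher)
    return X, y


def gibbs_ensemble_predict(X_train, y_train, X_test,
                           n_classifiers=200, beta=5.0, seed=0):
    """Weighted vote of random linear classifiers with Gibbs weights.

    Assumption: the energy of a classifier is its 0-1 training error,
    and beta plays the role of an inverse temperature.
    """
    rng = np.random.default_rng(seed)
    dim = X_train.shape[1]
    # Random, untrained classifiers: one weight vector per row.
    W = rng.standard_normal((n_classifiers, dim))
    # Training predictions in {-1, +1}, shape (n_classifiers, n_train).
    train_pred = np.sign(X_train @ W.T).T
    # Energy: fraction of misclassified training samples per classifier.
    energy = np.mean(train_pred != y_train[None, :], axis=1)
    # Gibbs weights; subtracting the minimum energy avoids underflow.
    weights = np.exp(-beta * (energy - energy.min()))
    weights /= weights.sum()
    # Weighted majority vote on the test points.
    test_pred = np.sign(X_test @ W.T)  # shape (n_test, n_classifiers)
    return np.sign(test_pred @ weights), weights
```

With `beta = 0` every classifier receives the same weight (a plain majority vote), while large `beta` concentrates the weight on the classifiers with the lowest training error. Sweeping `beta` between these extremes is one way to look for the finite optimal temperature that the abstract refers to.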

A model learning framework for inferring the dynamics of transmission rate depending on exogenous variables for epidemic forecasts

Oct 15, 2024

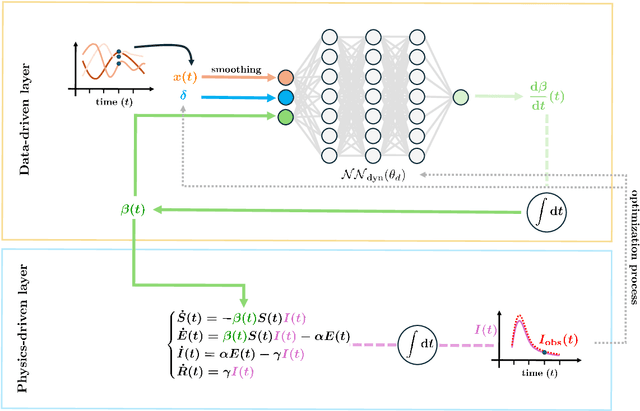

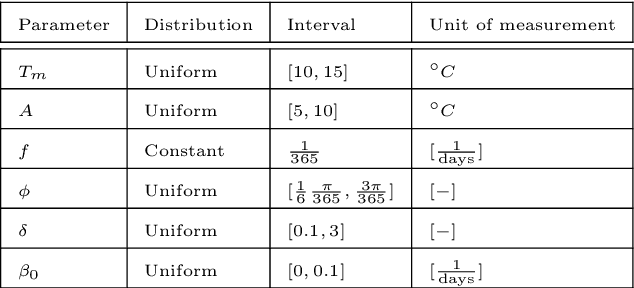

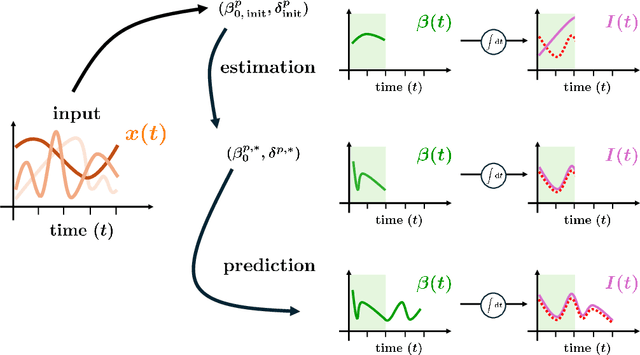

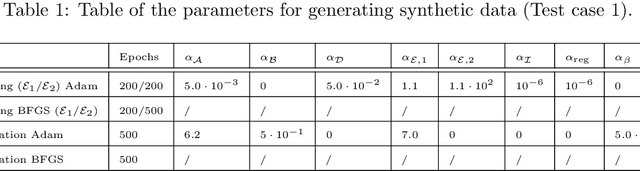

Abstract: In this work, we aim to formalize a novel scientific machine learning framework to reconstruct the hidden dynamics of the transmission rate, whose inaccurate extrapolation can significantly impair the quality of epidemic forecasts, by incorporating the influence of exogenous variables (such as environmental conditions and strain-specific characteristics). We propose a hybrid model that blends a data-driven layer with a physics-based one. The data-driven layer is based on a neural ordinary differential equation that learns the dynamics of the transmission rate, conditioned on the meteorological data and wave-specific latent parameters. The physics-based layer, instead, consists of a standard SEIR compartmental model, in which the transmission rate is an input. The learning strategy follows an end-to-end approach: the loss function quantifies the mismatch between the actual number of infections and its numerical prediction obtained from the SEIR model, which takes as input the transmission rate predicted by the neural ordinary differential equation. We validate this original approach using both a synthetic test case and a realistic test case based on meteorological data (temperature and humidity) and influenza data from Italy between 2010 and 2020. In both scenarios, we achieve low generalization error on the test set and observe strong alignment between the reconstructed model and established findings on the influence of meteorological factors on epidemic spread. Finally, we implement a data assimilation strategy to adapt the neural equation to the specific characteristics of an epidemic wave under investigation, and we conduct sensitivity tests on the network hyperparameters.
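The physics-based layer described in this abstract can be sketched as a standard SEIR model whose transmission rate is supplied from outside. The sketch below is illustrative only. The forward Euler integrator, the parameter values, the normalized compartments, and the mean-squared mismatch are assumptions, and the callable `beta_fn` is a hypothetical stand-in for the transmission rate that the paper obtains from a neural ordinary differential equation.

```python
import numpy as np


def simulate_seir(beta_fn, t_end=100.0, dt=0.1, sigma=1 / 3, gamma=1 / 5,
                  init=(0.99, 0.0, 0.01, 0.0)):
    """Forward Euler integration of a normalized SEIR model.

    beta_fn(t) returns the transmission rate at time t (hypothetical
    stand-in for a learned model); sigma and gamma are the incubation
    and recovery rates (illustrative values).
    Returns an array of shape (n_steps + 1, 4) with columns S, E, I, R.
    """
    n_steps = int(round(t_end / dt))
    traj = np.empty((n_steps + 1, 4))
    traj[0] = init
    for k in range(n_steps):
        s, e, i, r = traj[k]
        new_exposed = beta_fn(k * dt) * s * i
        derivative = np.array([
            -new_exposed,              # dS/dt
            new_exposed - sigma * e,   # dE/dt
            sigma * e - gamma * i,     # dI/dt
            gamma * i,                 # dR/dt
        ])
        traj[k + 1] = traj[k] + dt * derivative
    return traj


def infection_mismatch(predicted_infected, observed_infected):
    """Mean squared mismatch between predicted and observed infections."""
    predicted = np.asarray(predicted_infected, dtype=float)
    observed = np.asarray(observed_infected, dtype=float)
    return float(np.mean((predicted - observed) ** 2))
```

For example, `simulate_seir(lambda t: 0.5)` runs the model with a constant transmission rate. In an end-to-end setting, `beta_fn` would be replaced by a differentiable model and the mismatch minimized with respect to its parameters, which requires an automatic differentiation framework rather than plain NumPy.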

Physics-informed Neural Network Estimation of Material Properties in Soft Tissue Nonlinear Biomechanical Models

Dec 20, 2023

Abstract: The development of biophysical models for clinical applications is rapidly advancing in the research community, thanks to their predictive nature and their ability to assist the interpretation of clinical data. However, high-resolution and accurate multi-physics computational models are computationally expensive and their personalisation involves fine calibration of a large number of parameters, which may be space-dependent, challenging their clinical translation. In this work, we propose a new approach which relies on the combination of physics-informed neural networks (PINNs) with three-dimensional soft tissue nonlinear biomechanical models, capable of reconstructing displacement fields and estimating heterogeneous patient-specific biophysical properties. The proposed learning algorithm encodes information from a limited amount of displacement and, in some cases, strain data that can be routinely acquired in the clinical setting, and combines it with the physics of the problem, represented by a mathematical model based on partial differential equations, to regularise the problem and improve its convergence properties. Several benchmarks are presented to show the accuracy and robustness of the proposed method and its great potential to enable the robust and effective identification of patient-specific, heterogeneous physical properties, such as tissue stiffness. In particular, we demonstrate the capability of the PINN to detect the presence, location and severity of scar tissue, which is beneficial for developing personalised simulation models for disease diagnosis, especially for cardiac applications.

Real-time whole-heart electromechanical simulations using Latent Neural Ordinary Differential Equations

Jun 08, 2023

Abstract: Cardiac digital twins provide a physics- and physiology-informed framework to deliver predictive and personalized medicine. However, high-fidelity multi-scale cardiac models remain a barrier to adoption due to their extensive computational costs and the high number of model evaluations needed for patient-specific personalization. Artificial Intelligence-based methods can make the creation of fast and accurate whole-heart digital twins feasible. In this work, we use Latent Neural Ordinary Differential Equations (LNODEs) to learn the temporal pressure-volume dynamics of a heart failure patient. Our surrogate model based on LNODEs is trained from 400 3D-0D whole-heart closed-loop electromechanical simulations while accounting for 43 model parameters, describing single cell through to whole organ and cardiovascular hemodynamics. The trained LNODEs provide a compact and efficient representation of the 3D-0D model in a latent space by means of a feedforward fully-connected Artificial Neural Network that retains 3 hidden layers with 13 neurons per layer and allows for 300x real-time numerical simulations of the cardiac function on a single processor of a standard laptop. This surrogate model is employed to perform global sensitivity analysis and robust parameter estimation with uncertainty quantification in 3 hours of computations, still on a single processor. We match pressure and volume time traces unseen by the LNODEs during the training phase and we calibrate 4 to 11 model parameters while also providing their posterior distribution. This paper introduces the most advanced surrogate model of cardiac function available in the literature and opens important new avenues for parameter calibration in cardiac digital twins.

Latent Dynamics Networks (LDNets): learning the intrinsic dynamics of spatio-temporal processes

Apr 28, 2023

Abstract: Predicting the evolution of systems that exhibit spatio-temporal dynamics in response to external stimuli is a key enabling technology fostering scientific innovation. Traditional equation-based approaches leverage first principles to yield predictions through the numerical approximation of high-dimensional systems of differential equations, thus calling for large-scale parallel computing platforms and incurring large computational costs. Data-driven approaches, instead, enable the description of system evolution in low-dimensional latent spaces, by leveraging dimensionality reduction and deep learning algorithms. We propose a novel architecture, named Latent Dynamics Network (LDNet), which is able to discover low-dimensional intrinsic dynamics of possibly non-Markovian dynamical systems, thus predicting the time evolution of space-dependent fields in response to external inputs. Unlike popular approaches, in which the latent representation of the solution manifold is learned by means of auto-encoders that map a high-dimensional discretization of the system state into itself, LDNets automatically discover a low-dimensional manifold while learning the latent dynamics, without ever operating in the high-dimensional space. Furthermore, LDNets are meshless algorithms that do not reconstruct the output on a predetermined grid of points, but rather at any point of the domain, thus enabling weight-sharing across query points. These features make LDNets lightweight and easy to train, with excellent accuracy and generalization properties, even in time-extrapolation regimes. We validate our method on several test cases and we show that, for a challenging highly nonlinear problem, LDNets outperform state-of-the-art methods in terms of accuracy (normalized error 5 times smaller), while employing a dramatically smaller number of trainable parameters (more than 10 times fewer).
