Francesco Ballerini

Embed Any NeRF: Graph Meta-Networks for Neural Tasks on Arbitrary NeRF Architectures

Feb 13, 2025

Abstract:Neural Radiance Fields (NeRFs) have emerged as a groundbreaking paradigm for representing 3D objects and scenes by encoding shape and appearance information into the weights of a neural network. Recent works have shown how such weights can be used as input to frameworks processing them to solve deep learning tasks. Yet, these frameworks can only process NeRFs with a specific, predefined architecture. In this paper, we present the first framework that can ingest NeRFs with multiple architectures and perform inference on architectures unseen at training time. We achieve this goal by training a Graph Meta-Network in a representation learning framework. Moreover, we show how a contrastive objective is conducive to obtaining an architecture-agnostic latent space. In experiments on both MLP-based and tri-planar NeRFs, our approach demonstrates robust performance in classification and retrieval tasks that either matches or exceeds that of existing frameworks constrained to single architectures, thus providing the first architecture-agnostic method to perform tasks on NeRFs by processing their weights.

Connecting NeRFs, Images, and Text

Apr 11, 2024

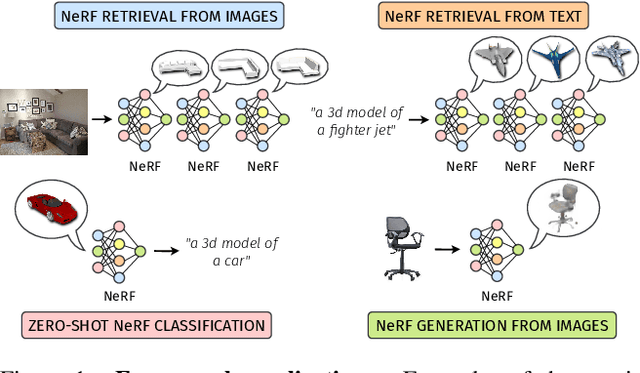

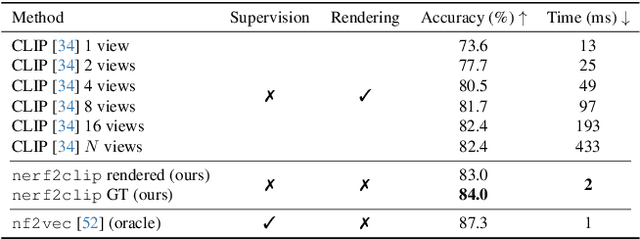

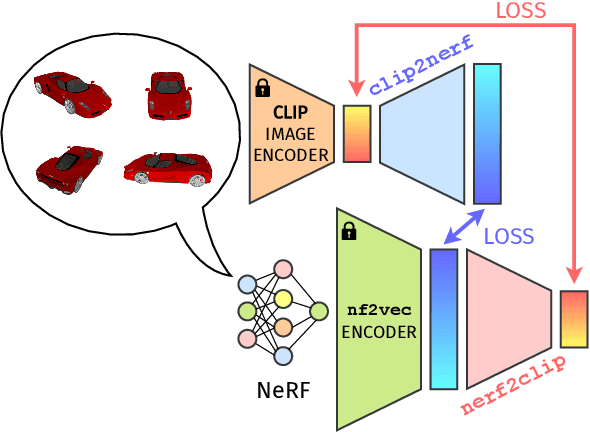

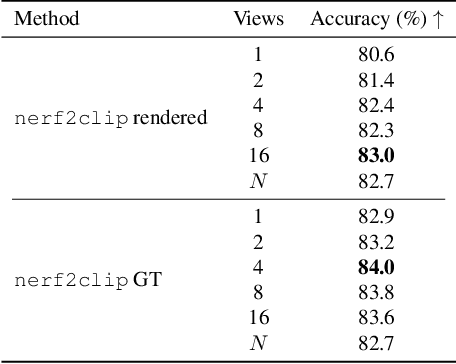

Abstract:Neural Radiance Fields (NeRFs) have emerged as a standard framework for representing 3D scenes and objects, introducing a novel data type for information exchange and storage. Concurrently, significant progress has been made in multimodal representation learning for text and image data. This paper explores a novel research direction that aims to connect the NeRF modality with other modalities, similar to established methodologies for images and text. To this end, we propose a simple framework that exploits pre-trained models for NeRF representations alongside multimodal models for text and image processing. Our framework learns a bidirectional mapping between NeRF embeddings and those obtained from corresponding images and text. This mapping unlocks several novel and useful applications, including NeRF zero-shot classification and NeRF retrieval from images or text.

Deep Learning on 3D Neural Fields

Dec 20, 2023

Abstract:In recent years, Neural Fields (NFs) have emerged as an effective tool for encoding diverse continuous signals such as images, videos, audio, and 3D shapes. When applied to 3D data, NFs offer a solution to the fragmentation and limitations associated with prevalent discrete representations. However, given that NFs are essentially neural networks, it remains unclear whether and how they can be seamlessly integrated into deep learning pipelines for solving downstream tasks. This paper addresses this research problem and introduces nf2vec, a framework capable of generating a compact latent representation for an input NF in a single inference pass. We demonstrate that nf2vec effectively embeds 3D objects represented by the input NFs and showcase how the resulting embeddings can be employed in deep learning pipelines to successfully address various tasks, all while processing exclusively NFs. We test this framework on several NFs used to represent 3D surfaces, such as unsigned/signed distance and occupancy fields. Moreover, we demonstrate the effectiveness of our approach with more complex NFs that encompass both geometry and appearance of 3D objects such as neural radiance fields.

Neural Processing of Tri-Plane Hybrid Neural Fields

Oct 02, 2023

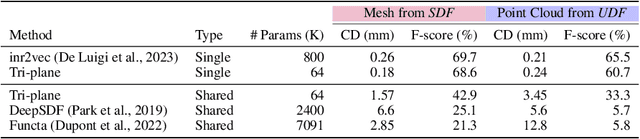

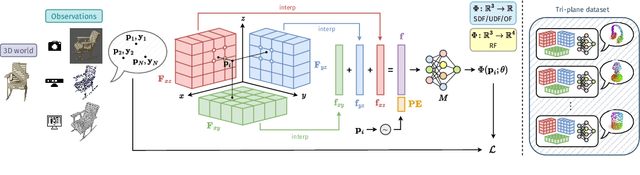

Abstract:Driven by the appealing properties of neural fields for storing and communicating 3D data, the problem of directly processing them to address tasks such as classification and part segmentation has emerged and has been investigated in recent works. Early approaches employ neural fields parameterized by shared networks trained on the whole dataset, achieving good task performance but sacrificing reconstruction quality. To improve the latter, later methods focus on individual neural fields parameterized as large Multi-Layer Perceptrons (MLPs), which are, however, challenging to process due to the high dimensionality of the weight space, intrinsic weight space symmetries, and sensitivity to random initialization. Hence, results turn out significantly inferior to those achieved by processing explicit representations, e.g., point clouds or meshes. In the meantime, hybrid representations, in particular based on tri-planes, have emerged as a more effective and efficient alternative to realize neural fields, but their direct processing has not been investigated yet. In this paper, we show that the tri-plane discrete data structure encodes rich information, which can be effectively processed by standard deep-learning machinery. We define an extensive benchmark covering a diverse set of fields such as occupancy, signed/unsigned distance, and, for the first time, radiance fields. While processing a field with the same reconstruction quality, we achieve task performance far superior to frameworks that process large MLPs and, for the first time, almost on par with architectures handling explicit representations.
