Florian Schwarzhans

Developing Predictive and Robust Radiomics Models for Chemotherapy Response in High-Grade Serous Ovarian Carcinoma

Jan 13, 2026

Abstract:Objectives: High-grade serous ovarian carcinoma (HGSOC) is typically diagnosed at an advanced stage with extensive peritoneal metastases, making treatment challenging. Neoadjuvant chemotherapy (NACT) is often used to reduce tumor burden before surgery, but about 40% of patients show limited response. Radiomics, combined with machine learning (ML), offers a promising non-invasive method for predicting NACT response by analyzing computed tomography (CT) imaging data. This study aimed to improve response prediction in HGSOC patients undergoing NACT by integrating different feature selection methods. Materials and methods: A framework for selecting robust radiomics features was introduced by employing an automated randomisation algorithm to mimic inter-observer variability, ensuring a balance between feature robustness and prediction accuracy. Four response metrics were used: chemotherapy response score (CRS), RECIST, volume reduction (VolR), and diameter reduction (DiaR). Lesions in different anatomical sites were studied. Pre- and post-NACT CT scans were used for feature extraction and model training on one cohort, and an independent cohort was used for external testing. Results: The best prediction performance was achieved using all lesions combined for VolR prediction, with an AUC of 0.83. Omental lesions provided the best results for CRS prediction (AUC 0.77), while pelvic lesions performed best for DiaR (AUC 0.76). Conclusion: The integration of robustness into the feature selection process ensures the development of reliable models and thus facilitates the implementation of radiomics models in clinical applications for HGSOC patients. Future work should explore further applications of radiomics in ovarian cancer, particularly in real-time clinical settings.

Variational U-Net with Local Alignment for Joint Tumor Extraction and Registration (VALOR-Net) of Breast MRI Data Acquired at Two Different Field Strengths

Jan 23, 2025

Abstract:Background: Multiparametric breast MRI data might improve tumor diagnostics, characterization, and treatment planning. Accurate alignment and delineation of images acquired at different field strengths, such as 3T and 7T, remain challenging research tasks. Purpose: To address alignment challenges and enable consistent tumor segmentation across different MRI field strengths. Study type: Retrospective. Subjects: Nine female subjects with breast tumors were involved: six histologically proven invasive ductal carcinomas (IDC) and three fibroadenomas. Field strength/sequence: Imaging was performed on 3T and 7T scanners using a post-contrast T1-weighted three-dimensional time-resolved angiography with stochastic trajectories (TWIST) sequence. Assessments: The method's performance for joint image registration and tumor segmentation was evaluated using several quantitative metrics, including peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), normalized cross-correlation (NCC), Dice coefficient, F1 score, and relative sum of squared differences (rel SSD). Statistical tests: The Pearson correlation coefficient was used to test the relationship between the registration and segmentation metrics. Results: When calculated for each subject individually, the PSNR ranged from 27.5 to 34.5 dB, and the SSIM from 82.6 to 92.8%. The model achieved an NCC from 96.4 to 99.3% and a Dice coefficient of 62.9 to 95.3%. The F1 score was between 55.4 and 93.2%, and the rel SSD ranged from 2.0 to 7.5%. The segmentation metrics Dice and F1 score are highly correlated (0.995), while a moderate correlation between NCC and SSIM (0.681) was found for registration. Data conclusion: Initial results demonstrate that the proposed method may be feasible in providing joint tumor segmentation and registration of MRI data acquired at different field strengths.
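As a rough illustration of three of the metrics named in this abstract, the following is a minimal NumPy sketch. It is not the authors' evaluation code: the function names `dice`, `ncc`, and `psnr` are hypothetical, NCC is assumed here to be the zero-mean normalized form (definitions vary between papers), and the PSNR `data_range` must be supplied by the caller.

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice coefficient between two binary masks: 2|A∩B| / (|A| + |B|)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement (a convention, not a rule).
        return 1.0
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def ncc(image_a, image_b):
    """Zero-mean normalized cross-correlation (assumed definition)."""
    x = np.asarray(image_a, dtype=float).ravel()
    y = np.asarray(image_b, dtype=float).ravel()
    xc = x - x.mean()
    yc = y - y.mean()
    return float(xc @ yc / (np.linalg.norm(xc) * np.linalg.norm(yc)))


def psnr(reference, image, data_range):
    """Peak signal-to-noise ratio in dB for a given intensity range."""
    ref = np.asarray(reference, dtype=float)
    img = np.asarray(image, dtype=float)
    mse = np.mean((ref - img) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

In practice, Dice would be applied to the predicted and reference tumor masks, while NCC and PSNR would be applied to the registered image and its target.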

WCEbleedGen: A wireless capsule endoscopy dataset and its benchmarking for automatic bleeding classification, detection, and segmentation

Aug 22, 2024

Abstract:Computer-based analysis of Wireless Capsule Endoscopy (WCE) is crucial. However, a medically annotated WCE dataset for training and evaluation of automatic classification, detection, and segmentation of bleeding and non-bleeding frames is currently lacking. The present work focused on the development of a medically annotated WCE dataset called WCEbleedGen for automatic classification, detection, and segmentation of bleeding and non-bleeding frames. It comprises 2,618 WCE bleeding and non-bleeding frames, which were collected from various internet resources and existing WCE datasets. A comprehensive benchmarking and evaluation of the developed dataset was done using nine classification-based, three detection-based, and three segmentation-based deep learning models. The dataset is of high quality, is class-balanced, and contains single and multiple bleeding sites. Overall, our standard benchmark results show that Visual Geometry Group (VGG) 19, You Only Look Once version 8 nano (YOLOv8n), and Link network (Linknet) performed best in automatic classification, detection, and segmentation-based evaluations, respectively. Automatic bleeding diagnosis is crucial for WCE video interpretations. This diverse dataset will aid in developing real-time, multi-task learning-based innovative solutions for automatic bleeding diagnosis in WCE. The dataset and code are publicly available at https://zenodo.org/records/10156571 and https://github.com/misahub2023/Benchmarking-Codes-of-the-WCEBleedGen-dataset.

Capsule Vision 2024 Challenge: Multi-Class Abnormality Classification for Video Capsule Endoscopy

Aug 09, 2024

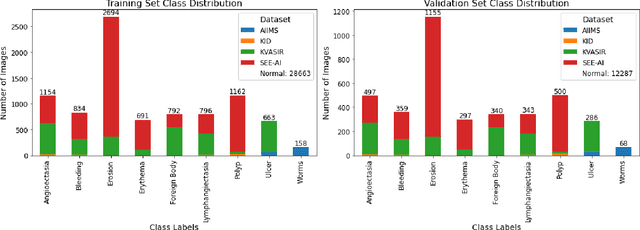

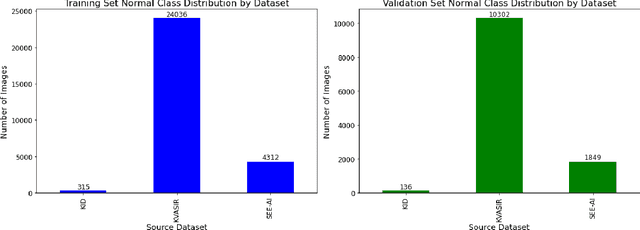

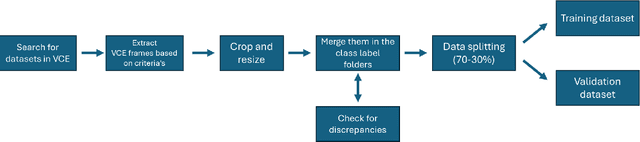

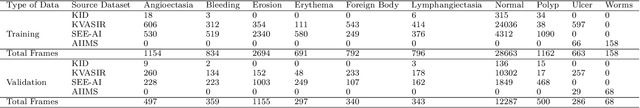

Abstract:We present the Capsule Vision 2024 Challenge: Multi-Class Abnormality Classification for Video Capsule Endoscopy. It is being virtually organized by the Research Center for Medical Image Analysis and Artificial Intelligence (MIAAI), Department of Medicine, Danube Private University, Krems, Austria and Medical Imaging and Signal Analysis Hub (MISAHUB) in collaboration with the 9th International Conference on Computer Vision & Image Processing (CVIP 2024) being organized by the Indian Institute of Information Technology, Design and Manufacturing (IIITDM) Kancheepuram, Chennai, India. This document gives an overview of the challenge, its registration and rules, the submission format, and the datasets used.

Breast MRI radiomics and machine learning radiomics-based predictions of response to neoadjuvant chemotherapy -- how are they affected by variations in tumour delineation?

Sep 03, 2023

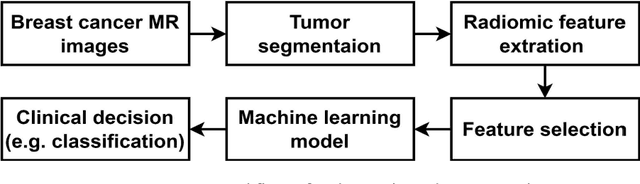

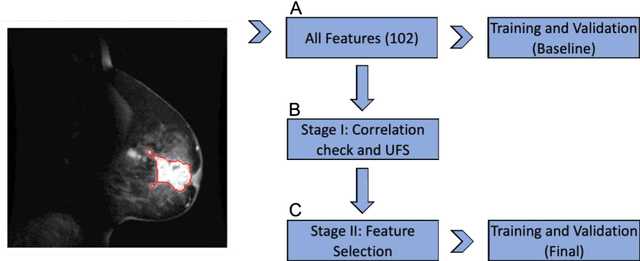

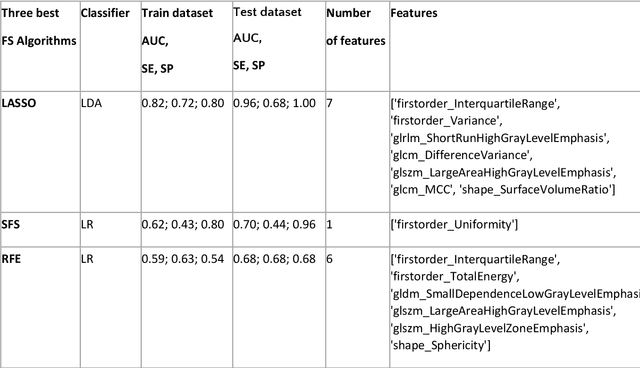

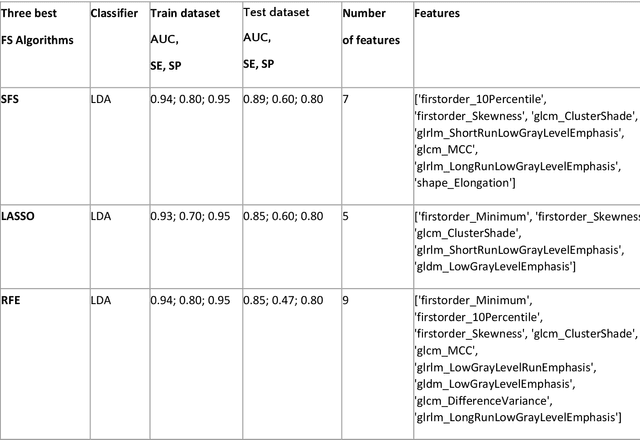

Abstract:Manual delineation of volumes of interest (VOIs) by experts is considered the gold-standard method in radiomics analysis. However, it suffers from inter- and intra-operator variability. A quantitative assessment of the impact of variations in these delineations on the performance of the radiomics predictors is required to develop robust radiomics-based prediction models. In this study, we developed radiomics models for the prediction of pathological complete response to neoadjuvant chemotherapy in patients with two different breast cancer subtypes based on contrast-enhanced magnetic resonance imaging acquired prior to treatment (baseline MRI scans). Different mathematical operations such as erosion, smoothing, dilation, randomization, and ellipse fitting were applied to the original VOIs delineated by experts to simulate variations of segmentation masks. The effects of such VOI modifications on various steps of the radiomics workflow, including feature extraction, feature selection, and prediction performance, were evaluated. Using manual tumor VOIs and radiomics features extracted from baseline MRI scans, an AUC of up to 0.96 and 0.89 was achieved for human epidermal growth factor receptor 2-positive and triple-negative breast cancer, respectively. Smoothed and eroded VOIs yielded the highest number of robust features and the best prediction performance, while ellipse fitting and dilation led to the lowest robustness and prediction performance for both breast cancer subtypes. At most 28% of the selected features were similar to those from manual VOIs when different VOI delineation data were used. Differences in VOI delineation affect different steps of radiomics analysis, and their quantification is therefore important for the development of standardized radiomics research.
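To make the idea of simulated delineation variability concrete, here is a minimal sketch of two of the listed operations (erosion and dilation) applied to a toy binary mask with `scipy.ndimage`. It is an illustration under assumptions, not the authors' pipeline: the helper names `perturb_voi` and `dice`, the 2D toy mask, and the default cross-shaped structuring element are all choices made for this example.

```python
import numpy as np
from scipy import ndimage


def dice(mask_a, mask_b):
    """Dice overlap between two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    return float(2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum()))


def perturb_voi(mask, iterations=1):
    """Return eroded and dilated variants of a binary VOI mask.

    Uses SciPy's default structuring element (face-connected neighbours),
    which is an assumption made for this sketch.
    """
    mask = np.asarray(mask, dtype=bool)
    return {
        "erosion": ndimage.binary_erosion(mask, iterations=iterations),
        "dilation": ndimage.binary_dilation(mask, iterations=iterations),
    }


# Toy "expert" delineation: a 3x3 square inside a 7x7 image.
voi = np.zeros((7, 7), dtype=bool)
voi[2:5, 2:5] = True

variants = perturb_voi(voi)
for name, variant in variants.items():
    # Report the size of each variant and its overlap with the original.
    print(name, int(variant.sum()), round(dice(voi, variant), 3))
```

Radiomics features could then be extracted from each variant and compared with those from the original mask to judge which features are stable under such perturbations.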
