Florian Lehmann

Exploring Mobile Touch Interaction with Large Language Models

Feb 11, 2025

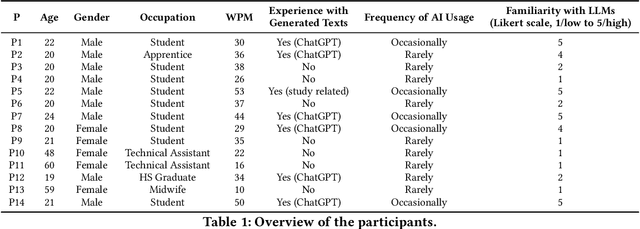

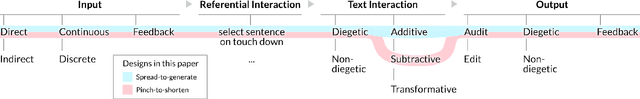

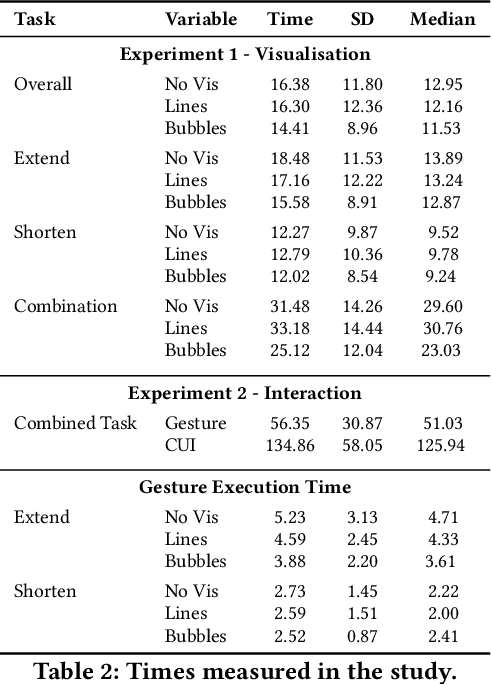

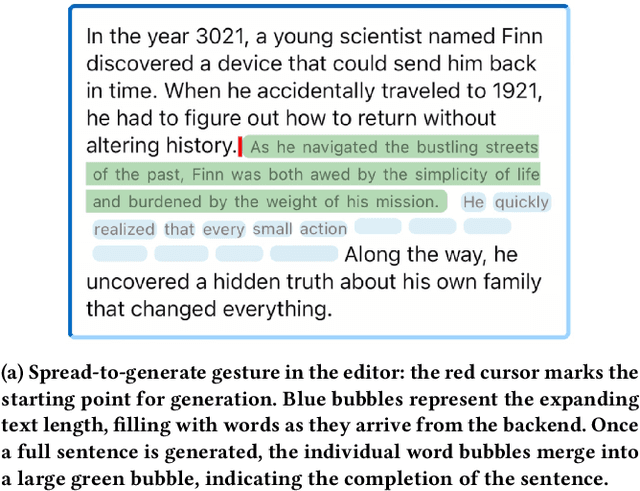

Abstract: Interacting with Large Language Models (LLMs) for text editing on mobile devices currently requires users to break out of their writing environment and switch to a conversational AI interface. In this paper, we propose to control the LLM via touch gestures performed directly on the text. We first chart a design space that covers fundamental touch input and text transformations. In this space, we then concretely explore two control mappings: spread-to-generate and pinch-to-shorten, with visual feedback loops. We evaluate this concept in a user study (N=14) that compares three feedback designs: no visualisation, text length indicator, and length + word indicator. The results demonstrate that touch-based control of LLMs is both feasible and user-friendly, with the length + word indicator proving most effective for managing text generation. This work lays the foundation for further research into gesture-based interaction with LLMs on touch devices.

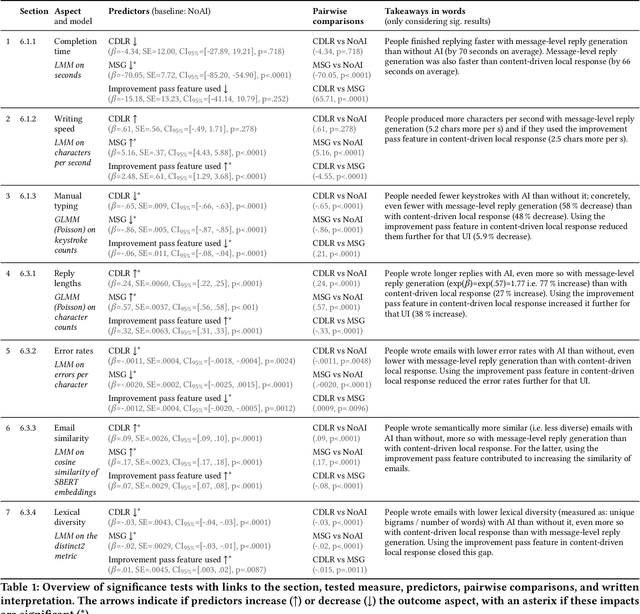

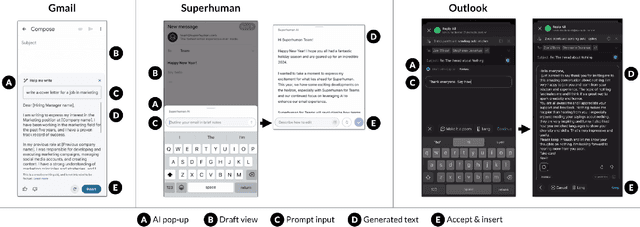

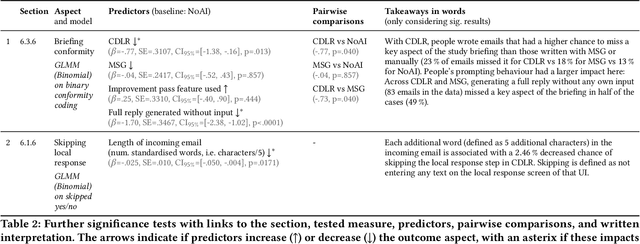

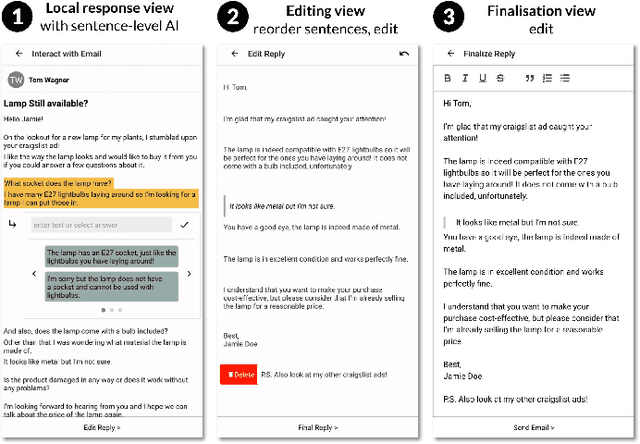

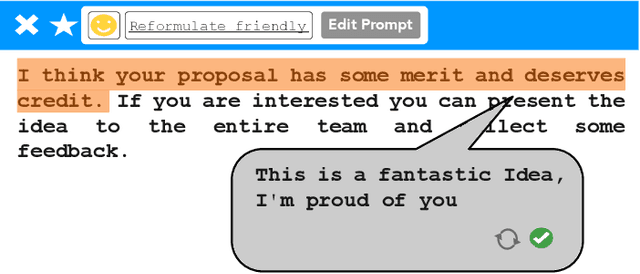

Content-Driven Local Response: Supporting Sentence-Level and Message-Level Mobile Email Replies With and Without AI

Feb 10, 2025

Abstract: Mobile emailing demands efficiency in diverse situations, which motivates the use of AI. However, generated text does not always reflect how people want to respond. This challenges users with AI involvement tradeoffs not yet considered in email UIs. We address this with a new UI concept called Content-Driven Local Response (CDLR), inspired by microtasking. This allows users to insert responses into the email by selecting sentences, which additionally serves to guide AI suggestions. The concept supports combining AI for local suggestions and message-level improvements. Our user study (N=126) compared CDLR with manual typing and full reply generation. We found that CDLR supports flexible workflows with varying degrees of AI involvement, while retaining the benefits of reduced typing and errors. This work contributes a new approach to integrating AI capabilities: By redesigning the UI for workflows with and without AI, we can empower users to dynamically adjust AI involvement.

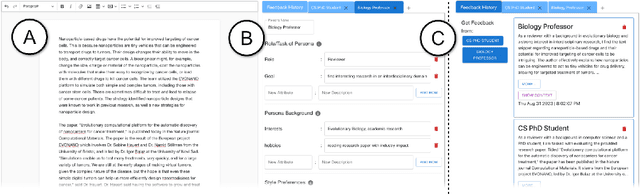

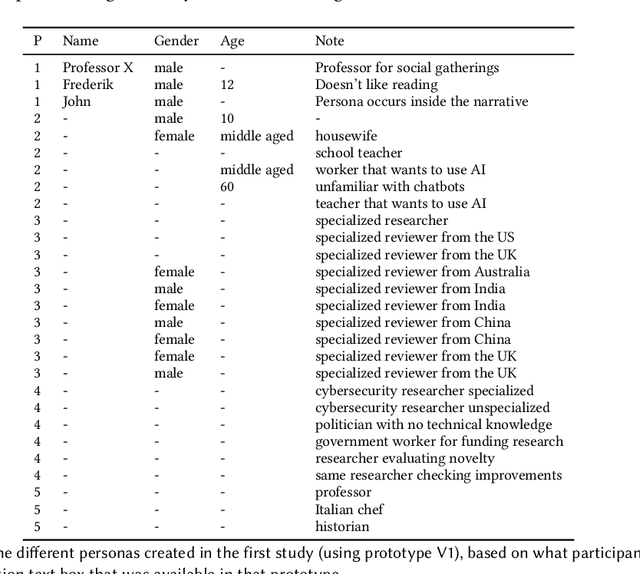

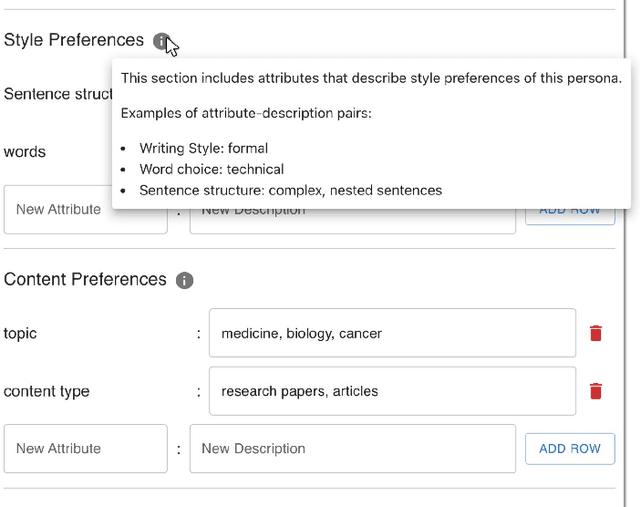

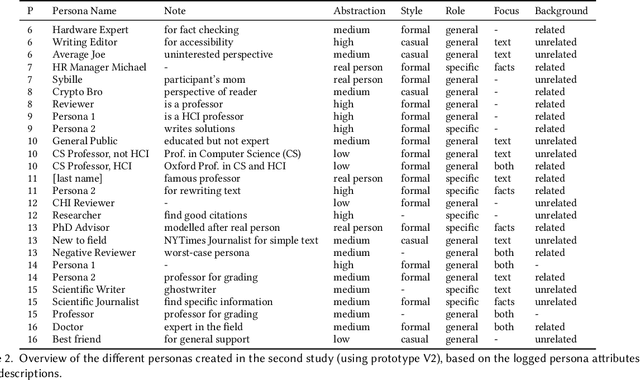

Writer-Defined AI Personas for On-Demand Feedback Generation

Sep 19, 2023

Abstract: Compelling writing is tailored to its audience. This is challenging, as writers may struggle to empathize with readers, get feedback in time, or gain access to the target group. We propose a concept that generates on-demand feedback, based on writer-defined AI personas of any target audience. We explore this concept with a prototype (using GPT-3.5) in two user studies (N=5 and N=11): Writers appreciated the concept and strategically used personas for getting different perspectives. The feedback was seen as helpful and inspired revisions of text and personas, although it was often verbose and unspecific. We discuss the impact of on-demand feedback, the limited representativity of contemporary AI systems, and further ideas for defining AI personas. This work contributes to the vision of supporting writers with AI by expanding the socio-technical perspective in AI tool design: To empower creators, we also need to keep in mind their relationship to an audience.

The AI Ghostwriter Effect: Users Do Not Perceive Ownership of AI-Generated Text But Self-Declare as Authors

Mar 06, 2023

Abstract: Human-AI interaction in text production increases complexity in authorship. In two empirical studies (n1 = 30 & n2 = 96), we investigate authorship and ownership in human-AI collaboration for personalized language generation models. We show an AI Ghostwriter Effect: Users do not consider themselves the owners and authors of AI-generated text but refrain from publicly declaring AI authorship. The degree of personalization did not impact the AI Ghostwriter Effect, and control over the model increased participants' sense of ownership. We also found that the discrepancy between the sense of ownership and the authorship declaration is stronger in interactions with a human ghostwriter and that people use similar rationalizations for authorship in AI ghostwriters and human ghostwriters. We discuss how our findings relate to psychological ownership and human-AI interaction to lay the foundations for adapting authorship frameworks and user interfaces in AI in text-generation tasks.

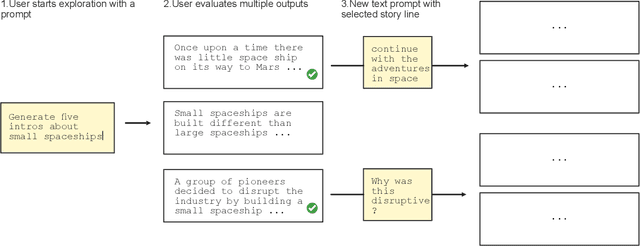

Choice Over Control: How Users Write with Large Language Models using Diegetic and Non-Diegetic Prompting

Mar 06, 2023

Abstract: We propose a conceptual perspective on prompts for Large Language Models (LLMs) that distinguishes between (1) diegetic prompts (part of the narrative, e.g. "Once upon a time, I saw a fox..."), and (2) non-diegetic prompts (external, e.g. "Write about the adventures of the fox."). With this lens, we study how 129 crowd workers on Prolific write short texts with different user interfaces (1 vs 3 suggestions, with/out non-diegetic prompts; implemented with GPT-3): When the interface offered multiple suggestions and provided an option for non-diegetic prompting, participants preferred choosing from multiple suggestions over controlling them via non-diegetic prompts. When participants provided non-diegetic prompts it was to ask for inspiration, topics or facts. Single suggestions in particular were guided both with diegetic and non-diegetic information. This work informs human-AI interaction with generative models by revealing that (1) writing non-diegetic prompts requires effort, (2) people combine diegetic and non-diegetic prompting, and (3) they use their draft (i.e. diegetic information) and suggestion timing to strategically guide LLMs.

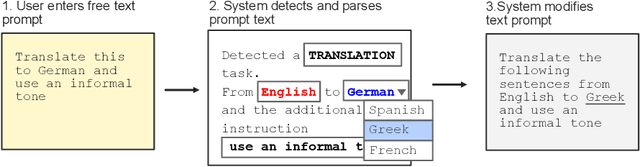

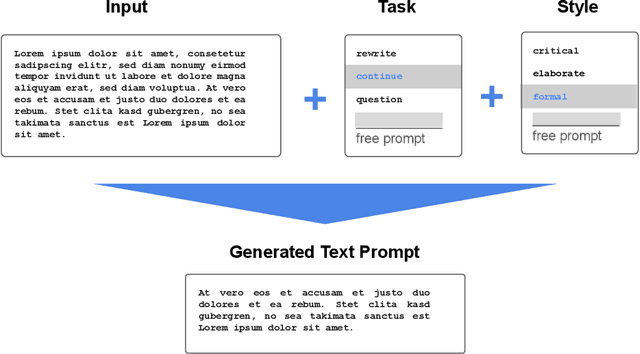

How to Prompt? Opportunities and Challenges of Zero- and Few-Shot Learning for Human-AI Interaction in Creative Applications of Generative Models

Sep 03, 2022

Abstract: Deep generative models have the potential to fundamentally change the way we create high-fidelity digital content but are often hard to control. Prompting a generative model is a promising recent development that in principle enables end-users to creatively leverage zero-shot and few-shot learning to assign new tasks to an AI ad-hoc, simply by writing them down. However, for the majority of end-users writing effective prompts is currently largely a trial and error process. To address this, we discuss the key opportunities and challenges for interactive creative applications that use prompting as a new paradigm for Human-AI interaction. Based on our analysis, we propose four design goals for user interfaces that support prompting. We illustrate these with concrete UI design sketches, focusing on the use case of creative writing. The research community in HCI and AI can take these as starting points to develop adequate user interfaces for models capable of zero- and few-shot learning.

Beyond Text Generation: Supporting Writers with Continuous Automatic Text Summaries

Aug 19, 2022

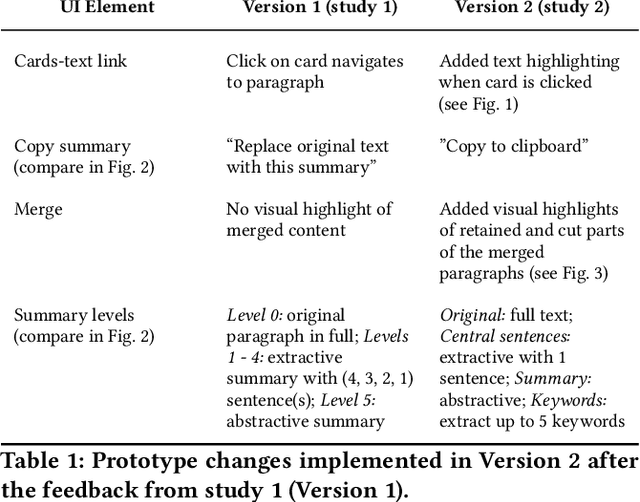

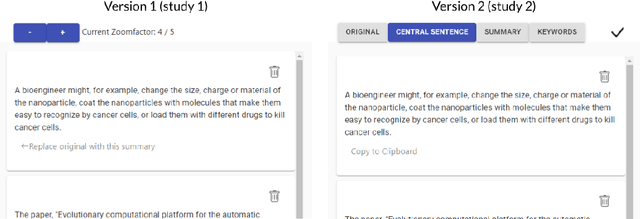

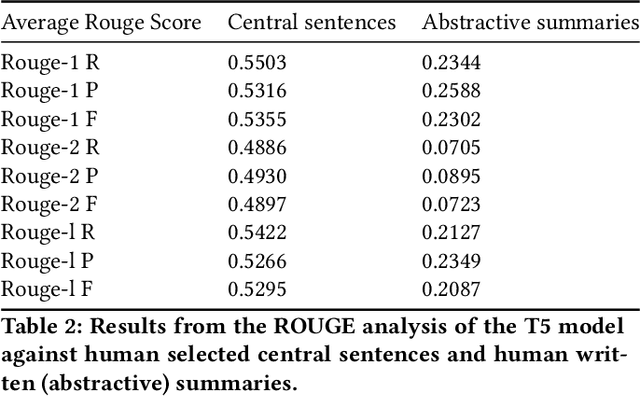

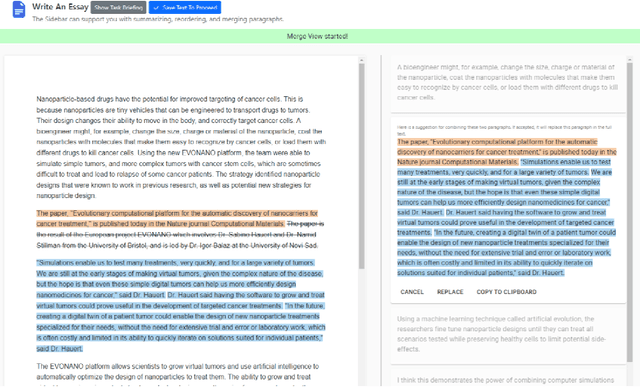

Abstract: We propose a text editor to help users plan, structure and reflect on their writing process. It provides continuously updated paragraph-wise summaries as margin annotations, using automatic text summarization. Summary levels range from full text, to selected (central) sentences, down to a collection of keywords. To understand how users interact with this system during writing, we conducted two user studies (N=4 and N=8) in which people wrote analytic essays about a given topic and article. As a key finding, the summaries gave users an external perspective on their writing and helped them to revise the content and scope of their drafted paragraphs. People further used the tool to quickly gain an overview of the text and developed strategies to integrate insights from the automated summaries. More broadly, this work explores and highlights the value of designing AI tools for writers, with Natural Language Processing (NLP) capabilities that go beyond direct text generation and correction.

Suggestion Lists vs. Continuous Generation: Interaction Design for Writing with Generative Models on Mobile Devices Affect Text Length, Wording and Perceived Authorship

Aug 01, 2022

Abstract: Neural language models have the potential to support human writing. However, questions remain on their integration and influence on writing and output. To address this, we designed and compared two user interfaces for writing with AI on mobile devices, which manipulate levels of initiative and control: 1) Writing with continuously generated text, the AI adds text word-by-word and user steers. 2) Writing with suggestions, the AI suggests phrases and user selects from a list. In a supervised online study (N=18), participants used these prototypes and a baseline without AI. We collected touch interactions, ratings on inspiration and authorship, and interview data. With AI suggestions, people wrote less actively, yet felt they were the author. Continuously generated text reduced this perceived authorship, yet increased editing behavior. In both designs, AI increased text length and was perceived to influence wording. Our findings add new empirical evidence on the impact of UI design decisions on user experience and output with co-creative systems.

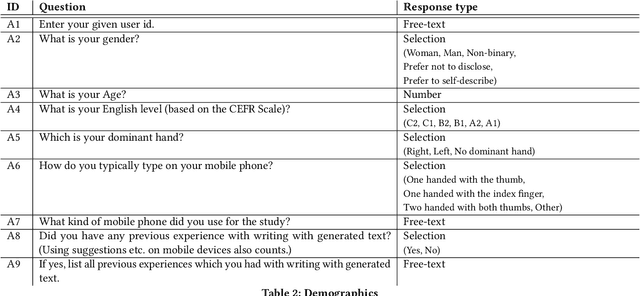

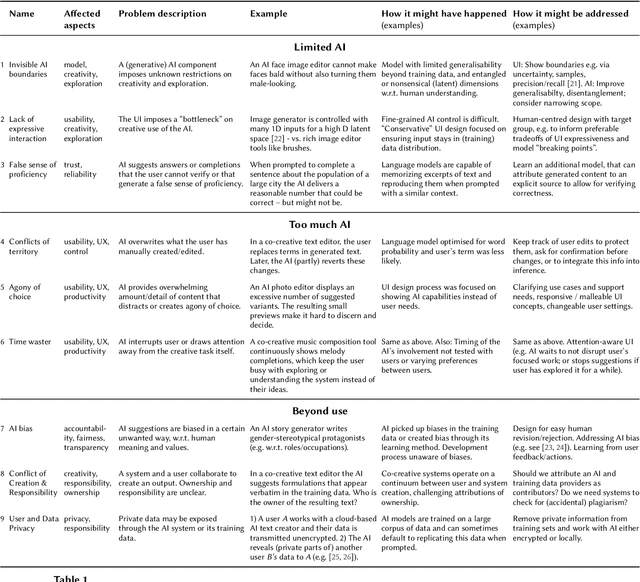

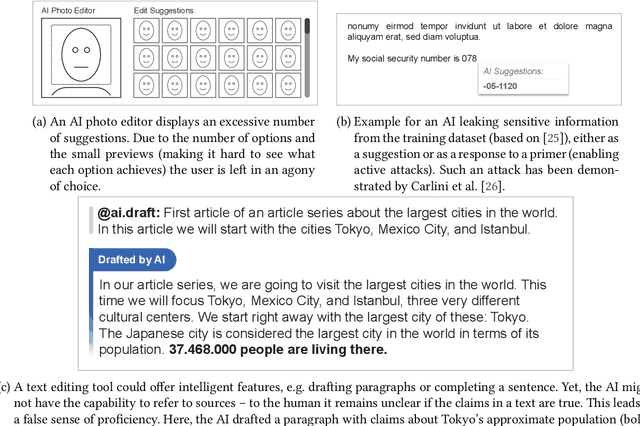

Nine Potential Pitfalls when Designing Human-AI Co-Creative Systems

Apr 01, 2021

Abstract: This position paper examines potential pitfalls on the way towards achieving human-AI co-creation with generative models in a way that is beneficial to the users' interests. In particular, we collected a set of nine potential pitfalls, based on the literature and our own experiences as researchers working at the intersection of HCI and AI. We illustrate each pitfall with examples and suggest ideas for addressing it. Reflecting on all pitfalls, we discuss and conclude with implications for future research directions. With this collection, we hope to contribute to a critical and constructive discussion on the roles of humans and AI in co-creative interactions, with an eye on related assumptions and potential side-effects for creative practices and beyond.
