Tim Zindulka

Exploring Mobile Touch Interaction with Large Language Models

Feb 11, 2025

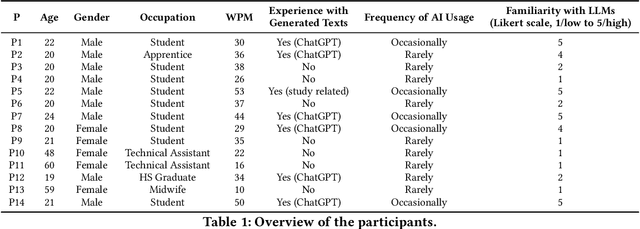

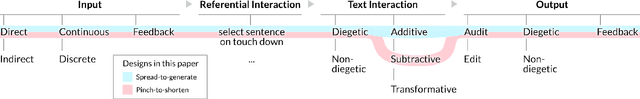

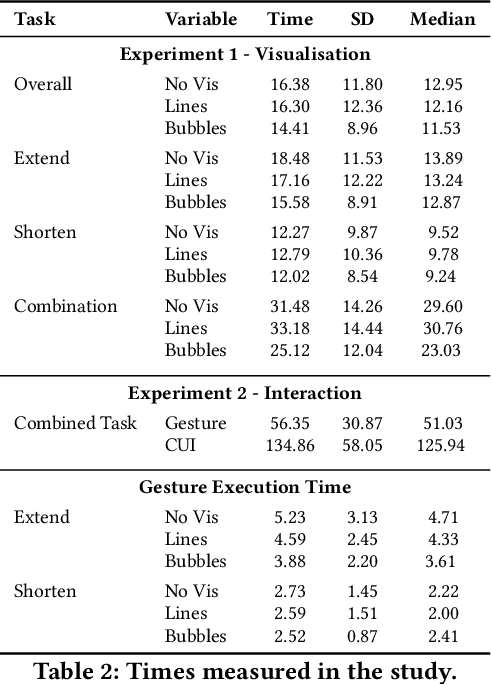

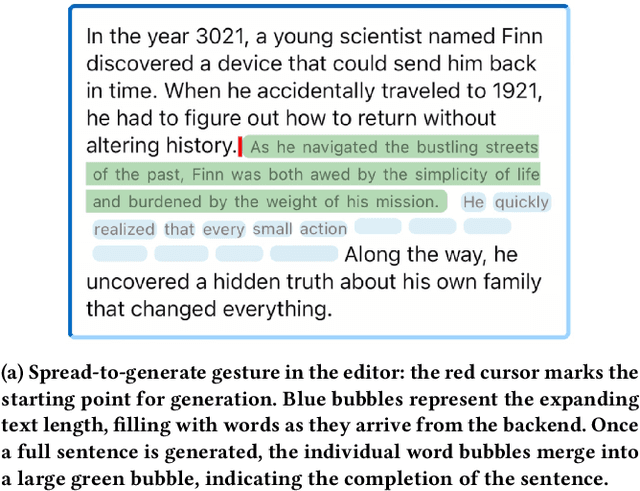

Abstract: Interacting with Large Language Models (LLMs) for text editing on mobile devices currently requires users to break out of their writing environment and switch to a conversational AI interface. In this paper, we propose to control the LLM via touch gestures performed directly on the text. We first chart a design space that covers fundamental touch input and text transformations. In this space, we then concretely explore two control mappings: spread-to-generate and pinch-to-shorten, with visual feedback loops. We evaluate this concept in a user study (N=14) that compares three feedback designs: no visualisation, text length indicator, and length + word indicator. The results demonstrate that touch-based control of LLMs is both feasible and user-friendly, with the length + word indicator proving most effective for managing text generation. This work lays the foundation for further research into gesture-based interaction with LLMs on touch devices.

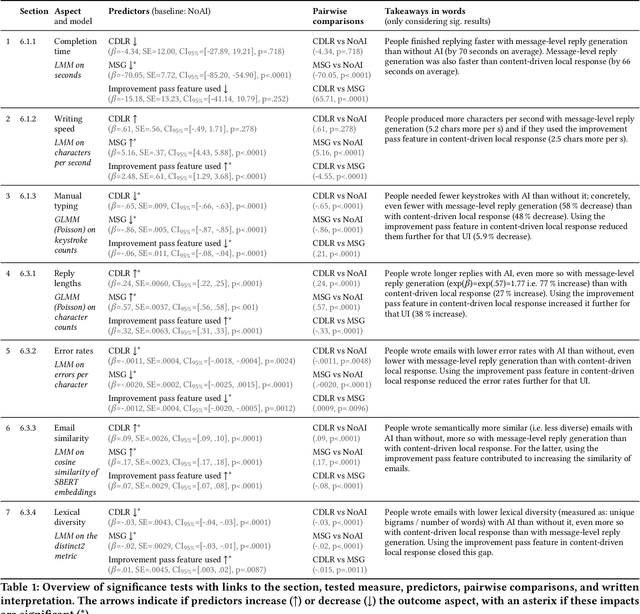

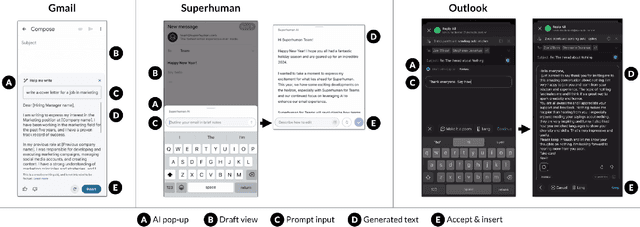

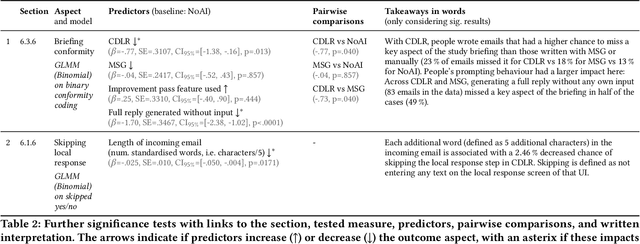

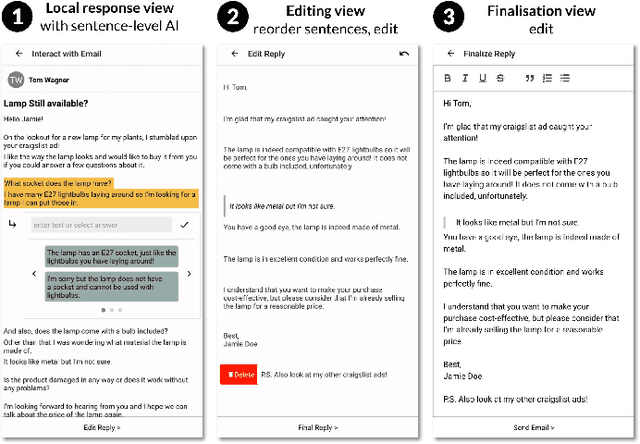

Content-Driven Local Response: Supporting Sentence-Level and Message-Level Mobile Email Replies With and Without AI

Feb 10, 2025

Abstract: Mobile emailing demands efficiency in diverse situations, which motivates the use of AI. However, generated text does not always reflect how people want to respond. This challenges users with AI involvement tradeoffs not yet considered in email UIs. We address this with a new UI concept called Content-Driven Local Response (CDLR), inspired by microtasking. This allows users to insert responses into the email by selecting sentences, which additionally serves to guide AI suggestions. The concept supports combining AI for local suggestions and message-level improvements. Our user study (N=126) compared CDLR with manual typing and full reply generation. We found that CDLR supports flexible workflows with varying degrees of AI involvement, while retaining the benefits of reduced typing and errors. This work contributes a new approach to integrating AI capabilities: By redesigning the UI for workflows with and without AI, we can empower users to dynamically adjust AI involvement.

Deceptive Patterns of Intelligent and Interactive Writing Assistants

Apr 14, 2024

Abstract: Large Language Models have become an integral part of new intelligent and interactive writing assistants. Many are offered commercially with a chatbot-like UI, such as ChatGPT, and provide little information about their inner workings. This makes this new type of widespread system a potential target for deceptive design patterns. For example, such assistants might exploit hidden costs by providing guidance up until a certain point before asking for a fee to see the rest. As another example, they might sneak unwanted content/edits into longer generated or revised text pieces (e.g. to influence the expressed opinion). With these and other examples, we conceptually transfer several deceptive patterns from the literature to the new context of AI writing assistants. Our goal is to raise awareness and encourage future research into how the UI and interaction design of such systems can impact people and their writing.

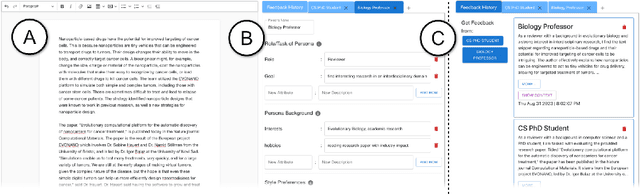

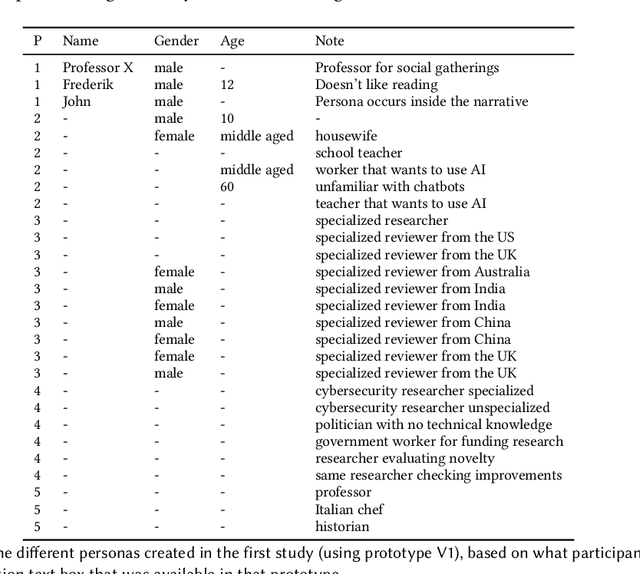

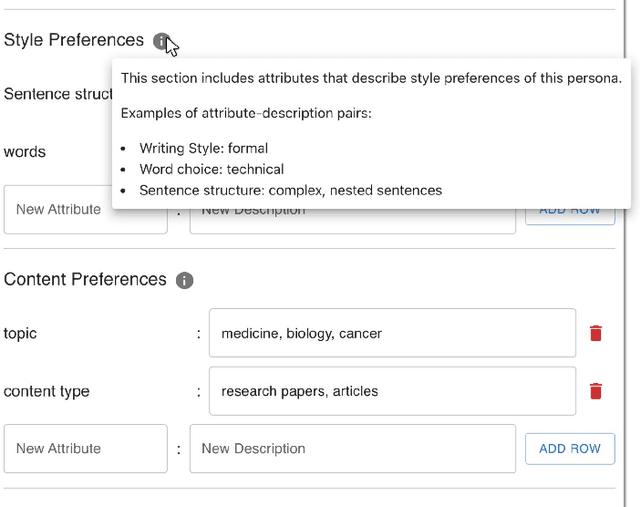

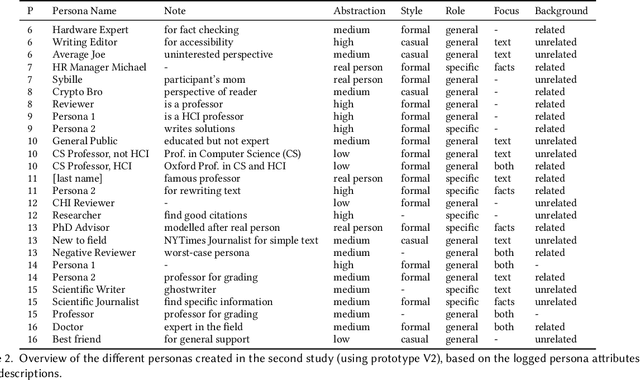

Writer-Defined AI Personas for On-Demand Feedback Generation

Sep 19, 2023

Abstract: Compelling writing is tailored to its audience. This is challenging, as writers may struggle to empathize with readers, get feedback in time, or gain access to the target group. We propose a concept that generates on-demand feedback, based on writer-defined AI personas of any target audience. We explore this concept with a prototype (using GPT-3.5) in two user studies (N=5 and N=11): Writers appreciated the concept and strategically used personas for getting different perspectives. The feedback was seen as helpful and inspired revisions of text and personas, although it was often verbose and unspecific. We discuss the impact of on-demand feedback, the limited representativity of contemporary AI systems, and further ideas for defining AI personas. This work contributes to the vision of supporting writers with AI by expanding the socio-technical perspective in AI tool design: To empower creators, we also need to keep in mind their relationship to an audience.
