Sven Goller

Content-Driven Local Response: Supporting Sentence-Level and Message-Level Mobile Email Replies With and Without AI

Feb 10, 2025

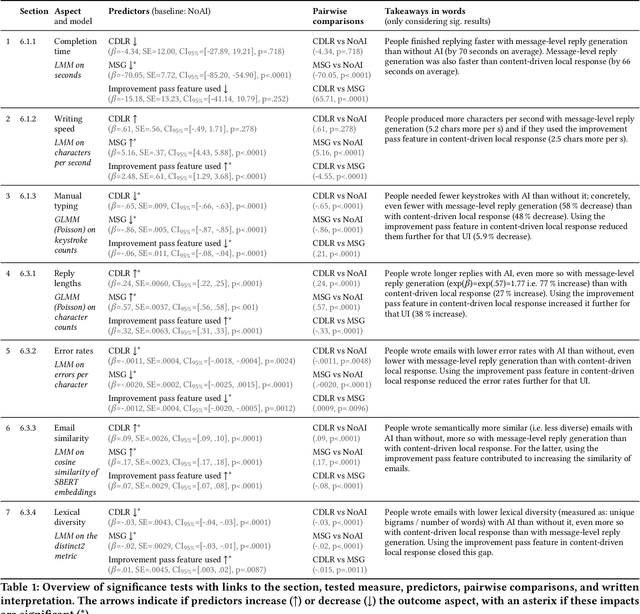

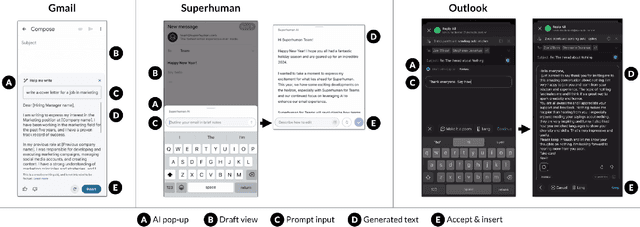

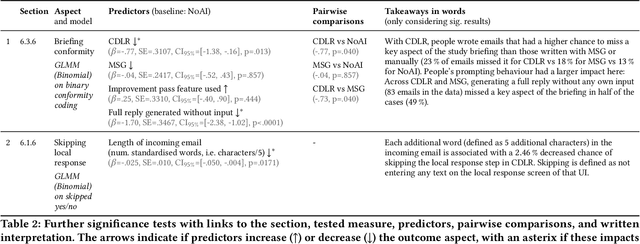

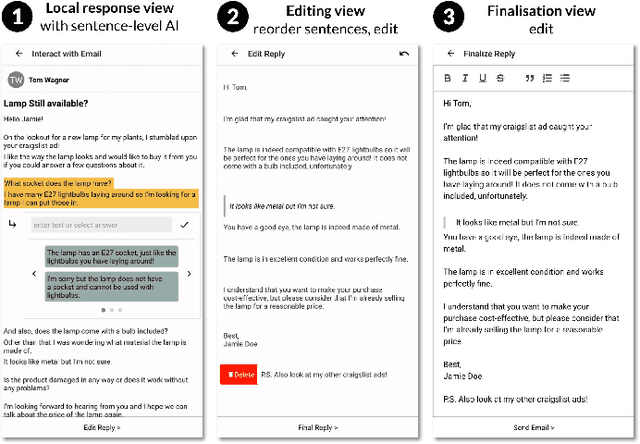

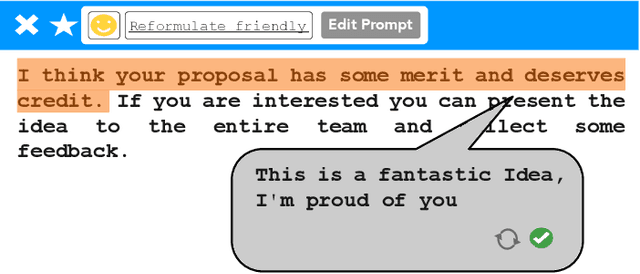

Abstract: Mobile emailing demands efficiency in diverse situations, which motivates the use of AI. However, generated text does not always reflect how people want to respond. This challenges users with AI involvement tradeoffs not yet considered in email UIs. We address this with a new UI concept called Content-Driven Local Response (CDLR), inspired by microtasking. This allows users to insert responses into the email by selecting sentences, which additionally serves to guide AI suggestions. The concept supports combining AI for local suggestions and message-level improvements. Our user study (N=126) compared CDLR with manual typing and full reply generation. We found that CDLR supports flexible workflows with varying degrees of AI involvement, while retaining the benefits of reduced typing and errors. This work contributes a new approach to integrating AI capabilities: By redesigning the UI for workflows with and without AI, we can empower users to dynamically adjust AI involvement.

Choice Over Control: How Users Write with Large Language Models using Diegetic and Non-Diegetic Prompting

Mar 06, 2023

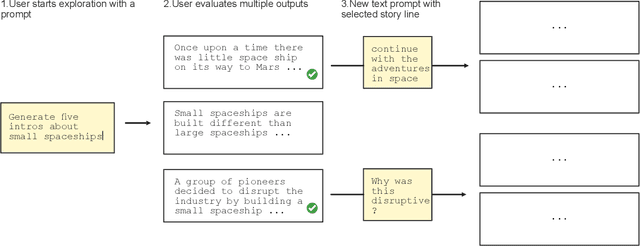

Abstract: We propose a conceptual perspective on prompts for Large Language Models (LLMs) that distinguishes between (1) diegetic prompts (part of the narrative, e.g. "Once upon a time, I saw a fox..."), and (2) non-diegetic prompts (external, e.g. "Write about the adventures of the fox."). With this lens, we study how 129 crowd workers on Prolific write short texts with different user interfaces (1 vs 3 suggestions, with/out non-diegetic prompts; implemented with GPT-3): When the interface offered multiple suggestions and provided an option for non-diegetic prompting, participants preferred choosing from multiple suggestions over controlling them via non-diegetic prompts. When participants provided non-diegetic prompts it was to ask for inspiration, topics or facts. Single suggestions in particular were guided both with diegetic and non-diegetic information. This work informs human-AI interaction with generative models by revealing that (1) writing non-diegetic prompts requires effort, (2) people combine diegetic and non-diegetic prompting, and (3) they use their draft (i.e. diegetic information) and suggestion timing to strategically guide LLMs.

How to Prompt? Opportunities and Challenges of Zero- and Few-Shot Learning for Human-AI Interaction in Creative Applications of Generative Models

Sep 03, 2022

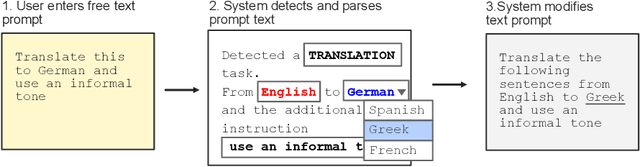

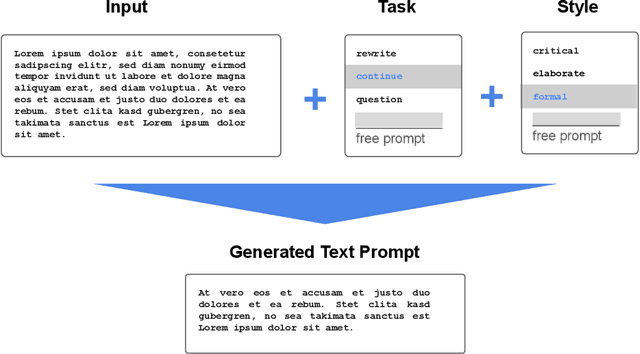

Abstract: Deep generative models have the potential to fundamentally change the way we create high-fidelity digital content but are often hard to control. Prompting a generative model is a promising recent development that in principle enables end-users to creatively leverage zero-shot and few-shot learning to assign new tasks to an AI ad-hoc, simply by writing them down. However, for the majority of end-users writing effective prompts is currently largely a trial and error process. To address this, we discuss the key opportunities and challenges for interactive creative applications that use prompting as a new paradigm for Human-AI interaction. Based on our analysis, we propose four design goals for user interfaces that support prompting. We illustrate these with concrete UI design sketches, focusing on the use case of creative writing. The research community in HCI and AI can take these as starting points to develop adequate user interfaces for models capable of zero- and few-shot learning.
