Filippo Cavallo

Enhancing Smart Environments with Context-Aware Chatbots using Large Language Models

Feb 20, 2025

Abstract: This work presents a novel architecture for context-aware interactions within smart environments, leveraging Large Language Models (LLMs) to enhance user experiences. Our system integrates user location data, obtained through UWB tags and sensor-equipped smart homes, with real-time human activity recognition (HAR) to provide a comprehensive understanding of user context. This contextual information is then fed to an LLM-powered chatbot, enabling it to generate personalised interactions and recommendations based on the user's current activity and environment. This approach moves beyond traditional static chatbot interactions by dynamically adapting to the user's real-time situation. A case study conducted on a real-world dataset demonstrates the feasibility and effectiveness of the proposed architecture, showcasing its potential to create more intuitive and helpful interactions within smart homes. The results highlight the benefits of integrating LLMs with real-time activity and location data to deliver personalised and contextually relevant user experiences.
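As a rough illustration of how location and activity context might be passed to a chatbot, the sketch below folds both into a system message. It is not the architecture from the paper: the `UserContext` fields, the `build_messages` helper, the 0.5 confidence threshold, and the role/content message layout are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class UserContext:
    """Hypothetical snapshot of the user's situation."""
    room: str          # assumed to be derived from UWB tag position
    activity: str      # assumed label produced by a HAR model
    confidence: float  # assumed HAR confidence in [0, 1]


def build_messages(ctx: UserContext, user_text: str) -> List[Dict[str, str]]:
    """Fold location and activity context into a chat-style prompt."""
    system = (
        "You are an assistant in a smart home. "
        f"The user is currently in the {ctx.room}. "
    )
    # Only mention the activity when the recogniser is reasonably sure
    # (the 0.5 threshold is an arbitrary choice for this sketch).
    if ctx.confidence >= 0.5:
        system += f"Recognised activity: {ctx.activity}."
    else:
        system += "The current activity is uncertain."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


messages = build_messages(
    UserContext(room="kitchen", activity="cooking", confidence=0.9),
    "What should I do next?",
)
```

The resulting list could then be handed to whichever LLM client is in use; keeping the context in the system message means the user's own wording is left untouched.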

Measuring the perception of the personalized activities with CloudIA robot

Dec 11, 2023

Abstract: Socially Assistive Robots represent a valid solution for improving the quality of life and the mood of older adults. In this context, this work presents the CloudIA robot, a non-human-like robot intended to promote sociality and well-being among older adults. The design of the robot and of the provided services was carried out by a multidisciplinary team of designers and technology developers in tandem with professional caregivers. The capabilities of the robot were implemented according to the received guidelines and tested with 15 older adults in two nursing facilities. Qualitative and quantitative metrics were used to investigate the engagement of the participants during the interaction with the robot, and to investigate any differences in the interaction across the proposed activities. The results highlighted a general tendency to humanize the robotic platform and demonstrated the feasibility of introducing the CloudIA robot in support of the professional caregivers' work. This pilot test also yielded further ideas for improving the personalization of the robotic platform.

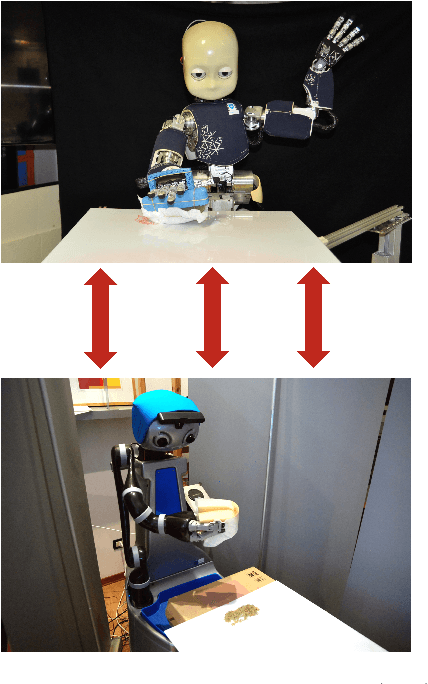

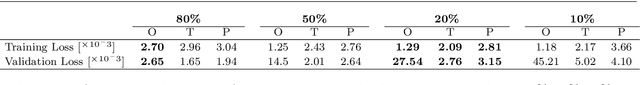

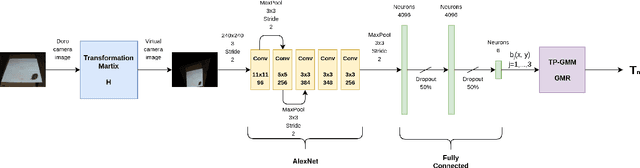

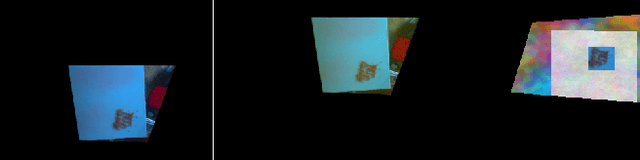

Cleaning tasks knowledge transfer between heterogeneous robots: a deep learning approach

Mar 13, 2019

Abstract: Autonomous service robots are becoming an important topic in robotics research. Unlike typical industrial scenarios, with their highly controlled environments, service robots must show additional robustness to task perturbations and to changes in the characteristics of their sensory feedback. In this paper a robot is taught to perform two different cleaning tasks over a table, using a learning from demonstration paradigm. Unlike other approaches, however, a convolutional neural network is used to generalize the demonstrations to different, previously unseen dirt or stain patterns on the same table using only visual feedback, and to perform cleaning movements accordingly. Robustness to robot posture and illumination changes is achieved using data augmentation techniques and camera image transformations. This robustness allows knowledge about the execution of cleaning tasks to be transferred between heterogeneous robots operating in different environmental settings. To demonstrate the viability of the proposed approach, a network trained in Lisbon to perform cleaning tasks, using the iCub robot, is successfully employed by the DoRo robot in Peccioli, Italy.
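To make the idea of augmenting for illumination and posture changes concrete, here is a minimal, dependency-free sketch. It is not the augmentation pipeline from the paper: the `augment` helper, the gain, bias, and shift ranges, and the use of a horizontal shift as a stand-in for a viewpoint change are all assumptions, and a real pipeline would more likely use an image library and perspective transforms.

```python
import random


def augment(image, rng):
    """Return a randomly perturbed copy of a grayscale image.

    `image` is a list of rows of floats in [0, 1] (hypothetical layout).
    """
    gain = rng.uniform(0.6, 1.4)   # crude illumination change (assumed range)
    bias = rng.uniform(-0.1, 0.1)  # brightness offset (assumed range)
    dx = rng.randint(-2, 2)        # small shift standing in for a viewpoint change
    width = len(image[0])
    out = []
    for row in image:
        # Apply the illumination change and clamp back into [0, 1].
        lit = [min(1.0, max(0.0, p * gain + bias)) for p in row]
        # Shift the row by dx pixels, padding the exposed side with zeros.
        if dx > 0:
            lit = [0.0] * dx + lit[:width - dx]
        elif dx < 0:
            lit = lit[-dx:] + [0.0] * (-dx)
        out.append(lit)
    return out


rng = random.Random(0)
demo = [[0.5] * 6 for _ in range(4)]
augmented = augment(demo, rng)
```

Each call produces a new variant of the same frame, so a single demonstration image can yield many training samples that differ in brightness and framing.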
