Cleaning tasks knowledge transfer between heterogeneous robots: a deep learning approach

Paper and Code

Mar 13, 2019

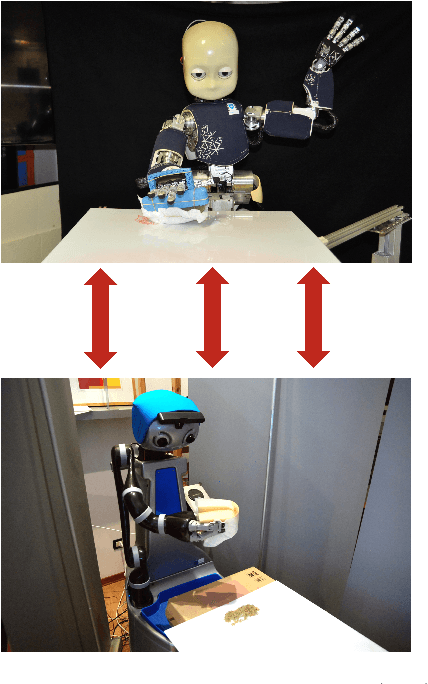

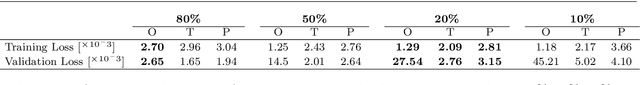

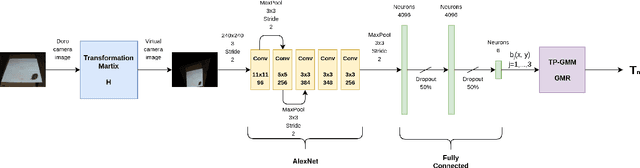

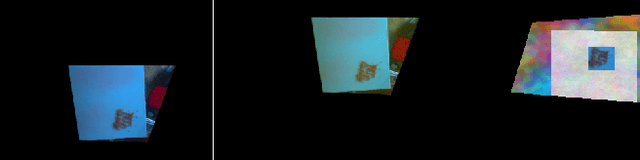

Nowadays, autonomous service robots are becoming an important topic in robotic research. Differently from typical industrial scenarios, with highly controlled environments, service robots must show an additional robustness to task perturbations and changes in the characteristics of their sensory feedback. In this paper a robot is taught to perform two different cleaning tasks over a table, using a learning from demonstration paradigm. However, differently from other approaches, a convolutional neural network is used to generalize the demonstrations to different, not yet seen dirt or stain patterns on the same table using only visual feedback, and to perform cleaning movements accordingly. Robustness to robot posture and illumination changes is achieved using data augmentation techniques and camera images transformation. This robustness allows the transfer of knowledge regarding execution of cleaning tasks between heterogeneous robots operating in different environmental settings. To demonstrate the viability of the proposed approach, a network trained in Lisbon to perform cleaning tasks, using the iCub robot, is successfully employed by the DoRo robot in Peccioli, Italy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge