Filip Sroubek

H-NeXt: The next step towards roto-translation invariant networks

Nov 02, 2023

Abstract: The widespread popularity of equivariant networks underscores the significance of parameter efficient models and effective use of training data. At a time when robustness to unseen deformations is becoming increasingly important, we present H-NeXt, which bridges the gap between equivariance and invariance. H-NeXt is a parameter-efficient roto-translation invariant network that is trained without a single augmented image in the training set. Our network comprises three components: an equivariant backbone for learning roto-translation independent features, an invariant pooling layer for discarding roto-translation information, and a classification layer. H-NeXt outperforms the state of the art in classification on unaugmented training sets and augmented test sets of MNIST and CIFAR-10.
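The split between an equivariant backbone and an invariant pooling layer can be illustrated with a toy NumPy sketch. This is not the H-NeXt architecture: the function names are hypothetical, the "backbone" is a fixed 4-neighbour Laplacian with wrap-around borders, and only 90-degree rotations and circular shifts are covered, since those act exactly on a pixel grid.

```python
import numpy as np

def equivariant_features(img):
    # Hypothetical stand-in for a backbone: a 4-neighbour Laplacian with
    # wrap-around borders. The kernel is isotropic, so rotating the input by
    # 90 degrees or shifting it circularly rotates/shifts the output the same way.
    return (np.roll(img, 1, axis=0) + np.roll(img, -1, axis=0)
            + np.roll(img, 1, axis=1) + np.roll(img, -1, axis=1) - 4 * img)

def invariant_pool(feat):
    # Global statistics ignore where (and in which orientation) a response
    # occurred, so the rotation and translation information is discarded.
    return np.array([feat.max(), feat.min(), np.abs(feat).sum()])

def describe(img):
    # Equivariant features followed by invariant pooling.
    return invariant_pool(equivariant_features(img))

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(8, 8)).astype(np.int64)

# Rotate by 90 degrees, then shift circularly by (2, 3) pixels.
moved = np.roll(np.rot90(img), shift=(2, 3), axis=(0, 1))

# The descriptor is unchanged although no transformed image was ever "trained on".
assert np.array_equal(describe(img), describe(moved))
```

In a real network a classifier would sit on top of the pooled descriptor; the sketch only shows why pooling after an equivariant stage yields invariance.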

NeRD: Neural field-based Demosaicking

Apr 13, 2023

Abstract: We introduce NeRD, a new demosaicking method for generating full-color images from Bayer patterns. Our approach leverages advancements in neural fields to perform demosaicking by representing an image as a coordinate-based neural network with sine activation functions. The inputs to the network are spatial coordinates and a low-resolution Bayer pattern, while the outputs are the corresponding RGB values. An encoder network, which is a blend of ResNet and U-net, enhances the implicit neural representation of the image to improve its quality and ensure spatial consistency through prior learning. Our experimental results demonstrate that NeRD outperforms traditional and state-of-the-art CNN-based methods and significantly closes the gap to transformer-based methods.

Real-Time Wheel Detection and Rim Classification in Automotive Production

Apr 13, 2023

Abstract: This paper proposes a novel approach to real-time automatic rim detection, classification, and inspection by combining traditional computer vision and deep learning techniques. At the end of every automotive assembly line, a quality control process is carried out to identify any potential defects in the produced cars. Common yet hazardous defects are related, for example, to incorrectly mounted rims. Routine inspections are mostly conducted by human workers that are negatively affected by factors such as fatigue or distraction. We have designed a new prototype to validate whether all four wheels on a single car match in size and type. Additionally, we present three comprehensive open-source databases, CWD1500, WHEEL22, and RB600, for wheel, rim, and bolt detection, as well as rim classification, which are free-to-use for scientific purposes.

Blur Invariants for Image Recognition

Jan 18, 2023

Abstract: Blur is an image degradation that is difficult to remove. Invariants with respect to blur offer an alternative way of describing and recognizing blurred images without any deblurring. In this paper, we present an original unified theory of blur invariants. Unlike all previous attempts, the new theory does not require any prior knowledge of the blur type. The invariants are constructed in the Fourier domain by means of orthogonal projection operators and moment expansion is used for efficient and stable computation. It is shown that all blur invariants published earlier are just particular cases of this approach. Experimental comparison to concurrent approaches shows the advantages of the proposed theory.

FMODetect: Robust Detection and Trajectory Estimation of Fast Moving Objects

Dec 15, 2020

Abstract: We propose the first learning-based approach for detection and trajectory estimation of fast moving objects. Such objects are highly blurred and move over large distances within one video frame. Fast moving objects are associated with a deblurring and matting problem, also called deblatting. Instead of solving the complex deblatting problem jointly, we split the problem into matting and deblurring and solve them separately. The proposed method first detects all fast moving objects as a truncated distance function to the trajectory. Subsequently, a matting and fitting network for each detected object estimates the object trajectory and its blurred appearance without background. For the sharp appearance estimation, we propose an energy minimization based deblurring. The state-of-the-art methods are outperformed in terms of trajectory estimation and sharp appearance reconstruction. Compared to other methods, such as deblatting, the inference is several orders of magnitude faster and allows applications such as real-time fast moving object detection and retrieval in large video collections.

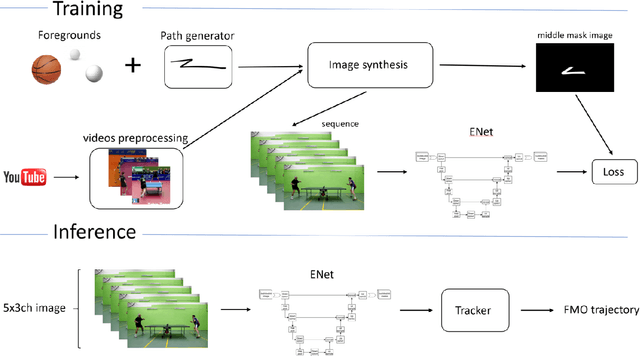

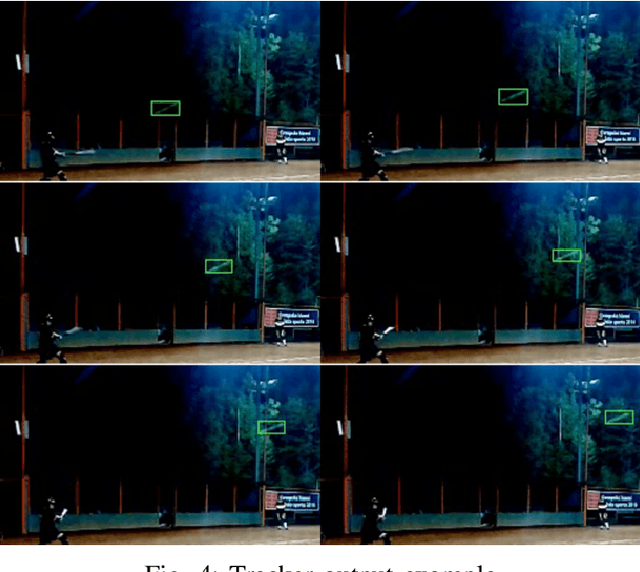

Learning-based Tracking of Fast Moving Objects

May 04, 2020

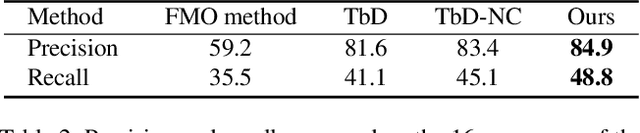

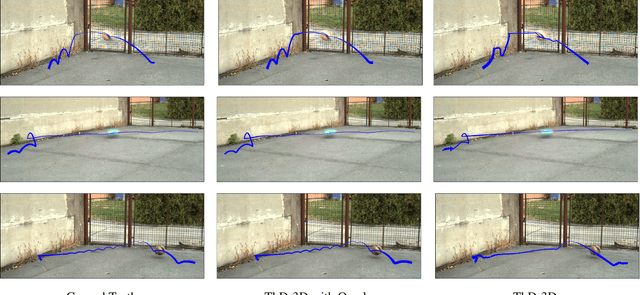

Abstract: Tracking fast moving objects, which appear as blurred streaks in video sequences, is a difficult task for standard trackers as the object position does not overlap in consecutive video frames and texture information of the objects is blurred. Up-to-date approaches tuned for this task are based on background subtraction with static background and slow deblurring algorithms. In this paper, we present a tracking-by-segmentation approach implemented using state-of-the-art deep learning methods that performs near-realtime tracking on real-world video sequences. We implemented a physically plausible FMO sequence generator to be a robust foundation for our training pipeline and demonstrate the ease of fast generator and network adaptation for different FMO scenarios in terms of foreground variations.

Sub-frame Appearance and 6D Pose Estimation of Fast Moving Objects

Nov 25, 2019

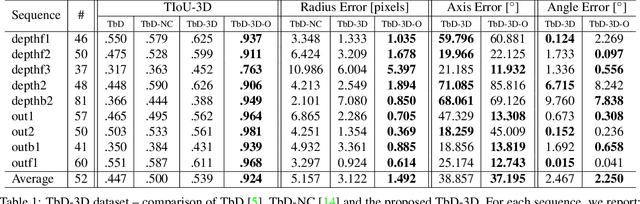

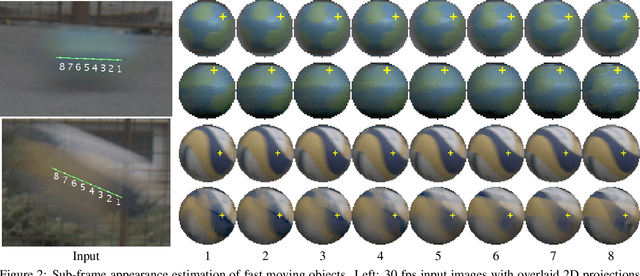

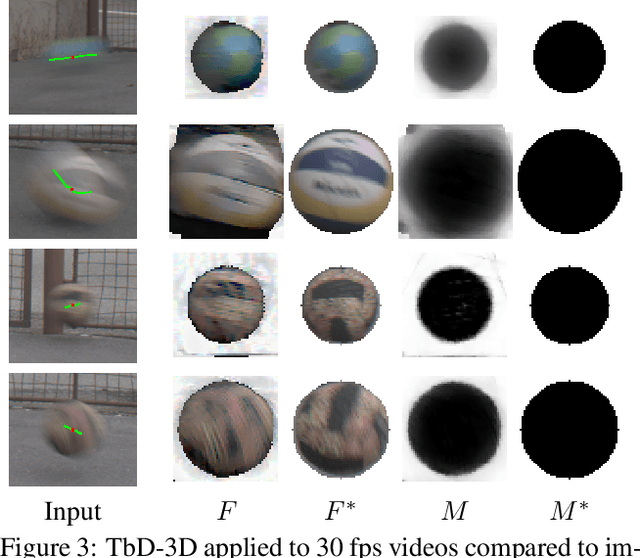

Abstract: We propose a novel method that tracks fast moving objects, mainly non-uniform spherical, in full 6 degrees of freedom, estimating simultaneously their 3D motion trajectory, 3D pose and object appearance changes with a time step that is a fraction of the video frame exposure time. The sub-frame object localization and appearance estimation allows realistic temporal super-resolution and precise shape estimation. The method, called TbD-3D (Tracking by Deblatting in 3D), relies on a novel reconstruction algorithm which solves a piece-wise deblurring and matting problem. The 3D rotation is estimated by minimizing the reprojection error. As a second contribution, we present a new challenging dataset with fast moving objects that change their appearance and distance to the camera. High speed camera recordings with zero lag between frame exposures were used to generate videos with different frame rates annotated with ground-truth trajectory and pose.

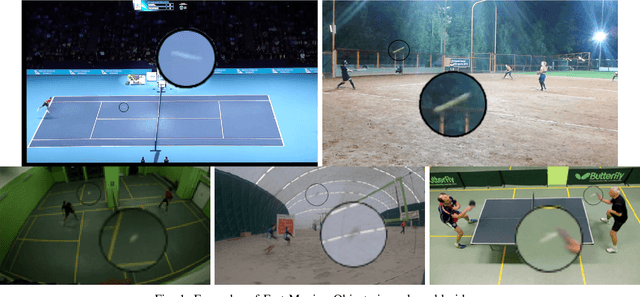

The World of Fast Moving Objects

Nov 23, 2016

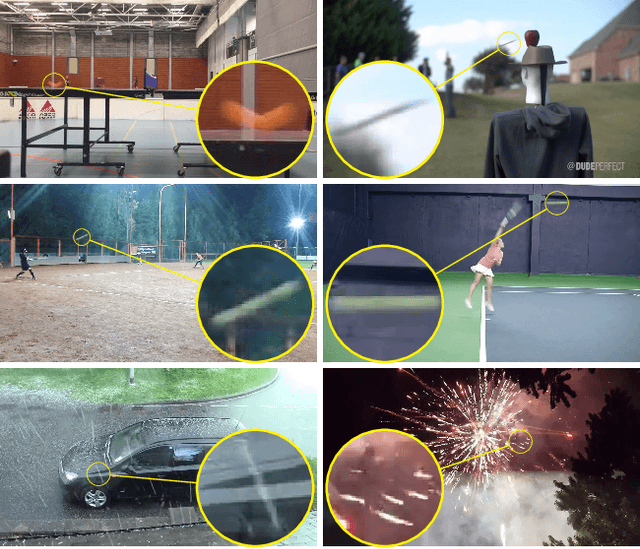

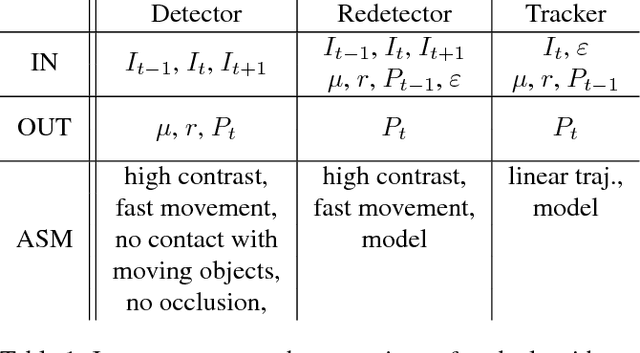

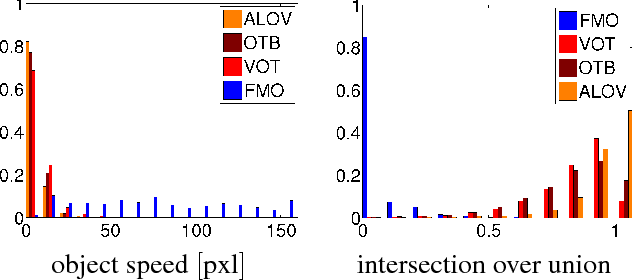

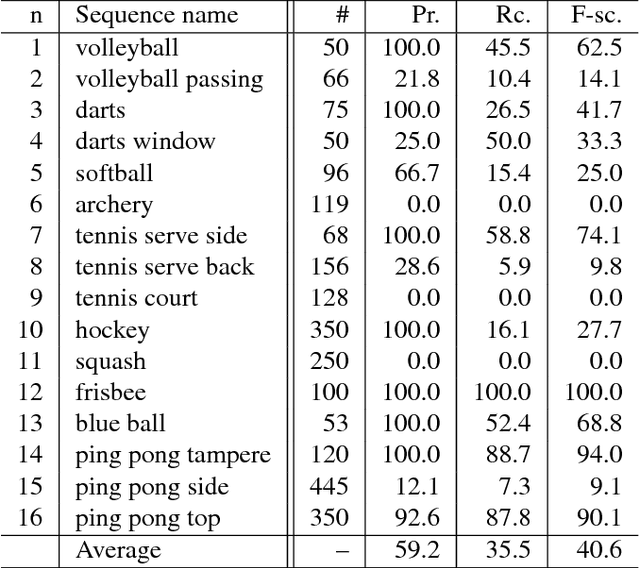

Abstract: The notion of a Fast Moving Object (FMO), i.e. an object that moves over a distance exceeding its size within the exposure time, is introduced. FMOs may, and typically do, rotate with high angular speed. FMOs are very common in sports videos, but are not rare elsewhere. In a single frame, such objects are often barely visible and appear as semi-transparent streaks. A method for the detection and tracking of FMOs is proposed. The method consists of three distinct algorithms, which form an efficient localization pipeline that operates successfully in a broad range of conditions. We show that it is possible to recover the appearance of the object and its axis of rotation, despite its blurred appearance. The proposed method is evaluated on a new annotated dataset. The results show that existing trackers are inadequate for the problem of FMO localization and a new approach is required. Two applications of localization, temporal super-resolution and highlighting, are presented.
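The FMO definition above reduces to a single comparison, sketched here in Python. The function name, the pixel units, and the assumption of constant speed during the exposure are illustrative choices, not part of the paper.

```python
def is_fast_moving(speed_px_per_s, exposure_s, size_px):
    """Return True if the object qualifies as an FMO under the definition:
    distance travelled within one exposure exceeds the object's size.

    Assumes (for illustration) constant speed during the exposure and that
    speed and size are measured in the same image units (pixels).
    """
    travelled_px = speed_px_per_s * exposure_s
    return travelled_px > size_px

# A ball 20 px across, exposed for 1/50 s:
fast = is_fast_moving(speed_px_per_s=3000, exposure_s=0.02, size_px=20)  # travels 60 px
slow = is_fast_moving(speed_px_per_s=500, exposure_s=0.02, size_px=20)   # travels 10 px
print(fast, slow)
```

With these hypothetical numbers the first object travels three times its own size during the exposure and would appear as a streak, while the second moves only half its size and would not count as an FMO.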
