Fangye Wang

Towards Deeper, Lighter and Interpretable Cross Network for CTR Prediction

Nov 08, 2023

Abstract:Click Through Rate (CTR) prediction plays an essential role in recommender systems and online advertising. It is crucial to effectively model feature interactions to improve the prediction performance of CTR models. However, existing methods face three significant challenges. First, while most methods can automatically capture high-order feature interactions, their performance tends to diminish as the order of feature interactions increases. Second, existing methods lack the ability to provide convincing interpretations of the prediction results, especially for high-order feature interactions, which limits the trustworthiness of their predictions. Third, many methods suffer from the presence of redundant parameters, particularly in the embedding layer. This paper proposes a novel method called Gated Deep Cross Network (GDCN) and a Field-level Dimension Optimization (FDO) approach to address these challenges. As the core structure of GDCN, Gated Cross Network (GCN) captures explicit high-order feature interactions and dynamically filters important interactions with an information gate in each order. Additionally, we use the FDO approach to learn condensed dimensions for each field based on their importance. Comprehensive experiments on five datasets demonstrate the effectiveness, superiority and interpretability of GDCN. Moreover, we verify the effectiveness of FDO in learning various dimensions and reducing model parameters. The code is available at https://github.com/anonctr/GDCN.
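The gating idea in this abstract can be illustrated with a minimal sketch. The update rule below (a cross term between the input vector `c0` and a linear map of the current layer `cl`, scaled element-wise by a sigmoid gate, plus a residual) is an assumed form chosen to match the description of "an information gate in each order"; the function and parameter names (`gated_cross_layer`, `W_c`, `W_g`, `b`) are hypothetical and are not taken from the authors' code.

```python
import math


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gated_cross_layer(c0, cl, W_c, b, W_g):
    """One gated cross layer (assumed form, for illustration only).

    c0  : base input vector (concatenated field embeddings)
    cl  : output of the previous cross layer
    W_c : cross weight matrix, b : bias vector
    W_g : gate weight matrix
    """
    cross = [v + bi for v, bi in zip(matvec(W_c, cl), b)]  # linear map of cl
    gate = [sigmoid(v) for v in matvec(W_g, cl)]           # values in (0, 1)
    # The element-wise product with c0 raises the interaction order by one;
    # the gate scales each interaction; adding cl keeps lower-order terms.
    return [x0 * f * g + xl for x0, f, g, xl in zip(c0, cross, gate, cl)]


if __name__ == "__main__":
    c0 = [1.0, 2.0]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    # With zero gate weights every gate value is sigmoid(0) = 0.5.
    print(gated_cross_layer(c0, c0, identity, [0.0, 0.0], zeros))
```

Stacking this function `L` times, feeding each output back in as `cl`, would give interactions up to order `L + 1` under these assumptions.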

A Comprehensive Summarization and Evaluation of Feature Refinement Modules for CTR Prediction

Nov 08, 2023

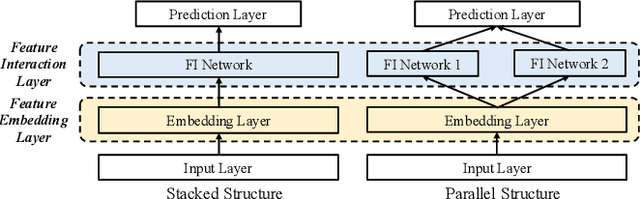

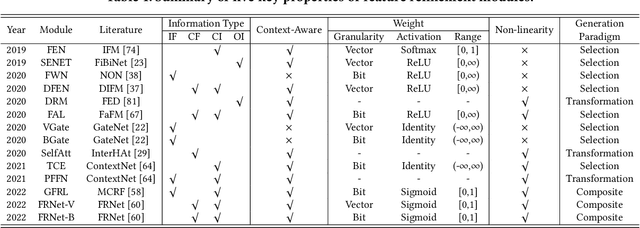

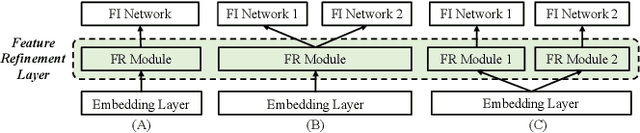

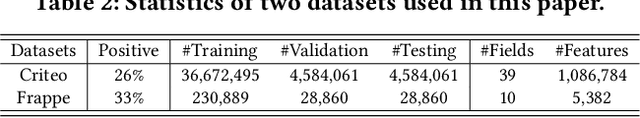

Abstract:Click-through rate (CTR) prediction is widely used in academia and industry. Most CTR tasks fall into a feature embedding & feature interaction paradigm, where the accuracy of CTR prediction is mainly improved by designing practical feature interaction structures. However, recent studies have argued that the fixed feature embedding learned only through the embedding layer limits the performance of existing CTR models. Some works apply extra modules on top of the embedding layer to dynamically refine feature representations in different instances, making it effective and easy to integrate with existing CTR methods. Despite the promising results, there is a lack of a systematic review and summarization of this new promising direction on the CTR task. To fill this gap, we comprehensively summarize and define a new module, namely feature refinement (FR) module, that can be applied between feature embedding and interaction layers. We extract 14 FR modules from previous works, including instances where the FR module was proposed but not clearly defined or explained. We fully assess the effectiveness and compatibility of existing FR modules through comprehensive and extensive experiments with over 200 augmented models and over 4,000 runs for more than 15,000 GPU hours. The results offer insightful guidelines for researchers, and all benchmarking code and experimental results are open-sourced. In addition, we present a new architecture of assigning independent FR modules to separate sub-networks for parallel CTR models, as opposed to the conventional method of inserting a shared FR module on top of the embedding layer. Our approach is also supported by comprehensive experiments demonstrating its effectiveness.

CL4CTR: A Contrastive Learning Framework for CTR Prediction

Dec 01, 2022

Abstract:Many Click-Through Rate (CTR) prediction works focused on designing advanced architectures to model complex feature interactions but neglected the importance of feature representation learning, e.g., adopting a plain embedding layer for each feature, which results in sub-optimal feature representations and thus inferior CTR prediction performance. For instance, low frequency features, which account for the majority of features in many CTR tasks, are less considered in standard supervised learning settings, leading to sub-optimal feature representations. In this paper, we introduce self-supervised learning to produce high-quality feature representations directly and propose a model-agnostic Contrastive Learning for CTR (CL4CTR) framework consisting of three self-supervised learning signals to regularize the feature representation learning: contrastive loss, feature alignment, and field uniformity. The contrastive module first constructs positive feature pairs by data augmentation and then minimizes the distance between the representations of each positive feature pair by the contrastive loss. The feature alignment constraint forces the representations of features from the same field to be close, and the field uniformity constraint forces the representations of features from different fields to be distant. Extensive experiments verify that CL4CTR achieves the best performance on four datasets and has excellent effectiveness and compatibility with various representative baselines.
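The two constraints named in this abstract can be sketched as simple regularizers over an embedding table grouped by field. The distance measures used here (mean squared Euclidean distance for alignment, mean cosine similarity for uniformity) are assumptions made for illustration, as are the function names and the toy field names; the abstract only states that same-field features should be close and different-field features distant.

```python
import math
from itertools import combinations


def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def alignment_loss(fields):
    """Mean squared distance between features of the SAME field.

    fields: dict mapping field name -> list of embedding vectors.
    Minimizing this pulls same-field embeddings together.
    """
    pairs = [(u, v) for embs in fields.values()
             for u, v in combinations(embs, 2)]
    if not pairs:
        return 0.0
    return sum(sq_dist(u, v) for u, v in pairs) / len(pairs)


def uniformity_loss(fields):
    """Mean cosine similarity between features of DIFFERENT fields.

    Minimizing this pushes embeddings from different fields apart.
    """
    total, count = 0.0, 0
    for (_, embs_a), (_, embs_b) in combinations(fields.items(), 2):
        for u in embs_a:
            for v in embs_b:
                total += cosine(u, v)
                count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    table = {"user": [[1.0, 0.0], [0.0, 1.0]], "item": [[1.0, 0.0]]}
    print(alignment_loss(table), uniformity_loss(table))
```

In a training loop, both terms would typically be added to the supervised CTR loss with small weights, alongside the contrastive loss on augmented feature pairs; the weighting scheme is not specified in the abstract.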

Enhancing CTR Prediction with Context-Aware Feature Representation Learning

Apr 19, 2022

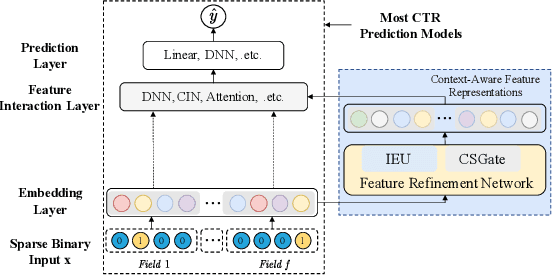

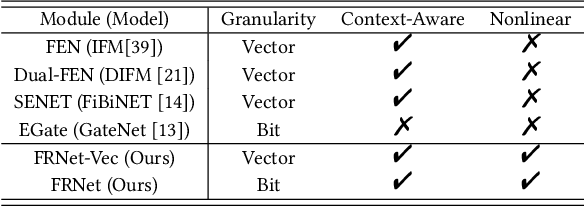

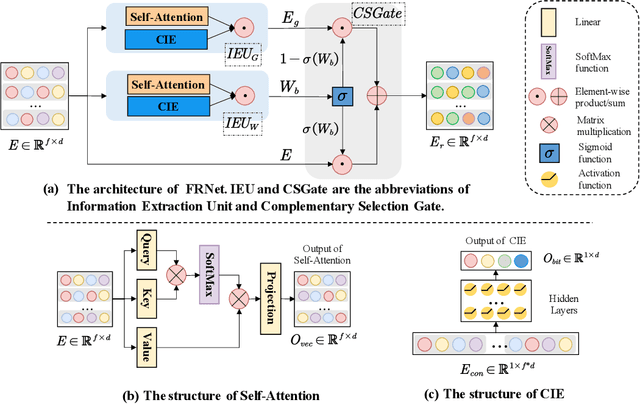

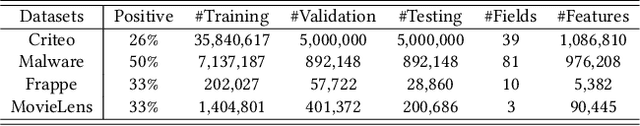

Abstract:CTR prediction has been widely used in the real world. Many methods model feature interaction to improve their performance. However, most methods only learn a fixed representation for each feature without considering the varying importance of each feature under different contexts, resulting in inferior performance. Recently, several methods tried to learn vector-level weights for feature representations to address the fixed representation issue. However, they only produce linear transformations to refine the fixed feature representations, which are still not flexible enough to capture the varying importance of each feature under different contexts. In this paper, we propose a novel module named Feature Refinement Network (FRNet), which learns context-aware feature representations at bit-level for each feature in different contexts. FRNet consists of two key components: 1) Information Extraction Unit (IEU), which captures contextual information and cross-feature relationships to guide context-aware feature refinement; and 2) Complementary Selection Gate (CSGate), which adaptively integrates the original and complementary feature representations learned in IEU with bit-level weights. Notably, FRNet is orthogonal to existing CTR methods and thus can be applied in many existing methods to boost their performance. Comprehensive experiments are conducted to verify the effectiveness, efficiency, and compatibility of FRNet.
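As a rough illustration of the gating step described for CSGate, the sketch below mixes an original and a complementary representation with one weight per embedding dimension ("bit-level"). The convex-combination form and all names (`complementary_gate`, `gate_logits`) are assumptions for this example; in FRNet the gate logits and the complementary representation would be produced by the IEU, which is not modeled here.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def complementary_gate(original, complementary, gate_logits):
    """Blend two representations with a per-dimension (bit-level) gate.

    For each dimension i the weight w_i = sigmoid(gate_logits[i]) selects
    how much of the original value to keep; the remainder (1 - w_i) comes
    from the complementary value. This exact form is an assumption.
    """
    refined = []
    for e, c, z in zip(original, complementary, gate_logits):
        w = sigmoid(z)
        refined.append(w * e + (1.0 - w) * c)
    return refined


if __name__ == "__main__":
    # Zero logits give w = 0.5, i.e. an even mix of both representations.
    print(complementary_gate([2.0, 4.0], [0.0, 0.0], [0.0, 0.0]))
```

Because each dimension has its own weight, the same feature can keep some dimensions nearly unchanged while replacing others, which is what distinguishes a bit-level gate from a single vector-level scalar weight.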
