Fabio Di Troia

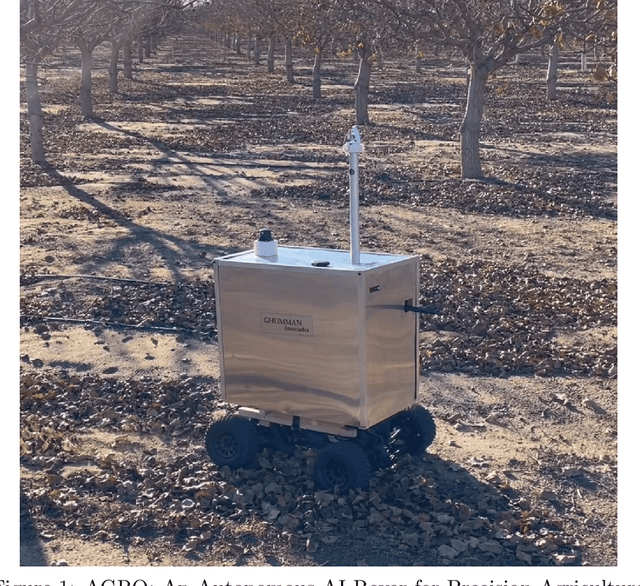

AGRO: An Autonomous AI Rover for Precision Agriculture

May 02, 2025

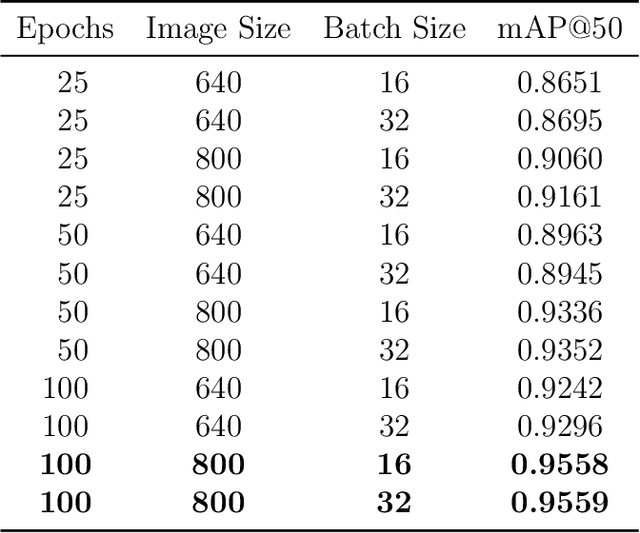

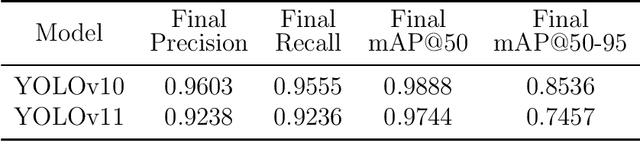

Abstract: Unmanned Ground Vehicles (UGVs) are emerging as a crucial tool in the world of precision agriculture. The combination of UGVs with machine learning allows us to find solutions for a range of complex agricultural problems. This research focuses on developing a UGV capable of autonomously traversing agricultural fields and capturing data. The project, known as AGRO (Autonomous Ground Rover Observer), leverages machine learning, computer vision, and other sensor technologies. AGRO uses its capabilities to determine pistachio yields, performing self-localization and real-time environmental mapping while avoiding obstacles. The main objective of this research work is to automate resource-consuming operations so that AGRO can support farmers in making data-driven decisions. Furthermore, AGRO provides a foundation for advanced machine learning techniques as it captures the world around it.

Feature Analysis of Encrypted Malicious Traffic

Dec 06, 2023

Abstract: In recent years there has been a dramatic increase in the number of malware attacks that use encrypted HTTP traffic for self-propagation or communication. Antivirus software and firewalls typically will not have access to encryption keys, and therefore direct detection of malicious encrypted data is unlikely to succeed. However, previous work has shown that traffic analysis can provide indications of malicious intent, even in cases where the underlying data remains encrypted. In this paper, we apply three machine learning techniques to the problem of distinguishing malicious encrypted HTTP traffic from benign encrypted traffic and obtain results comparable to previous work. We then consider the problem of feature analysis in some detail. Previous work has often relied on human expertise to determine the most useful and informative features in this problem domain. We demonstrate that such feature-related information can be obtained directly from machine learning models themselves. We argue that such a machine learning-based approach to feature analysis is preferable, as it is more reliable, and we can, for example, uncover relatively unintuitive interactions between features.

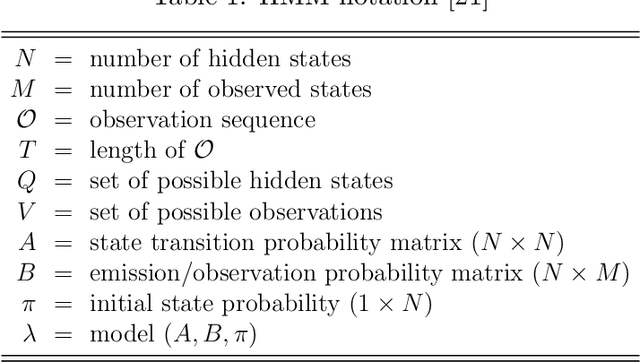

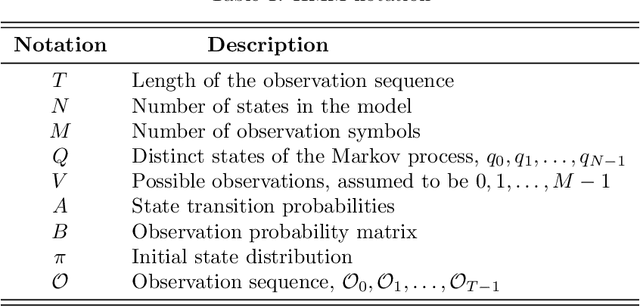

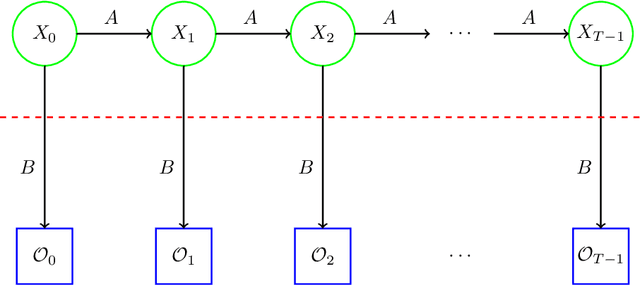

Hidden Markov Models with Random Restarts vs Boosting for Malware Detection

Jul 17, 2023

Abstract: Effective and efficient malware detection is at the forefront of research into building secure digital systems. As with many other fields, malware detection research has seen a dramatic increase in the application of machine learning algorithms. One machine learning technique that has been used widely in the field of pattern matching in general, and malware detection in particular, is hidden Markov models (HMMs). HMM training is based on a hill climb, and hence we can often improve a model by training multiple times with different initial values. In this research, we compare boosted HMMs (using AdaBoost) to HMMs trained with multiple random restarts, in the context of malware detection. These techniques are applied to a variety of challenging malware datasets. We find that random restarts perform surprisingly well in comparison to boosting. Only in the most difficult "cold start" cases (where training data is severely limited) does boosting appear to offer sufficient improvement to justify its higher computational cost in the scoring phase.
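The idea of random restarts mentioned in the abstract above can be illustrated with a generic sketch. This is not the paper's HMM training code: the toy objective `bumpy`, the helper names `hill_climb` and `random_restarts`, and all parameter values are assumptions chosen only to show why restarting a hill climb from different initial values can find a better local optimum.

```python
import math
import random


def hill_climb(score, start, step=0.1, iters=200, rng=None):
    """Greedy stochastic hill climb: accept a nearby candidate only if it scores higher."""
    rng = rng or random.Random()
    x, best = start, score(start)
    for _ in range(iters):
        cand = x + rng.uniform(-step, step)
        s = score(cand)
        if s > best:
            x, best = cand, s
    return x, best


def random_restarts(score, n_restarts, low, high, seed=0):
    """Run the hill climb from several random starting points and keep the best result."""
    rng = random.Random(seed)
    results = [
        hill_climb(score, rng.uniform(low, high), rng=rng)
        for _ in range(n_restarts)
    ]
    return max(results, key=lambda r: r[1])


def bumpy(x):
    """Hypothetical objective with several local maxima (stands in for a model score)."""
    return math.sin(3 * x) - 0.1 * x * x


single = random_restarts(bumpy, n_restarts=1, low=-5.0, high=5.0)
multi = random_restarts(bumpy, n_restarts=10, low=-5.0, high=5.0)
print(f"1 restart:   x={single[0]:.3f}, score={single[1]:.3f}")
print(f"10 restarts: x={multi[0]:.3f}, score={multi[1]:.3f}")
```

Because both calls use the same seed, the first climb of the ten-restart run is identical to the single run, so the ten-restart result can never be worse. In HMM training, the analogous step would be to rerun training from different random initial parameters and keep the model with the best score.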

Creating Valid Adversarial Examples of Malware

Jun 23, 2023

Abstract: Machine learning is becoming increasingly popular as a go-to approach for many tasks due to its world-class results. As a result, antivirus developers are incorporating machine learning models into their products. While these models improve malware detection capabilities, they also carry the disadvantage of being susceptible to adversarial attacks. Although this vulnerability has been demonstrated for many models in white-box settings, a black-box attack is more applicable in practice for the domain of malware detection. We present a generator of adversarial malware examples using reinforcement learning algorithms. The reinforcement learning agents utilize a set of functionality-preserving modifications, thus creating valid adversarial examples. Using the proximal policy optimization (PPO) algorithm, we achieved an evasion rate of 53.84% against the gradient-boosted decision tree (GBDT) model. The PPO agent previously trained against the GBDT classifier scored an evasion rate of 11.41% against the neural network-based classifier MalConv and an average evasion rate of 2.31% against top antivirus programs. Furthermore, we discovered that random application of our functionality-preserving portable executable modifications successfully evades leading antivirus engines, with an average evasion rate of 11.65%. These findings indicate that machine learning-based models used in malware detection systems are vulnerable to adversarial attacks and that better safeguards need to be taken to protect these systems.

Multifamily Malware Models

Jun 27, 2022

Abstract: When training a machine learning model, there is likely to be a tradeoff between accuracy and the diversity of the dataset. Previous research has shown that if we train a model to detect one specific malware family, we generally obtain stronger results as compared to a case where we train a single model on multiple diverse families. However, during the detection phase, it would be more efficient to have a single model that can reliably detect multiple families, rather than having to score each sample against multiple models. In this research, we conduct experiments based on byte $n$-gram features to quantify the relationship between the generality of the training dataset and the accuracy of the corresponding machine learning models, all within the context of the malware detection problem. We find that neighborhood-based algorithms generalize surprisingly well, far outperforming the other machine learning techniques considered.
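As a rough illustration of what byte $n$-gram features look like, the sketch below counts overlapping byte sequences of length $n$ in a byte string and normalizes them to relative frequencies. The function names `byte_ngrams` and `ngram_frequencies`, the choice of $n = 2$, and the sample bytes are assumptions for illustration; the abstract does not specify how the paper extracts or encodes its features.

```python
from collections import Counter


def byte_ngrams(data: bytes, n: int = 2) -> Counter:
    """Count overlapping byte n-grams in a byte string."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return Counter(data[i:i + n] for i in range(len(data) - n + 1))


def ngram_frequencies(data: bytes, n: int = 2) -> dict:
    """Normalize n-gram counts to relative frequencies (empty dict if no n-grams)."""
    counts = byte_ngrams(data, n)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {gram: c / total for gram, c in counts.items()}


# Hypothetical six-byte sample; a real feature vector would come from a whole file.
sample = bytes([0x4D, 0x5A, 0x90, 0x00, 0x4D, 0x5A])
counts = byte_ngrams(sample, 2)
freqs = ngram_frequencies(sample, 2)
print(counts.most_common(1))
print(freqs[b"MZ"])
```

In a classification setting, frequencies like these would typically be arranged into a fixed-length vector over a chosen vocabulary of $n$-grams before being passed to a learning algorithm.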

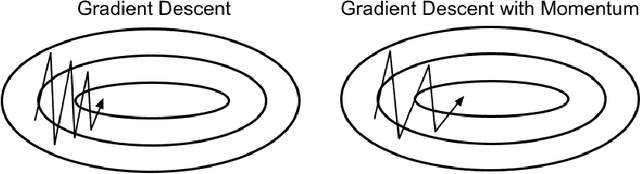

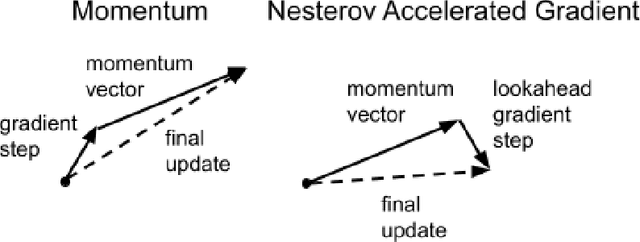

Hidden Markov Models with Momentum

Jun 08, 2022

Abstract: Momentum is a popular technique for improving convergence rates during gradient descent. In this research, we experiment with adding momentum to the Baum-Welch expectation-maximization algorithm for training Hidden Markov Models. We compare discrete Hidden Markov Models trained with and without momentum on English text and malware opcode data. The effectiveness of momentum is determined by measuring the changes in model score and classification accuracy due to momentum. Our extensive experiments indicate that adding momentum to Baum-Welch can reduce the number of iterations required for initial convergence during HMM training, particularly in cases where the model is slow to converge. However, momentum does not seem to improve the final model performance at a high number of iterations.

Convolutional Neural Networks for Image Spam Detection

Apr 02, 2022

Abstract: Spam can be defined as unsolicited bulk email. In an effort to evade text-based filters, spammers sometimes embed spam text in an image, which is referred to as image spam. In this research, we consider the problem of image spam detection, based on image analysis. We apply convolutional neural networks (CNNs) to this problem, compare the results obtained using CNNs to other machine learning techniques, and compare our results to previous related work. We consider both real-world image spam and challenging image spam-like datasets. Our results improve on previous work by employing CNNs based on a novel feature set consisting of a combination of the raw image and Canny edges.

A Comparison of Static, Dynamic, and Hybrid Analysis for Malware Detection

Mar 13, 2022

Abstract: In this research, we compare malware detection techniques based on static, dynamic, and hybrid analysis. Specifically, we train Hidden Markov Models (HMMs) on both static and dynamic feature sets and compare the resulting detection rates over a substantial number of malware families. We also consider hybrid cases, where dynamic analysis is used in the training phase, with static techniques used in the detection phase, and vice versa. In our experiments, a fully dynamic approach generally yields the best detection rates. We discuss the implications of this research for malware detection based on hybrid techniques.

Clickbait Detection in YouTube Videos

Jul 26, 2021

Abstract: YouTube videos often include captivating descriptions and intriguing thumbnails designed to increase the number of views, and thereby increase the revenue for the person who posted the video. This creates an incentive for people to post clickbait videos, in which the content might deviate significantly from the title, description, or thumbnail. In effect, users are tricked into clicking on clickbait videos. In this research, we consider the challenging problem of detecting clickbait YouTube videos. We experiment with multiple state-of-the-art machine learning techniques using a variety of textual features.

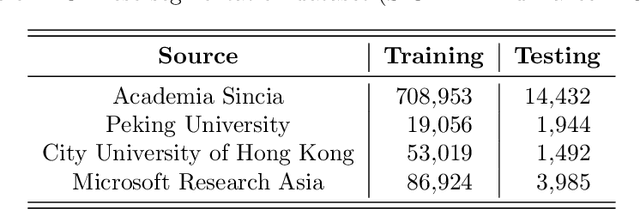

Sentiment Analysis for Troll Detection on Weibo

Mar 07, 2021

Abstract: The impact of social media on the modern world is difficult to overstate. Virtually all companies and public figures have social media accounts on popular platforms such as Twitter and Facebook. In China, the micro-blogging service provider, Sina Weibo, is the most popular such service. To influence public opinion, Weibo trolls -- the so-called Water Army -- can be hired to post deceptive comments. In this paper, we focus on troll detection via sentiment analysis and other user activity data on the Sina Weibo platform. We implement techniques for Chinese sentence segmentation, word embedding, and sentiment score calculation. In recent years, troll detection and sentiment analysis have been studied, but we are not aware of previous research that considers troll detection based on sentiment analysis. We employ the resulting techniques to develop and test a sentiment analysis approach for troll detection, based on a variety of machine learning strategies. Experimental results are generated and analyzed. A Chrome extension is presented that implements our proposed technique, which enables real-time troll detection when a user browses Sina Weibo.
