Fabio Aiolli

Integrating Background Knowledge in Medical Semantic Segmentation with Logic Tensor Networks

Sep 26, 2025

Abstract: Semantic segmentation is a fundamental task in medical image analysis, aiding medical decision-making by helping radiologists distinguish objects in an image. Research in this field has been driven by deep learning applications, which have the potential to scale these systems even in the presence of noise and artifacts. However, these systems are not yet perfected. We argue that performance can be improved by incorporating common medical knowledge into the segmentation model's loss function. To this end, we introduce Logic Tensor Networks (LTNs) to encode medical background knowledge using first-order logic (FOL) rules. The encoded rules range from constraints on the shape of the produced segmentation to relationships between different segmented areas. We apply LTNs in an end-to-end framework with a SwinUNETR for semantic segmentation. We evaluate our method on the task of segmenting the hippocampus in brain MRI scans. Our experiments show that LTNs improve the baseline segmentation performance, especially when training data is scarce. Although this work is in its preliminary stages, we argue that neurosymbolic methods are general enough to be adapted and applied to other medical semantic segmentation tasks.

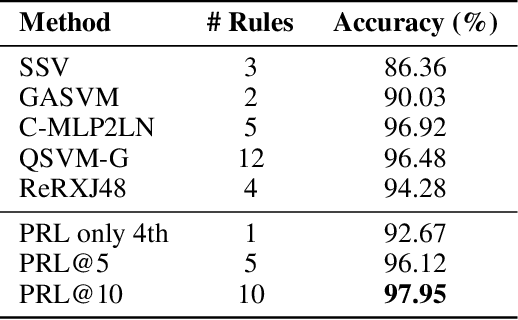

(Sometimes) Less is More: Mitigating the Complexity of Rule-based Representation for Interpretable Classification

Sep 26, 2025

Abstract: Deep neural networks are widely used in practical applications of AI; however, their inner structure and complexity make them generally hard to interpret. Model transparency and interpretability are key requirements in many scenarios where high performance alone is not enough to adopt the proposed solution. In this work, a differentiable approximation of $L_0$ regularization is adapted into a logic-based neural network, the Multi-layer Logical Perceptron (MLLP), to study its efficacy in reducing the complexity of its discrete interpretable version, the Concept Rule Set (CRS), while retaining its performance. The results are compared to alternative heuristics like Random Binarization of the network weights, to determine if better results can be achieved when using a less-noisy technique that sparsifies the network based on the loss function instead of a random distribution. The trade-off between the CRS complexity and its performance is discussed.

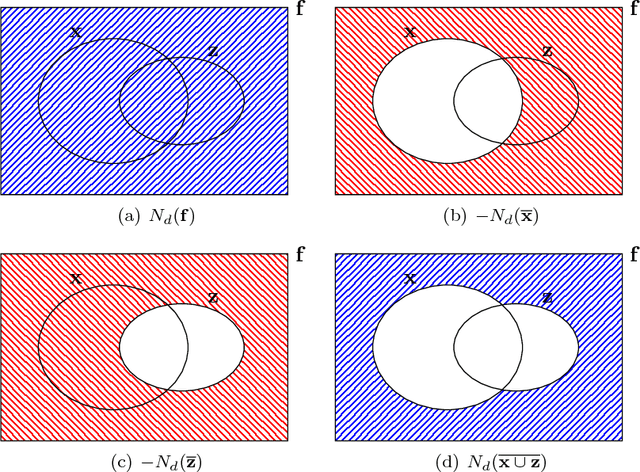

LTNtorch: PyTorch Implementation of Logic Tensor Networks

Sep 24, 2024

Abstract: Logic Tensor Networks (LTN) is a Neuro-Symbolic framework that effectively incorporates deep learning and logical reasoning. In particular, LTN allows defining a logical knowledge base and using it as the objective of a neural model. This makes learning by logical reasoning possible as the parameters of the model are optimized by minimizing a loss function composed of a set of logical formulas expressing facts about the learning task. The framework learns via gradient-descent optimization. Fuzzy logic, a relaxation of classical logic permitting continuous truth values in the interval [0,1], makes this learning possible. Specifically, the training of an LTN consists of three steps. Firstly, (1) the training data is used to ground the formulas. Then, (2) the formulas are evaluated, and the loss function is computed. Lastly, (3) the gradients are back-propagated through the logical computational graph, and the weights of the neural model are changed so the knowledge base is maximally satisfied. LTNtorch is the fully documented and tested PyTorch implementation of Logic Tensor Networks. This paper presents the formalization of LTN and how LTNtorch implements it. Moreover, it provides a basic binary classification example.
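
As a rough illustration of the three training steps described in the abstract above, the following is a minimal sketch in plain Python. It does not use LTNtorch or its API. The one-dimensional sigmoid predicate, the arithmetic-mean aggregator for the universal quantifier, the hand-derived gradient, and all function names are assumptions made for this example only.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def predicate(w, b, x):
    """Fuzzy truth value in [0, 1] of P(x); a hypothetical toy predicate."""
    return sigmoid(w * x + b)

def fuzzy_not(a):
    """Standard fuzzy negation."""
    return 1.0 - a

def forall(truths):
    """Universal quantifier approximated by the mean (an assumed aggregator)."""
    return sum(truths) / len(truths)

def satisfaction(w, b, positives, negatives):
    # Step 1: ground the formulas on the training data.
    # Step 2: evaluate "forall x in positives: P(x)" and
    #         "forall x in negatives: not P(x)", then average them.
    phi_pos = forall([predicate(w, b, x) for x in positives])
    phi_neg = forall([fuzzy_not(predicate(w, b, x)) for x in negatives])
    return (phi_pos + phi_neg) / 2.0

def train(positives, negatives, lr=1.0, epochs=500):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Step 3: gradient of loss = 1 - satisfaction, written out by hand
        # for the sigmoid predicate (a real framework would use autograd).
        gw = gb = 0.0
        for x in positives:
            s = predicate(w, b, x)
            gw -= s * (1 - s) * x / (2 * len(positives))
            gb -= s * (1 - s) / (2 * len(positives))
        for x in negatives:
            s = predicate(w, b, x)
            gw += s * (1 - s) * x / (2 * len(negatives))
            gb += s * (1 - s) / (2 * len(negatives))
        w -= lr * gw
        b -= lr * gb
    return w, b

positives = [2.0, 3.0, 4.0]
negatives = [-2.0, -3.0, -4.0]
w, b = train(positives, negatives)
print(satisfaction(w, b, positives, negatives))
```

Minimizing `1 - satisfaction` pushes the truth value of the knowledge base toward 1, which is the sense in which the knowledge base is "maximally satisfied".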

Novel Applications for VAE-based Anomaly Detection Systems

Apr 26, 2022

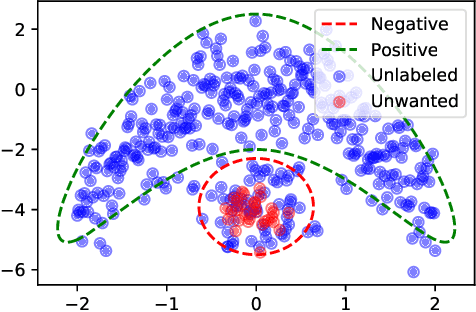

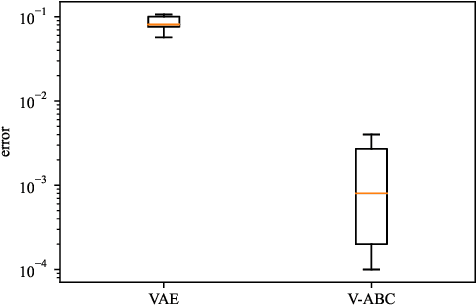

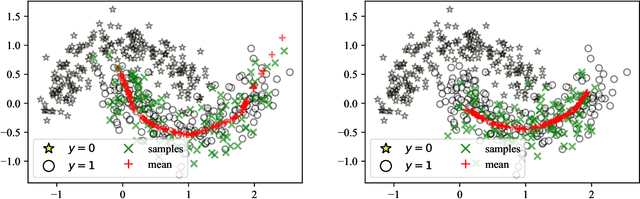

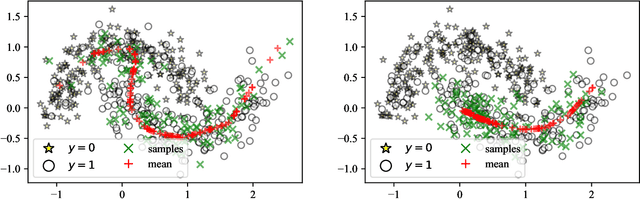

Abstract:The recent rise in deep learning technologies fueled innovation and boosted scientific research. Their achievements enabled new research directions for deep generative modeling (DGM), an increasingly popular approach that can create novel and unseen data, starting from a given data set. As the technology shows promising applications, many ethical issues also arise. For example, their misuse can enable disinformation campaigns and powerful phishing attempts. Research also indicates different biases affect deep learning models, leading to social issues such as misrepresentation. In this work, we formulate a novel setting to deal with similar problems, showing that a repurposed anomaly detection system effectively generates novel data, avoiding generating specified unwanted data. We propose Variational Auto-encoding Binary Classifiers (V-ABC): a novel model that repurposes and extends the Auto-encoding Binary Classifier (ABC) anomaly detector, using the Variational Auto-encoder (VAE). We survey the limitations of existing approaches and explore many tools to show the model's inner workings in an interpretable way. This proposal has excellent potential for generative applications: models that rely on user-generated data could automatically filter out unwanted content, such as offensive language, obscene images, and misleading information.

Bayes Point Rule Set Learning

Apr 11, 2022

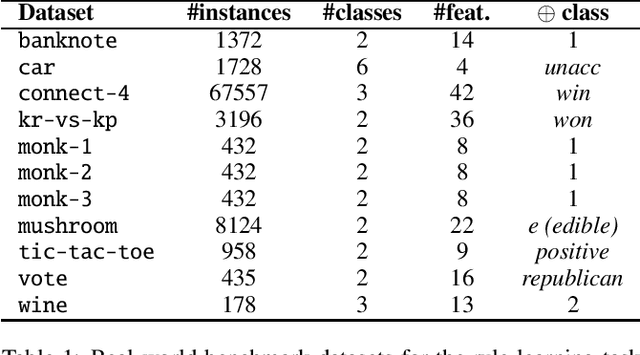

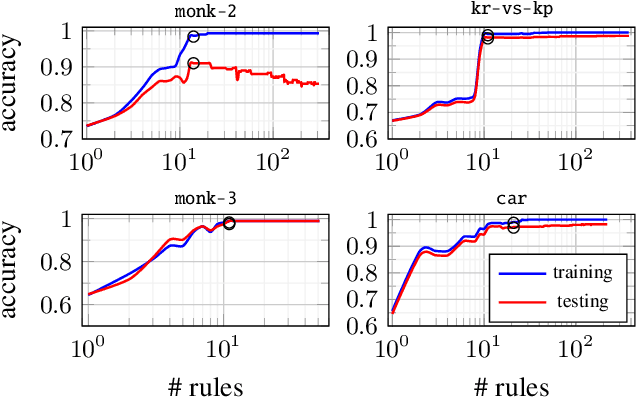

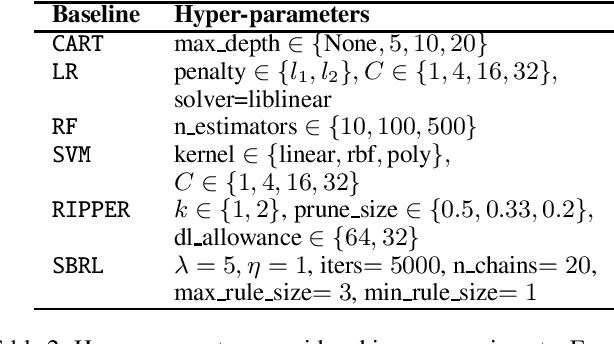

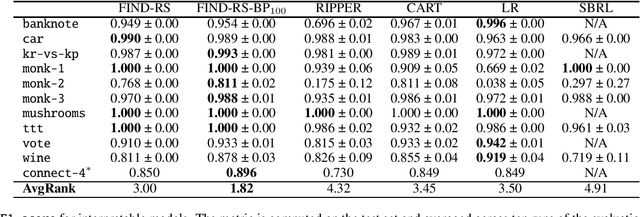

Abstract:Interpretability is having an increasingly important role in the design of machine learning algorithms. However, interpretable methods tend to be less accurate than their black-box counterparts. Among others, DNFs (Disjunctive Normal Forms) are arguably the most interpretable way to express a set of rules. In this paper, we propose an effective bottom-up extension of the popular FIND-S algorithm to learn DNF-type rulesets. The algorithm greedily finds a partition of the positive examples. The produced DNF is a set of conjunctive rules, each corresponding to the most specific rule consistent with a part of positive and all negative examples. We also propose two principled extensions of this method, approximating the Bayes Optimal Classifier by aggregating DNF decision rules. Finally, we provide a methodology to significantly improve the explainability of the learned rules while retaining their generalization capabilities. An extensive comparison with state-of-the-art symbolic and statistical methods on several benchmark data sets shows that our proposal provides an excellent balance between explainability and accuracy.
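
For readers unfamiliar with FIND-S, the sketch below shows the classic algorithm on categorical attributes and how one most-specific rule per part of the positive examples yields a DNF. It is not the paper's extension: the partition here is chosen by hand rather than found greedily, consistency with negative examples is only checked afterwards, and the function names and toy data are hypothetical.

```python
def find_s(positives):
    """Return the most specific conjunction covering all given positives.

    Each example is a tuple of attribute values; '?' matches any value.
    """
    hypothesis = list(positives[0])
    for example in positives[1:]:
        for i, value in enumerate(example):
            if hypothesis[i] != value:
                hypothesis[i] = "?"  # generalize only where examples disagree
    return tuple(hypothesis)

def covers(rule, example):
    """True if the conjunctive rule is satisfied by the example."""
    return all(r == "?" or r == v for r, v in zip(rule, example))

def predict_dnf(rules, example):
    """A DNF fires if at least one of its conjunctive rules covers the example."""
    return any(covers(rule, example) for rule in rules)

# Hand-picked partition of the positive examples (an assumption for this demo).
parts = [
    [("sunny", "warm", "high"), ("sunny", "warm", "low")],
    [("rainy", "cold", "high")],
]
rules = [find_s(part) for part in parts]
negative = ("rainy", "warm", "high")

print(rules)
print(predict_dnf(rules, negative))
```

Here each rule generalizes only as far as its own part requires, so the resulting DNF covers every positive example while leaving the negative example uncovered.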

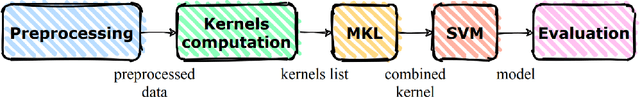

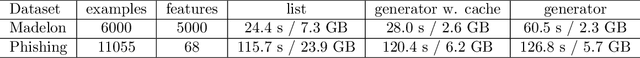

MKLpy: a python-based framework for Multiple Kernel Learning

Jul 20, 2020

Abstract:Multiple Kernel Learning is a recent and powerful paradigm to learn the kernel function from data. In this paper, we introduce MKLpy, a python-based framework for Multiple Kernel Learning. The library provides Multiple Kernel Learning algorithms for classification tasks, mechanisms to compute kernel functions for different data types, and evaluation strategies. The library is meant to maximize the usability and to simplify the development of novel solutions.

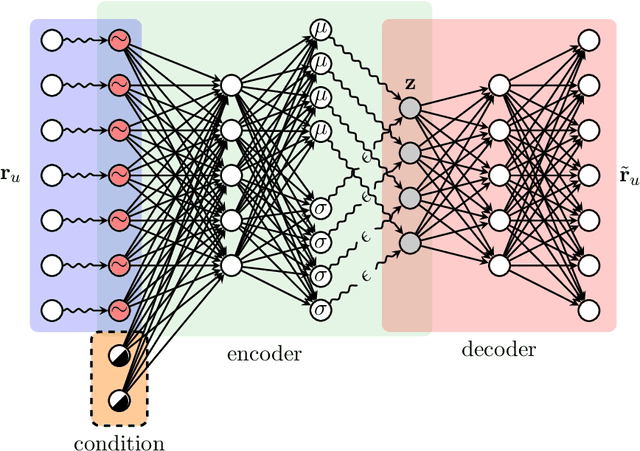

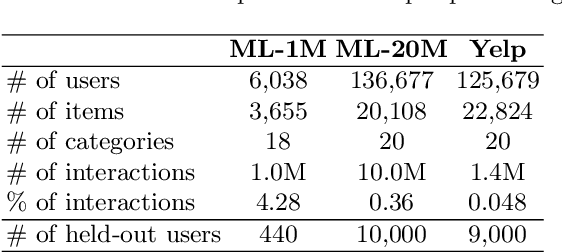

Conditioned Variational Autoencoder for top-N item recommendation

May 04, 2020

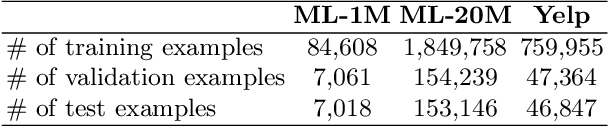

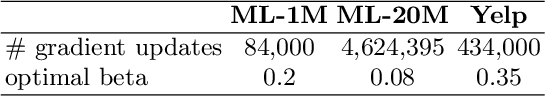

Abstract:In this paper, we propose a Conditioned Variational Autoencoder (C-VAE) for constrained top-N item recommendation where the recommended items must satisfy a given condition. The proposed model architecture is similar to a standard VAE in which the condition vector is fed into the encoder. The constrained ranking is learned during training thanks to a new reconstruction loss that takes the input condition into account. We show that our model generalizes the state-of-the-art Mult-VAE collaborative filtering model. Moreover, we provide insights on what C-VAE learns in the latent space, providing a human-friendly interpretation. Experimental results underline the potential of C-VAE in providing accurate recommendations under constraints. Finally, the performed analyses suggest that C-VAE can be used in other recommendation scenarios, such as context-aware recommendation.

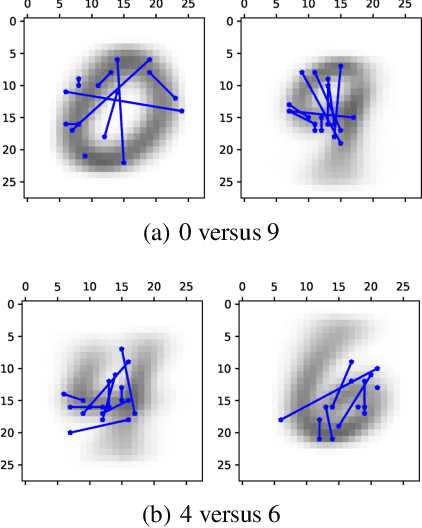

Interpretable preference learning: a game theoretic framework for large margin on-line feature and rule learning

Dec 19, 2018

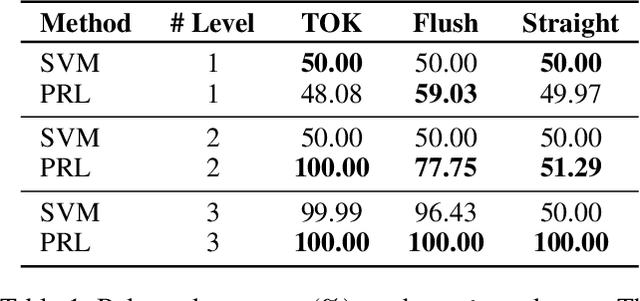

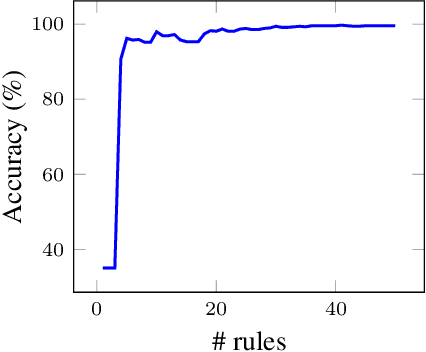

Abstract: A large body of research is currently investigating the connection between machine learning and game theory. In this work, game theory notions are injected into a preference learning framework. Specifically, a preference learning problem is seen as a two-player zero-sum game. An algorithm is proposed to incrementally include new useful features into the hypothesis. This can be particularly important when dealing with a very large number of potential features, as in relational learning and rule extraction. A game theoretical analysis is used to demonstrate the convergence of the algorithm. Furthermore, leveraging the natural analogy between features and rules, the resulting models can be easily interpreted by humans. An extensive set of experiments on classification tasks shows the effectiveness of the proposed method in terms of interpretability and feature selection quality, with state-of-the-art accuracy.

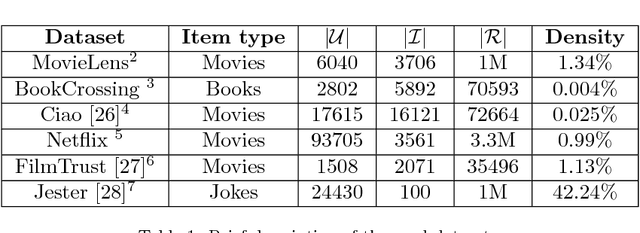

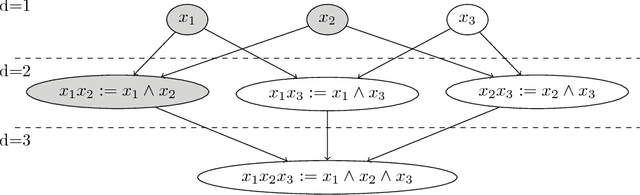

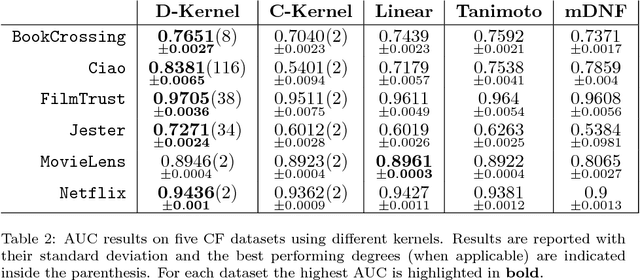

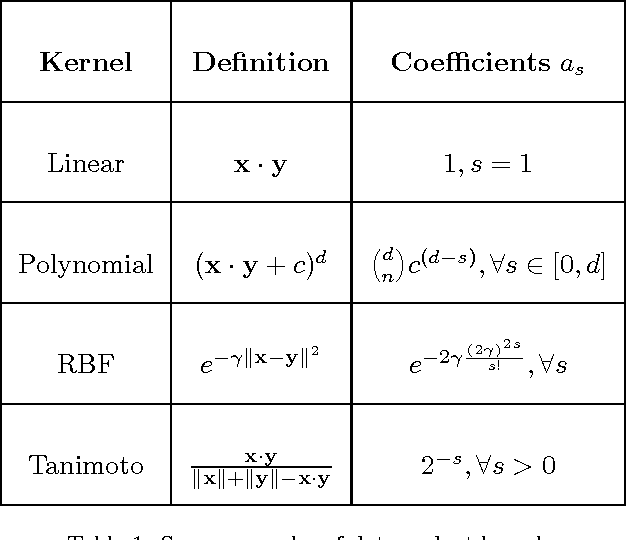

Boolean kernels for collaborative filtering in top-N item recommendation

Jul 18, 2017

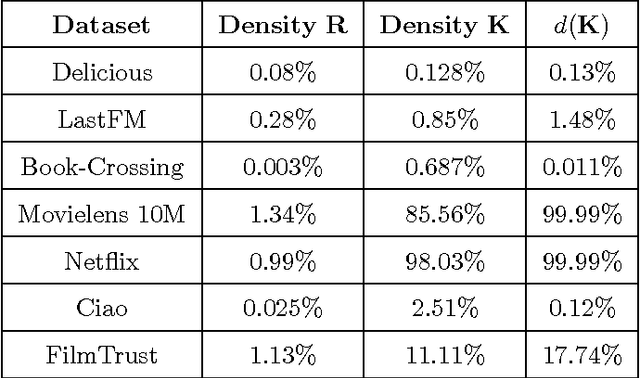

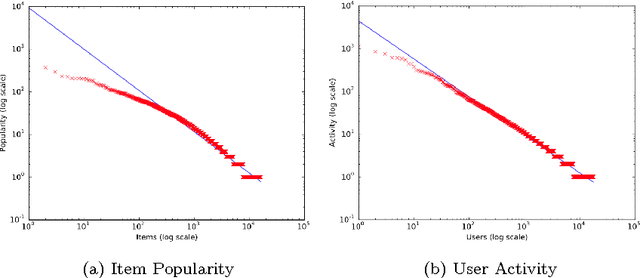

Abstract: In many personalized recommendation problems, the available data consists only of positive interactions (implicit feedback) between users and items. This problem is also known as One-Class Collaborative Filtering (OC-CF). Linear models usually achieve state-of-the-art performance on OC-CF problems, and many efforts have been devoted to building more expressive and complex representations able to improve the recommendations. Recent analyses show that collaborative filtering (CF) datasets have peculiar characteristics such as high sparsity and a long-tailed distribution of the ratings. In this paper we propose a boolean kernel, called the Disjunctive kernel, which is less expressive than the linear one but is able to alleviate the sparsity issue in CF contexts. The embedding of this kernel is composed of all the combinations of a certain arity d of the input variables, and these combined features are semantically interpreted as disjunctions of the input variables. Experiments on several CF datasets show the effectiveness and the efficiency of the proposed kernel.
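
To make the embedding described above concrete, the sketch below computes such a kernel for binary vectors in two ways. The brute-force version enumerates every combination of d input variables and counts those whose disjunction is true in both vectors. The closed form is not stated in the abstract; it is derived here by inclusion-exclusion and should be read as an assumption of this example, as should the function names.

```python
from itertools import combinations
from math import comb

def disjunctive_kernel_bruteforce(x, z, d):
    """Count the d-subsets of variables whose disjunction holds in both x and z."""
    total = 0
    for subset in combinations(range(len(x)), d):
        if any(x[i] for i in subset) and any(z[i] for i in subset):
            total += 1
    return total

def disjunctive_kernel(x, z, d):
    """Same count via inclusion-exclusion, without enumerating subsets.

    A disjunction over a subset is false exactly when the subset lies
    entirely inside the zero entries of the vector.
    """
    n = len(x)
    nx = sum(x)                               # number of ones in x
    nz = sum(z)                               # number of ones in z
    nxz = sum(a * b for a, b in zip(x, z))    # ones shared by x and z
    return (comb(n, d)
            - comb(n - nx, d)                 # disjunction false in x
            - comb(n - nz, d)                 # disjunction false in z
            + comb(n - nx - nz + nxz, d))     # false in both, added back

x = [1, 0, 1, 0, 0]
z = [0, 0, 1, 1, 0]
print(disjunctive_kernel_bruteforce(x, z, 2), disjunctive_kernel(x, z, 2))
```

Because the closed form depends only on the number of ones in each vector and on their dot product, it can be evaluated from a linear kernel matrix, which fits the abstract's emphasis on efficiency.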

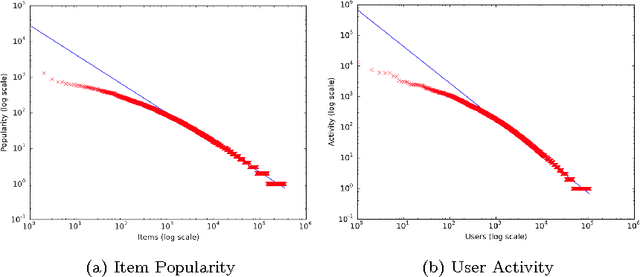

Exploiting sparsity to build efficient kernel based collaborative filtering for top-N item recommendation

Dec 17, 2016

Abstract: The increasing availability of implicit feedback datasets has raised the interest in developing effective collaborative filtering techniques able to deal asymmetrically with unambiguous positive feedback and ambiguous negative feedback. In this paper, we propose a principled kernel-based collaborative filtering method for top-N item recommendation with implicit feedback. We present an efficient implementation using the linear kernel, and we show how to generalize it to kernels of the dot product family while preserving the efficiency. We also investigate the elements that influence the sparsity of a standard cosine kernel. This analysis shows that the sparsity of the kernel strongly depends on the properties of the dataset, in particular on the long-tail distribution. We compare our method with state-of-the-art algorithms, achieving good results both in terms of efficiency and effectiveness.
