Interpretable preference learning: a game theoretic framework for large margin on-line feature and rule learning

Paper and Code

Dec 19, 2018

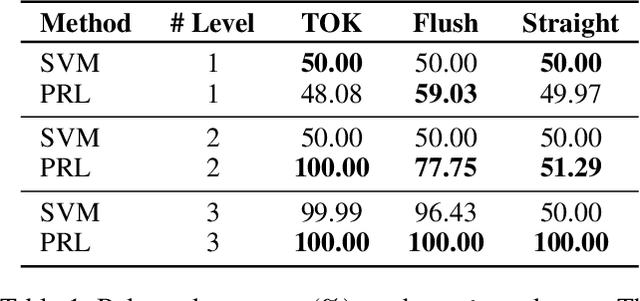

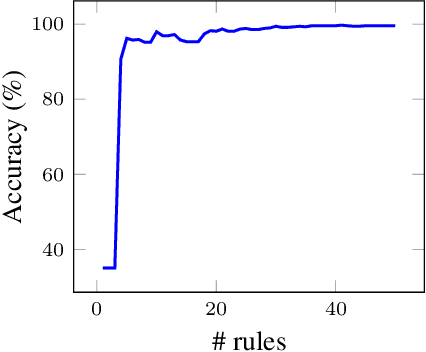

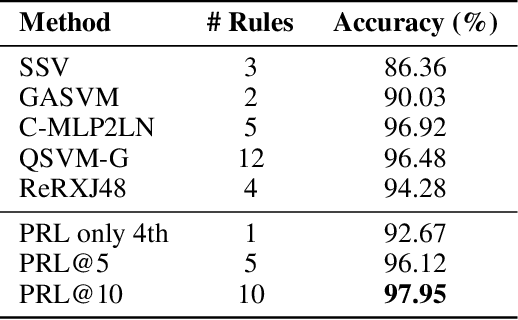

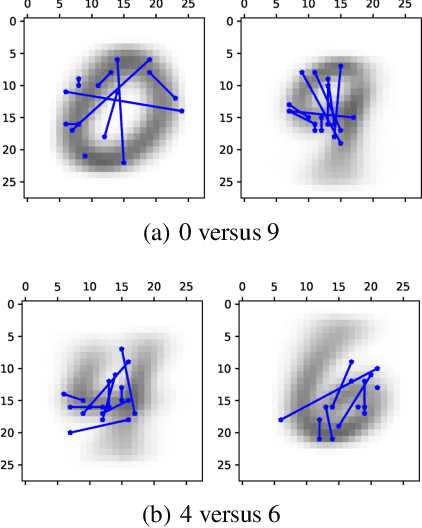

A large body of research is currently investigating on the connection between machine learning and game theory. In this work, game theory notions are injected into a preference learning framework. Specifically, a preference learning problem is seen as a two-players zero-sum game. An algorithm is proposed to incrementally include new useful features into the hypothesis. This can be particularly important when dealing with a very large number of potential features like, for instance, in relational learning and rule extraction. A game theoretical analysis is used to demonstrate the convergence of the algorithm. Furthermore, leveraging on the natural analogy between features and rules, the resulting models can be easily interpreted by humans. An extensive set of experiments on classification tasks shows the effectiveness of the proposed method in terms of interpretability and feature selection quality, with accuracy at the state-of-the-art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge