Novel Applications for VAE-based Anomaly Detection Systems

Paper and Code

Apr 26, 2022

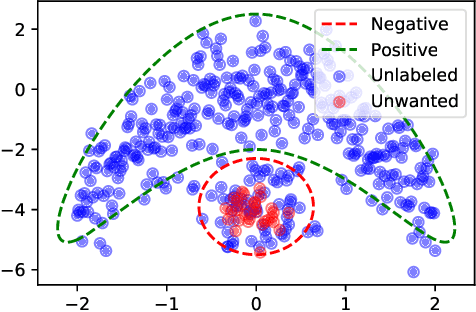

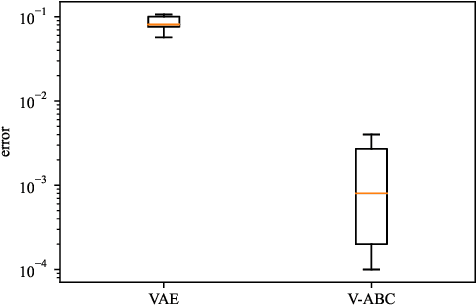

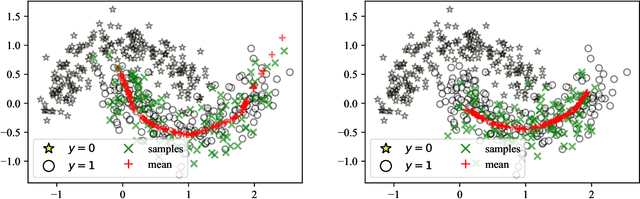

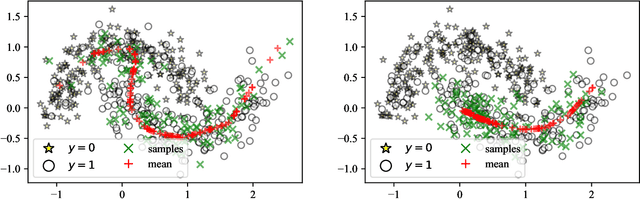

The recent rise in deep learning technologies fueled innovation and boosted scientific research. Their achievements enabled new research directions for deep generative modeling (DGM), an increasingly popular approach that can create novel and unseen data, starting from a given data set. As the technology shows promising applications, many ethical issues also arise. For example, their misuse can enable disinformation campaigns and powerful phishing attempts. Research also indicates different biases affect deep learning models, leading to social issues such as misrepresentation. In this work, we formulate a novel setting to deal with similar problems, showing that a repurposed anomaly detection system effectively generates novel data, avoiding generating specified unwanted data. We propose Variational Auto-encoding Binary Classifiers (V-ABC): a novel model that repurposes and extends the Auto-encoding Binary Classifier (ABC) anomaly detector, using the Variational Auto-encoder (VAE). We survey the limitations of existing approaches and explore many tools to show the model's inner workings in an interpretable way. This proposal has excellent potential for generative applications: models that rely on user-generated data could automatically filter out unwanted content, such as offensive language, obscene images, and misleading information.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge