Ermis Soumalias

The Pseudo-Dimension of Contracts

Jan 24, 2025

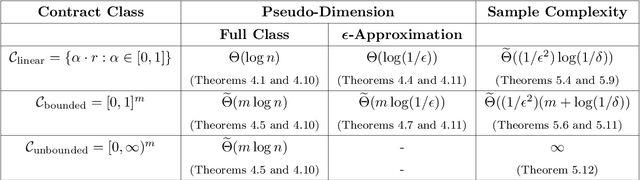

Abstract: Algorithmic contract design studies scenarios where a principal incentivizes an agent to exert effort on her behalf. In this work, we focus on settings where the agent's type is drawn from an unknown distribution, and formalize an offline learning framework for learning near-optimal contracts from sample agent types. A central tool in our analysis is the notion of pseudo-dimension from statistical learning theory. Beyond its role in establishing upper bounds on the sample complexity, pseudo-dimension measures the intrinsic complexity of a class of contracts, offering a new perspective on the tradeoffs between simplicity and optimality in contract design. Our main results provide essentially optimal tradeoffs between pseudo-dimension and representation error (defined as the loss in principal's utility) with respect to linear and bounded contracts. Using these tradeoffs, we derive sample- and time-efficient learning algorithms, and demonstrate their near-optimality by providing almost matching lower bounds on the sample complexity. Conversely, for unbounded contracts, we prove an impossibility result showing that no learning algorithm exists. Finally, we extend our techniques in three important ways. First, we provide refined pseudo-dimension and sample complexity guarantees for the combinatorial actions model, revealing a novel connection between the number of critical values and sample complexity. Second, we extend our results to menus of contracts, showing that their pseudo-dimension scales linearly with the menu size. Third, we adapt our algorithms to the online learning setting, where we show that a polynomial number of type samples suffice to learn near-optimal bounded contracts. Combined with prior work, this establishes a formal separation between expert advice and bandit feedback for this setting.
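To make the idea of learning a contract from sample agent types concrete, here is a minimal sketch of empirical utility maximization over linear contracts. It is not the paper's algorithm. It assumes a simplified model in which an agent type is a pair `(costs, rewards)` listing each action's cost and expected reward, a linear contract pays the agent a fraction `alpha` of the reward, and ties are broken in the principal's favor. All function names and the grid search are hypothetical and chosen only for illustration.

```python
def agent_best_action(alpha, costs, rewards):
    """Index of the action maximizing the agent's utility alpha * reward - cost.

    Ties are broken toward the higher expected reward (an assumption).
    """
    return max(
        range(len(costs)),
        key=lambda a: (alpha * rewards[a] - costs[a], rewards[a]),
    )


def principal_utility(alpha, agent_type):
    """Principal keeps a (1 - alpha) share of the reward of the chosen action."""
    costs, rewards = agent_type
    a = agent_best_action(alpha, costs, rewards)
    return (1 - alpha) * rewards[a]


def empirical_best_linear_contract(samples, grid_size=101):
    """Pick the alpha on a uniform grid over [0, 1] with the best sample average."""
    grid = [k / (grid_size - 1) for k in range(grid_size)]
    return max(
        grid,
        key=lambda alpha: sum(principal_utility(alpha, t) for t in samples)
        / len(samples),
    )


# One sampled type: action 0 is free and worthless, action 1 costs 1 and yields 4.
samples = [((0.0, 1.0), (0.0, 4.0))]
best_alpha = empirical_best_linear_contract(samples)
```

In this toy instance the agent switches to the productive action once `alpha` reaches 0.25, so the grid search selects that share. How many samples are needed for such an empirical choice to generalize is the question the pseudo-dimension analysis addresses.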

Prices, Bids, Values: Everything, Everywhere, All at Once

Nov 14, 2024

Abstract: We study the design of iterative combinatorial auctions (ICAs). The main challenge in this domain is that the bundle space grows exponentially in the number of items. To address this, several papers have recently proposed machine learning (ML)-based preference elicitation algorithms that aim to elicit only the most important information from bidders to maximize efficiency. The SOTA ML-based algorithms elicit bidders' preferences via value queries (i.e., "What is your value for the bundle $\{A,B\}$?"). However, the most popular iterative combinatorial auction in practice elicits information via more practical demand queries (i.e., "At prices $p$, what is your most preferred bundle of items?"). In this paper, we examine the advantages of value and demand queries from both an auction design and an ML perspective. We propose a novel ML algorithm that provably integrates the full information from both query types. As suggested by our theoretical analysis, our experimental results verify that combining demand and value queries results in significantly better learning performance. Building on these insights, we present MLHCA, the most efficient ICA ever designed. MLHCA substantially outperforms the previous SOTA in realistic auction settings, delivering large efficiency gains. Compared to the previous SOTA, MLHCA reduces efficiency loss by up to a factor of 10, and in the most challenging and realistic domain, MLHCA outperforms the previous SOTA using 30% fewer queries. Thus, MLHCA achieves efficiency improvements that translate to welfare gains of hundreds of millions of USD, while also reducing the cognitive load on the bidders, establishing a new benchmark both for practicability and for economic impact.
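The two query types can be illustrated with a small sketch. It assumes a bidder whose valuation is given explicitly as a table over bundles and who has quasi-linear utility (value minus the sum of item prices). The function names and the brute-force search over all bundles are hypothetical and serve only to show what each query asks; they are not MLHCA or any mechanism from the paper.

```python
from itertools import combinations


def all_bundles(items):
    """Yield every subset of items as a frozenset (exponential in len(items))."""
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def value_query(valuation, bundle):
    """Value query: 'What is your value for this bundle?'"""
    return valuation.get(frozenset(bundle), 0.0)


def demand_query(valuation, items, prices):
    """Demand query: 'At these prices, what is your most preferred bundle?'

    Assumes quasi-linear utility: value(bundle) minus the sum of item prices.
    """
    def utility(bundle):
        return value_query(valuation, bundle) - sum(prices[i] for i in bundle)

    return max(all_bundles(items), key=utility)


items = ["A", "B"]
valuation = {
    frozenset(): 0.0,
    frozenset({"A"}): 4.0,
    frozenset({"B"}): 3.0,
    frozenset({"A", "B"}): 10.0,  # the items are complements
}

cheap = demand_query(valuation, items, {"A": 3.0, "B": 5.0})
expensive = demand_query(valuation, items, {"A": 6.0, "B": 5.0})
```

At the lower prices the bidder demands both items, while at the higher prices she prefers the empty bundle. A value query reveals one exact number, whereas a demand query reveals that the reported bundle beats every other bundle at the quoted prices.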

Truthful Aggregation of LLMs with an Application to Online Advertising

May 09, 2024

Abstract: We address the challenge of aggregating the preferences of multiple agents over LLM-generated replies to user queries, where agents might modify or exaggerate their preferences. New agents may participate for each new query, making fine-tuning LLMs on these preferences impractical. To overcome these challenges, we propose an auction mechanism that operates without fine-tuning or access to model weights. This mechanism is designed to provably converge to the output of the optimally fine-tuned LLM as computational resources are increased. The mechanism can also incorporate contextual information about the agents when available, which significantly accelerates its convergence. A well-designed payment rule ensures that truthful reporting is the optimal strategy for all agents, while also promoting an equity property by aligning each agent's utility with her contribution to social welfare - an essential feature for the mechanism's long-term viability. While our approach can be applied whenever monetary transactions are permissible, our flagship application is in online advertising. In this context, advertisers try to steer LLM-generated responses towards their brand interests, while the platform aims to maximize advertiser value and ensure user satisfaction. Experimental results confirm that our mechanism not only converges efficiently to the optimally fine-tuned LLM but also significantly boosts advertiser value and platform revenue, all with minimal computational overhead.

Machine Learning-powered Combinatorial Clock Auction

Aug 20, 2023

Abstract: We study the design of iterative combinatorial auctions (ICAs). The main challenge in this domain is that the bundle space grows exponentially in the number of items. To address this, several papers have recently proposed machine learning (ML)-based preference elicitation algorithms that aim to elicit only the most important information from bidders. However, from a practical point of view, the main shortcoming of this prior work is that those designs elicit bidders' preferences via value queries (i.e., "What is your value for the bundle $\{A,B\}$?"). In most real-world ICA domains, value queries are considered impractical, since they impose an unrealistically high cognitive burden on bidders, which is why they are not used in practice. In this paper, we address this shortcoming by designing an ML-powered combinatorial clock auction that elicits information from the bidders only via demand queries (i.e., "At prices $p$, what is your most preferred bundle of items?"). We make two key technical contributions: First, we present a novel method for training an ML model on demand queries. Second, based on those trained ML models, we introduce an efficient method for determining the demand query with the highest clearing potential, for which we also provide a theoretical foundation. We experimentally evaluate our ML-based demand query mechanism in several spectrum auction domains and compare it against the most established real-world ICA: the combinatorial clock auction (CCA). Our mechanism significantly outperforms the CCA in terms of efficiency in all domains, it achieves higher efficiency in a significantly reduced number of rounds, and, using linear prices, it exhibits vastly higher clearing potential. Thus, with this paper we bridge the gap between research and practice and propose the first practical ML-powered ICA.

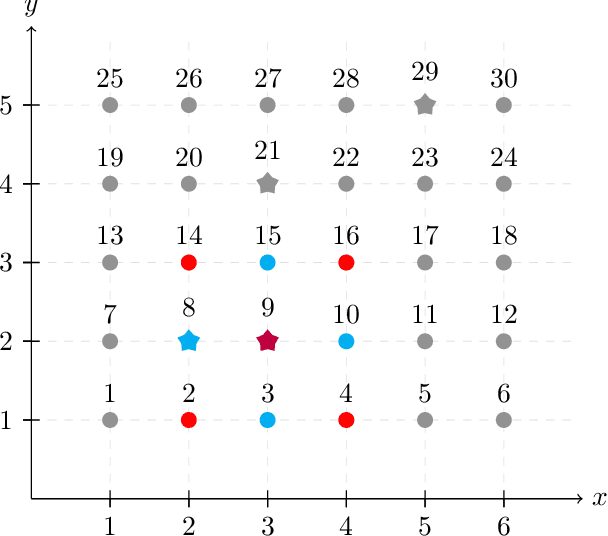

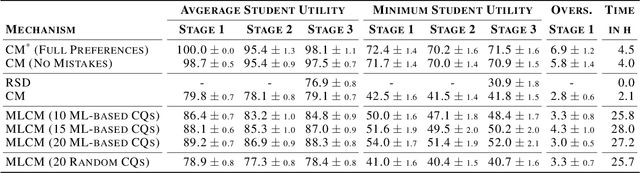

Machine Learning-powered Course Allocation

Oct 03, 2022

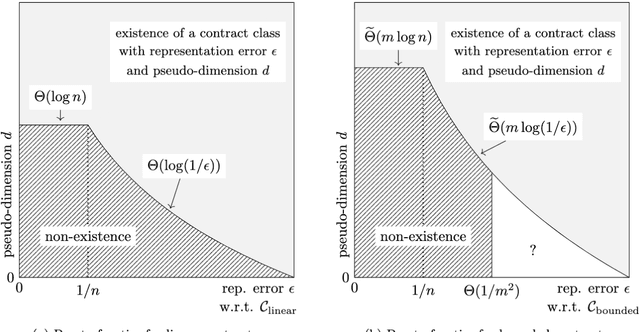

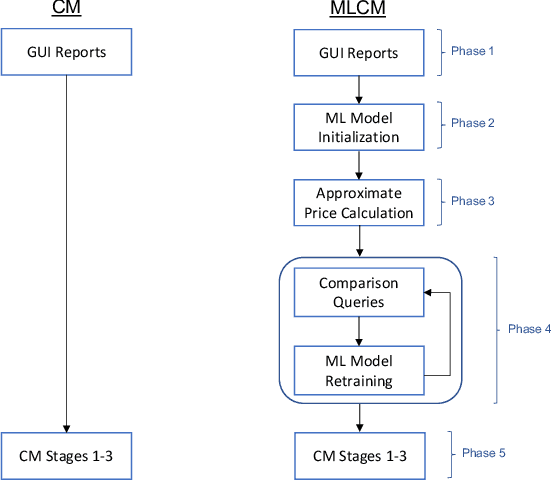

Abstract: We introduce a machine learning-powered course allocation mechanism. Concretely, we extend the state-of-the-art Course Match mechanism with a machine learning-based preference elicitation module. In an iterative, asynchronous manner, this module generates pairwise comparison queries that are tailored to each individual student. Regarding incentives, our machine learning-powered course match (MLCM) mechanism retains the attractive strategyproofness in the large property of Course Match. Regarding welfare, we perform computational experiments using a simulator that was fitted to real-world data. We find that, compared to Course Match, MLCM is able to increase average student utility by 4%-9% and minimum student utility by 10%-21%, even with only ten comparison queries.
