Erik Elmroth

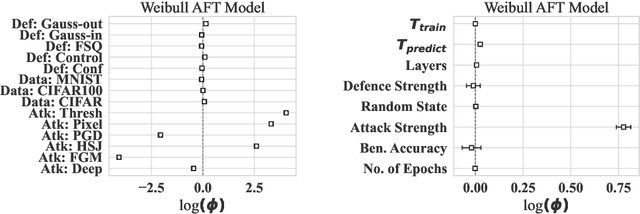

A Cost-Aware Approach to Adversarial Robustness in Neural Networks

Sep 11, 2024

Abstract: Considering the growing prominence of production-level AI and the threat of adversarial attacks that can evade a model at run-time, evaluating the robustness of models to these evasion attacks is of critical importance. Additionally, testing model changes likely means deploying the models to real-world systems (e.g. a car, a medical imaging device, or a drone) to see how they affect performance, making un-tested changes a public problem that reduces development speed, increases cost of development, and makes it difficult (if not impossible) to parse cause from effect. In this work, we use survival analysis as a cloud-native, time-efficient and precise method for predicting model performance in the presence of adversarial noise. For neural networks in particular, the relationships between the learning rate, batch size, training time, convergence time, and deployment cost are highly complex, so researchers generally rely on benchmark datasets to assess the ability of a model to generalize beyond the training data. To address this, we propose using accelerated failure time models to measure the effect of hardware choice, batch size, number of epochs, and test-set accuracy by using adversarial attacks to induce failures on a reference model architecture before deploying the model to the real world. We evaluate several GPU types and use the Tree Parzen Estimator to maximize model robustness and minimize model run-time simultaneously. This provides a way to evaluate the model and optimise it in a single step, while simultaneously allowing us to model the effect of model parameters on training time, prediction time, and accuracy. Using this technique, we demonstrate that newer, more powerful hardware does decrease the training time, but with a monetary and power cost that far outpaces the marginal gains in accuracy.
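As a rough illustration of the accelerated failure time idea mentioned in this abstract, the sketch below uses a Weibull form in which covariates rescale the time axis. The Weibull choice, the covariate names (`log_batch_size`, `epochs`), and every coefficient value are assumptions made for illustration; none of them come from the paper.

```python
import math

def weibull_aft_survival(t, covariates, coefficients, intercept, shape):
    """Survival probability S(t | x) under a Weibull AFT model.

    The time scale is exp(intercept + sum(beta_i * x_i)), so a positive
    coefficient stretches time and delays the expected failure.
    """
    linear = intercept + sum(
        coefficients[name] * value for name, value in covariates.items()
    )
    scale = math.exp(linear)
    return math.exp(-((t / scale) ** shape))

# Hypothetical coefficients (illustrative values only, not fitted to data).
coefficients = {"log_batch_size": -0.10, "epochs": 0.02}
small_run = {"log_batch_size": math.log(32), "epochs": 10}
long_run = {"log_batch_size": math.log(32), "epochs": 50}

# Probability that the model has not yet failed after one unit of attack time.
s_small = weibull_aft_survival(1.0, small_run, coefficients, intercept=0.5, shape=1.5)
s_long = weibull_aft_survival(1.0, long_run, coefficients, intercept=0.5, shape=1.5)
```

With these made-up coefficients, the run with more epochs has the higher survival probability at the same attack time. In a real study the coefficients would be estimated from observed failure times rather than chosen by hand.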

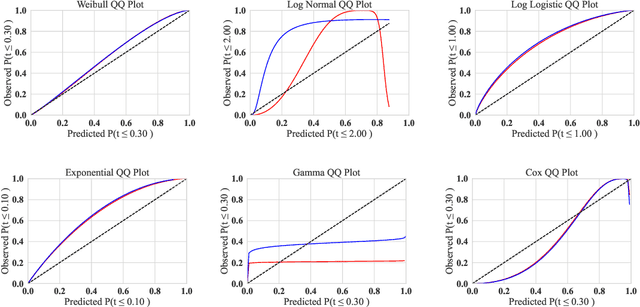

A Systematic Approach to Robustness Modelling for Deep Convolutional Neural Networks

Jan 24, 2024

Abstract: Convolutional neural networks have been shown to be widely applicable to a large number of fields when large amounts of labelled data are available. The recent trend has been to use models with increasingly larger sets of tunable parameters to increase model accuracy, reduce model loss, or create more adversarially robust models -- goals that are often at odds with one another. In particular, recent theoretical work raises questions about the ability of even larger models to generalize to data outside of the controlled train and test sets. As such, we examine the role of the number of hidden layers in the ResNet model, demonstrated on the MNIST, CIFAR10, and CIFAR100 datasets. We test a variety of parameters including the size of the model, the floating point precision, and the noise level of both the training data and the model output. To encapsulate the model's predictive power and computational cost, we provide a method that uses induced failures to model the probability of failure as a function of time and relate that to a novel metric that allows us to quickly determine whether or not the cost of training a model outweighs the cost of attacking it. Using this approach, we are able to approximate the expected failure rate using a small number of specially crafted samples rather than increasingly larger benchmark datasets. We demonstrate the efficacy of this technique on both the MNIST and CIFAR10 datasets using 8-, 16-, 32-, and 64-bit floating-point numbers, various data pre-processing techniques, and several attacks on five configurations of the ResNet model. Then, using empirical measurements, we examine the various trade-offs between cost, robustness, latency, and reliability to find that larger models do not significantly aid in adversarial robustness despite costing significantly more to train.
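To make the notion of an expected failure rate estimated from a few attacked samples more concrete, here is a minimal sketch. It assumes a constant failure rate (an exponential survival model), which is a simplification chosen for illustration and not necessarily the model used in the paper. The function name and the sample data are hypothetical.

```python
def exponential_failure_rate(durations, failed):
    """Maximum-likelihood estimate of a constant failure rate.

    durations: attack time spent on each sample.
    failed: True if the attack induced a misclassification, False if the
            sample was still classified correctly when the attack stopped
            (a right-censored observation).
    """
    if len(durations) != len(failed):
        raise ValueError("durations and failed must have the same length")
    total_time = sum(durations)
    if total_time <= 0:
        raise ValueError("total observed time must be positive")
    # Censored samples contribute exposure time but no failure event.
    return sum(1 for f in failed if f) / total_time

# Hypothetical observations: two induced failures, two censored samples.
durations = [0.5, 1.2, 2.0, 2.0]
failed = [True, True, False, False]
rate = exponential_failure_rate(durations, failed)
```

The estimate is the number of observed failures divided by the total attack time, so samples that survive the attack still lower the estimated rate.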

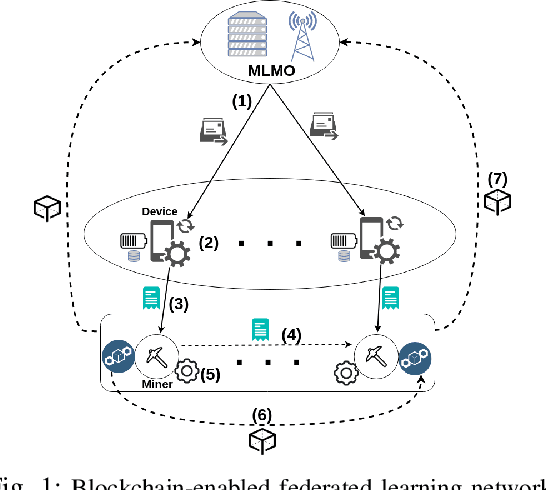

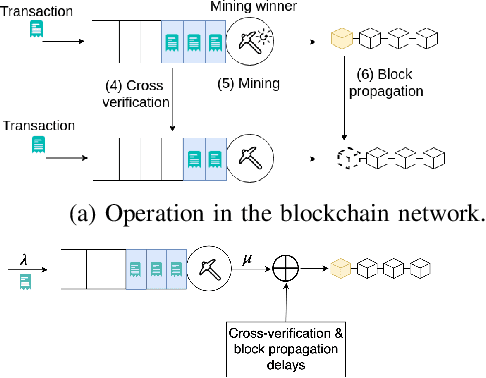

Resource Management for Blockchain-enabled Federated Learning: A Deep Reinforcement Learning Approach

May 01, 2020

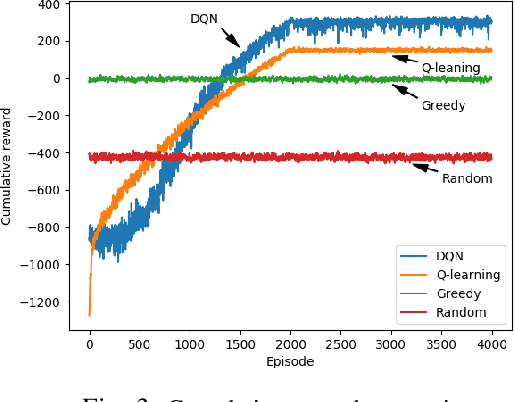

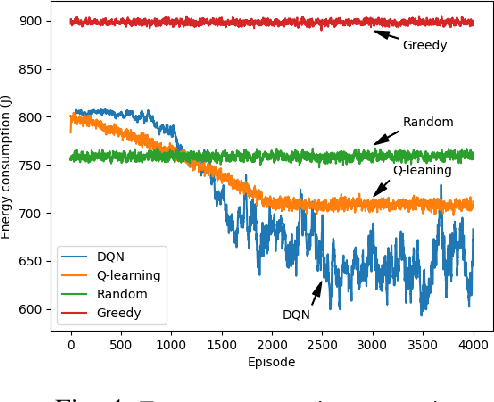

Abstract: Blockchain-enabled Federated Learning (BFL) enables mobile devices to collaboratively train neural network models required by a Machine Learning Model Owner (MLMO) while keeping data on the mobile devices. The model updates are then stored in the blockchain in a decentralized and reliable manner. However, one issue with BFL is that the mobile devices have energy and CPU constraints that may reduce the system lifetime and training efficiency. Another issue is that the training latency may increase due to the blockchain mining process. To address these issues, the MLMO needs to (i) decide how much data and energy the mobile devices use for training and (ii) determine the block generation rate to minimize the system latency, energy consumption, and incentive cost while achieving the target accuracy for the model. Under the uncertainty of the BFL environment, it is challenging for the MLMO to determine the optimal decisions. We propose to use Deep Reinforcement Learning (DRL) to derive the optimal decisions for the MLMO.
