Erfan Pakdamanian

DeepTake: Prediction of Driver Takeover Behavior using Multimodal Data

Jan 15, 2021

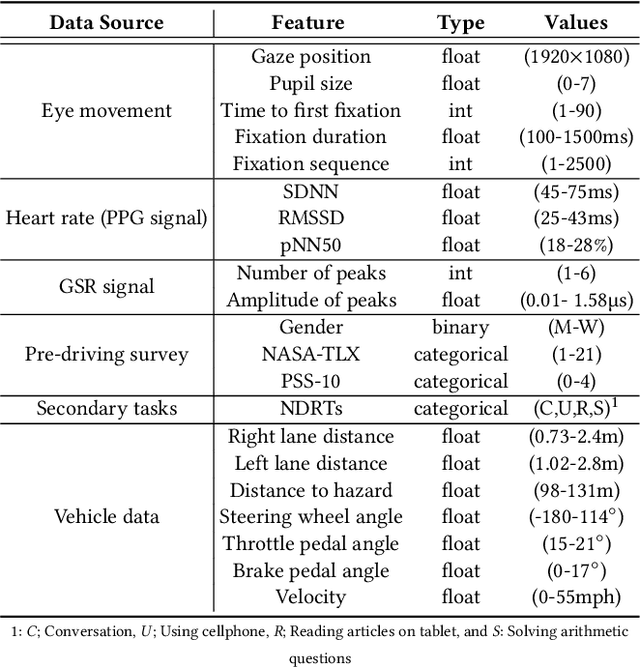

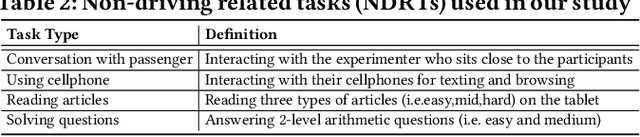

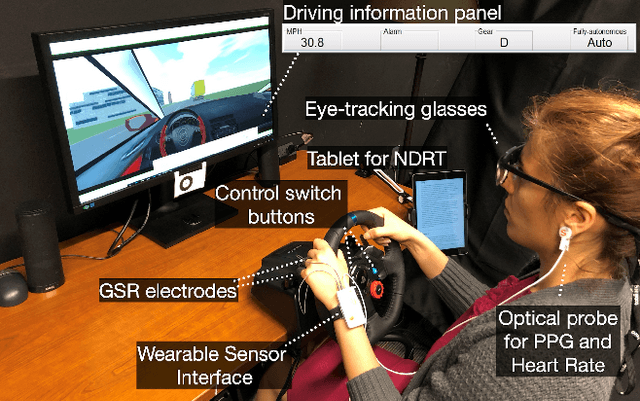

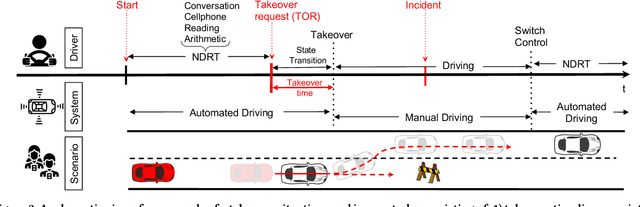

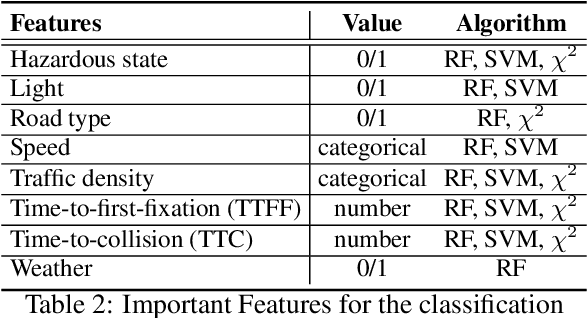

Abstract: Automated vehicles promise a future where drivers can engage in non-driving tasks without their hands on the steering wheel for a prolonged period. Nevertheless, automated vehicles may still need to occasionally hand control back to drivers due to technology limitations and legal requirements. While some systems use driver context and road conditions to determine when to initiate a takeover request, studies show that the driver may not react to it. We present DeepTake, a novel deep neural network-based framework that predicts multiple aspects of takeover behavior to ensure that the driver is able to safely take over control when engaged in non-driving tasks. Using features from vehicle data, driver biometrics, and subjective measurements, DeepTake predicts the driver's intention, time, and quality of takeover. We evaluate DeepTake's performance using multiple evaluation metrics. Results show that DeepTake reliably predicts the takeover intention, time, and quality, with an accuracy of 96%, 93%, and 83%, respectively. Results also indicate that DeepTake outperforms previous state-of-the-art methods on predicting driver takeover time and quality. Our findings have implications for the algorithm development of driver monitoring and state detection.

EyeCar: Modeling the Visual Attention Allocation of Drivers in Semi-Autonomous Vehicles

Dec 24, 2019

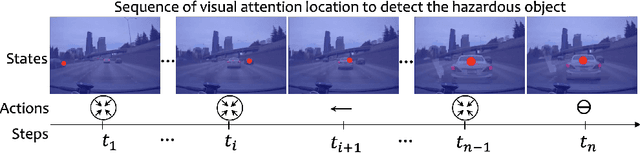

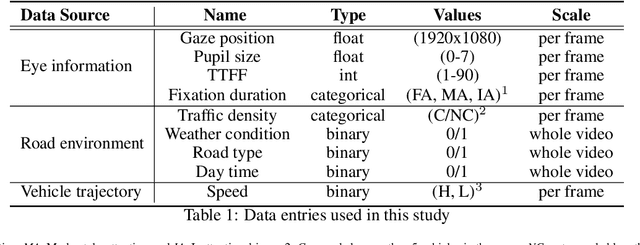

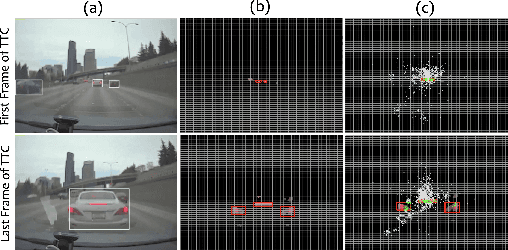

Abstract: A safe transition between autonomous and manual control requires sustained visual attention of the driver for the perception and assessment of hazards in dynamic driving environments. Thus, drivers must retain a certain level of situation awareness to safely take over. Understanding the visual attention allocation of drivers can pave the way for inferring their dynamic state of situation awareness. We propose a reinforcement and inverse-reinforcement learning framework for modeling passive drivers' visual attention allocation in semi-autonomous vehicles. The proposed approach measures the eye movements of passive drivers to evaluate their responses to real-world rear-end collisions. The results show substantial individual differences in eye fixation patterns by driving experience, even among fully attentive drivers. Experienced drivers were more attentive to the situational dynamics and were able to identify potentially hazardous objects before any collisions occurred. These models of visual attention could potentially be integrated into autonomous systems to continuously monitor and guide effective intervention.

Keywords: Visual attention allocation; Situation awareness; Eye movements; Eye fixation; Eye-Tracking; Reinforcement Learning; Inverse Reinforcement Learning
