Enrico Calabrese

Instantaneous Stereo Depth Estimation of Real-World Stimuli with a Neuromorphic Stereo-Vision Setup

Apr 06, 2021

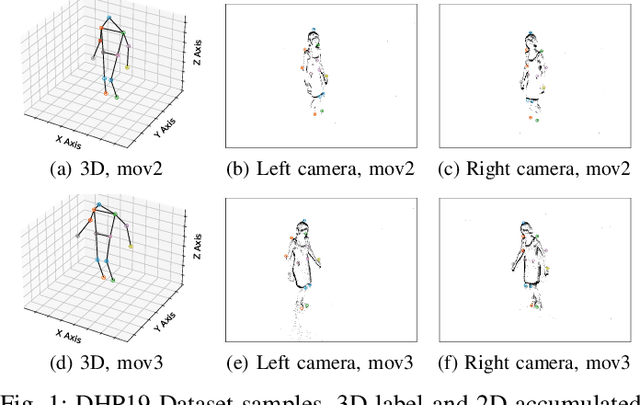

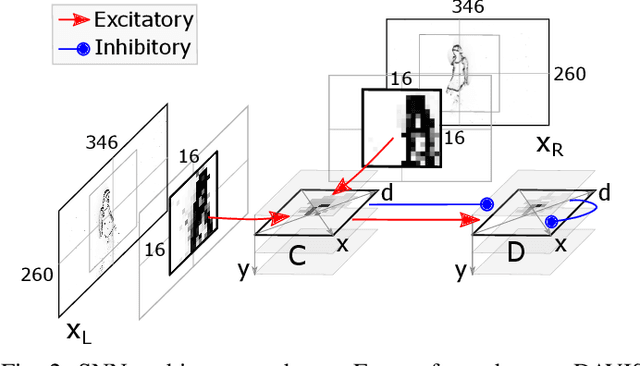

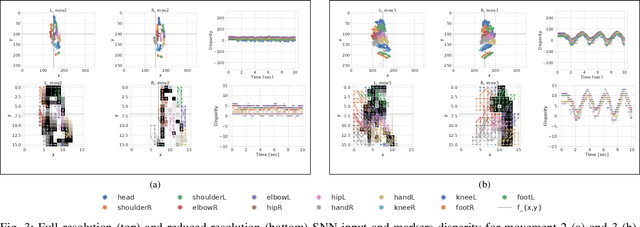

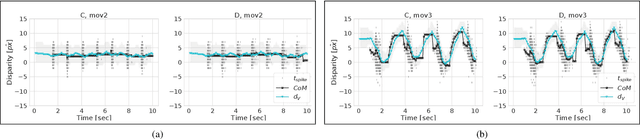

Abstract: The stereo-matching problem, i.e., matching corresponding features in two different views to reconstruct depth, is efficiently solved in biology. Yet, it remains the computational bottleneck for classical machine vision approaches. By exploiting the properties of event cameras, recently proposed Spiking Neural Network (SNN) architectures for stereo vision have the potential to simplify the stereo-matching problem. Several solutions that combine event cameras with spike-based neuromorphic processors already exist. However, they are either simulated on digital hardware or tested on simplified stimuli. In this work, we use the Dynamic Vision Sensor 3D Human Pose Dataset (DHP19) to validate, with real-world data, a brain-inspired event-based stereo-matching architecture implemented on a mixed-signal neuromorphic processor. Our experiments show that this SNN architecture, composed of coincidence detectors and disparity-sensitive neurons, is able to provide a coarse estimate of the input disparity instantaneously, thereby detecting the presence of a stimulus moving in depth in real time.
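The idea of coincidence detectors feeding disparity-sensitive neurons can be illustrated with a small, non-spiking toy in Python. This is only a sketch under stated assumptions, not the architecture implemented on the neuromorphic processor: the event format `(t_us, x, y)`, the function name `estimate_disparity`, and the parameters `window_us` and `max_disparity` are all hypothetical. A left/right event pair on the same row counts as a coincidence when the two timestamps fall within the window, and each coincidence votes for the disparity `x_left - x_right`.

```python
from collections import Counter


def estimate_disparity(left_events, right_events, window_us=1000, max_disparity=8):
    """Toy coincidence-based disparity estimate (illustrative assumption only).

    Each event is a tuple (t_us, x, y). Returns (best_disparity, votes),
    where votes maps each candidate disparity to its coincidence count.
    """
    votes = Counter()
    for t_left, x_left, y_left in left_events:
        for t_right, x_right, y_right in right_events:
            disparity = x_left - x_right
            same_row = y_left == y_right
            coincident = abs(t_left - t_right) <= window_us
            in_range = 0 <= disparity <= max_disparity
            # A "coincidence detector" responds only to near-simultaneous
            # events on the same row; its response is pooled per disparity.
            if same_row and coincident and in_range:
                votes[disparity] += 1
    best = max(votes, key=votes.get) if votes else None
    return best, votes


# Synthetic edge moving along row 5, seen 3 pixels further right in the left view.
left = [(k * 2000, k + 3, 5) for k in range(10)]
right = [(k * 2000 + 100, k, 5) for k in range(10)]
best, votes = estimate_disparity(left, right)
print(best, dict(votes))
```

The brute-force pairing is quadratic in the number of events and is meant only to make the voting scheme explicit; a spiking implementation would replace the counters with neuron dynamics.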

NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps

Mar 06, 2018

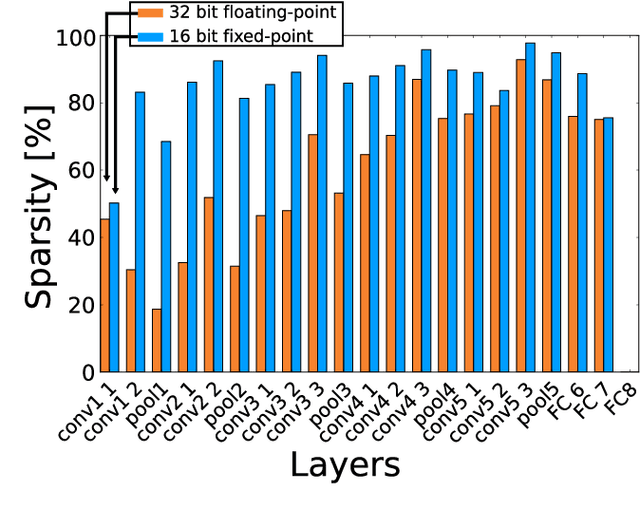

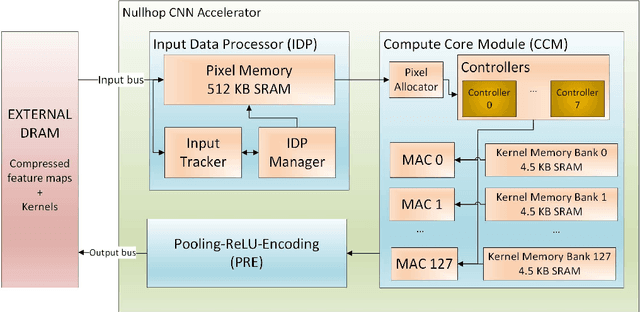

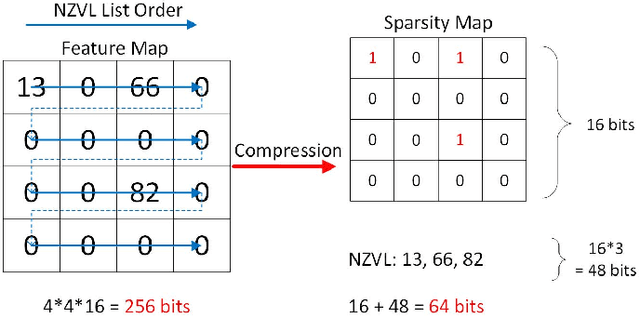

Abstract: Convolutional neural networks (CNNs) have become the dominant neural network architecture for solving many state-of-the-art (SOA) visual processing tasks. Even though Graphics Processing Units (GPUs) are most often used in training and deploying CNNs, their power efficiency is less than 10 GOp/s/W for single-frame runtime inference. We propose a flexible and efficient CNN accelerator architecture called NullHop that implements SOA CNNs useful for low-power and low-latency application scenarios. NullHop exploits the sparsity of neuron activations in CNNs to accelerate the computation and reduce memory requirements. The flexible architecture allows high utilization of available computing resources across kernel sizes ranging from 1x1 to 7x7. NullHop can process up to 128 input and 128 output feature maps per layer in a single pass. We implemented the proposed architecture on a Xilinx Zynq FPGA platform and present results showing how our implementation reduces external memory transfers and compute time in five different CNNs ranging from small ones up to the widely known large VGG16 and VGG19 CNNs. Post-synthesis simulations using Mentor Modelsim in a 28 nm process with a clock frequency of 500 MHz show that the VGG19 network achieves over 450 GOp/s. By exploiting sparsity, NullHop achieves an efficiency of 368%, maintains over 98% utilization of the MAC units, and achieves a power efficiency of over 3 TOp/s/W in a core area of 6.3 mm$^2$. As further proof of NullHop's usability, we interfaced its FPGA implementation with a neuromorphic event camera for real-time interactive demonstrations.
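To make the sparsity argument concrete, the following NumPy sketch shows one way zero activations can reduce both storage and multiply-accumulate (MAC) work. It is an illustration under assumptions, not NullHop's actual encoding or datapath: the mask-plus-values format and the function names `encode_sparse`, `decode_sparse`, and `conv2d_zero_skip` are hypothetical.

```python
import numpy as np


def encode_sparse(fmap):
    """Split a feature map into a boolean mask and its nonzero values (raster order)."""
    mask = fmap != 0
    return mask, fmap[mask]


def decode_sparse(mask, values):
    """Rebuild the dense feature map from the mask and the nonzero values."""
    out = np.zeros(mask.shape, dtype=values.dtype)
    out[mask] = values
    return out


def conv2d_zero_skip(fmap, kernel):
    """Valid-mode 2D correlation that visits nonzero input pixels only.

    Returns (output, macs), where macs counts the multiply-accumulates performed.
    """
    height, width = fmap.shape
    kh, kw = kernel.shape
    out = np.zeros((height - kh + 1, width - kw + 1), dtype=np.float64)
    macs = 0
    for y, x in zip(*np.nonzero(fmap)):
        value = fmap[y, x]
        # Scatter this input pixel to every output position it contributes to.
        for i in range(kh):
            for j in range(kw):
                oy, ox = y - i, x - j
                if 0 <= oy < out.shape[0] and 0 <= ox < out.shape[1]:
                    out[oy, ox] += value * kernel[i, j]
                    macs += 1
    return out, macs


rng = np.random.default_rng(0)
fmap = np.maximum(rng.standard_normal((8, 8)), 0.0)  # ReLU output, many zeros
kernel = rng.standard_normal((3, 3))
out, macs = conv2d_zero_skip(fmap, kernel)
dense_macs = out.size * kernel.size
print(f"MACs performed: {macs} of {dense_macs} dense MACs")
```

Because only nonzero activations are stored and visited, the work scales with the number of nonzero pixels rather than with the full feature-map size, which is the general effect the abstract attributes to exploiting activation sparsity.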
