Emil Keyder

Object-centered image stitching

Nov 23, 2020

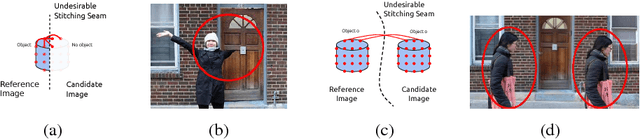

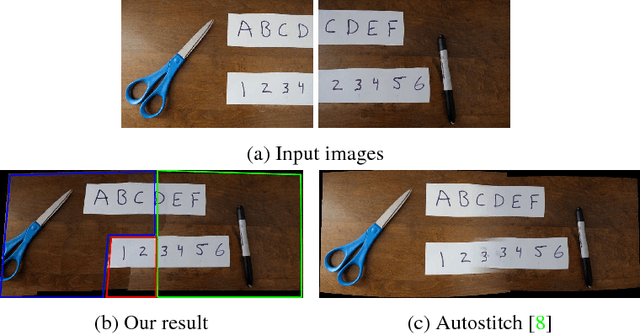

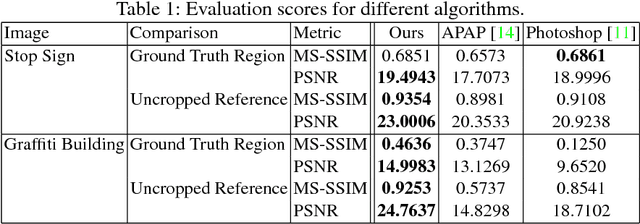

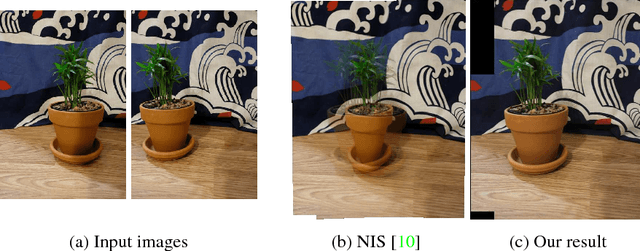

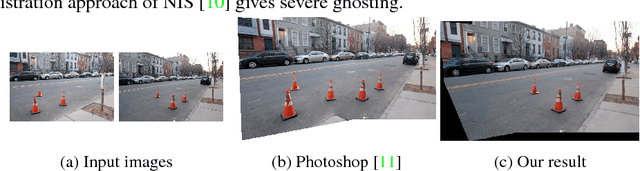

Abstract: Image stitching is typically decomposed into three phases: registration, which aligns the source images with a common target image; seam finding, which determines for each target pixel the source image it should come from; and blending, which smooths transitions over the seams. As described in [1], the seam finding phase attempts to place seams between pixels where the transition between source images is not noticeable. Here, we observe that the most problematic failures of this approach occur when objects are cropped, omitted, or duplicated. We therefore take an object-centered approach to the problem, leveraging recent advances in object detection [2,3,4]. We penalize candidate solutions with this class of error by modifying the energy function used in the seam finding stage. This produces substantially more realistic stitching results on challenging imagery. In addition, these methods can be used to determine when there is non-recoverable occlusion in the input data, and they also suggest a simple metric for evaluating the output of stitching algorithms.
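The idea of adding an object term to the seam-finding energy can be illustrated with a toy example. The following is a minimal sketch, not the paper's actual energy function: the names `seam_cost`, `cropped_object_cost`, and `energy`, the 1D pixel strip, and the flat per-object `weight` are all assumptions made for illustration, and only the "cropped" case is modeled (omission and duplication would need object correspondences across images).

```python
def seam_cost(labels, images, neighbors):
    """Sum intensity disagreement across every seam (neighbors with different labels)."""
    cost = 0.0
    for p, q in neighbors:
        a, b = labels[p], labels[q]
        if a != b:
            # A seam is cheap when both sources agree at both pixels it separates.
            cost += abs(images[a][p] - images[b][p]) + abs(images[a][q] - images[b][q])
    return cost


def cropped_object_cost(labels, objects, weight):
    """Charge `weight` for each detected object that is only partially taken from its source."""
    cost = 0.0
    for source, pixels in objects:
        kept = sum(1 for p in pixels if labels[p] == source)
        if 0 < kept < len(pixels):  # some, but not all, pixels used: the object is cropped
            cost += weight
    return cost


def energy(labels, images, neighbors, objects, weight):
    return seam_cost(labels, images, neighbors) + cropped_object_cost(labels, objects, weight)


# Hypothetical data: two registered 1D "images" over six pixels.
images = [
    [10, 10, 10, 50, 50, 50],
    [10, 10, 10, 50, 53, 50],
]
neighbors = [(i, i + 1) for i in range(5)]
# Hypothetical detection: an object in source image 0 covering pixels 2 and 3.
objects = [(0, {2, 3})]

cut_through = [0, 0, 0, 1, 1, 1]  # seam passes through the object
cut_around = [0, 0, 0, 0, 1, 1]   # seam passes just after the object
```

With `weight=0.0` the seam through the object is the cheaper labeling (0.0 versus 3.0), because the two sources happen to agree there. With `weight=5.0` the ordering flips (5.0 versus 3.0), so a minimizer would route the seam around the object.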

Robust image stitching with multiple registrations

Nov 23, 2020

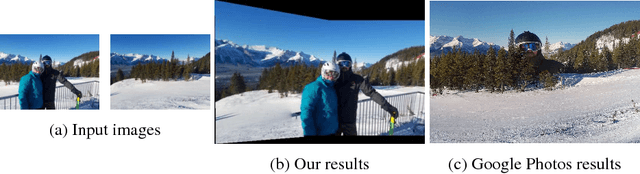

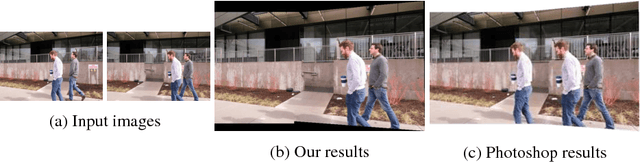

Abstract: Panorama creation is one of the most widely deployed techniques in computer vision. In addition to industry applications such as Google Street View, it is also used by millions of consumers in smartphones and other cameras. Traditionally, the problem is decomposed into three phases: registration, which picks a single transformation of each source image to align it to the other inputs; seam finding, which selects a source image for each pixel in the final result; and blending, which fixes minor visual artifacts. Here, we observe that the use of a single registration often leads to errors, especially in scenes with significant depth variation or object motion. We propose instead the use of multiple registrations, permitting regions of the image at different depths to be captured with greater accuracy. MRF inference techniques naturally extend to seam finding over multiple registrations, and we show here that their energy functions can be readily modified with new terms that discourage duplication and tearing, common problems that are exacerbated by the use of multiple registrations. Our techniques are closely related to layer-based stereo, and move image stitching closer to explicit scene modeling. Experimental evidence demonstrates that our techniques often generate significantly better panoramas when there is substantial motion or parallax.
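One way to read "seam finding over multiple registrations" is that each pixel's label becomes an (image, registration) pair rather than just an image. The sketch below is a toy under stated assumptions, not the paper's method: `best_labeling` is a hypothetical brute-force minimizer over a four-pixel strip, the unary costs are invented misalignment scores, the pairwise term is a plain Potts penalty, and the duplication and tearing terms mentioned in the abstract are not modeled.

```python
from itertools import product


def best_labeling(unary, pairwise_weight):
    """Exhaustively minimize unary + Potts energy over a 1D strip of pixels.

    unary[p][label] is the cost of assigning `label` to pixel p, where a label
    is an (image, registration) pair. Only feasible for tiny examples.
    """
    labels = sorted(unary[0])
    n = len(unary)
    best = None
    for assignment in product(labels, repeat=n):
        cost = sum(unary[p][assignment[p]] for p in range(n))
        # Potts smoothness: pay once for every label change between neighbors.
        cost += pairwise_weight * sum(
            1 for p in range(n - 1) if assignment[p] != assignment[p + 1]
        )
        if best is None or cost < best[0]:
            best = (cost, assignment)
    return best


# Hypothetical costs: image "A" has two registrations, one that aligns the
# left part of the strip well (index 0) and one that aligns the right part
# well (index 1). Image "B" has a single, mediocre registration.
left = {("A", 0): 0.0, ("A", 1): 5.0, ("B", 0): 3.0}
right = {("A", 0): 5.0, ("A", 1): 0.0, ("B", 0): 3.0}
unary = [left, left, right, right]

cost, assignment = best_labeling(unary, pairwise_weight=1.0)
```

In this toy, the cheapest labeling uses both registrations of image "A", switching between them once in the middle of the strip. A labeling restricted to a single registration per image would cost at least 10.0.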

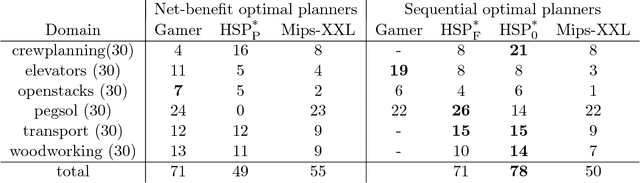

Soft Goals Can Be Compiled Away

Jan 15, 2014

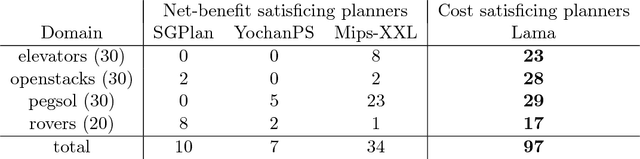

Abstract: Soft goals extend the classical model of planning with a simple model of preferences. The best plans are then not the ones with least cost but the ones with maximum utility, where the utility of a plan is the sum of the utilities of the soft goals achieved minus the plan cost. Finding plans with high utility appears to involve two linked problems: choosing a subset of soft goals to achieve and finding a low-cost plan to achieve them. New search algorithms and heuristics have been developed for planning with soft goals, and a new track has been introduced in the International Planning Competition (IPC) to test their performance. In this note, we show, however, that these extensions are not needed: soft goals do not increase the expressive power of the basic model of planning with action costs, as they can easily be compiled away. We apply this compilation to the problems of the net-benefit track of the most recent IPC, and show that optimal and satisficing cost-based planners do better on the compiled problems than optimal and satisficing net-benefit planners on the original problems with explicit soft goals. Furthermore, we show that penalties, or negative preferences expressing conditions to avoid, can also be compiled away using a similar idea.
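The abstract does not spell out the compilation, but the arithmetic behind this kind of reduction can be sketched. Assume that, in the compiled cost-only problem, a plan pays the utility of every soft goal it leaves unachieved; whether this matches the paper's exact construction is an assumption. Under that assumption, compiled cost equals total available utility minus net benefit, so minimizing one maximizes the other. The function names and the example plans below are hypothetical.

```python
def net_benefit(plan_cost, achieved, utilities):
    """Utility of a plan: utilities of the soft goals achieved minus the plan cost."""
    return sum(utilities[g] for g in achieved) - plan_cost


def compiled_cost(plan_cost, achieved, utilities):
    """Cost in the cost-only problem: plan cost plus the utility of each forgone soft goal."""
    forgone = set(utilities) - set(achieved)
    return plan_cost + sum(utilities[g] for g in forgone)


# Hypothetical soft goals and candidate plans: (action cost, soft goals achieved).
utilities = {"photo": 10, "sample": 4}
plans = {
    "do_nothing": (0, set()),
    "take_photo": (3, {"photo"}),
    "do_both": (9, {"photo", "sample"}),
}

total_utility = sum(utilities.values())
best_by_utility = max(plans, key=lambda name: net_benefit(*plans[name], utilities))
best_by_cost = min(plans, key=lambda name: compiled_cost(*plans[name], utilities))
```

Here both criteria select `take_photo`, and for every plan the compiled cost is `total_utility` minus its net benefit, which is why a cost-minimizing planner can stand in for a net-benefit planner in this toy setting.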
