Elvira Zainulina

Self-supervised Physics-based Denoising for Computed Tomography

Nov 01, 2022

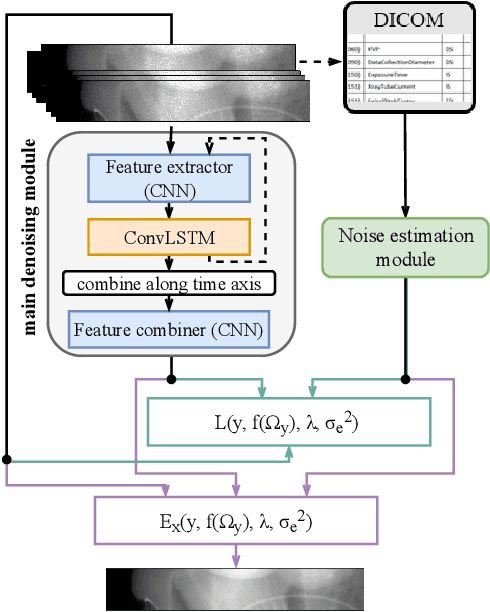

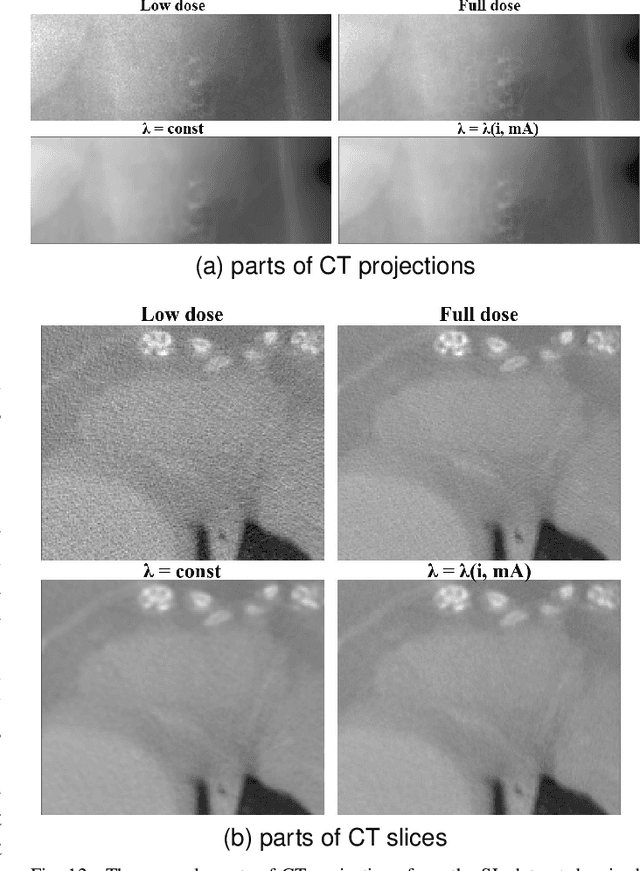

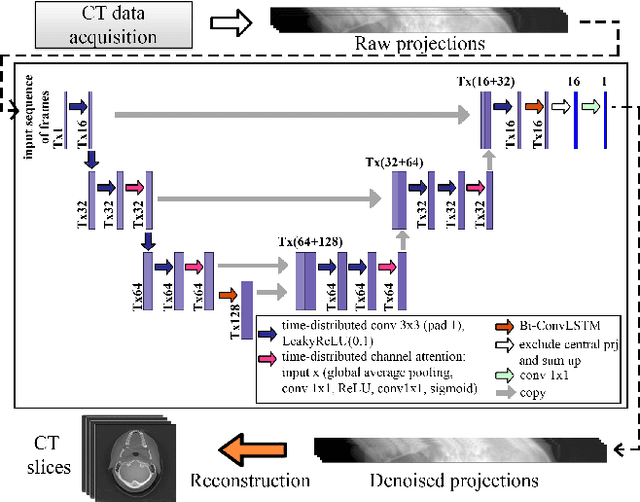

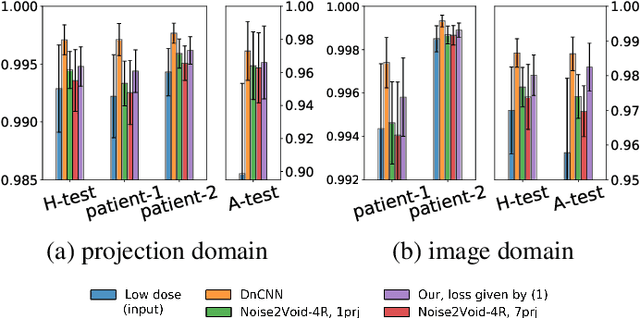

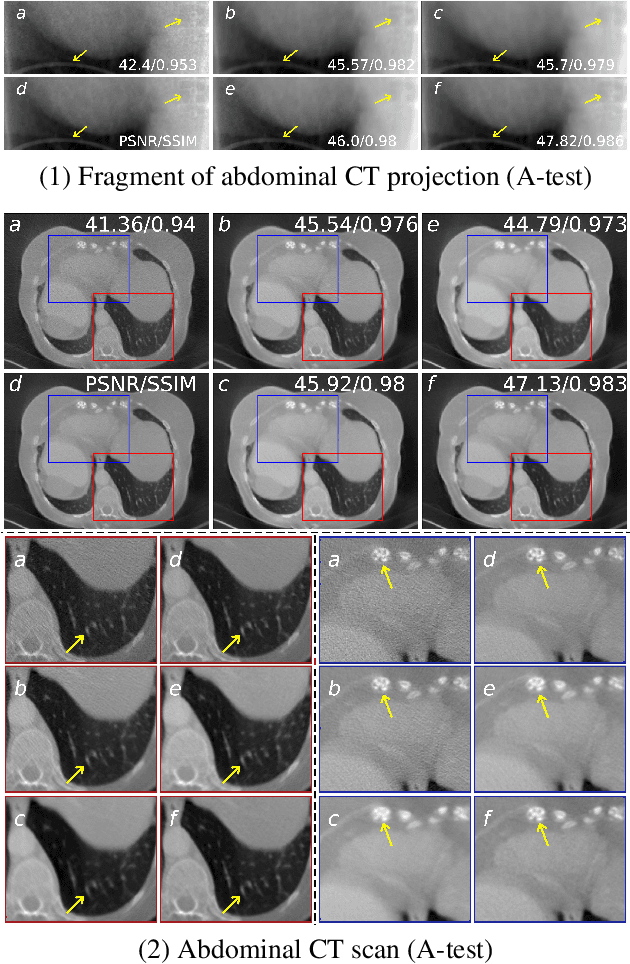

Abstract: Computed Tomography (CT) poses a risk to patients due to its inherent X-ray radiation, stimulating the development of low-dose CT (LDCT) imaging methods. Lowering the radiation dose reduces the health risks but leads to noisier measurements, which decrease tissue contrast and cause artifacts in CT images. Ultimately, these issues could affect the perception of medical personnel and cause misdiagnosis. Modern deep learning noise suppression methods alleviate the challenge but require paired low-noise and high-noise CT images for training, which are rarely collected in regular clinical workflows. In this work, we introduce a new self-supervised approach for CT denoising, Noise2NoiseTD-ANM, that can be trained without high-dose CT projection ground-truth images. Unlike previously proposed self-supervised techniques, the introduced method exploits the connections between adjacent projections and the actual model of the CT noise distribution. Such a combination allows for interpretable no-reference denoising using nothing but the original noisy LDCT projections. Our experiments with LDCT data demonstrate that the proposed method reaches the level of fully supervised models, sometimes surpassing them, generalizes easily to various noise levels, and outperforms state-of-the-art self-supervised denoising algorithms.

No-reference denoising of low-dose CT projections

Feb 03, 2021

Abstract: Low-dose computed tomography (LDCT) has become a clear trend in radiology, driven by the aim of sparing patients excessive X-ray radiation. Reducing the radiation dose decreases the risks to patients but raises the noise level, affecting the quality of the images and their ultimate diagnostic value. One mitigation option is to use pairs of low-dose and high-dose CT projections to train a denoising model with deep learning algorithms; however, such pairs are rarely available in practice. In this paper, we present a new self-supervised method for CT denoising. Unlike existing self-supervised approaches, the proposed method requires only noisy CT projections and exploits the connections between adjacent images. Experiments carried out on an LDCT dataset demonstrate that our method is almost as accurate as the supervised approach, while also outperforming the considered self-supervised denoising methods.

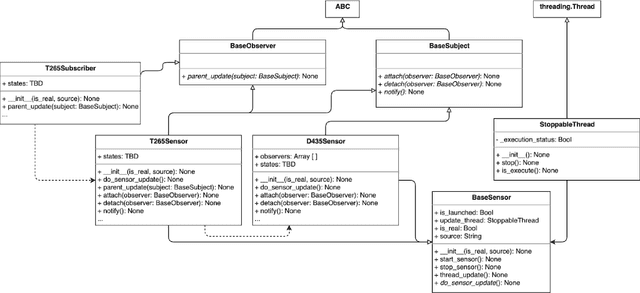

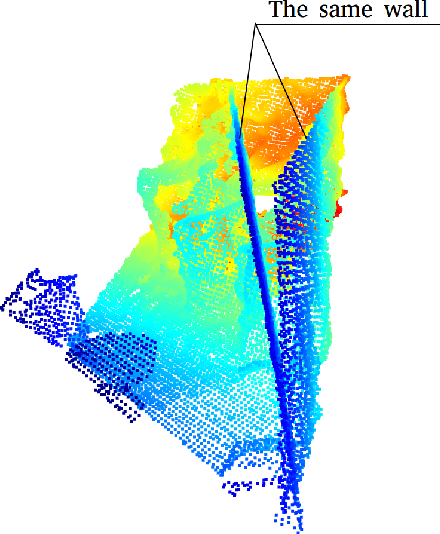

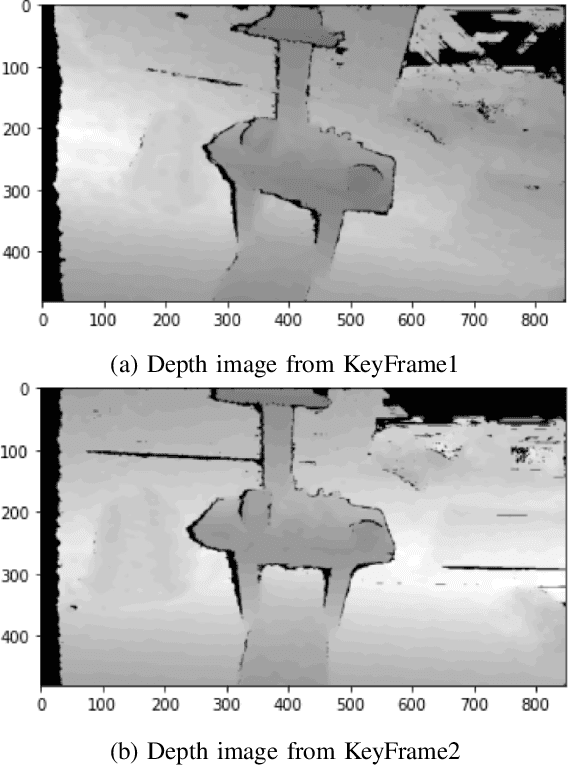

Coupling of localization and depth data for mapping using Intel RealSense T265 and D435i cameras

Apr 01, 2020

Abstract: We propose to couple two types of Intel RealSense sensors (the T265 tracking camera and the D435i depth camera) in order to obtain localization and a 3D occupancy map of an indoor environment. We implemented a Python-based observer pattern with a multi-threaded approach for camera data synchronization. We compared different point cloud (PC) alignment methods (using transformations obtained from the tracking camera and from ICP-family methods). The tracking camera and PC alignment allow us to generate a set of transformations between frames. Based on these transformations, we obtained different trajectories and analyzed them. Finally, having poses for all frames, we combined the depth data: first, we obtained a joint PC representing the whole scene; then, we used the OctoMap representation to build a map.
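The observer pattern with multi-threaded synchronization mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the names `FrameSubject`, `PairingObserver`, `simulated_sensor`, and `run_demo` are hypothetical, the two cameras are replaced by simulated threads with made-up timestamps, and the nearest-timestamp pairing rule is an assumption chosen for illustration.

```python
import threading


class FrameSubject:
    """Hypothetical subject: forwards every incoming sample to its observers."""

    def __init__(self):
        self._observers = []
        self._lock = threading.Lock()

    def attach(self, observer):
        with self._lock:
            self._observers.append(observer)

    def notify(self, source, timestamp, payload):
        # Copy the list under the lock, then call observers outside it.
        with self._lock:
            observers = list(self._observers)
        for observer in observers:
            observer.update(source, timestamp, payload)


class PairingObserver:
    """Hypothetical observer: stores samples and pairs depth with pose by time."""

    def __init__(self):
        self._lock = threading.Lock()
        self._poses = []   # (timestamp, pose payload)
        self._depths = []  # (timestamp, depth payload)

    def update(self, source, timestamp, payload):
        # Called from several sensor threads, so guard the shared lists.
        with self._lock:
            target = self._poses if source == "pose" else self._depths
            target.append((timestamp, payload))

    def pairs(self):
        """Match each depth sample to the pose with the nearest timestamp."""
        with self._lock:
            poses, depths = list(self._poses), sorted(self._depths)
        result = []
        for t_depth, depth in depths:
            t_pose, pose = min(poses, key=lambda p: abs(p[0] - t_depth))
            result.append((t_depth, depth, t_pose, pose))
        return result


def simulated_sensor(subject, source, timestamps):
    """Stand-in for a camera thread; emits one labeled sample per timestamp."""
    for t in timestamps:
        subject.notify(source, t, f"{source}@{t}")


def run_demo():
    subject = FrameSubject()
    observer = PairingObserver()
    subject.attach(observer)
    threads = [
        threading.Thread(target=simulated_sensor,
                         args=(subject, "pose", [0.00, 0.05, 0.10, 0.15])),
        threading.Thread(target=simulated_sensor,
                         args=(subject, "depth", [0.04, 0.11])),
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return observer.pairs()


if __name__ == "__main__":
    for t_depth, depth, t_pose, pose in run_demo():
        print(f"depth at {t_depth:.2f}s paired with pose at {t_pose:.2f}s")
```

In this sketch the sensor threads only push samples, and the observer decides how to align them, so a different pairing rule (for example, interpolating between poses) could be swapped in without touching the producers.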
