Coupling of localization and depth data for mapping using Intel RealSense T265 and D435i cameras

Apr 01, 2020

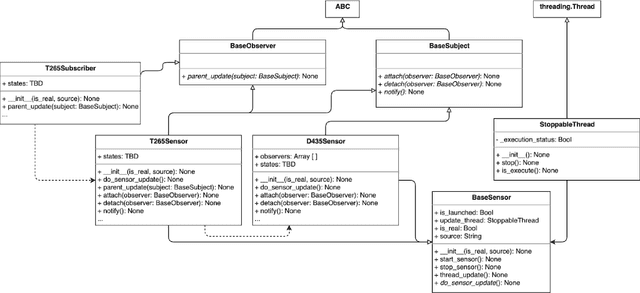

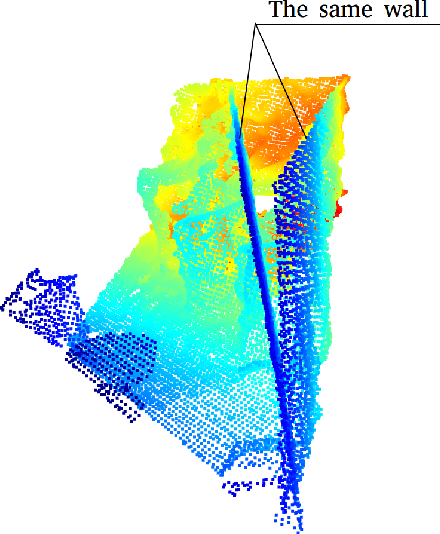

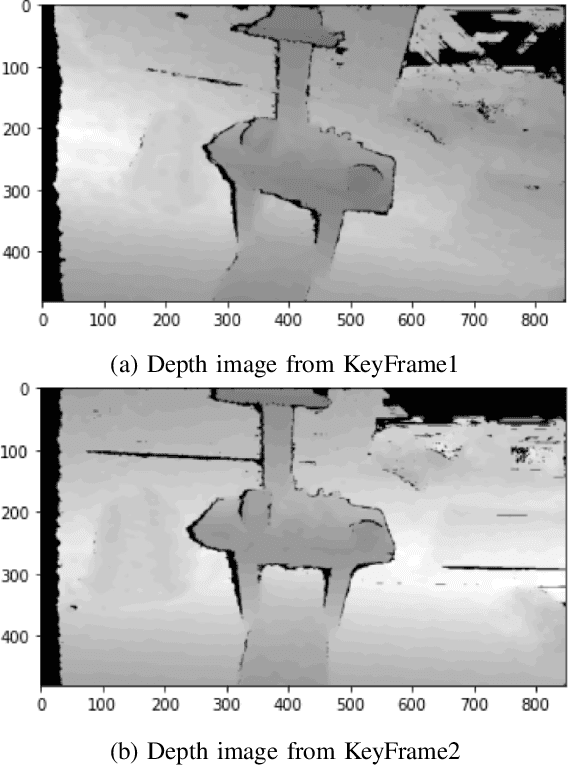

We propose coupling two types of Intel RealSense sensors (the T265 tracking camera and the D435i depth camera) to obtain localization and a 3D occupancy map of an indoor environment. We implemented a Python-based observer pattern with a multi-threaded approach for camera data synchronization. We compared different point cloud (PC) alignment methods, using transformations obtained from the tracking camera and from ICP-family methods. Both the tracking camera and PC alignment yield a set of transformations between frames. From these transformations we obtained different trajectories and analyzed them. Finally, with poses available for all frames, we combined the depth data: first we obtained a joint PC representing the whole scene, then we used the OctoMap representation to build a map.
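
As an illustration of how frame-to-frame transformations lead to a trajectory and a joint point cloud, the following minimal sketch chains relative 4x4 homogeneous transforms into absolute poses and then moves each frame's points into the frame of the first camera pose. It is not the authors' implementation. The function names (`accumulate_poses`, `merge_point_clouds`, and the helpers) are hypothetical, and it assumes each relative transform maps coordinates of frame k+1 into frame k. Plain nested lists keep the sketch dependency-free; a real pipeline would more likely use array and point cloud libraries.

```python
def identity4():
    """Return a 4x4 identity matrix as nested lists."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(x, y, z):
    """Hypothetical helper: a pure-translation homogeneous transform."""
    t = identity4()
    t[0][3], t[1][3], t[2][3] = x, y, z
    return t


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def accumulate_poses(relative_transforms):
    """Chain frame-to-frame transforms into absolute poses.

    Assumption: relative_transforms[k] maps frame k+1 coordinates into
    frame k, and the first frame defines the world frame (identity pose).
    """
    poses = [identity4()]
    for t in relative_transforms:
        poses.append(matmul4(poses[-1], t))
    return poses


def transform_point(pose, point):
    """Apply a 4x4 pose to a 3D point (x, y, z)."""
    x, y, z = point
    return tuple(pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3]
                 for i in range(3))


def merge_point_clouds(clouds, poses):
    """Express every frame's points in the world frame and concatenate them."""
    return [transform_point(pose, p)
            for cloud, pose in zip(clouds, poses)
            for p in cloud]


# Toy data: three frames, each seeing one point at its own camera origin.
relative = [translation(1.0, 0.0, 0.0), translation(0.0, 2.0, 0.0)]
poses = accumulate_poses(relative)
trajectory = [(p[0][3], p[1][3], p[2][3]) for p in poses]
scene = merge_point_clouds([[(0.0, 0.0, 0.0)]] * 3, poses)
```

In this toy setup the merged points coincide with the camera positions along the trajectory. The same chaining applies whether the relative transforms come from the tracking camera or from an ICP-style alignment, which is what makes the resulting trajectories comparable.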
