Elmer Salazar

University of Texas at Dallas

MC3G: Model Agnostic Causally Constrained Counterfactual Generation

Aug 24, 2025

Abstract:Machine learning models increasingly influence decisions in high-stakes settings such as finance, law, and hiring, driving the need for transparent, interpretable outcomes. However, while explainable approaches can help understand the decisions being made, they may inadvertently reveal the underlying proprietary algorithm: an undesirable outcome for many practitioners. Consequently, it is crucial to balance meaningful transparency with a form of recourse that clarifies why a decision was made and offers actionable steps toward a favorable outcome. Counterfactual explanations offer a powerful mechanism to address this need by showing how specific input changes lead to a more favorable prediction. We propose Model-Agnostic Causally Constrained Counterfactual Generation (MC3G), a novel framework that tackles limitations in existing counterfactual methods. First, MC3G is model-agnostic: it approximates any black-box model using an explainable rule-based surrogate model. Second, this surrogate is used to generate counterfactuals that produce a favorable outcome for the original underlying black-box model. Third, MC3G refines cost computation by excluding the "effort" associated with feature changes that occur automatically due to causal dependencies. By focusing only on user-initiated changes, MC3G provides a more realistic and fair representation of the effort needed to achieve a favorable outcome. We show that MC3G delivers more interpretable and actionable counterfactual recommendations than existing techniques, all at a lower cost. Our findings highlight MC3G's potential to enhance transparency, accountability, and practical utility in decision-making processes that incorporate machine-learning approaches.
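The cost idea in this abstract, counting only user-initiated changes, can be illustrated with a minimal Python sketch. This is not the paper's cost function: the function name `user_initiated_cost`, the `caused_by` mapping, the unit weights, and the rule "a change is automatic if any causal parent also changed" are all assumptions made for illustration.

```python
from typing import Dict, Optional, Set


def user_initiated_cost(
    original: Dict[str, str],
    counterfactual: Dict[str, str],
    caused_by: Dict[str, Set[str]],
    weights: Optional[Dict[str, float]] = None,
) -> float:
    """Sum the cost of changed features, skipping changes assumed to follow
    automatically from a changed causal parent (hypothetical rule)."""
    weights = weights or {}
    # Features whose value differs between the two instances.
    changed = {f for f in original if original[f] != counterfactual[f]}
    cost = 0.0
    for feature in changed:
        parents = caused_by.get(feature, set())
        # Assumption: if any causal parent changed, this change is "free".
        if parents & changed:
            continue
        cost += weights.get(feature, 1.0)  # unit cost unless a weight is given
    return cost


# Hypothetical loan example: lowering debt is assumed to raise credit score.
original = {"debt": "high", "credit_score": "low", "income": "low"}
counterfactual = {"debt": "low", "credit_score": "high", "income": "low"}
caused_by = {"credit_score": {"debt"}}

causal_cost = user_initiated_cost(original, counterfactual, caused_by)
naive_cost = user_initiated_cost(original, counterfactual, {})
```

Under these assumptions, the causally aware cost charges only for the change to `debt`, while the naive cost (with an empty `caused_by` mapping) charges for both changed features.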

CoGS: Model Agnostic Causality Constrained Counterfactual Explanations using goal-directed ASP

Oct 30, 2024

Abstract:Machine learning models are increasingly used in critical areas such as loan approvals and hiring, yet they often function as black boxes, obscuring their decision-making processes. Transparency is crucial, as individuals need explanations to understand decisions, particularly when the decisions result in an undesired outcome. Our work introduces CoGS (Counterfactual Generation with s(CASP)), a model-agnostic framework capable of generating counterfactual explanations for classification models. CoGS leverages the goal-directed Answer Set Programming system s(CASP) to compute realistic and causally consistent modifications to feature values, accounting for causal dependencies between them. By using rule-based machine learning algorithms (RBML), notably the FOLD-SE algorithm, CoGS extracts the underlying logic of a statistical model to generate counterfactual solutions. By tracing a step-by-step path from an undesired outcome to a desired one, CoGS offers interpretable and actionable explanations of the changes required to achieve the desired outcome. We present details of the CoGS framework along with its evaluation.

CoGS: Causality Constrained Counterfactual Explanations using goal-directed ASP

Jul 11, 2024

Abstract:Machine learning models are increasingly used in areas such as loan approvals and hiring, yet they often function as black boxes, obscuring their decision-making processes. Transparency is crucial, and individuals need explanations to understand decisions, especially for the ones not desired by the user. Ethical and legal considerations require informing individuals of changes in input attribute values (features) that could lead to a desired outcome for the user. Our work aims to generate counterfactual explanations by considering causal dependencies between features. We present the CoGS (Counterfactual Generation with s(CASP)) framework that utilizes the goal-directed Answer Set Programming system s(CASP) to generate counterfactuals from rule-based machine learning models, specifically the FOLD-SE algorithm. CoGS computes realistic and causally consistent changes to attribute values taking causal dependencies between them into account. It finds a path from an undesired outcome to a desired one using counterfactuals. We present details of the CoGS framework along with its evaluation.

CFGs: Causality Constrained Counterfactual Explanations using goal-directed ASP

May 24, 2024

Abstract:Machine learning models that automate decision-making are increasingly used in consequential areas such as loan approvals, pretrial bail approval, and hiring. Unfortunately, most of these models are black boxes, i.e., they are unable to reveal how they reach these prediction decisions. A need for transparency demands justification for such predictions. An affected individual might also desire explanations to understand why a decision was made. Ethical and legal considerations require informing the individual of changes in the input attribute(s) that could be made to produce a desirable outcome. Our work focuses on the latter problem of generating counterfactual explanations by considering the causal dependencies between features. In this paper, we present the framework CFGs, CounterFactual Generation with s(CASP), which utilizes the goal-directed Answer Set Programming (ASP) system s(CASP) to automatically generate counterfactual explanations from models generated by rule-based machine learning algorithms. We benchmark CFGs with the FOLD-SE model. Reaching the counterfactual state from the initial state is planned and achieved using a series of interventions. To validate our proposal, we show how counterfactual explanations are computed and justified by imagining worlds where some or all factual assumptions are altered/changed. More importantly, we show how CFGs navigates between these worlds, namely, how it goes from our initial state where we obtain an undesired outcome to the imagined goal state where we obtain the desired decision, taking into account the causal relationships among features.

Counterfactual Generation with Answer Set Programming

Feb 06, 2024

Abstract:Machine learning models that automate decision-making are increasingly being used in consequential areas such as loan approvals, pretrial bail approval, hiring, and many more. Unfortunately, most of these models are black-boxes, i.e., they are unable to reveal how they reach these prediction decisions. A need for transparency demands justification for such predictions. An affected individual might also desire explanations to understand why a decision was made. Ethical and legal considerations may further require informing the individual of changes in the input attribute that could be made to produce a desirable outcome. This paper focuses on the latter problem of automatically generating counterfactual explanations. We propose a framework, Counterfactual Generation with s(CASP) (CFGS), that utilizes answer set programming (ASP) and the s(CASP) goal-directed ASP system to automatically generate counterfactual explanations from rules generated by rule-based machine learning (RBML) algorithms. In our framework, we show how counterfactual explanations are computed and justified by imagining worlds where some or all factual assumptions are altered/changed. More importantly, we show how we can navigate between these worlds, namely, how to go from our original world/scenario where we obtain an undesired outcome to the imagined world/scenario where we obtain a desired/favourable outcome.

Counterfactual Explanation Generation with s(CASP)

Oct 23, 2023

Abstract:Machine learning models that automate decision-making are increasingly being used in consequential areas such as loan approvals, pretrial bail, hiring, and many more. Unfortunately, most of these models are black-boxes, i.e., they are unable to reveal how they reach these prediction decisions. A need for transparency demands justification for such predictions. An affected individual might desire explanations to understand why a decision was made. Ethical and legal considerations may further require informing the individual of changes in the input attribute that could be made to produce a desirable outcome. This paper focuses on the latter problem of automatically generating counterfactual explanations. Our approach utilizes answer set programming and the s(CASP) goal-directed ASP system. Answer Set Programming (ASP) is a well-known knowledge representation and reasoning paradigm. s(CASP) is a goal-directed ASP system that executes answer-set programs top-down without grounding them. The query-driven nature of s(CASP) allows us to provide justifications as proof trees, which makes it possible to analyze the generated counterfactual explanations. We show how counterfactual explanations are computed and justified by imagining multiple possible worlds where some or all factual assumptions are untrue and, more importantly, how we can navigate between these worlds. We also show how our algorithm can be used to find the Craig Interpolant for a class of answer set programs for a failing query.

DiscASP: A Graph-based ASP System for Finding Relevant Consistent Concepts with Applications to Conversational Socialbots

Sep 17, 2021

Abstract:We consider the problem of finding relevant consistent concepts in a conversational AI system, particularly, for realizing a conversational socialbot. Commonsense knowledge about various topics can be represented as an answer set program. However, to advance the conversation, we need to solve the problem of finding relevant consistent concepts, i.e., find consistent knowledge in the "neighborhood" of the current topic being discussed that can be used to advance the conversation. Traditional ASP solvers will generate the whole answer set which is stripped of all the associations between the various atoms (concepts) and thus cannot be used to find relevant consistent concepts. Similarly, goal-directed implementations of ASP will only find concepts directly relevant to a query. We present the DiscASP system that will find the partial consistent model that is relevant to a given topic in a manner similar to how a human will find it. DiscASP is based on a novel graph-based algorithm for finding stable models of an answer set program. We present the DiscASP algorithm, its implementation, and its application to developing a conversational socialbot.

* In Proceedings ICLP 2021, arXiv:2109.07914

Knowledge-Assisted Reasoning of Model-Augmented System Requirements with Event Calculus and Goal-Directed Answer Set Programming

Sep 10, 2021

Abstract:We consider requirements for cyber-physical systems represented in constrained natural language. We present novel automated techniques for aiding in the development of these requirements so that they are consistent and can withstand perceived failures. We show how cyber-physical systems' requirements can be modeled using the event calculus (EC), a formalism used in AI for representing actions and change. We also show how answer set programming (ASP) and its query-driven implementation s(CASP) can be used to directly realize the event calculus model of the requirements. This event calculus model can be used to automatically validate the requirements. Since ASP is an expressive knowledge representation language, it can also be used to represent contextual knowledge about cyber-physical systems, which, in turn, can be used to find gaps in their requirements specifications. We illustrate our approach through an altitude alerting system from the avionics domain.

* In Proceedings HCVS 2021, arXiv:2109.03988

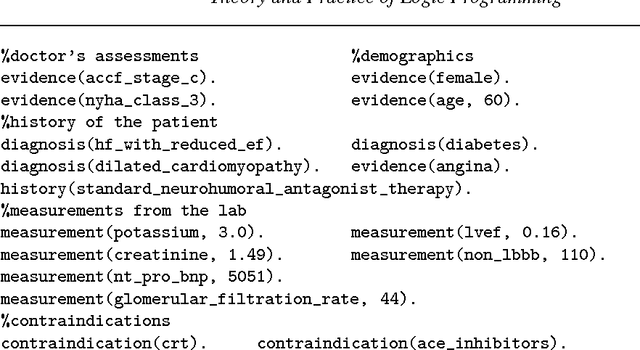

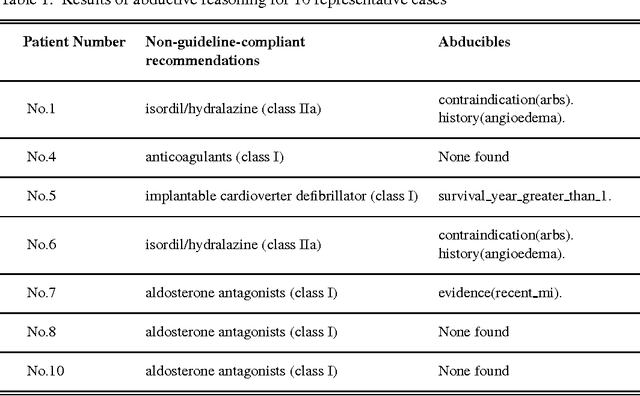

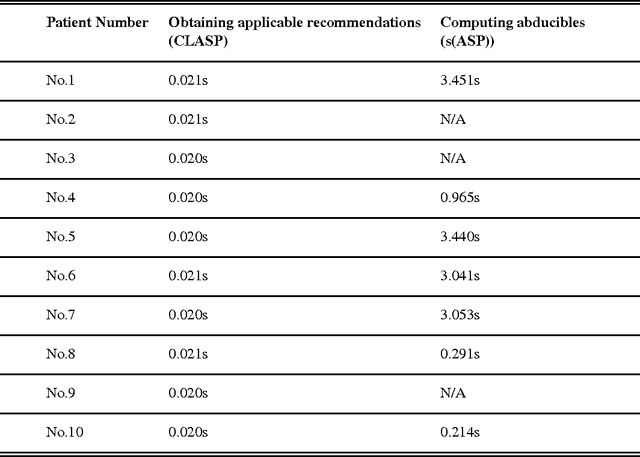

Improving Adherence to Heart Failure Management Guidelines via Abductive Reasoning

Jul 16, 2017

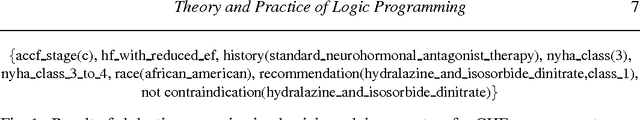

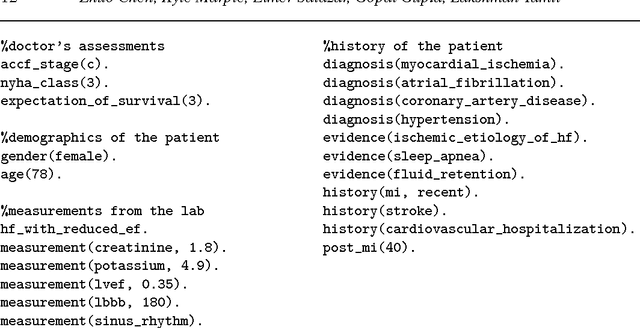

Abstract:Management of chronic diseases such as heart failure (HF) is a major public health problem. A standard approach to managing chronic diseases by the medical community is to have a committee of experts develop guidelines that all physicians should follow. Due to their complexity, these guidelines are difficult to implement and are adopted slowly by the medical community at large. We have developed a physician advisory system that codes the entire set of clinical practice guidelines for managing HF using answer set programming (ASP). In this paper we show how abductive reasoning can be deployed to find missing symptoms and conditions that the patient must exhibit in order for a treatment prescribed by a physician to work effectively. Thus, if a physician does not make an appropriate recommendation or makes a non-adherent recommendation, our system will advise the physician about symptoms and conditions that must be in effect for that recommendation to apply. This paper is under consideration for acceptance in TPLP.

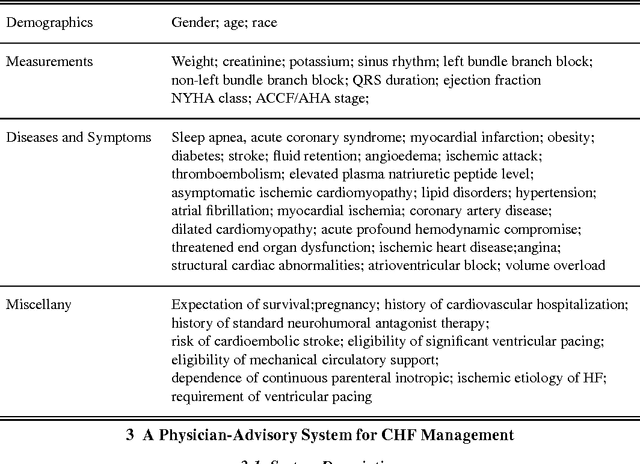

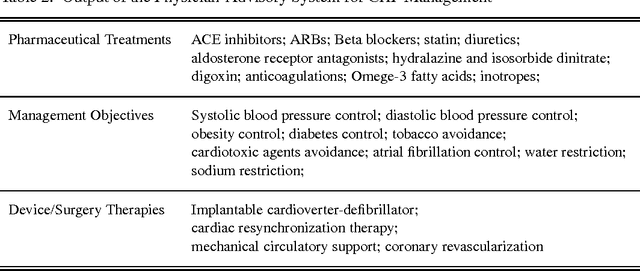

A Physician Advisory System for Chronic Heart Failure Management Based on Knowledge Patterns

Oct 25, 2016

Abstract:Management of chronic diseases such as heart failure, diabetes, and chronic obstructive pulmonary disease (COPD) is a major problem in health care. A standard approach that the medical community has devised to manage widely prevalent chronic diseases such as chronic heart failure (CHF) is to have a committee of experts develop guidelines that all physicians should follow. These guidelines typically consist of a series of complex rules that make recommendations based on a patient's information. Due to their complexity, the guidelines are often either ignored or not fully complied with, which can result in poor medical practices. It is not even clear whether it is humanly possible to follow these guidelines due to their length and complexity. In the case of CHF management, the guidelines run nearly 80 pages. In this paper we describe a physician-advisory system for CHF management that codes the entire set of clinical practice guidelines for CHF using answer set programming. Our approach is based on developing reasoning templates (that we call knowledge patterns) and using these patterns to systematically code the clinical guidelines for CHF as ASP rules. Use of the knowledge patterns greatly facilitates the development of our system. Given a patient's medical information, our system generates a recommendation for treatment just as a human physician would, using the guidelines. Our system will work even in the presence of incomplete information. Our work makes two contributions: (i) it shows that highly complex guidelines can be successfully coded as ASP rules, and (ii) it develops a series of knowledge patterns that facilitate the coding of knowledge expressed in a natural language and that can be used for other application domains. This paper is under consideration for acceptance in TPLP.
