Ehsan Hallaji

TrustChain: A Blockchain Framework for Auditing and Verifying Aggregators in Decentralized Federated Learning

Feb 23, 2025Abstract:The server-less nature of Decentralized Federated Learning (DFL) requires allocating the aggregation role to specific participants in each federated round. Current DFL architectures ensure the trustworthiness of the aggregator node upon selection. However, most of these studies overlook the possibility that the aggregating node may turn rogue and act maliciously after being nominated. To address this problem, this paper proposes a DFL structure, called TrustChain, that scores the aggregators before selection based on their past behavior and additionally audits them after the aggregation. To do this, the statistical independence between the client updates and the aggregated model is continuously monitored using the Hilbert-Schmidt Independence Criterion (HSIC). The proposed method relies on several principles, including blockchain, anomaly detection, and concept drift analysis. The designed structure is evaluated on several federated datasets and attack scenarios with different numbers of Byzantine nodes.

FedNIA: Noise-Induced Activation Analysis for Mitigating Data Poisoning in FL

Feb 23, 2025Abstract:Federated learning systems are increasingly threatened by data poisoning attacks, where malicious clients compromise global models by contributing tampered updates. Existing defenses often rely on impractical assumptions, such as access to a central test dataset, or fail to generalize across diverse attack types, particularly those involving multiple malicious clients working collaboratively. To address this, we propose Federated Noise-Induced Activation Analysis (FedNIA), a novel defense framework to identify and exclude adversarial clients without relying on any central test dataset. FedNIA injects random noise inputs to analyze the layerwise activation patterns in client models leveraging an autoencoder that detects abnormal behaviors indicative of data poisoning. FedNIA can defend against diverse attack types, including sample poisoning, label flipping, and backdoors, even in scenarios with multiple attacking nodes. Experimental results on non-iid federated datasets demonstrate its effectiveness and robustness, underscoring its potential as a foundational approach for enhancing the security of federated learning systems.

A Study on the Importance of Features in Detecting Advanced Persistent Threats Using Machine Learning

Feb 11, 2025Abstract:Advanced Persistent Threats (APTs) pose a significant security risk to organizations and industries. These attacks often lead to severe data breaches and compromise the system for a long time. Mitigating these sophisticated attacks is highly challenging due to the stealthy and persistent nature of APTs. Machine learning models are often employed to tackle this challenge by bringing automation and scalability to APT detection. Nevertheless, these intelligent methods are data-driven, and thus, highly affected by the quality and relevance of input data. This paper aims to analyze measurements considered when recording network traffic and conclude which features contribute more to detecting APT samples. To do this, we study the features associated with various APT cases and determine their importance using a machine learning framework. To ensure the generalization of our findings, several feature selection techniques are employed and paired with different classifiers to evaluate their effectiveness. Our findings provide insights into how APT detection can be enhanced in real-world scenarios.

Federated Continual Learning: Concepts, Challenges, and Solutions

Feb 10, 2025Abstract:Federated Continual Learning (FCL) has emerged as a robust solution for collaborative model training in dynamic environments, where data samples are continuously generated and distributed across multiple devices. This survey provides a comprehensive review of FCL, focusing on key challenges such as heterogeneity, model stability, communication overhead, and privacy preservation. We explore various forms of heterogeneity and their impact on model performance. Solutions to non-IID data, resource-constrained platforms, and personalized learning are reviewed in an effort to show the complexities of handling heterogeneous data distributions. Next, we review techniques for ensuring model stability and avoiding catastrophic forgetting, which are critical in non-stationary environments. Privacy-preserving techniques are another aspect of FCL that have been reviewed in this work. This survey has integrated insights from federated learning and continual learning to present strategies for improving the efficacy and scalability of FCL systems, making it applicable to a wide range of real-world scenarios.

Decentralized Federated Learning: A Survey on Security and Privacy

Jan 25, 2024Abstract:Federated learning has been rapidly evolving and gaining popularity in recent years due to its privacy-preserving features, among other advantages. Nevertheless, the exchange of model updates and gradients in this architecture provides new attack surfaces for malicious users of the network which may jeopardize the model performance and user and data privacy. For this reason, one of the main motivations for decentralized federated learning is to eliminate server-related threats by removing the server from the network and compensating for it through technologies such as blockchain. However, this advantage comes at the cost of challenging the system with new privacy threats. Thus, performing a thorough security analysis in this new paradigm is necessary. This survey studies possible variations of threats and adversaries in decentralized federated learning and overviews the potential defense mechanisms. Trustability and verifiability of decentralized federated learning are also considered in this study.

Learning From High-Dimensional Cyber-Physical Data Streams for Diagnosing Faults in Smart Grids

Mar 15, 2023Abstract:The performance of fault diagnosis systems is highly affected by data quality in cyber-physical power systems. These systems generate massive amounts of data that overburden the system with excessive computational costs. Another issue is the presence of noise in recorded measurements, which prevents building a precise decision model. Furthermore, the diagnostic model is often provided with a mixture of redundant measurements that may deviate it from learning normal and fault distributions. This paper presents the effect of feature engineering on mitigating the aforementioned challenges in cyber-physical systems. Feature selection and dimensionality reduction methods are combined with decision models to simulate data-driven fault diagnosis in a 118-bus power system. A comparative study is enabled accordingly to compare several advanced techniques in both domains. Dimensionality reduction and feature selection methods are compared both jointly and separately. Finally, experiments are concluded, and a setting is suggested that enhances data quality for fault diagnosis.

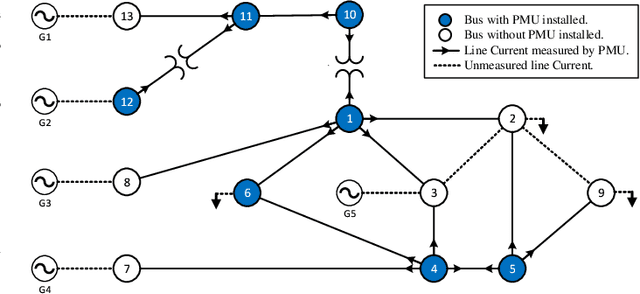

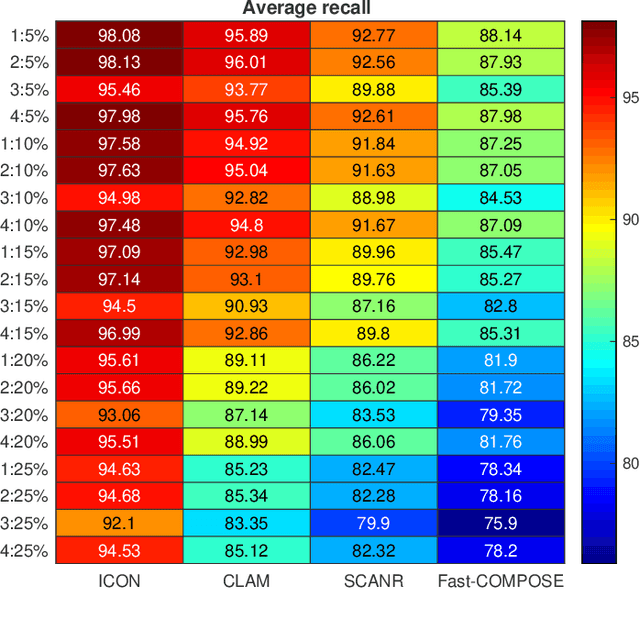

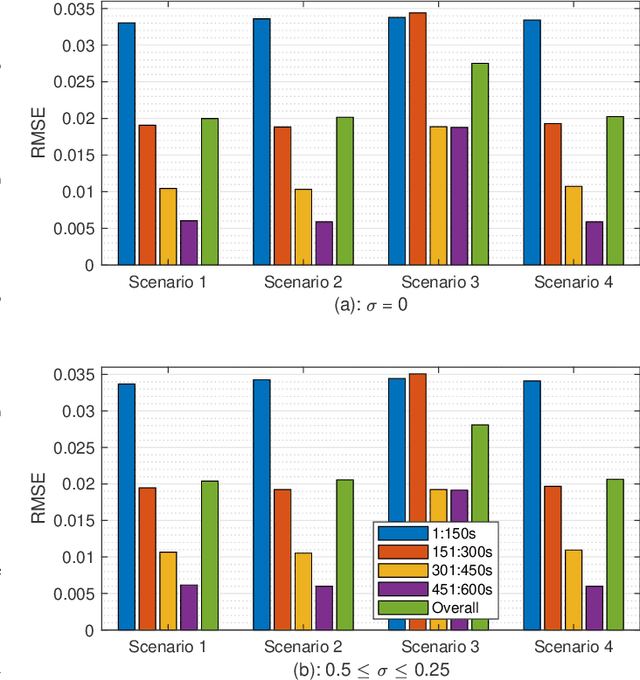

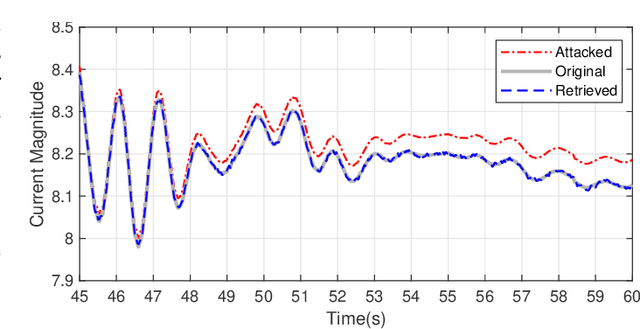

A Stream Learning Approach for Real-Time Identification of False Data Injection Attacks in Cyber-Physical Power Systems

Oct 13, 2022

Abstract:This paper presents a novel data-driven framework to aid in system state estimation when the power system is under unobservable false data injection attacks. The proposed framework dynamically detects and classifies false data injection attacks. Then, it retrieves the control signal using the acquired information. This process is accomplished in three main modules, with novel designs, for detection, classification, and control signal retrieval. The detection module monitors historical changes in phasor measurements and captures any deviation pattern caused by an attack on a complex plane. This approach can help to reveal characteristics of the attacks including the direction, magnitude, and ratio of the injected false data. Using this information, the signal retrieval module can easily recover the original control signal and remove the injected false data. Further information regarding the attack type can be obtained through the classifier module. The proposed ensemble learner is compatible with harsh learning conditions including the lack of labeled data, concept drift, concept evolution, recurring classes, and independence from external updates. The proposed novel classifier can dynamically learn from data and classify attacks under all these harsh learning conditions. The introduced framework is evaluated w.r.t. real-world data captured from the Central New York Power System. The obtained results indicate the efficacy and stability of the proposed framework.

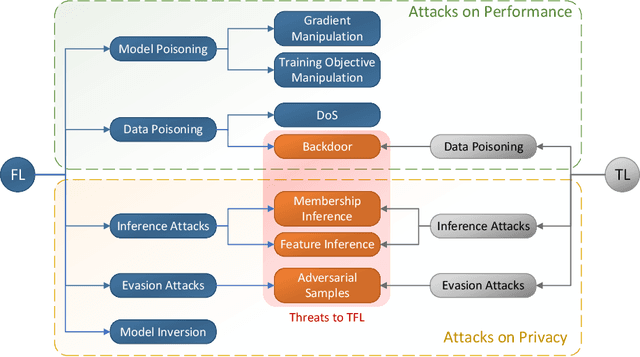

Federated and Transfer Learning: A Survey on Adversaries and Defense Mechanisms

Jul 05, 2022

Abstract:The advent of federated learning has facilitated large-scale data exchange amongst machine learning models while maintaining privacy. Despite its brief history, federated learning is rapidly evolving to make wider use more practical. One of the most significant advancements in this domain is the incorporation of transfer learning into federated learning, which overcomes fundamental constraints of primary federated learning, particularly in terms of security. This chapter performs a comprehensive survey on the intersection of federated and transfer learning from a security point of view. The main goal of this study is to uncover potential vulnerabilities and defense mechanisms that might compromise the privacy and performance of systems that use federated and transfer learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge