Edward Andert

CONClave -- Secure and Robust Cooperative Perception for CAVs Using Authenticated Consensus and Trust Scoring

Sep 04, 2024

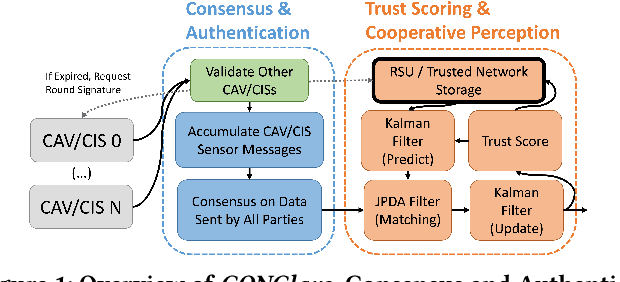

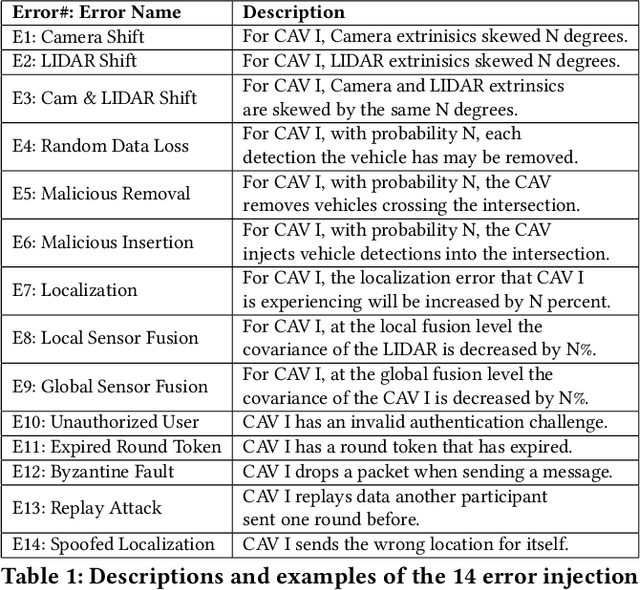

Abstract: Connected Autonomous Vehicles have great potential to improve automobile safety and traffic flow, especially in cooperative applications where perception data is shared between vehicles. However, this cooperation must be secured from malicious intent and unintentional errors that could cause accidents. Previous works typically address singular security or reliability issues for cooperative driving in specific scenarios rather than the set of errors together. In this paper, we propose CONClave, a tightly coupled authentication, consensus, and trust scoring mechanism that provides comprehensive security and reliability for cooperative perception in autonomous vehicles. CONClave benefits from the pipelined nature of the steps such that faults can be detected significantly faster and with less compute. Overall, CONClave shows huge promise in preventing security flaws, detecting even relatively minor sensing faults, and increasing the robustness and accuracy of cooperative perception in CAVs while adding minimal overhead.

IncidentNet: Traffic Incident Detection, Localization and Severity Estimation with Sparse Sensing

Aug 02, 2024

Abstract: Prior art in traffic incident detection relies on high sensor coverage and is primarily based on decision-tree and random forest models that have limited representation capacity and, as a result, cannot detect incidents with high accuracy. This paper presents IncidentNet, a novel approach for classifying, localizing, and estimating the severity of traffic incidents using deep learning models trained on data captured from sparsely placed sensors in urban environments. Our model works on microscopic traffic data that can be collected using cameras installed at traffic intersections. Due to the unavailability of datasets that provide microscopic traffic details and traffic incident details simultaneously, we also present a methodology to generate a synthetic microscopic traffic dataset that matches given macroscopic traffic data. IncidentNet achieves a traffic incident detection rate of 98% with a false alarm rate of less than 7%, detecting incidents in 197 seconds on average, in urban environments with cameras on fewer than 20% of the traffic intersections.

GiPH: Generalizable Placement Learning for Adaptive Heterogeneous Computing

May 23, 2023

Abstract: Careful placement of a computational application within a target device cluster is critical for achieving low application completion time. The problem is challenging due to its NP-hardness and combinatorial nature. In recent years, learning-based approaches have been proposed to learn a placement policy that can be applied to unseen applications, motivated by the problem of placing a neural network across cloud servers. These approaches, however, generally assume the device cluster is fixed, which is not the case in mobile or edge computing settings, where heterogeneous devices move in and out of range for a particular application. We propose a new learning approach called GiPH, which learns policies that generalize to dynamic device clusters via 1) a novel graph representation gpNet that efficiently encodes the information needed for choosing a good placement, and 2) a scalable graph neural network (GNN) that learns a summary of the gpNet information. GiPH turns the placement problem into that of finding a sequence of placement improvements, learning a policy for selecting this sequence that scales to problems of arbitrary size. We evaluate GiPH with a wide range of task graphs and device clusters and show that our learned policy rapidly finds good placements for new problem instances. GiPH finds placements with up to 30.5% lower completion times, searching up to 3X faster than other search-based placement policies.

Accurate Cooperative Sensor Fusion by Parameterized Covariance Generation for Sensing and Localization Pipelines in CAVs

Sep 07, 2022

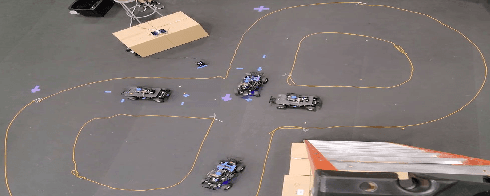

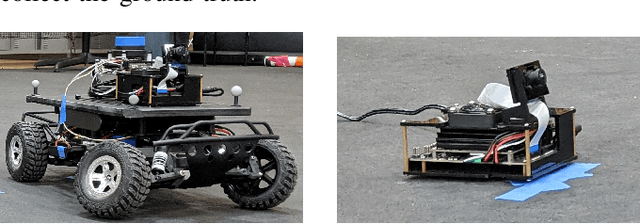

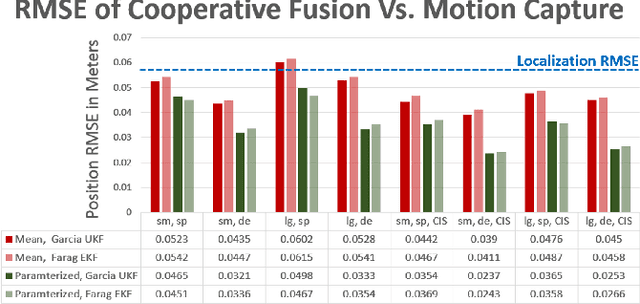

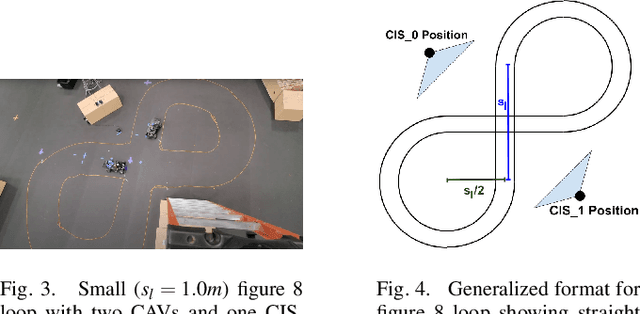

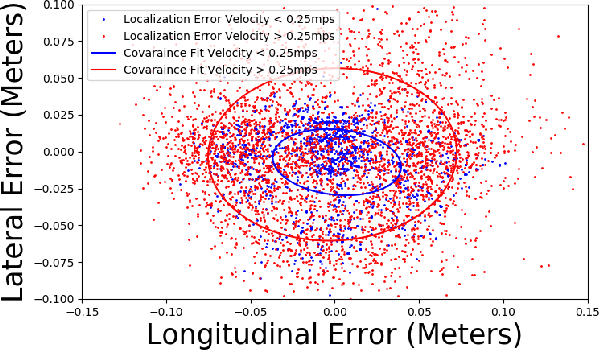

Abstract: A major challenge in cooperative sensing is to weight the measurements taken from the various sources to get an accurate result. Ideally, the weights should be inversely proportional to the error in the sensing information. However, previous cooperative sensor fusion approaches for autonomous vehicles use a fixed error model, in which the covariance of a sensor and its recognizer pipeline is just the mean of the measured covariance for all sensing scenarios. The approach proposed in this paper estimates error using key predictor terms that have high correlation with sensing and localization accuracy for accurate covariance estimation of each sensor observation. We adopt a tiered fusion model consisting of local and global sensor fusion steps. At the local fusion level, we add a covariance generation stage that uses the error model for each sensor and the measured distance to generate the expected covariance matrix for each observation. At the global sensor fusion stage, we add a stage that generates the localization covariance matrix from the key predictor term velocity and combine it with the covariance generated from the local fusion for accurate cooperative sensing. To showcase our method, we built a set of 1/10 scale model autonomous vehicles with scale-accurate sensing capabilities and classified the error characteristics against a motion capture system. Results show an average and max improvement in RMSE when detecting vehicle positions of 1.42x and 1.78x respectively in a four-vehicle cooperative fusion scenario when using our error model versus a typical fixed error model.
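The idea of weighting each measurement inversely to its expected error can be illustrated with a minimal scalar sketch. This is not the paper's implementation: the paper works with full covariance matrices and fitted predictor terms, whereas the `expected_variance` model below, its quadratic form, and its `base_var` and `growth` parameters are hypothetical placeholders chosen only for illustration.

```python
def expected_variance(distance_m, base_var=0.01, growth=0.002):
    """Hypothetical error model: variance grows with the measured distance.

    A fixed error model would instead return one constant for every
    observation, regardless of range.
    """
    return base_var + growth * distance_m ** 2


def fuse(observations):
    """Inverse-variance weighted mean of (value, variance) pairs.

    Returns (fused_value, fused_variance). Observations with a smaller
    variance receive a proportionally larger weight.
    """
    if not observations:
        raise ValueError("need at least one observation")
    weights = [1.0 / var for _, var in observations]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, observations)) / total
    return fused, 1.0 / total


# Two sensors report the same object's x-position from different ranges.
near = (10.0, expected_variance(5.0))   # close sensor, small variance
far = (14.0, expected_variance(30.0))   # distant sensor, large variance
position, variance = fuse([near, far])
```

In this sketch the fused position lands much closer to the near sensor's reading, since the hypothetical model assigns the distant observation a far larger variance.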
