Eduardo Morales

Match Chat: Real Time Generative AI and Generative Computing for Tennis

Sep 16, 2025

Abstract: We present Match Chat, a real-time, agent-driven assistant designed to enhance the tennis fan experience by delivering instant, accurate responses to match-related queries. Match Chat integrates Generative Artificial Intelligence (GenAI) with Generative Computing (GenComp) techniques to synthesize key insights during live tennis singles matches. The system debuted at the 2025 Wimbledon Championships and the 2025 US Open, where it provided about 1 million users with seamless access to streaming and static data through natural language queries. The architecture is grounded in an Agent-Oriented Architecture (AOA) combining rule engines, predictive models, and agents to pre-process and optimize user queries before passing them to GenAI components. The Match Chat system had an answer accuracy of 92.83% with an average response time of 6.25 seconds under loads of up to 120 requests per second (RPS). Over 96.08% of all queries were guided using interactive prompt design, contributing to a user experience that prioritized clarity, responsiveness, and minimal effort. The system was designed to mask architectural complexity, offering a frictionless and intuitive interface that required no onboarding or technical familiarity. Across both Grand Slam deployments, Match Chat maintained 100% uptime and supported nearly 1 million unique users, underscoring the scalability and reliability of the platform. This work introduces key design patterns for real-time, consumer-facing AI systems that emphasize speed, precision, and usability, and it highlights a practical path for deploying performant agentic systems in dynamic environments.

Automated Meta Prompt Engineering for Alignment with the Theory of Mind

May 13, 2025

Abstract: We introduce a method of meta-prompting that jointly produces fluent text for complex tasks while optimizing the similarity of neural states between a human's mental expectation and a Large Language Model's (LLM) neural processing. A technique of agentic reinforcement learning is applied, in which an LLM as a Judge (LLMaaJ) teaches another LLM, through in-context learning, how to produce content by interpreting the intended and unintended generated text traits. To measure human mental beliefs around content production, users modify long-form AI-generated text articles before publication at the US Open 2024 tennis Grand Slam. Now, an LLMaaJ can solve the Theory of Mind (ToM) alignment problem by anticipating and including human edits within the creation of text from an LLM. Throughout experimentation and by interpreting the results of a live production system, the expectations of human content reviewers were 100% aligned with the AI 53.8% of the time, with an average iteration count of 4.38. The geometric interpretation of content traits such as factualness, novelty, repetitiveness, and relevancy over a Hilbert vector space, which combines spatial volume (all trait importance) with vertices alignment (individual trait relevance), enabled the LLMaaJ to optimize on Human ToM. This resulted in an increase in content quality by extending the coverage of tennis action. Our work, which was deployed at the US Open 2024, has been used across other live events within sports and entertainment.

Large Scale Diverse Combinatorial Optimization: ESPN Fantasy Football Player Trades

Nov 05, 2021

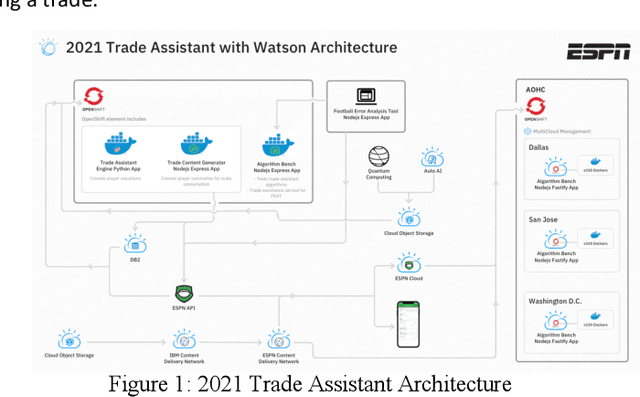

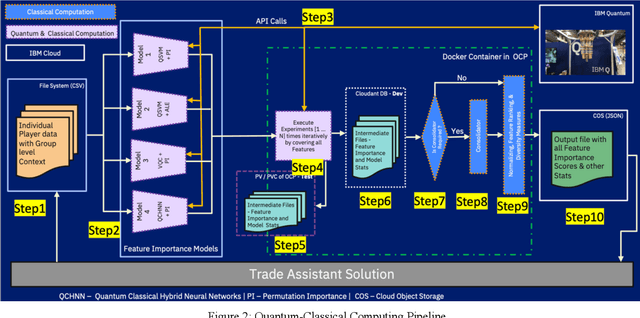

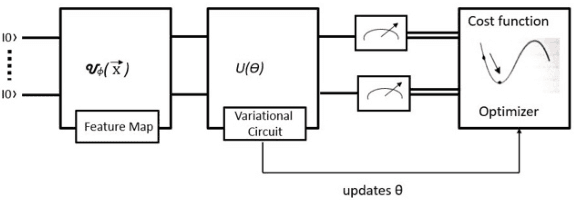

Abstract: Even skilled fantasy football managers can be disappointed by their mid-season rosters as some players inevitably fall short of draft day expectations. Team managers can quickly discover that their team has a low score ceiling even if they start their best active players. A novel and diverse combinatorial optimization system proposes high volume and unique player trades between complementary teams to balance trade fairness. Several algorithms create the valuation of each fantasy football player with an ensemble of computing models: Quantum Support Vector Classifier with Permutation Importance (QSVC-PI), Quantum Support Vector Classifier with Accumulated Local Effects (QSVC-ALE), Variational Quantum Circuit with Permutation Importance (VQC-PI), Hybrid Quantum Neural Network with Permutation Importance (HQNN-PI), eXtreme Gradient Boosting Classifier (XGB), and Subject Matter Expert (SME) rules. The valuation of each player is personalized based on league rules, roster, and selections. The cost of trading away a player is related to a team's roster, such as the depth at a position, slot count, and position importance. Teams are paired together for trading based on a cosine dissimilarity score so that teams can offset their strengths and weaknesses. A 0-1 knapsack algorithm computes outgoing players for each team. Postprocessors apply analytics and deep learning models to compute 6 different objective measures for each trade. Over the 2020 and 2021 National Football League (NFL) seasons, a group of 24 experts from IBM and ESPN evaluated trade quality through 10 Football Error Analysis Tool (FEAT) sessions. Our system started with 76.9% of high-quality trades and was deployed for the 2021 season with 97.3% of high-quality trades. To increase trade quantity, our quantum, classical, and rules-based computing models achieve 100% trade uniqueness. We use Qiskit's quantum simulators throughout our work.
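The pairing and selection steps named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the team strength vectors, the integer trade costs, the budget, and the function names `cosine_dissimilarity`, `most_dissimilar_pair`, and `knapsack_01` are all assumptions made for illustration. The abstract does not say how player values and costs feed the knapsack, so the sketch uses the textbook formulation (maximize total value subject to a cost budget).

```python
import math
from itertools import combinations


def cosine_dissimilarity(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # assumption: treat a zero vector as maximally dissimilar
    return 1.0 - dot / (norm_a * norm_b)


def most_dissimilar_pair(team_vectors):
    """Pick the two teams whose (hypothetical) strength vectors differ most."""
    return max(
        combinations(sorted(team_vectors), 2),
        key=lambda pair: cosine_dissimilarity(
            team_vectors[pair[0]], team_vectors[pair[1]]
        ),
    )


def knapsack_01(values, costs, budget):
    """Textbook 0-1 knapsack: return (best_value, chosen_indices).

    costs and budget must be non-negative integers.
    """
    n = len(values)
    best = [[0] * (budget + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for c in range(budget + 1):
            best[i][c] = best[i - 1][c]  # skip item i-1
            if costs[i - 1] <= c:
                candidate = best[i - 1][c - costs[i - 1]] + values[i - 1]
                if candidate > best[i][c]:
                    best[i][c] = candidate  # take item i-1
    # Walk the table backwards to recover which items were taken.
    chosen = []
    c = budget
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= costs[i - 1]
    chosen.reverse()
    return best[n][budget], chosen


if __name__ == "__main__":
    # Hypothetical per-position strength vectors for three teams.
    teams = {"A": [5, 1], "B": [1, 5], "C": [4, 2]}
    print(most_dissimilar_pair(teams))
    # Hypothetical player values and integer trade costs.
    print(knapsack_01([60, 100, 120], [10, 20, 30], 50))
```

In this toy setup, teams with opposite strengths score the highest dissimilarity and are paired, and the knapsack then selects the subset of players whose total cost fits the budget.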

Deep Artificial Intelligence for Fantasy Football Language Understanding

Nov 04, 2021

Abstract: Fantasy sports allow fans to manage a team of their favorite athletes and compete with friends. The fantasy platform aligns the real-world statistical performance of athletes to fantasy scoring and has steadily risen in popularity to an estimated 9.1 million players per month with 4.4 billion player card views on the ESPN Fantasy Football platform from 2018-2019. In parallel, the sports media community produces news stories, blogs, forum posts, tweets, videos, podcasts and opinion pieces that are both within and outside the context of fantasy sports. However, human fantasy football players can only analyze an average of 3.9 sources of information. Our work discusses the results of a machine learning pipeline to manage an ESPN Fantasy Football team. The use of trained statistical entity detectors and document2vector models applied to over 100,000 news sources and 2.3 million articles, videos and podcasts each day enables the system to comprehend natural language with an analogy test accuracy of 100% and keyword test accuracy of 80%. Deep learning feedforward neural networks provide player classifications, such as whether a player will be a bust, be a boom, play with a hidden injury, or play meaningful touches, with a cumulative 72% accuracy. Finally, a multiple regression ensemble uses the deep learning output and ESPN projection data to provide a point projection for each of the top 500+ fantasy football players in 2018. The point projection maintained an RMSE of 6.78 points. The best fit probability density function from a set of 24 is selected to visualize score spreads. Within the first 6 weeks of the product launch, users spent a cumulative time of over 4.6 years viewing our AI insights. The training data for our models was provided by a 2015 to 2016 web archive from Webhose, ESPN statistics, and Rotowire injury reports. We used 2017 fantasy football data as a test set.

Knowledge-Based Hierarchical POMDPs for Task Planning

Apr 09, 2021

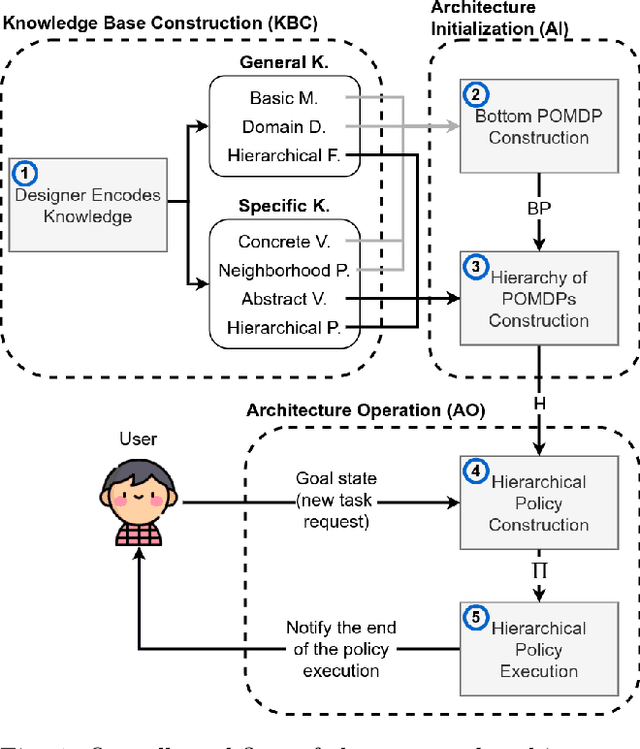

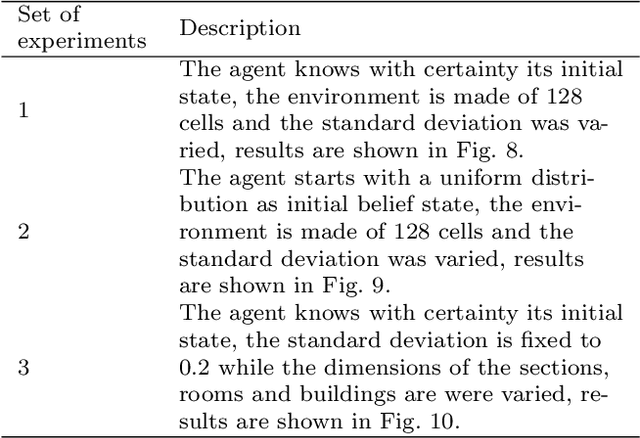

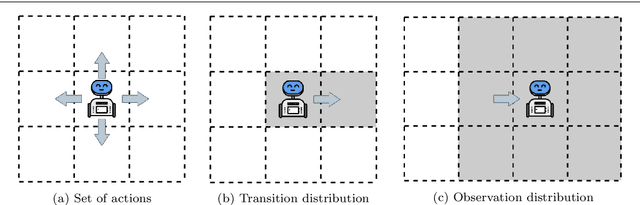

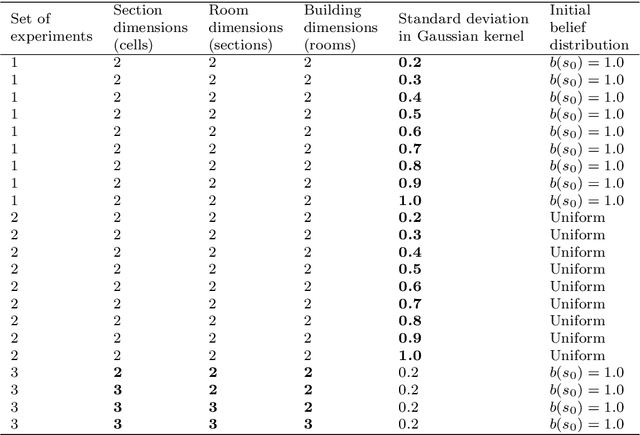

Abstract: The main goal in task planning is to build a sequence of actions that takes an agent from an initial state to a goal state. In robotics, this is particularly difficult because actions usually have several possible results, and sensors are prone to produce measurements with error. Partially observable Markov decision processes (POMDPs) are commonly employed, thanks to their capacity to model the uncertainty of actions that modify and monitor the state of a system. However, since solving a POMDP is computationally expensive, their usage becomes prohibitive for most robotic applications. In this paper, we propose a task planning architecture for service robotics. In the context of service robot design, we present a scheme to encode knowledge about the robot and its environment that promotes the modularity and reuse of information. Also, we introduce a new recursive definition of a POMDP that enables our architecture to autonomously build a hierarchy of POMDPs, so that it can be used to generate and execute plans that solve the task at hand. Experimental results show that, in comparison to baseline methods, by following a recursive hierarchical approach the architecture is able to significantly reduce the planning time, while maintaining (or even improving) the robustness under several scenarios that vary in uncertainty and size.
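To make concrete how a POMDP models uncertain actions and noisy sensors, here is a minimal sketch of the standard Bayesian belief update for a flat, discrete POMDP. It does not reproduce the recursive hierarchical definition proposed in the paper. The door example, the dictionary layouts of `T` and `O`, and the function name `belief_update` are assumptions made for illustration.

```python
def belief_update(belief, action, observation, T, O):
    """Standard discrete POMDP belief update.

    belief: dict state -> probability
    T: dict (state, action, next_state) -> transition probability
    O: dict (next_state, action, observation) -> observation probability
    Computes b'(s') proportional to O(o | s', a) * sum_s T(s' | s, a) * b(s).
    """
    states = list(belief)
    unnormalized = {}
    for s_next in states:
        # Prediction step: probability of reaching s_next under the action.
        predicted = sum(T[(s, action, s_next)] * belief[s] for s in states)
        # Correction step: weight by the likelihood of the observation.
        unnormalized[s_next] = O[(s_next, action, observation)] * predicted
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation has zero probability under this belief")
    return {s: p / total for s, p in unnormalized.items()}


if __name__ == "__main__":
    # Hypothetical example: a robot looks at a door that is open or closed.
    states = ["open", "closed"]
    # The "look" action leaves the door state unchanged.
    T = {(s, "look", s2): 1.0 if s == s2 else 0.0 for s in states for s2 in states}
    # The sensor reports the true state 80% of the time (assumed value).
    O = {
        ("open", "look", "see_open"): 0.8,
        ("open", "look", "see_closed"): 0.2,
        ("closed", "look", "see_open"): 0.2,
        ("closed", "look", "see_closed"): 0.8,
    }
    prior = {"open": 0.5, "closed": 0.5}
    print(belief_update(prior, "look", "see_open", T, O))
```

Because the planner must reason over such belief distributions rather than over single states, the cost of solving a POMDP grows quickly with the number of states, which is the expense the abstract refers to.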

Meta-learning of textual representations

Jul 19, 2019

Abstract: Recent progress in AutoML has led to state-of-the-art methods (e.g., AutoSKLearn) that can be readily used by non-experts to approach any supervised learning problem. Whereas these methods are quite effective, they are still limited in the sense that they work for tabular (matrix formatted) data only. This paper describes one step forward in trying to automate the design of supervised learning methods in the context of text mining. We introduce a meta-learning methodology for automatically obtaining a representation for text mining tasks starting from raw text. We report experiments considering 60 different textual representations and more than 80 text mining datasets associated with a wide variety of tasks. Experimental results show the proposed methodology is a promising solution to obtain highly effective off-the-shelf text classification pipelines.
