Knowledge-Based Hierarchical POMDPs for Task Planning

Paper and Code

Apr 09, 2021

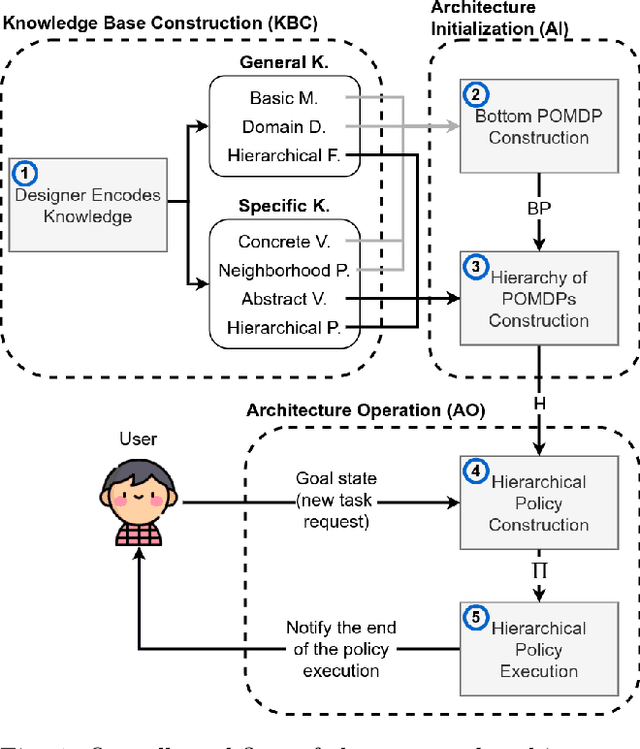

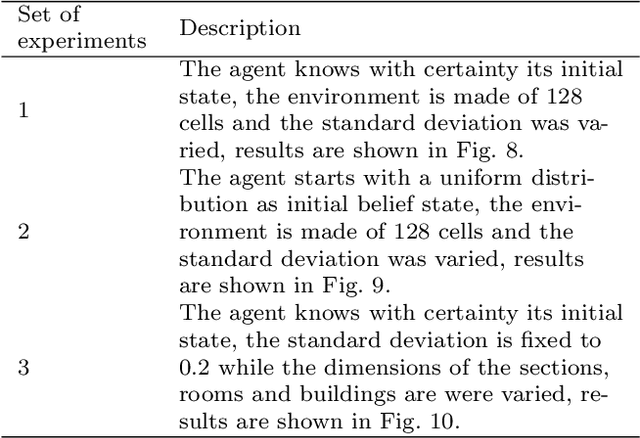

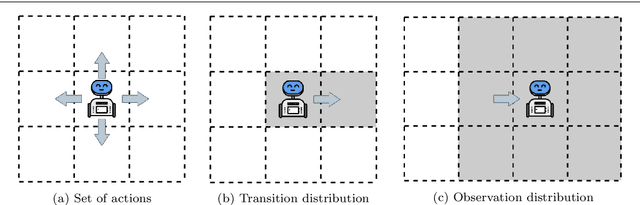

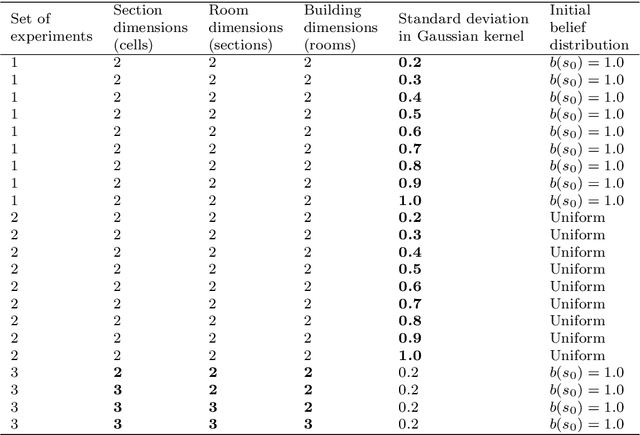

The main goal in task planning is to build a sequence of actions that takes an agent from an initial state to a goal state. In robotics, this is particularly difficult because actions usually have several possible results, and sensors are prone to produce measurements with error. Partially observable Markov decision processes (POMDPs) are commonly employed, thanks to their capacity to model the uncertainty of actions that modify and monitor the state of a system. However, since solving a POMDP is computationally expensive, their usage becomes prohibitive for most robotic applications. In this paper, we propose a task planning architecture for service robotics. In the context of service robot design, we present a scheme to encode knowledge about the robot and its environment, that promotes the modularity and reuse of information. Also, we introduce a new recursive definition of a POMDP that enables our architecture to autonomously build a hierarchy of POMDPs, so that it can be used to generate and execute plans that solve the task at hand. Experimental results show that, in comparison to baseline methods, by following a recursive hierarchical approach the architecture is able to significantly reduce the planning time, while maintaining (or even improving) the robustness under several scenarios that vary in uncertainty and size.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge