Eduardo Dadalto

Combine and Conquer: A Meta-Analysis on Data Shift and Out-of-Distribution Detection

Jun 23, 2024

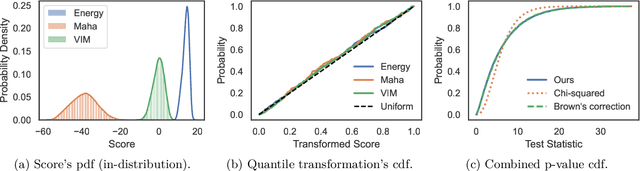

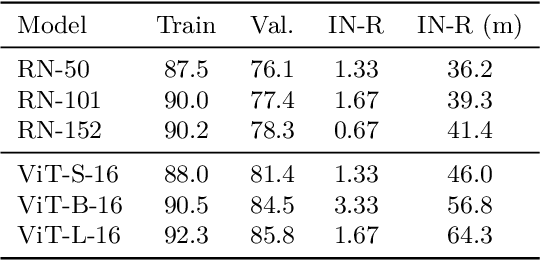

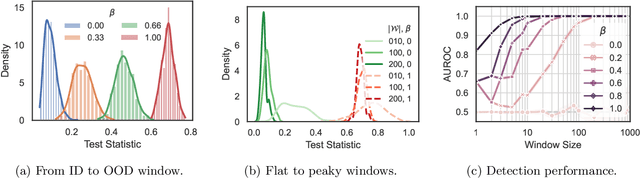

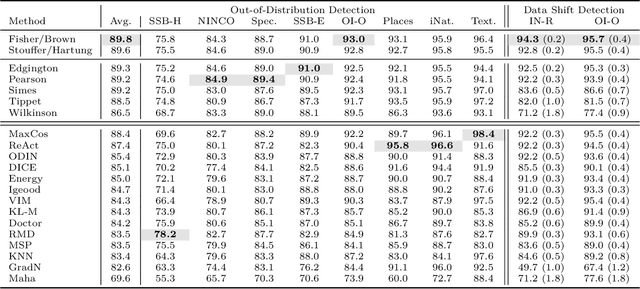

Abstract:This paper introduces a universal approach to seamlessly combine out-of-distribution (OOD) detection scores. These scores encompass a wide range of techniques that leverage the self-confidence of deep learning models and the anomalous behavior of features in the latent space. Not surprisingly, combining such a varied population using simple statistics proves inadequate. To overcome this challenge, we propose a quantile normalization to map these scores into p-values, effectively framing the problem into a multi-variate hypothesis test. Then, we combine these tests using established meta-analysis tools, resulting in a more effective detector with consolidated decision boundaries. Furthermore, we create a probabilistic interpretable criterion by mapping the final statistics into a distribution with known parameters. Through empirical investigation, we explore different types of shifts, each exerting varying degrees of impact on data. Our results demonstrate that our approach significantly improves overall robustness and performance across diverse OOD detection scenarios. Notably, our framework is easily extensible for future developments in detection scores and stands as the first to combine decision boundaries in this context. The code and artifacts associated with this work are publicly available\footnote{\url{https://github.com/edadaltocg/detectors}}.

A Functional Data Perspective and Baseline On Multi-Layer Out-of-Distribution Detection

Jun 06, 2023Abstract:A key feature of out-of-distribution (OOD) detection is to exploit a trained neural network by extracting statistical patterns and relationships through the multi-layer classifier to detect shifts in the expected input data distribution. Despite achieving solid results, several state-of-the-art methods rely on the penultimate or last layer outputs only, leaving behind valuable information for OOD detection. Methods that explore the multiple layers either require a special architecture or a supervised objective to do so. This work adopts an original approach based on a functional view of the network that exploits the sample's trajectories through the various layers and their statistical dependencies. It goes beyond multivariate features aggregation and introduces a baseline rooted in functional anomaly detection. In this new framework, OOD detection translates into detecting samples whose trajectories differ from the typical behavior characterized by the training set. We validate our method and empirically demonstrate its effectiveness in OOD detection compared to strong state-of-the-art baselines on computer vision benchmarks.

A Data-Driven Measure of Relative Uncertainty for Misclassification Detection

Jun 02, 2023Abstract:Misclassification detection is an important problem in machine learning, as it allows for the identification of instances where the model's predictions are unreliable. However, conventional uncertainty measures such as Shannon entropy do not provide an effective way to infer the real uncertainty associated with the model's predictions. In this paper, we introduce a novel data-driven measure of relative uncertainty to an observer for misclassification detection. By learning patterns in the distribution of soft-predictions, our uncertainty measure can identify misclassified samples based on the predicted class probabilities. Interestingly, according to the proposed measure, soft-predictions that correspond to misclassified instances can carry a large amount of uncertainty, even though they may have low Shannon entropy. We demonstrate empirical improvements over multiple image classification tasks, outperforming state-of-the-art misclassification detection methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge