Ebrahim Norouzi

ConExion: Concept Extraction with Large Language Models

Apr 22, 2025

Abstract: In this paper, an approach for concept extraction from documents using pre-trained large language models (LLMs) is presented. Compared with conventional methods that extract keyphrases summarizing the important information discussed in a document, our approach tackles a more challenging task of extracting all present concepts related to the specific domain, not just the important ones. Through comprehensive evaluations on two widely used benchmark datasets, we demonstrate that our method improves the F1 score compared to state-of-the-art techniques. Additionally, we explore the potential of using prompts within these models for unsupervised concept extraction. The extracted concepts are intended to support domain coverage evaluation of ontologies and facilitate ontology learning, highlighting the effectiveness of LLMs in concept extraction tasks. Our source code and datasets are publicly available at https://github.com/ISE-FIZKarlsruhe/concept_extraction.

Semantic Web and Creative AI -- A Technical Report from ISWS 2023

Jan 30, 2025

Abstract: The International Semantic Web Research School (ISWS) is a week-long intensive program designed to immerse participants in the field. This document reports a collaborative effort performed by ten teams of students attending ISWS 2023, each guided by a senior researcher as their mentor. Each team provided a different perspective on the topic of creative AI, substantiated by a set of research questions as the main subject of their investigation. The 2023 edition of ISWS focused on the intersection of Semantic Web technologies and creative AI. A key area of focus was the potential of LLMs as support tools for knowledge engineering. Participants also delved into the multifaceted applications of LLMs, including legal aspects of creative content production, humans in the loop, decentralised approaches to multimodal generative AI models, nanopublications and AI for personal scientific knowledge graphs, commonsense knowledge in automatic story and narrative completion, generative AI for art critique, prompt engineering, automatic music composition, commonsense prototyping and conceptual blending, and elicitation of tacit knowledge. As Large Language Models and semantic technologies continue to evolve, new exciting prospects are emerging: a future where the boundaries between creative expression and factual knowledge become increasingly permeable, leading to a world of knowledge that is both informative and inspiring.

The landscape of ontologies in materials science and engineering: A survey and evaluation

Aug 12, 2024

Abstract: Ontologies are widely used in materials science to describe experiments, processes, material properties, and experimental and computational workflows. Numerous online platforms are available for accessing and sharing ontologies in Materials Science and Engineering (MSE). Additionally, several surveys of these ontologies have been conducted. However, these studies often lack comprehensive analysis and quality control metrics. This paper provides an overview of ontologies used in Materials Science and Engineering to assist domain experts in selecting the most suitable ontology for a given purpose. Sixty selected ontologies are analyzed and compared based on the requirements outlined in this paper. Statistical data on ontology reuse and key metrics are also presented. The evaluation results provide valuable insights into the strengths and weaknesses of the investigated MSE ontologies. This enables domain experts to select suitable ontologies and to incorporate relevant terms from existing resources.

OAEI Machine Learning Dataset for Online Model Generation

Apr 29, 2024

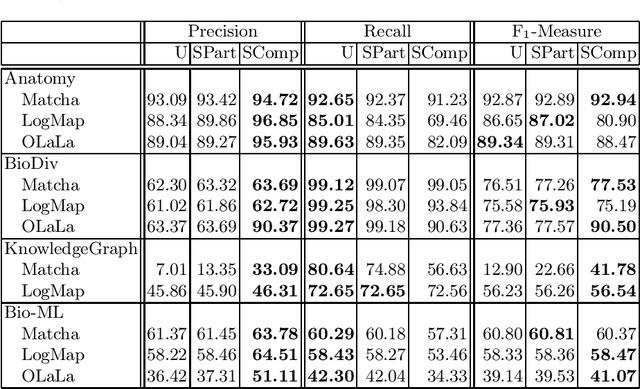

Abstract: Ontology and knowledge graph matching systems are evaluated annually by the Ontology Alignment Evaluation Initiative (OAEI). More and more systems use machine learning-based approaches, including large language models. The training and validation datasets are usually determined by the system developer, and often a subset of the reference alignments is used. This sampling is against the OAEI rules and makes a fair comparison impossible. Furthermore, those models are trained offline (a trained and optimized model is packaged into the matcher), and therefore the systems are specifically trained for those tasks. In this paper, we introduce a dataset that contains training, validation, and test sets for most of the OAEI tracks. Thus, online model learning (the systems must adapt to the given input alignment without human intervention) is made possible to enable a fair comparison for ML-based systems. We showcase the usefulness of the dataset by fine-tuning the confidence thresholds of popular systems.
