Dylan A. Shell

Limits of specifiability for sensor-based robotic planning tasks

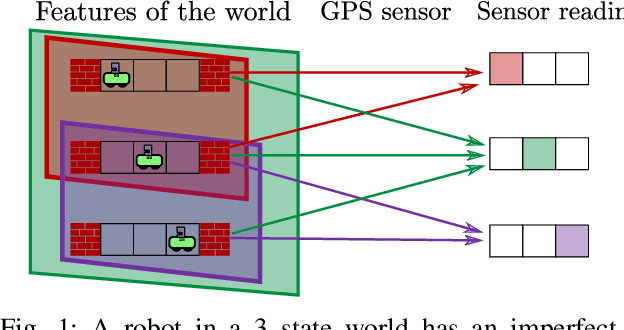

Mar 07, 2025

Abstract:There is now a large body of techniques, many based on formal methods, for describing and realizing complex robotics tasks, including those involving a variety of rich goals and time-extended behavior. This paper explores the limits of what sorts of tasks are specifiable, examining how the precise grounding of specifications, that is, whether the specification is given in terms of the robot's states, its actions and observations, its knowledge, or some other information, is crucial to whether a given task can be specified. While prior work included some description of particular choices for this grounding, our contribution treats this aspect as a first-class citizen: we introduce notation to deal with a large class of problems, and examine how the grounding affects what tasks can be posed. The results demonstrate that certain classes of tasks are specifiable under different combinations of groundings.

An abstract theory of sensor eventification

Jun 30, 2024

Abstract:Unlike traditional cameras, event cameras measure changes in light intensity and report differences. This paper examines the conditions necessary for other traditional sensors to admit eventified versions that provide adequate information despite outputting only changes. The requirements depend upon the regularity of the signal space, which we show may depend on several factors including structure arising from the interplay of the robot and its environment, the input-output computation needed to achieve its task, as well as the specific mode of access (synchronous, asynchronous, polled, triggered). Further, there are additional properties of stability (or non-oscillatory behavior) that can be desirable for a system to possess and that we show are also closely related to the preceding notions. This paper contributes theory and algorithms (plus a hardness result) that address these considerations while developing several elementary robot examples along the way.

* 21 pages, 14 figures
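As a generic illustration of "outputting only changes" (not the paper's construction), a sampled sensor can be eventified with a send-on-delta rule: report a reading only when it has moved at least a threshold away from the last reported value, and let the receiver hold the last value in between. The function names `eventify` and `reconstruct` and the threshold rule are assumptions made for this sketch.

```python
from typing import Iterable, List, Optional, Tuple


def eventify(samples: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Send-on-delta: report (index, value) only when the reading has moved at
    least `threshold` away from the last reported value."""
    events: List[Tuple[int, float]] = []
    last: Optional[float] = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) >= threshold:
            events.append((i, x))
            last = x
    return events


def reconstruct(events: List[Tuple[int, float]], length: int) -> List[Optional[float]]:
    """Zero-order hold: the receiver keeps the last reported value until a new event arrives."""
    by_index = dict(events)
    out: List[Optional[float]] = []
    current: Optional[float] = None
    for i in range(length):
        current = by_index.get(i, current)
        out.append(current)
    return out


samples = [0.0, 0.1, 0.5, 0.55, 1.2, 1.1]
events = eventify(samples, threshold=0.4)
print(events)  # [(0, 0.0), (2, 0.5), (4, 1.2)]
print(reconstruct(events, len(samples)))  # [0.0, 0.0, 0.5, 0.5, 1.2, 1.2]
```

Here every reconstructed value stays within the threshold of the true sample, so three events carry enough information for a task that tolerates that error; whether some eventified version is adequate for a given task and access mode is exactly the question the abstract raises.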

A Model for Optimal Resilient Planning Subject to Fallible Actuators

May 18, 2024

Abstract:Robots incurring component failures ought to adapt their behavior to best realize still-attainable goals under reduced capacity. We formulate the problem of planning with actuators known a priori to be susceptible to failure within the Markov Decision Processes (MDP) framework. The model captures utilization-driven malfunction and state-action dependent likelihoods of actuator failure in order to enable reasoning about potential impairment and the long-term implications of impoverished future control. This leads to behavior differing qualitatively from plans which ignore failure. As actuators malfunction, there are combinatorially many configurations which can arise. We identify opportunities to save computation through re-use, exploiting the observation that differing configurations yield closely related problems. Our results show how strategic solutions are obtained so robots can respond when failures do occur -- for instance, in prudently scheduling utilization in order to keep critical actuators in reserve.
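A toy version of the idea, assuming a hypothetical robot on a line of cells with a goal at cell `goal`: a "slow" action always advances one cell, while a "fast" actuator advances two cells but, on each use, breaks permanently with probability `p_fail` (so failure is driven by utilization). Folding actuator health into the state and running value iteration lets the plan weigh the risk of impaired future control. The state encoding, action names, and unit step costs are assumptions for this sketch, not the paper's model.

```python
from typing import Dict, Tuple

State = Tuple[int, bool]  # (position, fast actuator still working?)


def q_values(V: Dict[State, float], x: int, healthy: bool, goal: int, p_fail: float) -> Dict[str, float]:
    """Expected cost-to-go of each available action (unit cost per step)."""
    q = {"slow": 1.0 + V[(min(x + 1, goal), healthy)]}
    if healthy:
        # The fast actuator advances 2 cells, but with probability p_fail it
        # breaks permanently and the robot stays where it is.
        q["fast"] = 1.0 + (1 - p_fail) * V[(min(x + 2, goal), True)] + p_fail * V[(x, False)]
    return q


def solve(goal: int, p_fail: float, tol: float = 1e-9, max_iter: int = 10_000):
    """In-place value iteration over (position, health) states; the goal is absorbing."""
    states = [(x, h) for x in range(goal + 1) for h in (True, False)]
    V: Dict[State, float] = {s: 0.0 for s in states}
    for _ in range(max_iter):
        delta = 0.0
        for x, h in states:
            if x == goal:  # absorbing goal, zero cost
                continue
            best = min(q_values(V, x, h, goal, p_fail).values())
            delta = max(delta, abs(best - V[(x, h)]))
            V[(x, h)] = best
        if delta < tol:
            break
    policy: Dict[State, str] = {}
    for x, h in states:
        if x != goal:
            q = q_values(V, x, h, goal, p_fail)
            policy[(x, h)] = min(q, key=q.get)  # ties go to "slow" (inserted first)
    return V, policy


V0, pi0 = solve(goal=4, p_fail=0.0)
V1, pi1 = solve(goal=4, p_fail=1.0)
print(V0[(0, True)], pi0[(0, True)])  # 2.0 fast
print(V1[(0, True)], pi1[(0, True)])  # 4.0 slow
```

With a reliable actuator the plan uses it; when using it is certain to break it, the plan keeps it in reserve. Intermediate failure rates trade these off, and the number of health configurations grows combinatorially with more actuators, which is where the re-use described above becomes relevant.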

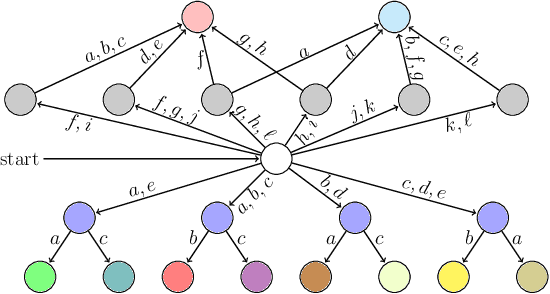

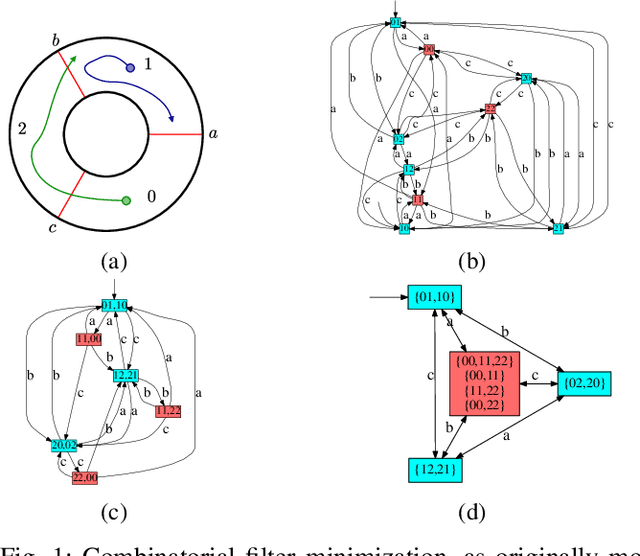

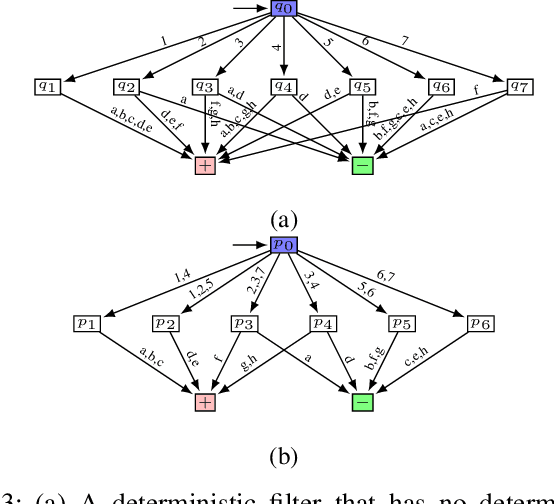

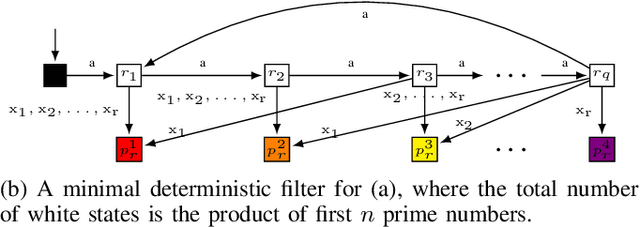

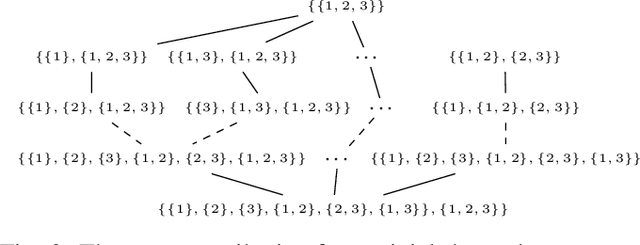

A fixed-parameter tractable algorithm for combinatorial filter reduction

Sep 22, 2023

Abstract:What is the minimal information that a robot must retain to achieve its task? To design economical robots, the literature dealing with reduction of combinatorial filters approaches this problem algorithmically. As lossless state compression is NP-hard, prior work has examined, along with minimization algorithms, a variety of special cases in which specific properties enable efficient solution. Complementing those findings, this paper refines the present understanding from the perspective of parameterized complexity. We give a fixed-parameter tractable algorithm for the general reduction problem by exploiting a transformation into minimal clique covering. The transformation introduces new constraints that arise from sequential dependencies encoded within the input filter -- some of these constraints can be repaired, others are treated through enumeration. Through this approach, we identify parameters affecting filter reduction that are based upon inter-constraint couplings (expressed as a notion of their height and width), which add to the structural parameters present in the unconstrained problem of minimal clique covering.
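The clique-cover view starts from a compatibility relation between filter states. A minimal sketch, assuming the usual notion that two states are compatible unless some observation sequence defined from both of them leads to different outputs: mark pairs with different outputs as incompatible, then propagate backwards through shared observations until nothing changes. The dictionaries `output` and `delta` are a hypothetical encoding of a deterministic filter; the constraint repair and enumeration steps of the paper's algorithm are not shown.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Hashable, Set, Tuple


def incompatible_pairs(output: Dict[Hashable, Hashable],
                       delta: Dict[Tuple[Hashable, Hashable], Hashable]) -> Set[FrozenSet]:
    """Pairs of states that some shared observation sequence drives to different outputs."""
    states = list(output)
    observations = {y for (_, y) in delta}
    bad = {frozenset((u, v)) for u, v in combinations(states, 2) if output[u] != output[v]}
    changed = True
    while changed:  # propagate backwards until a fixed point is reached
        changed = False
        for u, v in combinations(states, 2):
            pair = frozenset((u, v))
            if pair in bad:
                continue
            for y in observations:
                su, sv = delta.get((u, y)), delta.get((v, y))
                # Only observations defined at *both* states constrain the pair.
                if su is not None and sv is not None and frozenset((su, sv)) in bad:
                    bad.add(pair)
                    changed = True
                    break
    return bad


output = {"a": 0, "b": 0, "c": 1, "d": 0}
delta = {("a", "y"): "c", ("b", "y"): "d"}
bad = incompatible_pairs(output, delta)
compatible = [(u, v) for u, v in combinations(output, 2) if frozenset((u, v)) not in bad]
print(compatible)  # [('a', 'd'), ('b', 'd')]
```

States `a` and `b` share an output yet cannot be merged, because observation `y` sends them to states with different outputs. The remaining compatible pairs form the graph to be covered by cliques; merging states also forces their successors to be merged, which is the kind of sequential-dependency constraint the abstract describes.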

Sensor selection for fine-grained behavior verification that respects privacy (extended version)

Aug 01, 2023

Abstract:A useful capability is that of classifying some agent's behavior using data from a sequence, or trace, of sensor measurements. The sensor selection problem involves choosing a subset of available sensors to ensure that, when generated, observation traces will contain enough information to determine whether the agent's activities match some pattern. In generalizing prior work, this paper studies a formulation in which multiple behavioral itineraries may be supplied, with sensors selected to distinguish between behaviors. This allows one to pose fine-grained questions, e.g., to position the agent's activity on a spectrum. In addition, with multiple itineraries, one can also ask about choices of sensors where some behavior is always plausibly concealed by (or mistaken for) another. Using sensor ambiguity to limit the acquisition of knowledge is a strong privacy guarantee, a form of guarantee which some earlier work examined under formulations distinct from our inter-itinerary conflation approach. By concretely formulating privacy requirements for sensor selection, this paper connects both lines of work in a novel fashion: privacy (where there is a bound from above) and behavior verification (where sensor choices are bounded from below). We examine the worst-case computational complexity that results from both types of bounds, proving that upper bounds are more challenging under standard computational complexity assumptions. The problem is intractable in general, but we introduce an approach to solving this problem that can exploit interrelationships between constraints, and identify opportunities for optimizations. Case studies are presented to demonstrate the usefulness and scalability of our proposed solution, and to assess the impact of the optimizations.
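A brute-force sketch of the two kinds of bounds, under simplifying assumptions: behaviors are finite sets of region sequences, each sensor simply fires in a fixed set of regions, and the trace of a path is which chosen sensors fire at each step. "Distinguish" pairs must yield disjoint trace sets (the lower bound, for verification), while "conceal" pairs require every trace of the first behavior to be producible by the second (the upper bound, for privacy). The region names, sensors, and `select_sensors` are hypothetical; the paper's approach exploits constraint interrelationships rather than enumerating subsets.

```python
from itertools import combinations
from typing import Dict, List, Optional, Sequence, Set, Tuple

Path = Sequence[str]


def traces(paths: List[Path], sensors: Dict[str, Set[str]], chosen: Tuple[str, ...]) -> Set[tuple]:
    """Observation traces: at each step, which chosen sensors fire in the current region."""
    return {tuple(tuple(x in sensors[s] for s in chosen) for x in p) for p in paths}


def select_sensors(sensors, distinguish, conceal) -> Optional[Tuple[str, ...]]:
    """Smallest sensor subset (by brute force) meeting both kinds of requirement."""
    names = sorted(sensors)
    for k in range(len(names) + 1):
        for chosen in combinations(names, k):
            def tr(behavior):
                return traces(behavior, sensors, chosen)

            lower_ok = all(tr(a).isdisjoint(tr(b)) for a, b in distinguish)
            upper_ok = all(tr(c) <= tr(d) for c, d in conceal)
            if lower_ok and upper_ok:
                return chosen
    return None  # no subset satisfies both bounds


sensors = {
    "door": {"home"},
    "gps_shop": {"shop"},
    "gps_clinic": {"clinic"},
    "motion": {"shop", "clinic"},
}
errand = [["home", "shop", "home"]]
walk = [["home", "park", "home"]]
medical = [["home", "clinic", "home"]]
print(select_sensors(sensors, distinguish=[(errand, walk)], conceal=[(medical, errand)]))
# ('motion',)
```

The `gps_shop` sensor separates errands from walks but would expose clinic visits, whereas `motion` separates them while leaving a clinic visit indistinguishable from an errand. The enumeration is exponential in the number of sensors, consistent with the intractability noted above.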

Optimizing pre-scheduled, intermittently-observed MDPs

May 16, 2023

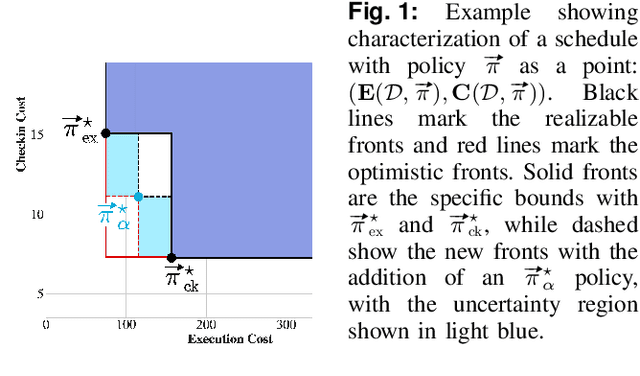

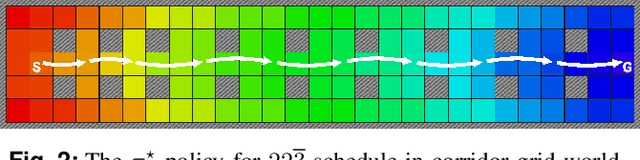

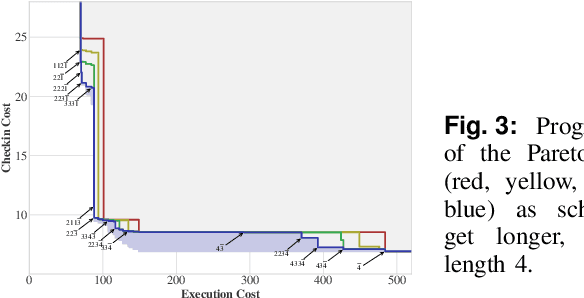

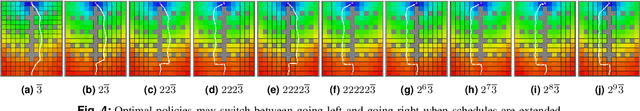

Abstract:A challenging category of robotics problems arises when sensing incurs substantial costs. This paper examines settings in which a robot wishes to limit its observations of state, for instance, motivated by specific considerations of energy management, stealth, or implicit coordination. We formulate the problem of planning under uncertainty when the robot's observations are intermittent but their timing is known via a pre-declared schedule. After having established the appropriate notion of an optimal policy for such settings, we tackle the problem of joint optimization of the cumulative execution cost and the number of state observations, both in expectation under discounts. To approach this multi-objective optimization problem, we introduce an algorithm that can identify the Pareto front for a class of schedules that are advantageous in the discounted setting. The algorithm proceeds in an accumulative fashion, prepending additions to a working set of schedules and then computing incremental changes to the value functions. Because full exhaustive construction becomes computationally prohibitive for moderate-sized problems, we propose a filtering approach to prune the working set. Empirical results demonstrate that this filtering is effective at reducing computation while incurring only negligible reduction in quality. In summarizing our findings, we provide some characterization of the run-time vs quality trade-off involved.
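The final step of a multi-objective search like this one is keeping only non-dominated schedules. A minimal sketch, assuming each candidate schedule has already been evaluated to an (expected cost, expected number of observations) pair, both to be minimized; the schedule labels and numbers below are made up for illustration and are not results from the paper. This shows only the dominance check, not the paper's accumulative construction or its filtering heuristic.

```python
from typing import List, Tuple

Schedule = Tuple[str, float, float]  # (label, expected cost, expected number of observations)


def pareto_front(candidates: List[Schedule]) -> List[Schedule]:
    """Keep schedules not dominated in (cost, observations); both are minimized."""
    front: List[Schedule] = []
    for label, cost, obs in sorted(candidates, key=lambda s: (s[1], s[2])):
        # After sorting by cost, a candidate survives only if it needs strictly
        # fewer observations than every schedule of lower or equal cost.
        if not front or obs < front[-1][2]:
            front.append((label, cost, obs))
    return front


candidates = [  # hypothetical values
    ("observe every step", 10.0, 9.0),
    ("every 2nd step", 11.0, 5.0),
    ("every 3rd step", 13.0, 3.5),
    ("irregular", 14.0, 5.0),
    ("never", 20.0, 0.0),
]
print([label for label, _, _ in pareto_front(candidates)])
# ['observe every step', 'every 2nd step', 'every 3rd step', 'never']
```

Sorting by cost first means one pass suffices: the last kept schedule always has the fewest observations seen so far, so "irregular" is discarded as dominated by "every 2nd step".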

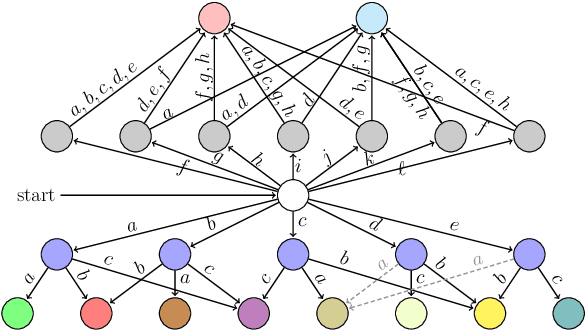

A general class of combinatorial filters that can be minimized efficiently

Sep 10, 2022

Abstract:State minimization of combinatorial filters is a fundamental problem that arises, for example, in building cheap, resource-efficient robots. But exact minimization is known to be NP-hard. This paper analyzes this hardness in a more nuanced way than prior work, and uncovers two factors which contribute to this complexity. We show each factor is a distinct source of the problem's hardness and are able, thereby, to shed some light on the role played by (1) structure of the graph that encodes compatibility relationships, and (2) determinism-enforcing constraints. Just as a line of prior work has sought to introduce additional assumptions and identify sub-classes that lead to practical state reduction, we next use this new, sharper understanding to explore special cases for which exact minimization is efficient. We introduce a new algorithm for constraint repair that applies to a large sub-class of filters, subsuming three distinct special cases for which the possibility of optimal minimization in polynomial time was known earlier. While the efficiency in each of these three cases appeared, previously, to stem from seemingly dissimilar properties, when seen through the lens of the present work, their commonality now becomes clear. We also provide entirely new families of filters that are efficiently reducible.

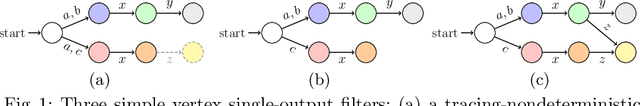

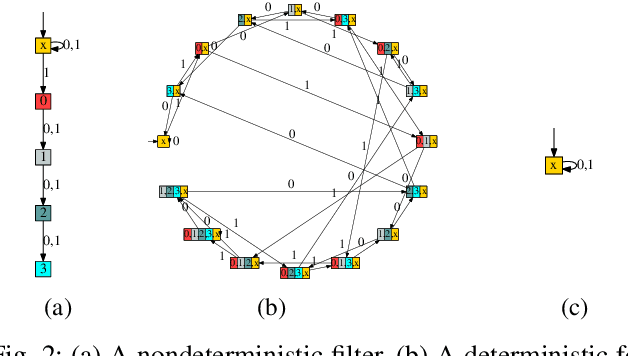

Nondeterminism subject to output commitment in combinatorial filters

Apr 01, 2022

Abstract:We study a class of filters -- discrete finite-state transition systems employed as incremental stream transducers -- that have application to robotics: e.g., to model combinatorial estimators and also as concise encodings of feedback plans/policies. The present paper examines their minimization problem under some new assumptions. Compared to strictly deterministic filters, allowing nondeterminism supplies opportunities for compression via re-use of states. But this paper suggests that the classic automata-theoretic concept of nondeterminism, though it affords said opportunities for reduction in state complexity, is problematic in many robotics settings. Instead, we argue for a new constrained type of nondeterminism that preserves input-output behavior for circumstances when, as for robots, causation forbids 'rewinding' of the world. We identify problem instances where compression under this constrained form of nondeterminism results in improvements over all deterministic filters. In this new setting, we examine computational complexity questions for the problem of reducing the state complexity of some given input filter. Hardness results are presented for general deterministic input filters, for checking specific, narrower requirements, and for some special cases. These results show that this class of nondeterminism gives problems of the same complexity class as classical nondeterminism, and the narrower questions help give a more nuanced understanding of the source of this complexity.
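To make "incremental stream transducer" concrete, here is a minimal sketch of a deterministic filter: it emits an output for its initial state and then one output per observation, and each emitted output is final, mirroring the causal setting in which the world cannot be rewound. The door-crossing estimator (states `out`/`in`, observations `cross`/`idle`) is a hypothetical example; the paper's constrained nondeterminism is not modeled here.

```python
from typing import Dict, Hashable, Iterable, Iterator, Tuple


def run_filter(start: Hashable,
               delta: Dict[Tuple[Hashable, Hashable], Hashable],
               output: Dict[Hashable, Hashable],
               stream: Iterable[Hashable]) -> Iterator[Hashable]:
    """Deterministic filter as an incremental stream transducer: emit one output
    for the initial state and one after each observation."""
    state = start
    yield output[state]
    for y in stream:
        state = delta[(state, y)]  # KeyError if the filter has no transition for y here
        yield output[state]


delta = {
    ("out", "cross"): "in", ("in", "cross"): "out",
    ("out", "idle"): "out", ("in", "idle"): "in",
}
output = {"out": "outside", "in": "inside"}
print(list(run_filter("out", delta, output, ["cross", "idle", "cross", "cross"])))
# ['outside', 'inside', 'inside', 'outside', 'inside']
```

Because the function is a generator, outputs are produced one observation at a time rather than after the whole stream has been read.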

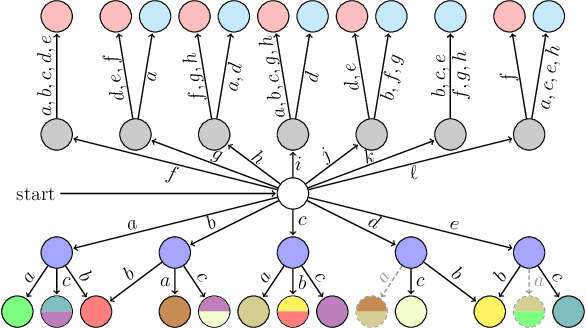

On nondeterminism in combinatorial filters

Jul 15, 2021

Abstract:The problem of combinatorial filter reduction arises from questions of resource optimization in robots; it is one specific way in which automation can help to achieve minimalism, to build better, simpler robots. This paper contributes a new definition of filter minimization that is broader than its antecedents, allowing filters (input, output, or both) to be nondeterministic. This changes the problem considerably. Nondeterministic filters are able to re-use states to obtain, essentially, more 'behavior' per vertex. We show that the gap in size can be significant (larger than polynomial), suggesting such cases will generally be more challenging than deterministic problems. Indeed, this is supported by the core computational complexity result established in this paper: producing nondeterministic minimizers is PSPACE-hard. The hardness separation for minimization which exists between deterministic filters and deterministic automata, thus, does not hold for the nondeterministic case.

Lattices of sensors reconsidered when less information is preferred

Jun 01, 2021

Abstract:To treat sensing limitations (with uncertainty in both conflation of information and noise) we model sensors as covers. This leads to a semilattice organization of abstract sensors that is appropriate even when additional information is problematic (e.g., for tasks involving privacy considerations).
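A small sketch of sensors as covers, under stated assumptions: each reading corresponds to the set of world states consistent with it, overlapping sets model noise or ambiguity, and one sensor is taken to be at least as informative as another when every one of its sets fits inside some set of the other. This is one natural ordering assumed for illustration; the paper's semilattice, and the direction in which less information is preferred, may be defined differently. The names `is_cover` and `refines` are hypothetical.

```python
from typing import FrozenSet, List, Set

Cover = List[FrozenSet[int]]


def is_cover(sets: Cover, universe: Set[int]) -> bool:
    """Every state must be consistent with at least one reading."""
    return set().union(*sets) == universe


def refines(a: Cover, b: Cover) -> bool:
    """True if sensor `a` is at least as informative as sensor `b`: every
    set of states in `a` fits inside some set of states in `b`."""
    return all(any(s <= t for t in b) for s in a)


U = {1, 2, 3, 4}
exact = [frozenset({i}) for i in U]
noisy = [frozenset({1, 2}), frozenset({2, 3}), frozenset({3, 4})]  # overlapping readings
coarse = [frozenset(U)]
print(is_cover(noisy, U), refines(exact, noisy), refines(noisy, exact), refines(noisy, coarse))
# True True False True
```

For a privacy-style requirement one would instead look for sensors that do not refine some reference cover, i.e., sensors guaranteed not to resolve states that must stay conflated.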
