Duarte Rondao

Explainable Convolutional Networks for Crater Detection and Lunar Landing Navigation

Aug 24, 2024

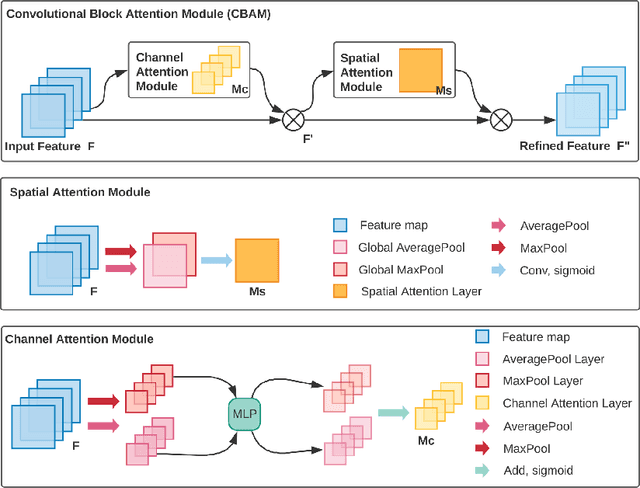

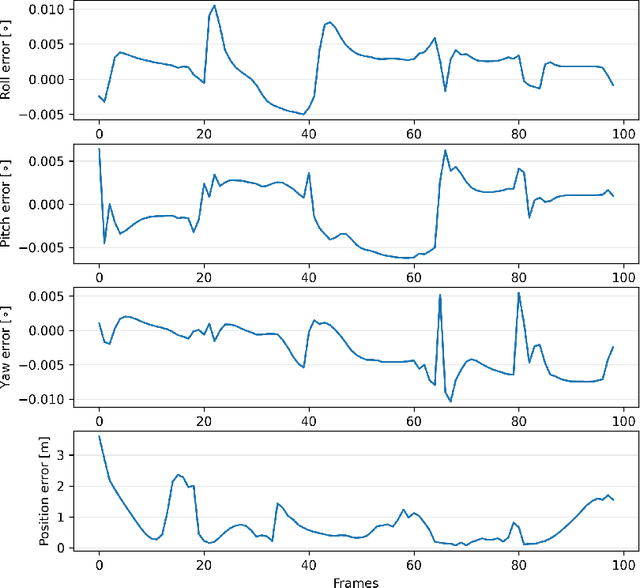

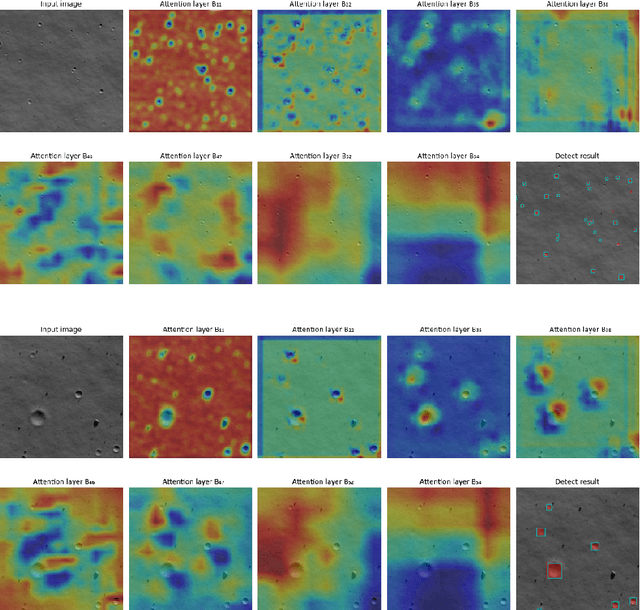

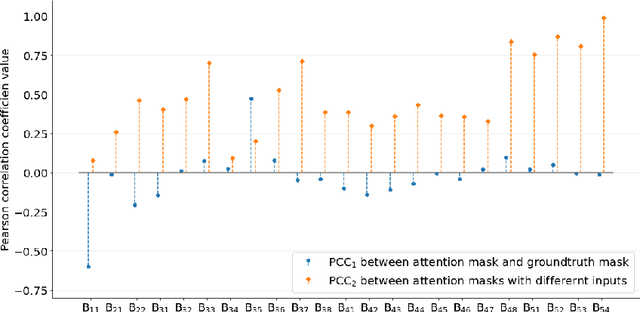

Abstract: Lunar landing has drawn great interest in lunar exploration in recent years, and autonomous lunar landing navigation is fundamental to this task. Artificial intelligence (AI) is expected to play a critical role in autonomous and intelligent space missions, yet human experts question the reliability of AI solutions. This paper therefore studies explainable AI (XAI) for vision-based lunar landing, aiming to provide transparent and understandable predictions for intelligent lunar landing. An attention-based Darknet53 is proposed as the feature extraction structure. For the crater detection and navigation tasks, an attention-based YOLOv3 and an attention-Darknet53-LSTM are presented, respectively. The experimental results show that the proposed networks provide competitive performance on crater detection and relative pose estimation during the lunar landing. The explainability of the proposed networks is achieved by introducing an attention mechanism into the network during model building. Moreover, the Pearson correlation coefficient (PCC) is used to quantitatively evaluate the explainability of the proposed networks, and the findings reveal the functions of the various convolutional layers in the network.
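
The abstract does not state which quantities the Pearson correlation coefficient (PCC) is computed between. The following is a minimal sketch. It assumes the PCC compares a layer's attention map with a reference map of the same size, with both maps flattened. Under that assumption, the coefficient is the covariance of the two maps divided by the product of their standard deviations. The names `attention` and `crater_mask` and their values are hypothetical:

```python
import math
from typing import List, Sequence


def pearson_cc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variances; the 1/n factors cancel in the ratio.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("PCC is undefined for a constant input")
    return cov / math.sqrt(var_x * var_y)


def flatten(grid: List[List[float]]) -> List[float]:
    """Flatten a 2-D map (list of rows) into a single list."""
    return [v for row in grid for v in row]


# Hypothetical example: a layer's attention map vs. a binary reference mask.
attention = [[0.1, 0.2], [0.7, 0.9]]
crater_mask = [[0.0, 0.0], [1.0, 1.0]]
score = pearson_cc(flatten(attention), flatten(crater_mask))
```

A value near +1 means the attention map rises and falls with the reference. A value near 0 means the two maps are unrelated.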

Orbital AI-based Autonomous Refuelling Solution

Sep 20, 2023

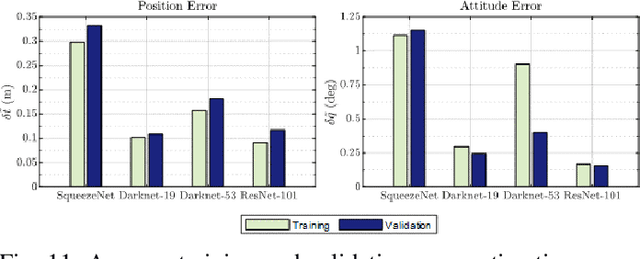

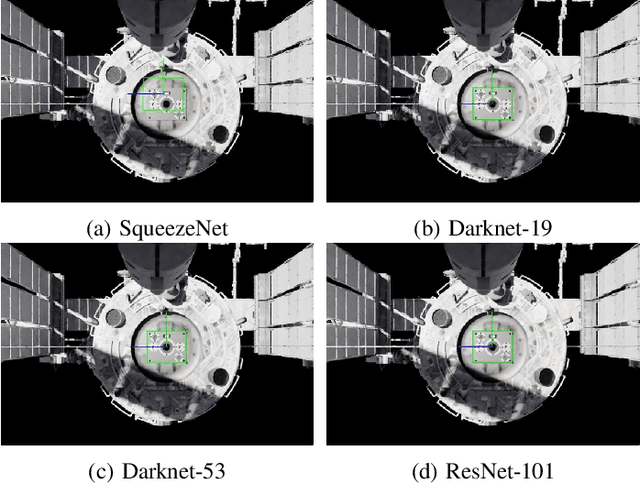

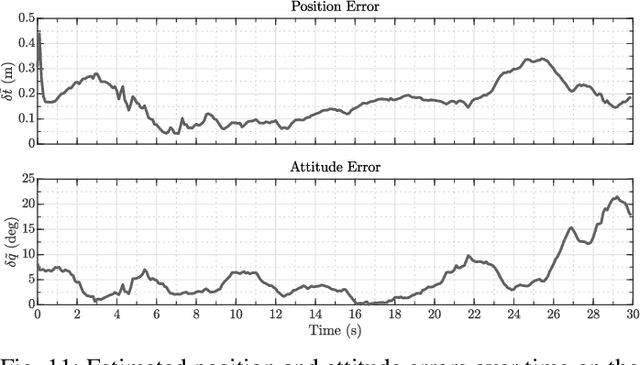

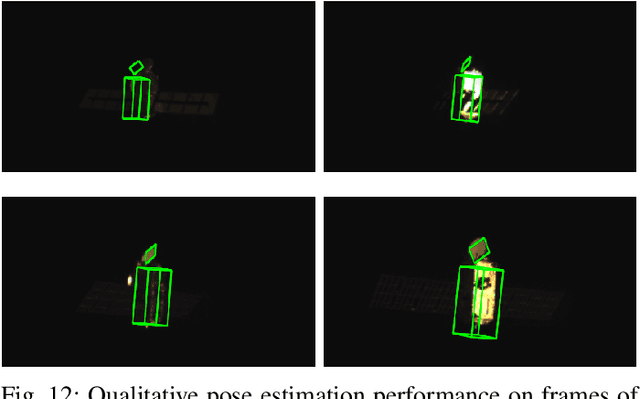

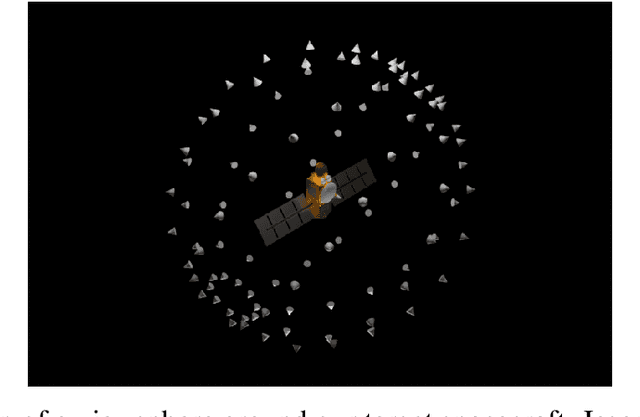

Abstract: Cameras are rapidly becoming the sensor of choice for on-board space rendezvous due to their small form factor and low power, mass, and volume costs. When it comes to docking, however, they typically serve a secondary role, while the main work is done by active sensors such as lidar. This paper documents the development of an AI-based (artificial intelligence) navigation algorithm intended to mature the use of on-board visible-wavelength cameras as the main sensor for docking and on-orbit servicing (OOS), reducing the dependency on lidar and greatly reducing costs. Specifically, the use of AI enables the relative navigation solution to be extended to multiple classes of scenarios, e.g., in terms of targets or illumination conditions, which would otherwise have to be handled case by case using classical image processing methods. Multiple convolutional neural network (CNN) backbone architectures are benchmarked on synthetically generated data of docking manoeuvres with the International Space Station (ISS), achieving range-normalised position errors close to 1% and attitude errors close to 1 deg. The integration of the solution with a physical prototype of the refuelling mechanism is validated in the laboratory using a robotic arm to simulate a berthing procedure.
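
The abstract reports range-normalised position errors of about 1% and attitude errors of about 1 deg, but does not define the metrics. A common convention is assumed here; the source does not confirm it. The position error is the Euclidean error divided by the true range. The attitude error is the angle of the relative rotation between the estimated and true quaternions. For example, a 1% range-normalised error at 100 m corresponds to 1 m. The function names and numbers are hypothetical:

```python
import math
from typing import List, Sequence


def position_error_pct(t_est: Sequence[float], t_true: Sequence[float]) -> float:
    """Euclidean position error as a percentage of the true range ||t_true||."""
    return 100.0 * math.dist(t_est, t_true) / math.hypot(*t_true)


def _normalise(q: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(c * c for c in q))
    return [c / n for c in q]


def attitude_error_deg(q_est: Sequence[float], q_true: Sequence[float]) -> float:
    """Angle in degrees of the rotation between two quaternions (w, x, y, z)."""
    dot = abs(sum(a * b for a, b in zip(_normalise(q_est), _normalise(q_true))))
    # abs() accounts for q and -q encoding the same attitude;
    # min() guards acos against round-off pushing |dot| slightly above 1.
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


# Hypothetical numbers: 1 m error at 100 m range, 90 deg rotation about z.
half = math.radians(45.0)
pos_err = position_error_pct([0.0, 0.0, 101.0], [0.0, 0.0, 100.0])
att_err = attitude_error_deg([math.cos(half), 0.0, 0.0, math.sin(half)], [1.0, 0.0, 0.0, 0.0])
```

Normalising by range makes errors comparable across the approach: a 1 m error matters far more at 10 m than at 100 m.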

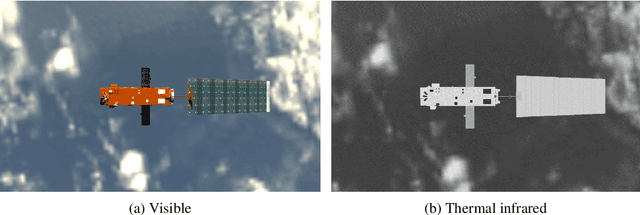

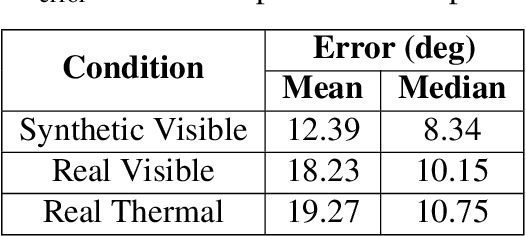

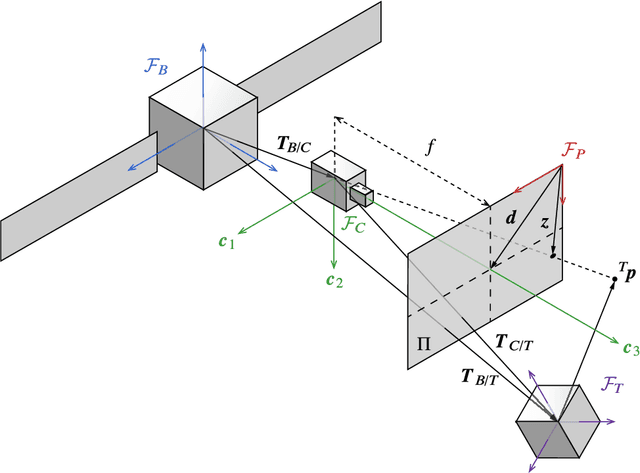

ChiNet: Deep Recurrent Convolutional Learning for Multimodal Spacecraft Pose Estimation

Aug 23, 2021

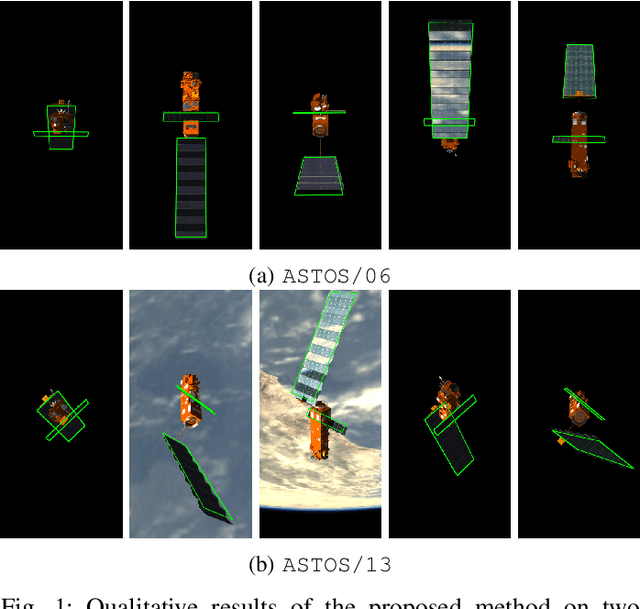

Abstract: This paper presents an innovative deep learning pipeline that estimates the relative pose of a spacecraft by incorporating the temporal information from a rendezvous sequence. It leverages the ability of long short-term memory (LSTM) units to model sequences of data in order to process the features extracted by a convolutional neural network (CNN) backbone. Three distinct training strategies, which follow a coarse-to-fine funnelled approach, are combined to facilitate feature learning and improve end-to-end pose estimation by regression. The capability of CNNs to learn feature representations from images autonomously is exploited to fuse thermal infrared data with red-green-blue (RGB) inputs, thus mitigating the effects of artefacts that arise when imaging space objects in the visible wavelength. Each contribution of the proposed framework, dubbed ChiNet, is demonstrated on a synthetic dataset, and the complete pipeline is validated on experimental data.
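
The abstract does not detail ChiNet's layer sizes or weights. The following is a generic sketch in pure Python with random, untrained weights and hypothetical sizes. Its core mechanism is an LSTM cell that consumes one CNN feature vector per frame. The cell carries a hidden state `h` and a cell state `c` across the rendezvous sequence. The regression layer that would map the final `h` to position and attitude is omitted:

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GATES = "ifgo"  # input, forget, candidate (g), output


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """Minimal LSTM cell; every gate sees the concatenation [x_t, h_{t-1}]."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        cols = input_size + hidden_size
        self.hidden_size = hidden_size
        # Random weights for illustration only; a real model would be trained.
        self.W: Dict[str, Matrix] = {
            k: [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(hidden_size)]
            for k in GATES
        }
        self.b: Dict[str, Vector] = {k: [0.0] * hidden_size for k in GATES}

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h  # list concatenation of input features and previous hidden state
        pre = {k: [s + b for s, b in zip(matvec(self.W[k], z), self.b[k])] for k in GATES}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        g = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        # Cell state: keep part of the old memory (f) and write new content (i * g).
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def run_sequence(cell: LSTMCell, features: List[Vector]) -> Vector:
    """Feed per-frame feature vectors through the cell and return the final h."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for x in features:
        h, c = cell.step(x, h, c)
    return h


# Hypothetical 3-frame sequence of 4-D feature vectors standing in for CNN outputs.
frames = [[0.2, -0.1, 0.5, 0.0], [0.3, 0.0, 0.4, 0.1], [0.1, 0.2, 0.6, -0.2]]
cell = LSTMCell(input_size=4, hidden_size=3)
h_final = run_sequence(cell, frames)
```

Because `h` is passed from step to step, the estimate for the last frame depends on the whole sequence rather than on a single image.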

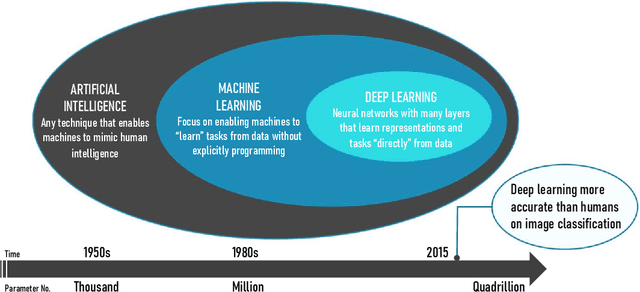

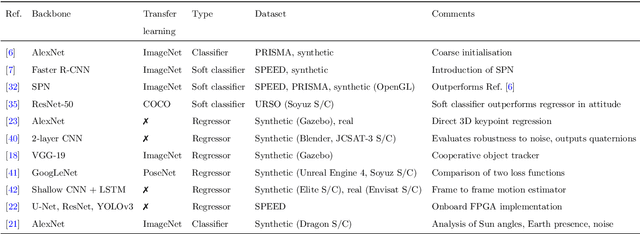

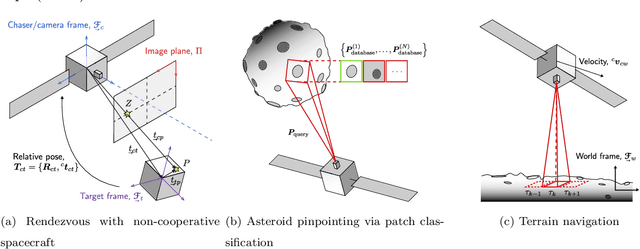

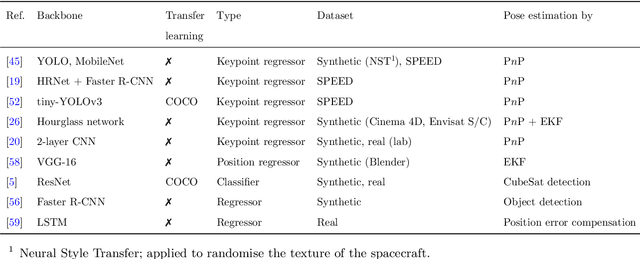

Deep Learning-based Spacecraft Relative Navigation Methods: A Survey

Aug 19, 2021

Abstract: Autonomous spacecraft relative navigation technology has been planned for and applied to many well-known space missions. The development of on-board electronic systems has enabled the use of vision-based and LiDAR-based methods to achieve better performance. Meanwhile, deep learning has achieved great success in different areas, especially in computer vision, which has also attracted the attention of space researchers. However, spacecraft navigation differs from ground-based tasks: it has high reliability requirements but lacks large datasets. This survey aims to systematically investigate current deep learning-based autonomous spacecraft relative navigation methods, focusing on concrete orbital applications such as spacecraft rendezvous and landing on small bodies or the Moon. The fundamental characteristics, primary motivations, and contributions of deep learning-based relative navigation algorithms are first summarised from three perspectives: spacecraft rendezvous, asteroid exploration, and terrain navigation. Furthermore, popular visual tracking benchmarks and their respective properties are compared and summarised. Finally, potential applications are discussed, along with expected impediments.

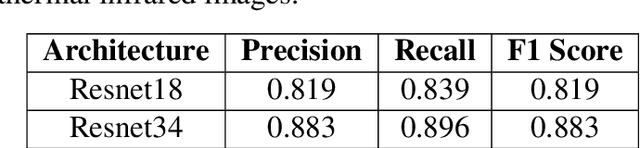

Using Convolutional Neural Networks for Relative Pose Estimation of a Non-Cooperative Spacecraft with Thermal Infrared Imagery

May 28, 2021

Abstract: Recent interest in on-orbit servicing and Active Debris Removal (ADR) missions has driven the need for technologies that enable non-cooperative rendezvous manoeuvres. Such manoeuvres place a heavy burden on the perception capabilities of a chaser spacecraft. This paper demonstrates Convolutional Neural Networks (CNNs) capable of providing an initial coarse pose estimate of a target from a passive thermal infrared camera feed. Thermal cameras offer a promising alternative to visible cameras, which struggle in low-light conditions and are susceptible to overexposure. Often, thermal information on the target is not available a priori; this paper therefore proposes using visible images to train the networks. The robustness of the models is demonstrated on two different targets, first on synthetic data and then in a laboratory environment for a realistic scenario that might be faced during an ADR mission. Given the concern over the use of CNNs in critical applications due to their black-box nature, we use innovative techniques to explain what is important to our network, as well as its fault conditions.
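
The abstract does not name the explanation techniques used. One widely used, model-agnostic option is occlusion sensitivity: mask one image region at a time and record how much the network's output drops. The sketch below illustrates that generic technique; it is not necessarily the authors' method. `toy_model` is a hypothetical stand-in for a trained CNN that scores a single-channel image:

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(model: Callable[[Image], float], image: Image,
                  patch: int = 2, fill: float = 0.0) -> Image:
    """Drop in model score when each patch x patch window is set to `fill`.

    Larger values mean the model's output depends more on that region.
    """
    rows, cols = len(image), len(image[0])
    base = model(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            drop = base - model(occluded)
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    heat[r][c] = drop
    return heat


def toy_model(img: Image) -> float:
    """Hypothetical 'network' that only responds to the top-left 2x2 region."""
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(toy_model, image, patch=2)
```

Regions whose occlusion causes a large drop are the ones the model relies on. Here only the top-left quadrant lights up, because `toy_model` looks nowhere else.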

Robust On-Manifold Optimization for Uncooperative Space Relative Navigation with a Single Camera

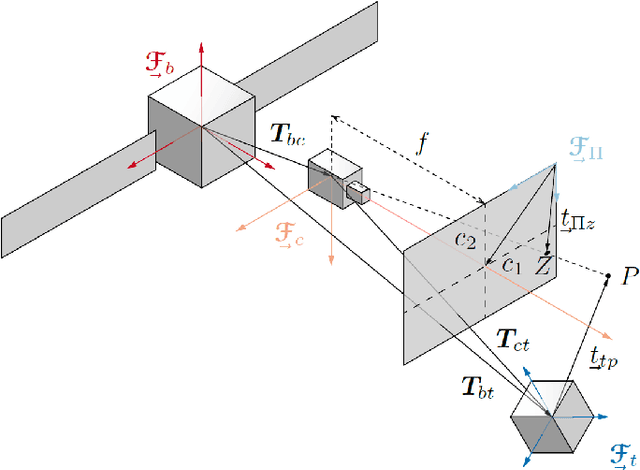

May 14, 2020

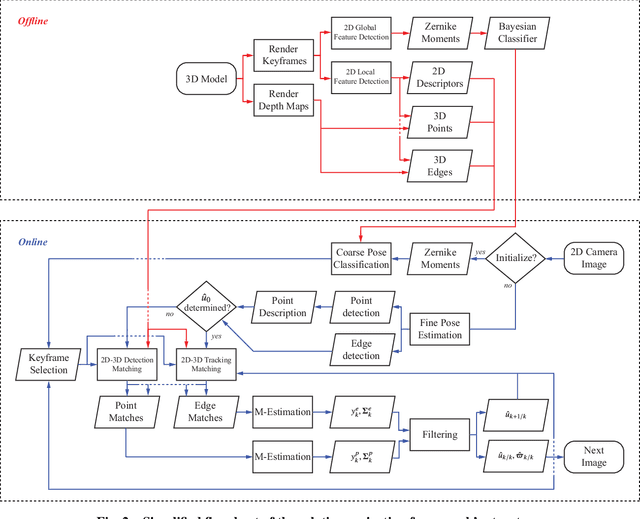

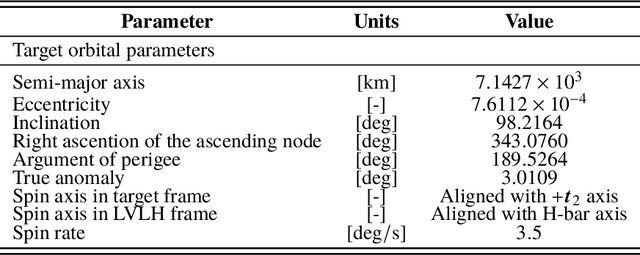

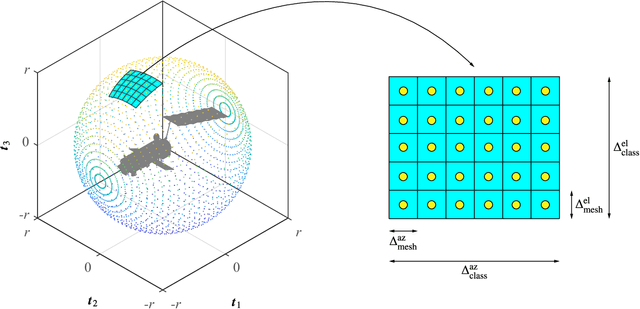

Abstract: Optical cameras are gaining popularity as a suitable sensor for relative navigation in space due to their attractive size, power, and cost properties compared with conventional flight hardware or costly laser-based systems. However, a camera cannot infer depth information on its own, a limitation often addressed by introducing complementary sensors or a second camera. In this paper, an innovative model-based approach is instead demonstrated that estimates the six-dimensional pose of a target object relative to the chaser spacecraft using only a monocular setup. The observed facet of the target is tackled as a classification problem, where the three-dimensional shape is learned offline using Gaussian mixture modeling. The estimate is refined by minimizing two different robust loss functions based on local feature correspondences. The resulting pseudo-measurements are then processed and fused with an extended Kalman filter. The entire optimization framework is designed to operate directly on the $SE(3)$ manifold, uncoupling the process and measurement models from the global attitude state representation. It is validated on realistic synthetic and laboratory datasets of a rendezvous trajectory with the complex spacecraft Envisat, and the results show that it estimates the relative pose with high accuracy over the target's full tumbling motion.
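
The abstract states that the framework operates directly on the $SE(3)$ manifold, uncoupling the filter models from the global attitude representation. The following is a minimal sketch of the rotational part of that idea. It is an assumption about the general approach; the paper's filter equations are not reproduced here. The filter estimates a small 3-vector correction $\delta\boldsymbol{\theta}$. The attitude is then updated through the SO(3) exponential map, $R^{+} = \exp([\delta\boldsymbol{\theta}]_\times)\,R$, evaluated with Rodrigues' formula. The helper names are hypothetical:

```python
import math
from typing import List, Sequence

Matrix3 = List[List[float]]
IDENTITY: Matrix3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def hat(w: Sequence[float]) -> Matrix3:
    """Skew-symmetric matrix [w]x, so that [w]x v equals the cross product w x v."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matmul(a: Matrix3, b: Matrix3) -> Matrix3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def so3_exp(w: Sequence[float]) -> Matrix3:
    """Rodrigues' formula: exp([w]x) for a rotation vector w in radians."""
    theta = math.sqrt(sum(c * c for c in w))
    if theta < 1e-12:
        # First-order approximation I + [w]x for a vanishingly small increment.
        K = hat(w)
        return [[IDENTITY[i][j] + K[i][j] for j in range(3)] for i in range(3)]
    K = hat([c / theta for c in w])  # skew matrix of the unit rotation axis
    K2 = matmul(K, K)
    s, v = math.sin(theta), 1.0 - math.cos(theta)
    return [[IDENTITY[i][j] + s * K[i][j] + v * K2[i][j] for j in range(3)] for i in range(3)]


def retract(R: Matrix3, delta: Sequence[float]) -> Matrix3:
    """On-manifold attitude update R+ = exp([delta]x) R with a 3-vector correction."""
    return matmul(so3_exp(delta), R)


Rz = so3_exp([0.0, 0.0, math.pi / 2])          # 90 deg about z
R_new = retract(Rz, [0.0, 0.0, -math.pi / 2])  # undo it with a 3-vector correction
```

The correction is always a 3-vector, so in this formulation the attitude block of the filter covariance stays 3x3. This holds whether the global attitude is stored as a rotation matrix or as a quaternion.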
