Christophe Honvault

Explainable Convolutional Networks for Crater Detection and Lunar Landing Navigation

Aug 24, 2024

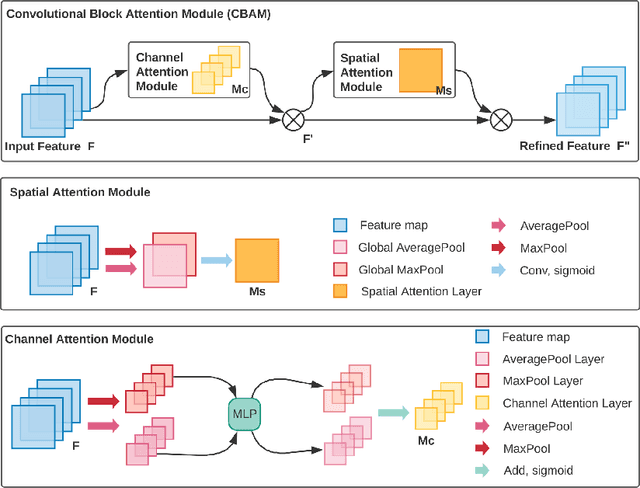

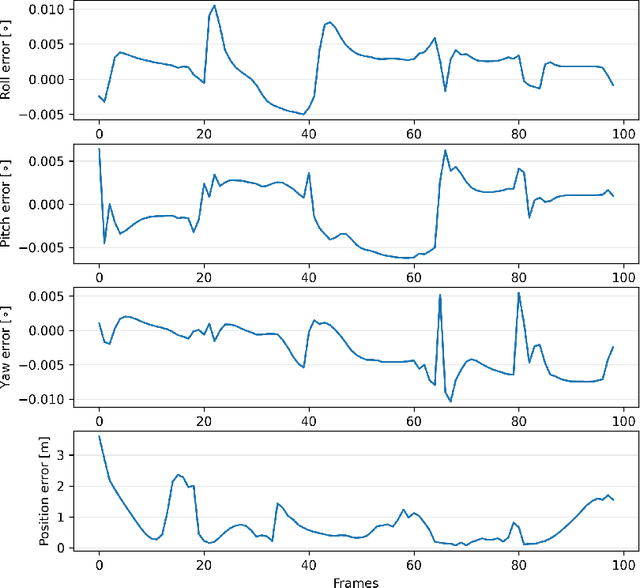

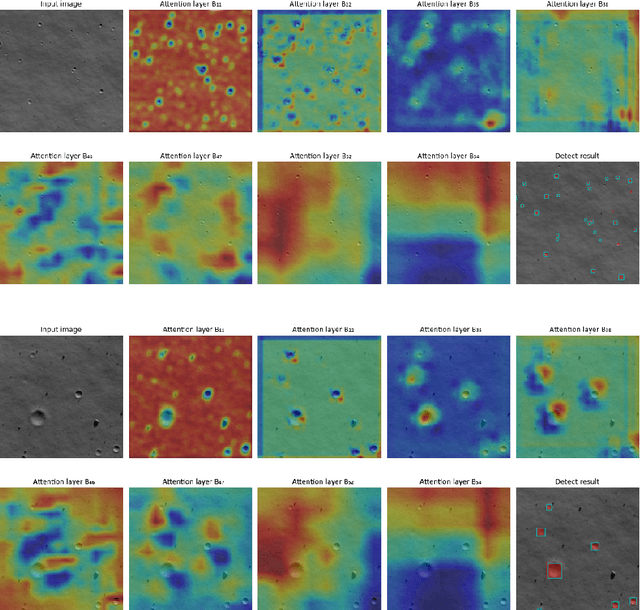

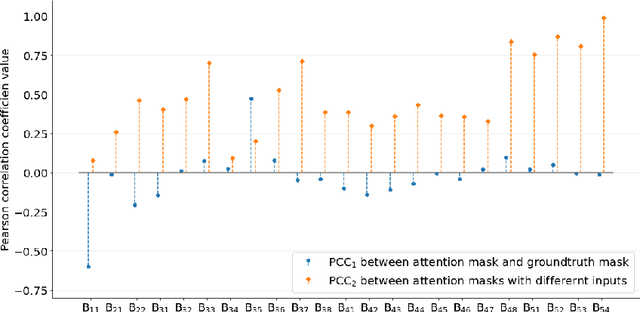

Abstract: Lunar landing has drawn great interest in lunar exploration in recent years, and autonomous lunar landing navigation is fundamental to this task. AI is expected to play a critical role in autonomous and intelligent space missions, yet human experts question the reliability of AI solutions. This paper therefore studies explainable artificial intelligence (XAI) for vision-based lunar landing, aiming to provide transparent and understandable predictions for intelligent lunar landing. An attention-based Darknet53 is proposed as the feature extraction structure. For the crater detection and navigation tasks, an attention-based YOLOv3 and an attention-Darknet53-LSTM are presented, respectively. The experimental results show that the proposed networks provide competitive performance on relative crater detection and pose estimation during lunar landing. Explainability is achieved by introducing an attention mechanism into the networks during model building. Moreover, the Pearson correlation coefficient (PCC) is used to quantitatively evaluate the explainability of the proposed networks, with the findings showing the functions of the various convolutional layers in the network.
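To make the PCC-based evaluation mentioned in the abstract concrete, here is a minimal sketch, assuming PCC denotes the Pearson correlation coefficient and that it is computed between two equally sized 2D maps. The abstract does not say which maps are compared, so the names `attention_map` and `feature_map`, the random data, and the helper `pearson_cc` are all hypothetical.

```python
import numpy as np


def pearson_cc(a, b):
    """Pearson correlation coefficient between two arrays of equal size.

    Hypothetical helper: flattens both inputs and returns a value in [-1, 1].
    Returns 0.0 when either input is constant (correlation undefined).
    """
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    a_c = a - a.mean()
    b_c = b - b.mean()
    denom = np.sqrt((a_c ** 2).sum() * (b_c ** 2).sum())
    if denom == 0.0:
        return 0.0
    return float((a_c * b_c).sum() / denom)


# Toy data standing in for real network outputs (assumption, not from the paper).
rng = np.random.default_rng(0)
attention_map = rng.random((8, 8))
feature_map = 2.0 * attention_map + 1.0   # perfectly linearly related map

pcc_linear = pearson_cc(attention_map, feature_map)       # close to +1
pcc_inverted = pearson_cc(attention_map, -attention_map)  # close to -1
```

A value near +1 would indicate that a layer's response closely tracks the attention map, a value near 0 that it does not; how the authors interpret the scores per layer is described in the paper itself.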

Robust Adversarial Attacks Detection for Deep Learning based Relative Pose Estimation for Space Rendezvous

Nov 10, 2023

Abstract: Research on deep learning techniques for autonomous spacecraft relative navigation has grown continuously in recent years. Adopting these techniques offers enhanced performance. However, such approaches also raise concerns about the trustworthiness and security of deep learning methods because of their susceptibility to adversarial attacks. In this work, we propose a novel approach, based on the explainability concept, for detecting adversarial attacks on deep neural network-based relative pose estimation schemes. For an orbital rendezvous scenario, we develop an innovative relative pose estimation technique using our proposed Convolutional Neural Network (CNN), which takes an image from the chaser's onboard camera and accurately outputs the target's relative position and rotation. We seamlessly perturb the input images using adversarial attacks generated by the Fast Gradient Sign Method (FGSM). The adversarial attack detector is then built on a Long Short-Term Memory (LSTM) network, which takes an explainability measure, namely Shapley values, from the CNN-based pose estimator and flags adversarial attacks when they occur. Simulation results show that the proposed adversarial attack detector achieves a detection accuracy of 99.21%. Both the deep relative pose estimator and the adversarial attack detector are then tested on real data captured from our laboratory-designed setup. These experimental results demonstrate that the proposed adversarial attack detector achieves an average detection accuracy of 96.29%.
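As an illustration of the FGSM perturbation named in the abstract, the following is a minimal sketch rather than the authors' implementation. FGSM adds `epsilon * sign(gradient of the loss with respect to the input)` to the input. The toy linear regressor `W`, the squared-error loss, the 16-pixel "image", and the value of `epsilon` are assumptions chosen so the gradient can be written analytically; the paper's estimator is a CNN.

```python
import numpy as np


def fgsm_perturb(x, grad, epsilon, lo=0.0, hi=1.0):
    """Fast Gradient Sign Method step: move each input component by
    epsilon in the direction that increases the loss, then clip to
    the valid pixel range [lo, hi]."""
    return np.clip(x + epsilon * np.sign(grad), lo, hi)


# Toy stand-in for a pose regressor (assumption): 16 pixels -> 6 pose values.
rng = np.random.default_rng(0)
W = rng.normal(size=(6, 16))
x = rng.random(16)            # "image" with pixel values in [0, 1)
y = rng.normal(size=6)        # "ground-truth" pose


def loss(inp):
    """Squared-error loss 0.5 * ||W @ inp - y||^2."""
    r = W @ inp - y
    return 0.5 * float(r @ r)


# Analytic gradient of the loss with respect to the input.
grad = W.T @ (W @ x - y)

epsilon = 0.05                # hypothetical perturbation budget
x_adv = fgsm_perturb(x, grad, epsilon)

loss_clean = loss(x)
loss_adv = loss(x_adv)        # not smaller than loss_clean for this convex loss
```

Each pixel changes by at most `epsilon`, which is why such perturbations can be hard to see while still degrading the estimate. With a real CNN the gradient would come from automatic differentiation instead of the closed form used here.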
