Douglas A. Talbert

Investigating bankruptcy prediction models in the presence of extreme class imbalance and multiple stages of economy

Nov 22, 2019

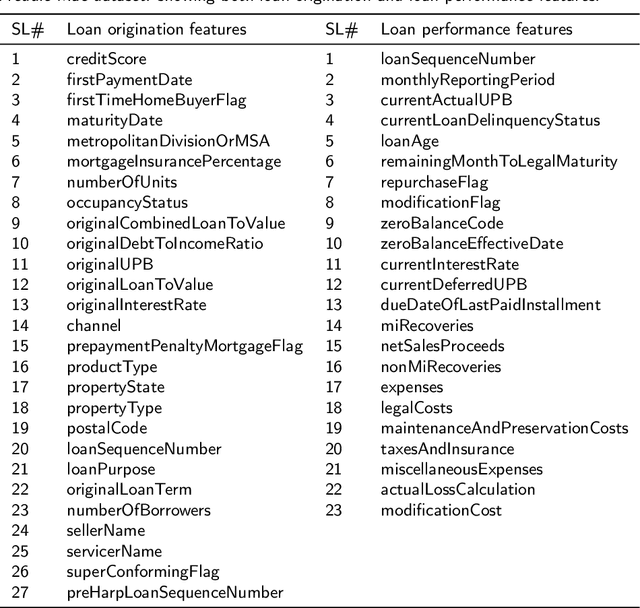

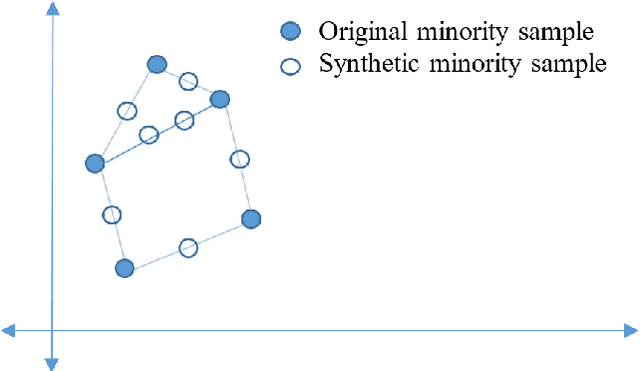

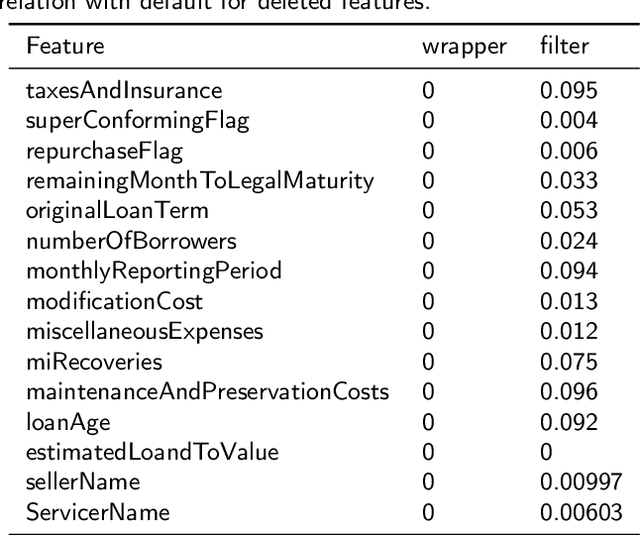

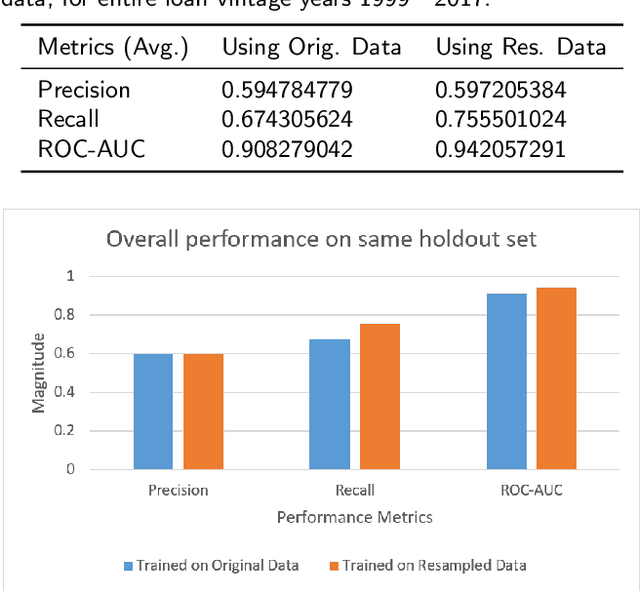

Abstract: In credit risk analytics, current Bankruptcy Prediction Models (BPMs) struggle with (a) the limited availability of comprehensive, real-world datasets and (b) extreme class imbalance in the data (i.e., very few samples for the minority class), which degrades the performance of the prediction model. Moreover, little research has compared the relative performance of well-known BPMs on public datasets while addressing the class imbalance problem. In this work, we apply eight classes of well-known BPMs, as suggested by a review of decades of literature, to a new public dataset, the Freddie Mac Single-Family Loan-Level Dataset, and resample the minority class (i.e., add synthetic minority samples) to tackle class imbalance. We also apply some recent AI techniques (e.g., tree-based ensemble techniques) that demonstrate potentially better results on models trained with resampled data. In addition, from the analysis of 19 years (1999-2017) of data, we find that models behave differently when presented with sudden changes in the economy (e.g., a global financial crisis) that cause abrupt fluctuations in the national default rate. In summary, this study should help practitioners and researchers choose an appropriate model for data that contains class imbalance and spans various economic stages.
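To illustrate what "adding synthetic minority samples" can look like, here is a minimal sketch that assumes SMOTE-style interpolation between a minority sample and one of its nearest minority neighbours. The abstract does not name the resampling method used, so the technique, the function name `oversample_minority`, its parameters, and the toy data are all assumptions made for illustration.

```python
import random

def oversample_minority(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours (SMOTE-style).
    Hypothetical helper, not the paper's implementation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared distance to the base point.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbour)))
    return synthetic

# Toy minority class (e.g., defaulted loans) with two made-up features.
minority = [(1.0, 2.0), (1.5, 1.8), (1.2, 2.4), (0.9, 2.1)]
new_points = oversample_minority(minority, n_new=6)
print(len(minority) + len(new_points), "minority samples after resampling")
```

Each synthetic point lies on the line segment between two real minority samples, so resampling enlarges the minority class without simply duplicating rows. In practice, only the training split would be resampled, so that evaluation still reflects the original class ratio.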

Mimic Learning to Generate a Shareable Network Intrusion Detection Model

May 02, 2019

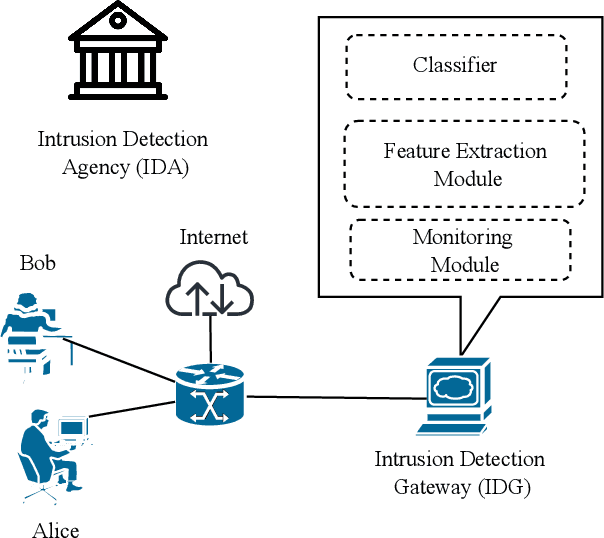

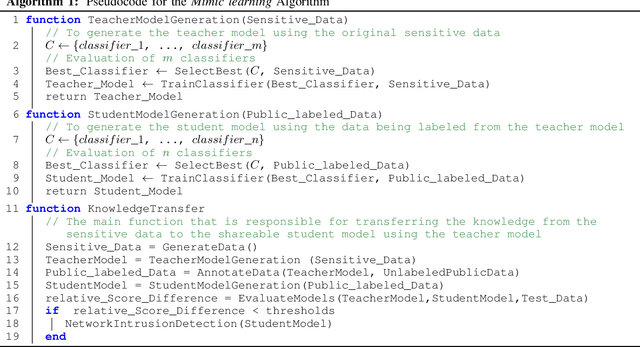

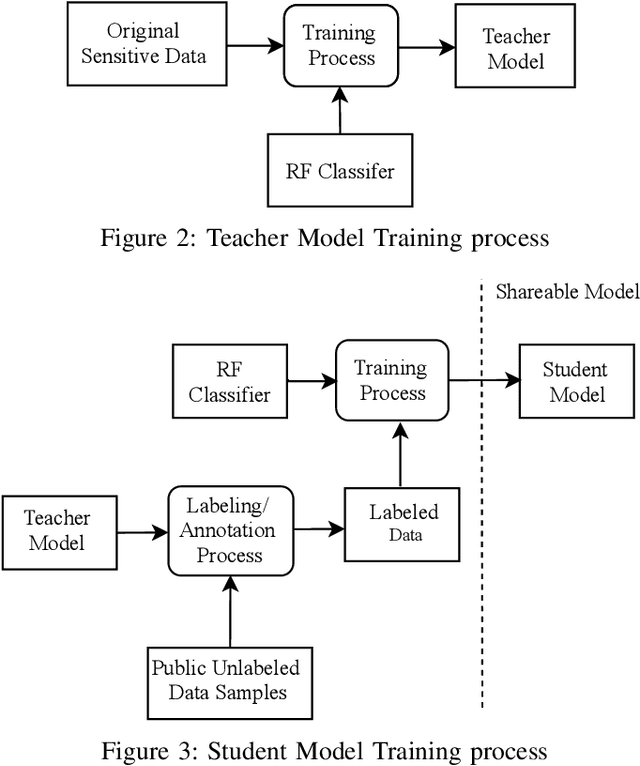

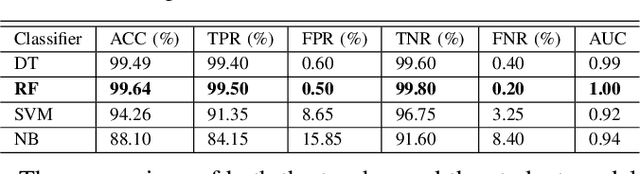

Abstract: Purveyors of malicious network attacks continue to increase the complexity and sophistication of their techniques, and their ability to evade detection continues to improve as well. Hence, intrusion detection systems must also evolve to meet these increasingly challenging threats. Machine learning is often used to support this needed improvement. However, training a good prediction model can require a large set of labelled training data. Such datasets are difficult to obtain because privacy concerns prevent the majority of intrusion detection agencies from sharing their sensitive data. In this paper, we propose the use of mimic learning to transfer intrusion detection knowledge from a teacher model trained on private data to a student model. This student model provides a means of publicly sharing knowledge extracted from private data without sharing the data itself. Our results confirm that the proposed scheme can produce a student intrusion detection model that mimics the teacher model without requiring access to the original dataset.
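The teacher-student transfer described above can be sketched as follows. This is a toy illustration under stated assumptions: both models are simple nearest-centroid classifiers, the data are synthetic two-dimensional Gaussian clusters, and all names (`train_centroids`, `predict`, `draw`) are hypothetical. The abstract does not specify which model families or datasets the paper uses.

```python
import random

def train_centroids(samples, labels):
    """Nearest-centroid classifier: store one mean vector per class."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))

rng = random.Random(0)

def draw(center, n):
    """Sample n synthetic 2-D points around a centre (made-up data)."""
    return [(rng.gauss(center[0], 0.5), rng.gauss(center[1], 0.5)) for _ in range(n)]

# Private labelled data stays with its owner: 0 = benign, 1 = attack.
private_x = draw((0, 0), 50) + draw((3, 3), 50)
private_y = [0] * 50 + [1] * 50
teacher = train_centroids(private_x, private_y)

# Public unlabelled data receives labels only from the teacher's predictions.
public_x = draw((0, 0), 50) + draw((3, 3), 50)
public_y = [predict(teacher, x) for x in public_x]

# The student is trained without ever seeing private_x or private_y.
student = train_centroids(public_x, public_y)

# Measure how often the student reproduces the teacher's decisions.
test_x = draw((0, 0), 100) + draw((3, 3), 100)
agreement = sum(predict(teacher, x) == predict(student, x) for x in test_x) / len(test_x)
print(f"student/teacher agreement: {agreement:.2f}")
```

Only the student and the teacher-labelled public data would be shared. The agreement rate is one simple way to check how closely the student mimics the teacher.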
