Mohamed Baza

On Sharing Models Instead of Data using Mimic learning for Smart Health Applications

Dec 24, 2019

Abstract: Electronic health record (EHR) systems contain vast amounts of medical information about patients. These data can be used to train machine learning models that predict health status and help prevent future diseases or disabilities. However, obtaining patients' medical data to train such models is a challenging task, because sharing patients' medical records is prohibited by law in most countries due to patient privacy concerns. In this paper, we tackle this problem by sharing the models instead of the original sensitive data, using the mimic learning approach. The idea is first to train a model, called the teacher model, on the original sensitive data. The teacher's knowledge is then transferred to another model, called the student model, which never needs access to the original data used to train the teacher. The student model is then shared with the public and can be used to make accurate predictions. To assess the mimic learning approach, we have evaluated our scheme using different medical datasets. The results indicate that the student model mimics the teacher model's performance in terms of prediction accuracy without needing access to the patients' original data records.
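The teacher/student transfer described in the abstract can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the data are synthetic 2-D clusters, the teacher is a 1-nearest-neighbour classifier (which must keep the private records to make predictions), the student is a nearest-centroid classifier, and knowledge is transferred by having the teacher label a separate pool of unlabeled public points. The function names are hypothetical.

```python
import random

def sample(rng, label):
    """Draw one synthetic 2-D point for class `label` (0 or 1). Illustrative data only."""
    center = 4.0 * label
    return (rng.gauss(center, 1.0), rng.gauss(center, 1.0))

def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def teacher_predict(private_x, private_y, x):
    """Teacher: 1-nearest-neighbour over the private records, so it cannot be shared."""
    nearest = min(range(len(private_x)), key=lambda i: sq_dist(private_x[i], x))
    return private_y[nearest]

def fit_student(public_x, teacher_labels):
    """Student: one centroid per class, fitted only on teacher-labelled public points."""
    centroids = {}
    for label in set(teacher_labels):
        members = [x for x, y in zip(public_x, teacher_labels) if y == label]
        centroids[label] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids

def student_predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: sq_dist(centroids[label], x))

rng = random.Random(0)

# Private, labelled data: only the teacher ever sees it.
private_y = [i % 2 for i in range(200)]
private_x = [sample(rng, y) for y in private_y]

# Public, unlabelled data (assumed to be available): labelled by the teacher.
public_x = [sample(rng, i % 2) for i in range(200)]
teacher_labels = [teacher_predict(private_x, private_y, x) for x in public_x]
student = fit_student(public_x, teacher_labels)

# Held-out data to compare the two models.
test_y = [i % 2 for i in range(200)]
test_x = [sample(rng, y) for y in test_y]
teacher_out = [teacher_predict(private_x, private_y, x) for x in test_x]
student_out = [student_predict(student, x) for x in test_x]

teacher_acc = sum(p == y for p, y in zip(teacher_out, test_y)) / len(test_y)
student_acc = sum(p == y for p, y in zip(student_out, test_y)) / len(test_y)
agreement = sum(s == t for s, t in zip(student_out, teacher_out)) / len(test_y)
print(f"teacher acc={teacher_acc:.3f} student acc={student_acc:.3f} agreement={agreement:.3f}")
```

In this sketch the shared artifact is `student`, which holds only two class centroids computed from public points, whereas the teacher needs `private_x` and `private_y` at prediction time. The `agreement` figure is one simple way to quantify how closely the student mimics the teacher.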

Mimic Learning to Generate a Shareable Network Intrusion Detection Model

May 02, 2019

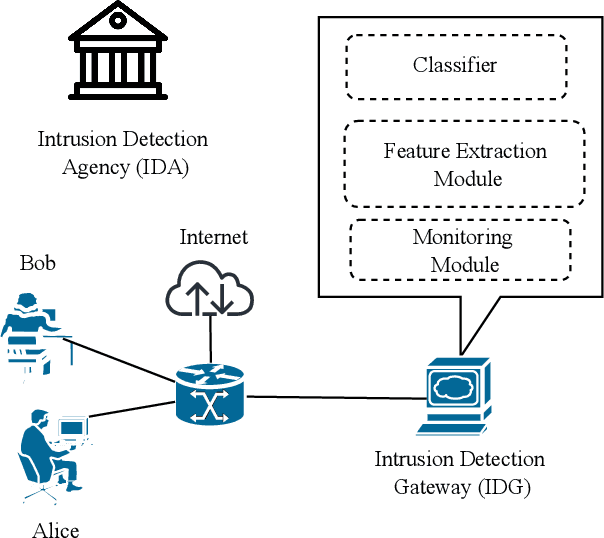

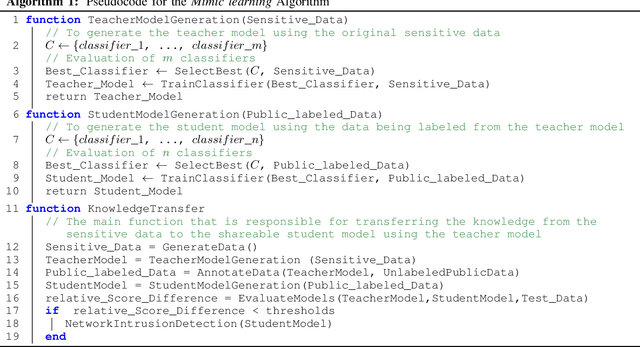

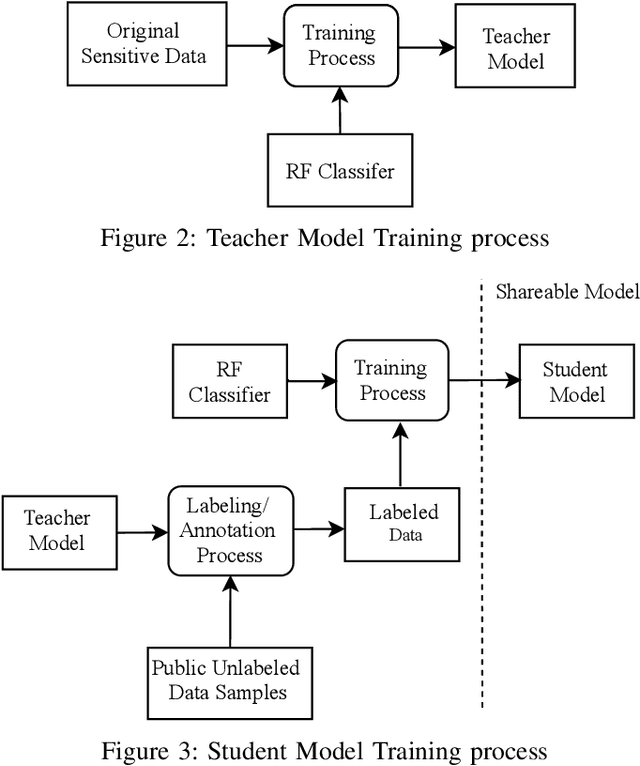

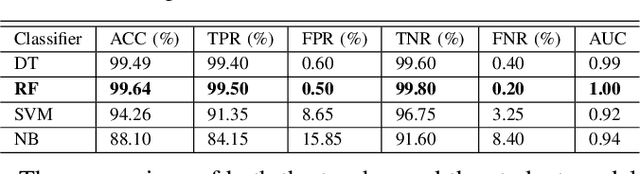

Abstract: Purveyors of malicious network attacks continue to increase the complexity and sophistication of their techniques, and their ability to evade detection continues to improve as well. Hence, intrusion detection systems must also evolve to meet these increasingly challenging threats. Machine learning is often used to support this needed improvement. However, training a good prediction model can require a large set of labelled training data. Such datasets are difficult to obtain because privacy concerns prevent the majority of intrusion detection agencies from sharing their sensitive data. In this paper, we propose the use of mimic learning to transfer intrusion detection knowledge from a teacher model trained on private data to a student model. This student model provides a means of publicly sharing knowledge extracted from private data without sharing the data itself. Our results confirm that the proposed scheme can produce a student intrusion detection model that mimics the teacher model without requiring access to the original dataset.
