Dominik Britz

Addressing materials' microstructure diversity using transfer learning

Jul 29, 2021

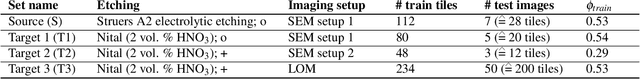

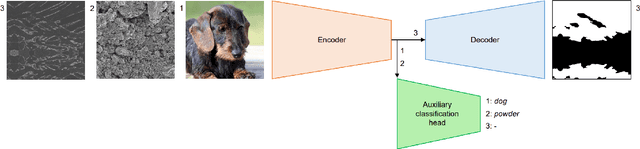

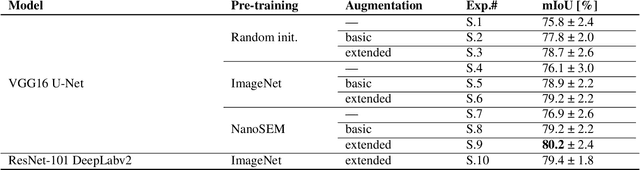

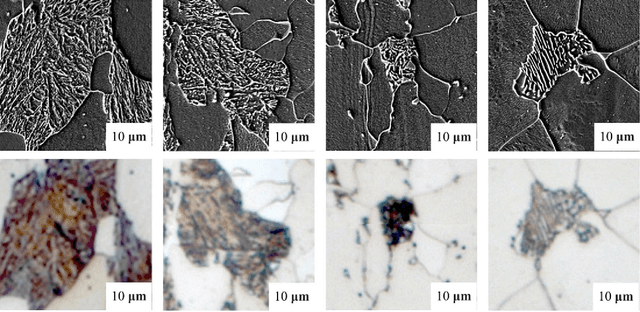

Abstract: Materials' microstructures are signatures of their alloying composition and processing history. Therefore, microstructures exist in a wide variety. As materials become increasingly complex to comply with engineering demands, advanced computer vision (CV) approaches such as deep learning (DL) inevitably gain relevance for quantifying microstructures' constituents from micrographs. While DL can outperform classical CV techniques for many tasks, its shortcomings are poor data efficiency and generalizability across datasets. This is inherently in conflict with the expense associated with annotating materials data through experts and extensive materials diversity. To tackle poor domain generalizability and the lack of labeled data simultaneously, we propose to apply a sub-class of transfer learning methods called unsupervised domain adaptation (UDA). These algorithms address the task of finding domain-invariant features when supplied with annotated source data and unannotated target data, such that performance on the latter distribution is optimized despite the absence of annotations. As an example, this study is conducted on a lath-shaped bainite segmentation task in complex phase steel micrographs. Here, the domains to bridge are selected to be different metallographic specimen preparations (surface etchings) and distinct imaging modalities. We show that a state-of-the-art UDA approach surpasses the naïve application of source domain trained models on the target domain (generalization baseline) to a large extent. This holds true independent of the domain shift, despite using little data, and even when the baseline models were pre-trained or employed data augmentation. Through UDA, mIoU was improved over generalization baselines from 82.2%, 61.0%, 49.7% to 84.7%, 67.3%, 73.3% on three target datasets, respectively. This underlines this technique's potential to cope with materials variance.
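The results above are reported as mIoU (mean intersection over union). As a reading aid, the following is a minimal sketch of how that metric is commonly computed for integer label maps. The function name `mean_iou`, the choice to skip classes absent from both maps, and the toy arrays are assumptions for illustration; the abstract does not state how the authors computed or averaged the metric.

```python
import numpy as np

def mean_iou(pred, target, num_classes):
    """Average per-class IoU over classes that occur in pred or target.

    Assumption: classes absent from both maps are skipped rather than
    counted as IoU = 0 or 1; other conventions exist.
    """
    ious = []
    for c in range(num_classes):
        pred_c = pred == c
        target_c = target == c
        union = np.logical_or(pred_c, target_c).sum()
        if union == 0:
            continue  # class c appears in neither map
        intersection = np.logical_and(pred_c, target_c).sum()
        ious.append(intersection / union)
    return float(np.mean(ious)) if ious else float("nan")

# Toy 2x4 label maps (hypothetical data): 0 = matrix, 1 = lath-shaped bainite
target = np.array([[0, 0, 1, 1],
                   [0, 0, 1, 1]])
pred = np.array([[0, 0, 0, 1],
                 [0, 0, 1, 1]])

miou = mean_iou(pred, target, num_classes=2)
print(f"mIoU = {miou:.3f}")  # class 0: 4/5, class 1: 3/4, mean 0.775
```

In this toy case one bainite pixel is mislabeled as matrix, which lowers the IoU of both classes at once; this is why mIoU penalizes boundary errors more visibly than plain pixel accuracy.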

Advanced Steel Microstructural Classification by Deep Learning Methods

Feb 15, 2018

Abstract: The inner structure of a material is called microstructure. It stores the genesis of a material and determines all its physical and chemical properties. While microstructural characterization is widespread and well known, microstructural classification is mostly done manually by human experts, which gives rise to uncertainties due to subjectivity. Since the microstructure can be a combination of different phases or constituents with complex substructures, its automatic classification is very challenging and only a few prior studies exist. Prior works focused on features designed and engineered by experts and classified microstructures separately from the feature extraction step. Recently, Deep Learning methods have shown strong performance in vision applications by learning the features from data together with the classification step. In this work, we propose a Deep Learning method for microstructural classification, using certain microstructural constituents of low carbon steel as examples. This novel method employs pixel-wise segmentation via Fully Convolutional Neural Networks (FCNN) accompanied by a max-voting scheme. Our system achieves 93.94% classification accuracy, drastically outperforming the state-of-the-art method of 48.89% accuracy. Beyond the strong performance of our method, this line of research offers a more robust and, above all, objective way to approach the difficult task of steel quality appreciation.
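The abstract pairs pixel-wise segmentation with a max-voting scheme but gives no details of the vote. A minimal sketch of one plausible reading follows: the class predicted for the most pixels becomes the label of the whole region. The function name `max_vote`, the optional `ignore_label` parameter, and the example array are hypothetical and not taken from the paper.

```python
import numpy as np

def max_vote(segmentation, num_classes, ignore_label=None):
    """Return the class that wins a per-pixel majority vote.

    Assumption: every pixel casts one equally weighted vote; pixels
    equal to ignore_label (e.g. background) are excluded if given.
    """
    labels = np.asarray(segmentation).ravel()
    if ignore_label is not None:
        labels = labels[labels != ignore_label]
    counts = np.bincount(labels, minlength=num_classes)
    return int(np.argmax(counts))

# Hypothetical per-pixel predictions for one segmented object (3 classes)
seg = np.array([[0, 2, 2],
                [2, 1, 2],
                [0, 2, 2]])

label = max_vote(seg, num_classes=3)
print(label)  # class 2 holds 6 of the 9 votes
```

Voting over pixels makes the region label tolerant of scattered misclassified pixels, which is one reason such a scheme can be more robust than classifying the whole image in a single step.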
