Dipkamal Bhusal

Concept-Based Masking: A Patch-Agnostic Defense Against Adversarial Patch Attacks

Oct 05, 2025

Abstract:Adversarial patch attacks pose a practical threat to deep learning models by forcing targeted misclassifications through localized perturbations, often realized in the physical world. Existing defenses typically assume prior knowledge of patch size or location, limiting their applicability. In this work, we propose a patch-agnostic defense that leverages concept-based explanations to identify and suppress the most influential concept activation vectors, thereby neutralizing patch effects without explicit detection. Evaluated on Imagenette with a ResNet-50, our method achieves higher robust and clean accuracy than the state-of-the-art PatchCleanser, while maintaining strong performance across varying patch sizes and locations. Our results highlight the promise of combining interpretability with robustness and suggest concept-driven defenses as a scalable strategy for securing machine learning models against adversarial patch attacks.

Revisiting Static Feature-Based Android Malware Detection

Sep 11, 2024

Abstract:The increasing reliance on machine learning (ML) in computer security, particularly for malware classification, has driven significant advancements. However, the replicability and reproducibility of these results are often overlooked, leading to challenges in verifying research findings. This paper highlights critical pitfalls that undermine the validity of ML research in Android malware detection, focusing on dataset and methodological issues. We comprehensively analyze Android malware detection using two datasets and assess offline and continual learning settings with six widely used ML models. Our study reveals that when properly tuned, simpler baseline methods can often outperform more complex models. To address reproducibility challenges, we propose solutions for improving datasets and methodological practices, enabling fairer model comparisons. Additionally, we open-source our code to facilitate malware analysis, making it extensible for new models and datasets. Our paper aims to support future research in Android malware detection and other security domains, enhancing the reliability and reproducibility of published results.

SECURE: Benchmarking Generative Large Language Models for Cybersecurity Advisory

May 30, 2024

Abstract:Large Language Models (LLMs) have demonstrated potential in cybersecurity applications but have also lowered confidence because of problems such as hallucinations and a lack of truthfulness. Existing benchmarks provide general evaluations but do not sufficiently address the practical and applied aspects of LLM performance in cybersecurity-specific tasks. To address this gap, we introduce SECURE (Security Extraction, Understanding & Reasoning Evaluation), a benchmark designed to assess LLM performance in realistic cybersecurity scenarios. SECURE includes six datasets focused on the Industrial Control System sector to evaluate knowledge extraction, understanding, and reasoning based on industry-standard sources. Our study evaluates seven state-of-the-art models on these tasks, provides insights into their strengths and weaknesses in cybersecurity contexts, and offers recommendations for improving LLM reliability as cyber advisory tools.

PASA: Attack Agnostic Unsupervised Adversarial Detection using Prediction & Attribution Sensitivity Analysis

Apr 12, 2024

Abstract:Deep neural networks for classification are vulnerable to adversarial attacks, where small perturbations to input samples lead to incorrect predictions. This susceptibility, combined with the black-box nature of such networks, limits their adoption in critical applications like autonomous driving. Feature-attribution-based explanation methods quantify the relevance of input features to model predictions on input samples, thus explaining model decisions. However, we observe that both model predictions and feature attributions for input samples are sensitive to noise. We develop a practical method that exploits this sensitivity of model prediction and feature attribution to detect adversarial samples. Our method, PASA, requires the computation of two test statistics using model prediction and feature attribution and can reliably detect adversarial samples using thresholds learned from benign samples. We validate our lightweight approach by evaluating the performance of PASA on varying strengths of FGSM, PGD, BIM, and CW attacks on multiple image and non-image datasets. On average, we outperform state-of-the-art statistical unsupervised adversarial detectors on CIFAR-10 and ImageNet by 14% and 35% in ROC-AUC score, respectively. Moreover, our approach demonstrates competitive performance even when an adversary is aware of the defense mechanism.
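The detection idea in this abstract (two noise-sensitivity statistics, with thresholds learned from benign samples) can be illustrated with a small sketch. This is not the PASA implementation: the tiny random network, the gradient-times-input attribution, the L1 distances, the noise scale `sigma`, and the two-sided percentile band are all assumptions chosen to keep the example self-contained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in model: a fixed one-hidden-layer tanh network
# with 8 input features, 16 hidden units, and 3 classes.
W1 = rng.normal(size=(16, 8))
W2 = rng.normal(size=(3, 16))

def logits(x):
    return W2 @ np.tanh(W1 @ x)

def attribution(x):
    # Gradient-times-input attribution for the predicted class (an assumed choice).
    h = np.tanh(W1 @ x)
    c = int(np.argmax(W2 @ h))
    grad = W1.T @ (W2[c] * (1.0 - h ** 2))  # d logit_c / d x
    return grad * x

def sensitivity_stats(x, sigma=0.1, seed=0):
    # Compare the clean input against one noisy copy of it.
    noise = np.random.default_rng(seed).normal(scale=sigma, size=x.shape)
    x_noisy = x + noise
    pred_stat = np.abs(logits(x_noisy) - logits(x)).sum()
    attr_stat = np.abs(attribution(x_noisy) - attribution(x)).sum()
    return pred_stat, attr_stat

def fit_thresholds(benign, lo=2.5, hi=97.5):
    # Learn a [low, high] band per statistic from benign samples only.
    stats = np.array([sensitivity_stats(x) for x in benign])
    return np.percentile(stats, [lo, hi], axis=0)  # shape (2, 2)

def is_flagged(x, bands):
    # Flag a sample when either statistic leaves its benign band.
    s = np.array(sensitivity_stats(x))
    return bool(np.any((s < bands[0]) | (s > bands[1])))
```

Calling `fit_thresholds` on a set of benign inputs and then `is_flagged` on a new input gives an unsupervised decision that needs no adversarial examples at training time. Whether adversarial inputs shift each statistic up or down depends on the model and attack, which is why this sketch uses a two-sided band.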

Looking Beyond IoCs: Automatically Extracting Attack Patterns from External CTI

Nov 01, 2022

Abstract:Public and commercial companies extensively share cyber threat intelligence (CTI) to prepare systems to defend against emerging cyberattacks. Most intelligence used thus far has been limited to tracking known threat indicators such as IP addresses and domain names, as they are easier to extract using regular expressions. Because indicators have a limited useful lifetime and are difficult to analyze over the long term, we propose using significantly more robust threat intelligence signals called attack patterns. However, extracting attack patterns at scale is a challenging task. In this paper, we present LADDER, a knowledge extraction framework that can extract text-based attack patterns from CTI reports at scale. The model characterizes attack patterns by capturing phases of an attack in Android and enterprise networks. It then systematically maps them to the MITRE ATT&CK pattern framework. We present several use cases to demonstrate the application of LADDER for SOC analysts in determining the presence of attack vectors belonging to emerging attacks in preparation for defenses in advance.
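To make concrete why indicators such as IP addresses and domain names are the easy case, here is a minimal regular-expression sketch. It is not part of LADDER; the patterns and the `extract_iocs` helper are illustrative assumptions that ignore defanged indicators, IPv6, and top-level-domain validation.

```python
import re

# Illustrative patterns only (assumptions, not taken from any CTI tool).
IPV4_RE = re.compile(
    r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"
)
DOMAIN_RE = re.compile(
    r"\b(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,}\b",
    re.IGNORECASE,
)

def extract_iocs(text):
    """Return de-duplicated, sorted IPv4 addresses and domain names found in text."""
    return {
        "ipv4": sorted(set(IPV4_RE.findall(text))),
        "domain": sorted(set(DOMAIN_RE.findall(text))),
    }

report = "The dropper contacted 192.168.10.5 and evil-update.example.com before exfiltration"
print(extract_iocs(report))
```

A phrase like "the dropper contacted a server before exfiltration" describes attacker behavior, yet no pattern of this kind captures it. That gap is what motivates extracting attack patterns with a learned model.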

SoK: Modeling Explainability in Security Monitoring for Trust, Privacy, and Interpretability

Oct 31, 2022

Abstract:Trust, privacy, and interpretability have emerged as significant concerns for experts deploying deep learning models for security monitoring. Due to their black-box nature, these models cannot provide an intuitive understanding of the machine learning predictions, which are crucial in several decision-making applications, like anomaly detection. Security operations centers have a number of security monitoring tools that analyze logs and generate threat alerts, which security analysts inspect. The alerts lack sufficient explanation of why they were raised or the context in which they occurred. Existing explanation methods for security also suffer from low fidelity and low stability and ignore privacy concerns. However, explanations are highly desirable; therefore, we systematize this knowledge on explanation models so they can ensure trust and privacy in security monitoring. Through our collaborative study of security operation centers, security monitoring tools, and explanation techniques, we discuss the strengths of existing methods and concerns vis-a-vis applications, such as security log analysis. We present a pipeline to design interpretable and privacy-preserving system monitoring tools. Additionally, we define and propose quantitative metrics to evaluate methods in explainable security. Finally, we discuss challenges and list exciting research directions for exploration.

CyNER: A Python Library for Cybersecurity Named Entity Recognition

Apr 08, 2022

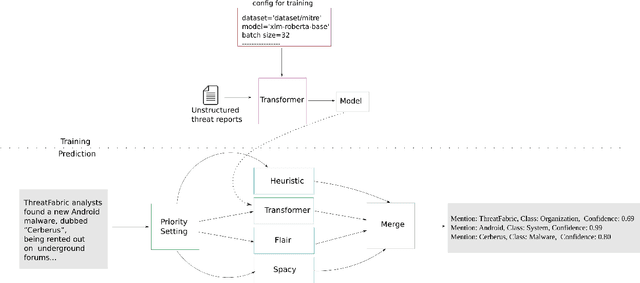

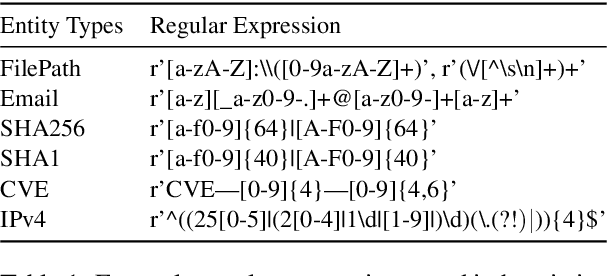

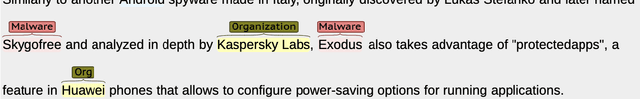

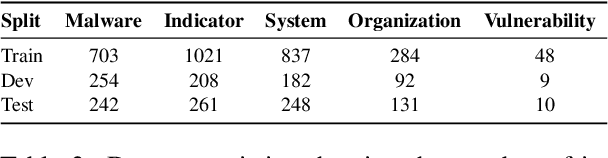

Abstract:Open Cyber threat intelligence (OpenCTI) information is available in an unstructured format from heterogeneous sources on the Internet. We present CyNER, an open-source Python library for cybersecurity named entity recognition (NER). CyNER combines transformer-based models for extracting cybersecurity-related entities, heuristics for extracting different indicators of compromise, and publicly available NER models for generic entity types. We provide models trained on a diverse corpus that users can readily use. Events are described as classes in previous research, MALOnt2.0 (Christian et al., 2021) and MALOnt (Rastogi et al., 2020), which together extract a wide range of malware attack details from a threat intelligence corpus. The user can combine predictions from multiple different approaches to suit their needs. The library is made publicly available.

Adversarial Patterns: Building Robust Android Malware Classifiers

Mar 04, 2022

Abstract:Deep learning-based classifiers have substantially improved recognition of malware samples. However, these classifiers can be vulnerable to adversarial input perturbations. Any vulnerability in malware classifiers poses significant threats to the platforms they defend. Therefore, to create stronger defense models against malware, we must understand the patterns in input perturbations caused by an adversary. This survey paper presents a comprehensive study on adversarial machine learning for Android malware classifiers. We first present an extensive background in building a machine learning classifier for Android malware, covering both image-based and text-based feature extraction approaches. Then, we examine the patterns and advancements in state-of-the-art research on evasion attacks and defenses. Finally, we present guidelines for designing robust malware classifiers and list research directions for the future.

Multi-Label Classification of Thoracic Diseases using Dense Convolutional Network on Chest Radiographs

Feb 08, 2022

Abstract:Chest X-ray images are one of the most common medical diagnosis techniques to identify different thoracic diseases. However, identification of pathologies in X-ray images requires skilled personnel and is often cited as a time-consuming task with varying levels of interpretation, particularly in cases where the identification of disease from images alone is difficult for human eyes. With recent achievements of deep learning in image classification, its application in disease diagnosis has been widely explored. This research project presents a multi-label disease diagnosis model of chest X-rays. Using a Dense Convolutional Neural Network (DenseNet), the diagnosis system was able to obtain high classification predictions. The model obtained the highest AUC score of 0.896 for the condition Cardiomegaly and the lowest AUC score, 0.655, for Nodule. The model also localized the parts of the chest radiograph that indicated the presence of each pathology using Grad-CAM, thus contributing to the interpretability of a deep learning algorithm.
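In a multi-label setting, an AUC score is reported separately for each condition, as with Cardiomegaly and Nodule above. A minimal sketch of that per-label computation follows. The `roc_auc` and `per_label_auc` helpers are hypothetical, and the labels and scores are toy values invented for illustration; they are not the paper's data and do not reproduce its results.

```python
import numpy as np

def roc_auc(y_true, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly; ties count half."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("AUC needs at least one positive and one negative example")
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)

def per_label_auc(Y, S, names):
    """Compute one AUC per label column of a multi-label problem."""
    Y, S = np.asarray(Y), np.asarray(S)
    return {name: roc_auc(Y[:, j], S[:, j]) for j, name in enumerate(names)}

# Toy ground-truth labels (rows: images, columns: conditions) and model scores.
Y = [[0, 1], [0, 0], [1, 1], [1, 0]]
S = [[0.1, 0.6], [0.4, 0.7], [0.35, 0.2], [0.8, 0.3]]
print(per_label_auc(Y, S, ["Cardiomegaly", "Nodule"]))
```

Reporting one score per condition makes it visible when a model ranks some pathologies well and others poorly, which a single pooled score would hide.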
