Diego A. Mesa

The Value of Nullspace Tuning Using Partial Label Information

Mar 17, 2020

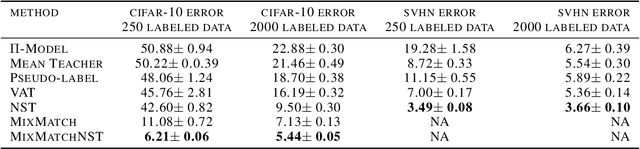

Abstract: In semi-supervised learning, information from unlabeled examples is used to improve the model learned from labeled examples. But in some learning problems, partial label information can be inferred from otherwise unlabeled examples and used to further improve the model. In particular, partial label information exists when subsets of training examples are known to have the same label, even though the label itself is missing. By encouraging a model to give the same label to all such examples, we can potentially improve its performance. We call this encouragement *Nullspace Tuning* because the difference vector between any pair of examples with the same label should lie in the nullspace of a linear model. In this paper, we investigate the benefit of partial label information using a careful comparison framework over well-characterized public datasets. We show that the additional information provided by partial labels reduces test error relative to good semi-supervised methods, usually by a factor of 2 and by up to a factor of 5.5 in the best case. We also show that adding Nullspace Tuning to the newer, state-of-the-art MixMatch method decreases its test error by up to a factor of 1.8.
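The nullspace idea in the abstract can be illustrated with a minimal sketch. It assumes a purely linear model with weight matrix `W` and a squared-norm penalty; the function name `nullspace_penalty`, the penalty form, and the toy data are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

def nullspace_penalty(W, X, pairs):
    """Mean squared norm of W @ (x_i - x_j) over pairs known to share a label.

    Hypothetical helper: the penalty is zero exactly when every difference
    vector lies in the nullspace of the linear map W.
    """
    idx = np.asarray(pairs)
    diffs = X[idx[:, 0]] - X[idx[:, 1]]   # shape (n_pairs, n_features)
    mapped = diffs @ W.T                  # shape (n_pairs, n_outputs)
    return float(np.mean(np.sum(mapped ** 2, axis=1)))

# Toy linear model that reads only feature 0.
W = np.array([[1.0, 0.0]])
X = np.array([[2.0, 0.0],
              [2.0, 5.0],
              [3.0, 5.0]])

# Examples 0 and 1 differ only along feature 1, which W ignores.
aligned = nullspace_penalty(W, X, [(0, 1)])
# Examples 0 and 2 also differ along feature 0, which W does not ignore.
misaligned = nullspace_penalty(W, X, [(0, 2)])
```

In a training loop, a term like this would typically be added to the supervised loss with a weighting coefficient, so that pairs known to share a label are pushed toward the same output.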

A Distributed Framework for the Construction of Transport Maps

Oct 28, 2018

Abstract: The need to reason about uncertainty in large, complex, and multi-modal datasets has become increasingly common across modern scientific environments. The ability to transform samples from one distribution $P$ to another distribution $Q$ enables the solution of many problems in machine learning (e.g., Bayesian inference, generative modeling) and has been actively pursued from theoretical, computational, and application perspectives across the fields of information theory, computer science, and biology. Performing such transformations, in general, still leads to computational difficulties, especially in high dimensions. Here, we consider the problem of computing such "measure transport maps" with efficient and parallelizable methods. Under the mild assumptions that $P$ need not be known but can be sampled from, that the density of $Q$ is known up to a proportionality constant, and that $Q$ is log-concave, we provide in this work a convex optimization problem pertaining to relative entropy minimization. We show how an empirical minimization formulation and polynomial chaos map parameterization can allow for learning a transport map between $P$ and $Q$ with distributed and scalable methods. We also leverage findings from nonequilibrium thermodynamics to represent the transport map as a composition of simpler maps, each of which is learned sequentially with a transport-cost-regularized version of the aforementioned problem formulation. We provide examples of our framework within the context of Bayesian inference for the Boston housing dataset and generative modeling for handwritten digit images from the MNIST dataset.
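As a rough one-dimensional illustration of the empirical relative entropy formulation described above, the sketch below swaps the polynomial chaos parameterization for an affine map $S(x) = ax + b$ with $a > 0$ and assumes $Q$ is a standard normal, so its log-density is $-y^2/2$ up to a constant. Under those assumptions the sample objective is the mean of $\tfrac{1}{2} S(x)^2 - \log a$, which is convex in $(a, b)$ and has a closed-form minimizer. The names and the toy data are illustrative, not the paper's code.

```python
import numpy as np

def empirical_objective(a, b, x):
    """Sample average of -log q(S(x)) - log S'(x) for S(x) = a*x + b.

    Assumes q is the unnormalized standard normal density exp(-y**2 / 2),
    so -log q(y) = y**2 / 2 up to an additive constant.
    """
    y = a * x + b
    return float(np.mean(0.5 * y ** 2 - np.log(a)))

# Samples from P, which is treated as unknown apart from these draws.
rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=5000)

# Setting the gradient to zero gives a = 1/std(x) and b = -a * mean(x).
a_star = 1.0 / x.std()
b_star = -a_star * x.mean()

# Pushing the samples through the fitted map standardizes them.
y = a_star * x + b_star
```

Because the objective is a plain average over samples, its value and gradient can be accumulated over data shards independently, which is the property that makes distributed solvers a natural fit for this kind of problem.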
