Deshuang Huang

Exact Fit Attention in Node-Holistic Graph Convolutional Network for Improved EEG-Based Driver Fatigue Detection

Jan 25, 2025

Abstract: EEG-based fatigue monitoring can effectively reduce the incidence of fatigue-related traffic accidents. Over the past decade, with the advancement of deep learning, convolutional neural networks (CNNs) have been increasingly used for EEG signal processing. However, because EEG data are non-Euclidean, existing CNNs may lose important spatial information, specifically the correlation between channels. We therefore propose the node-holistic graph convolutional network (NHGNet), a model that uses graph convolution to dynamically learn each channel's features. With exact fit attention optimization, the network captures inter-channel correlations through a trainable adjacency matrix. Interpretability is enhanced by revealing critical areas of brain activity and their interrelations in various mental states. In validations on two public datasets, NHGNet outperforms state-of-the-art methods. Specifically, in intra-subject experiments NHGNet improved detection accuracy by at least 2.34% and 3.42%, and in inter-subject experiments it improved accuracy by at least 2.09% and 15.06%. Visualization of the model revealed that the central parietal area plays an important role in detecting fatigue levels, whereas the frontal and temporal lobes are essential for maintaining vigilance.
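The idea of a graph convolution over EEG channels with a trainable adjacency matrix can be illustrated with a minimal NumPy sketch. This is a generic graph convolution layer, not NHGNet itself: the function names, the symmetric normalization, the ReLU activation, and the array shapes are all assumptions made for illustration, and the exact fit attention mechanism is not modeled here.

```python
import numpy as np

def normalize_adjacency(a):
    """Symmetrically normalize an adjacency matrix (assumed scheme, not from the paper)."""
    a = np.maximum(a, 0.0)           # keep edge weights non-negative
    a = 0.5 * (a + a.T)              # symmetrize channel-to-channel weights
    a = a + np.eye(a.shape[0])       # add self-loops so each channel keeps its own features
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))  # degrees are >= 1 because of the self-loops
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def graph_conv(x, adjacency, weight):
    """One graph convolution step with ReLU.

    x:         (n_channels, n_features) per-channel EEG features
    adjacency: (n_channels, n_channels) matrix that would be learned during training
    weight:    (n_features, n_out) feature projection
    """
    return np.maximum(normalize_adjacency(adjacency) @ x @ weight, 0.0)

# Hypothetical sizes: 4 EEG channels, 8 features per channel, 3 output features.
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))
adjacency = rng.random((4, 4))       # stands in for the trainable adjacency matrix
weight = rng.standard_normal((8, 3))
out = graph_conv(x, adjacency, weight)
```

In a trained model the entries of `adjacency` would be updated by gradient descent together with `weight`, so the learned matrix can be inspected afterwards to see which channel pairs the network treats as strongly related.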

Single-Stage Broad Multi-Instance Multi-Label Learning with Diverse Inter-Correlations and its application to medical image classification

Sep 06, 2022

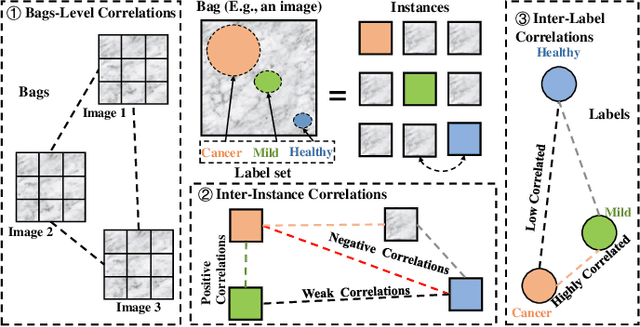

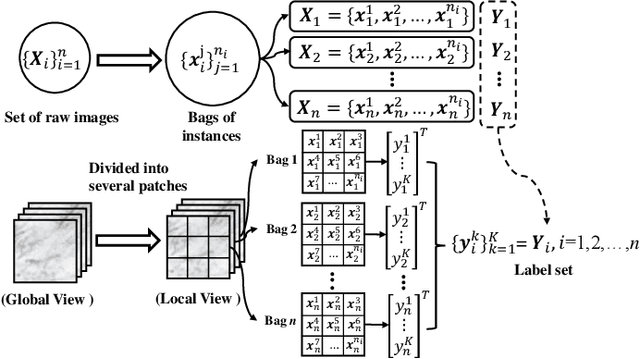

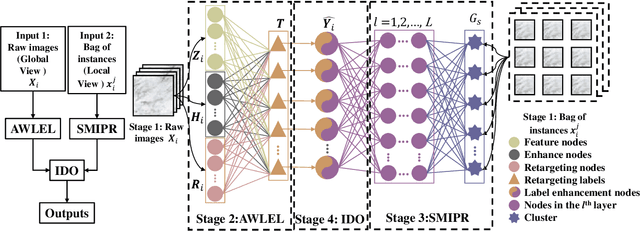

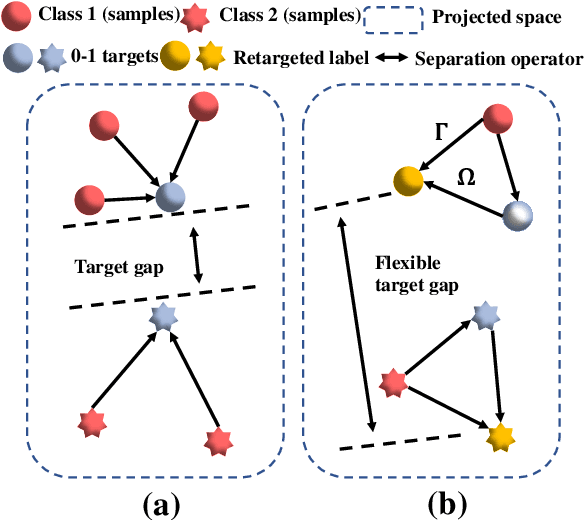

Abstract: In many real-world applications, one object (e.g., an image) can be represented or described by multiple instances (e.g., image patches) and simultaneously associated with multiple labels. Such applications can be formulated as multi-instance multi-label learning (MIML) problems and have been extensively studied during the past few years. Existing MIML methods are useful in many applications, but most of them suffer from relatively low accuracy and training efficiency due to several issues: i) the inter-label correlations (i.e., the probabilistic correlations between the multiple labels corresponding to an object) are neglected; ii) the inter-instance correlations cannot be learned directly (or jointly) with other types of correlations because instance labels are missing; iii) diverse inter-correlations (e.g., inter-label correlations, inter-instance correlations) can only be learned in multiple stages. To resolve these issues, a new single-stage framework called broad multi-instance multi-label learning (BMIML) is proposed. BMIML has three innovative modules: i) an auto-weighted label enhancement learning (AWLEL) module based on the broad learning system (BLS); ii) a specific MIML neural network called scalable multi-instance probabilistic regression (SMIPR); iii) an interactive decision optimization (IDO) module. As a result, BMIML can learn diverse inter-correlations between whole images, instances, and labels simultaneously in a single stage, giving higher classification accuracy and much shorter training time. Experiments show that BMIML is highly competitive with (or even better than) existing methods in accuracy and much faster than most MIML methods, even for large medical image data sets (> 90K images).
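The MIML problem setup described above (one object, several instances, several labels) can be made concrete with a small NumPy sketch. This is a generic max-pooling baseline, not BMIML or any of its modules: the linear instance scorer, the max aggregation, the 0.5 threshold, and all names and sizes are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bag_label_probabilities(instances, weight, bias):
    """Score one bag (object) against every label.

    instances: (n_instances, n_features), e.g. feature vectors of image patches
    weight:    (n_features, n_labels) hypothetical linear instance scorer
    bias:      (n_labels,)
    Returns a (n_labels,) vector: a label's probability for the bag is the
    highest probability any single instance assigns to it.
    """
    instance_probs = sigmoid(instances @ weight + bias)  # (n_instances, n_labels)
    return instance_probs.max(axis=0)

# Hypothetical sizes: one image split into 5 patches, 16 features each, 3 labels.
rng = np.random.default_rng(0)
bag = rng.standard_normal((5, 16))
weight = rng.standard_normal((16, 3))
bias = np.zeros(3)

probs = bag_label_probabilities(bag, weight, bias)
predicted_labels = probs >= 0.5   # a bag can carry several labels at once
```

This baseline scores each label independently and ignores inter-label and inter-instance correlations, which is the limitation of existing methods that the abstract identifies.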
