Deok-Seon Kim

Reconstructing Unseen Sentences from Speech-related Biosignals for Open-vocabulary Neural Communication

Oct 31, 2025

Abstract: Brain-to-speech (BTS) systems represent a groundbreaking approach to human communication by enabling the direct transformation of neural activity into linguistic expressions. While recent non-invasive BTS studies have largely focused on decoding predefined words or sentences, achieving open-vocabulary neural communication comparable to natural human interaction requires decoding unconstrained speech. Additionally, effectively integrating diverse signals derived from speech is crucial for developing personalized and adaptive neural communication and rehabilitation solutions for patients. This study investigates the potential of speech synthesis for previously unseen sentences across various speech modes by leveraging phoneme-level information extracted from high-density electroencephalography (EEG) signals, both independently and in conjunction with electromyography (EMG) signals. Furthermore, we examine the properties affecting phoneme decoding accuracy during sentence reconstruction and offer neurophysiological insights to further enhance EEG decoding for more effective neural communication solutions. Our findings underscore the feasibility of biosignal-based sentence-level speech synthesis for reconstructing unseen sentences, highlighting a significant step toward developing open-vocabulary neural communication systems adapted to diverse patient needs and conditions. Additionally, this study provides meaningful insights into the development of communication and rehabilitation solutions utilizing EEG-based decoding technologies.

Imagined Speech and Visual Imagery as Intuitive Paradigms for Brain-Computer Interfaces

Nov 14, 2024

Abstract: Recent advancements in brain-computer interface (BCI) technology have emphasized the promise of imagined speech and visual imagery as effective paradigms for intuitive communication. This study investigates the classification performance and brain connectivity patterns associated with these paradigms, focusing on decoding accuracy across selected word classes. Sixteen participants engaged in tasks involving thirteen imagined speech and visual imagery classes, revealing above-chance classification accuracy for both paradigms. Variability in classification accuracy across individual classes highlights the influence of sensory and motor associations in imagined speech and vivid visual associations in visual imagery. Connectivity analysis further demonstrated increased functional connectivity in language-related and sensory regions for imagined speech, whereas visual imagery activated spatial and visual processing networks. These findings suggest the potential of imagined speech and visual imagery as intuitive and scalable paradigms for BCI communication when optimal word classes are selected. Further exploration of the decoding outcomes for these two paradigms could provide insights for practical BCI communication.

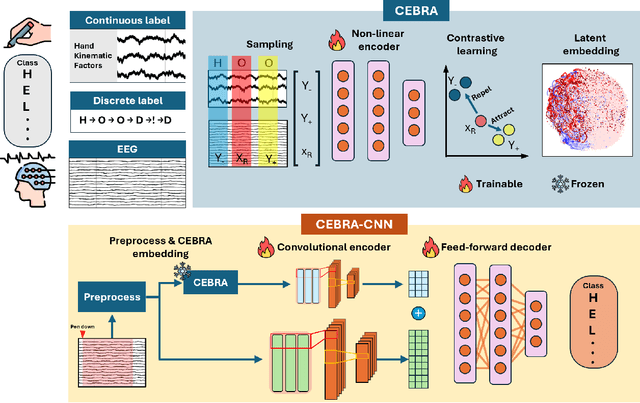

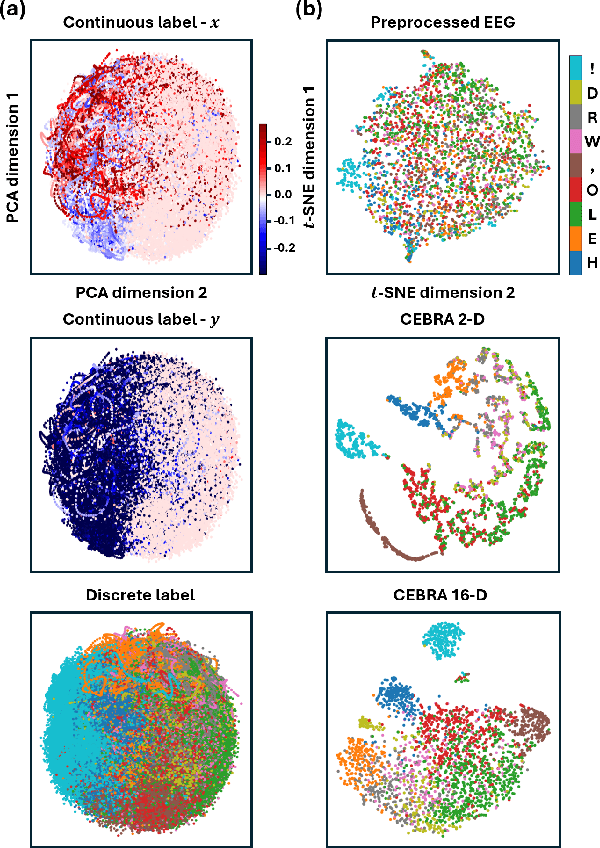

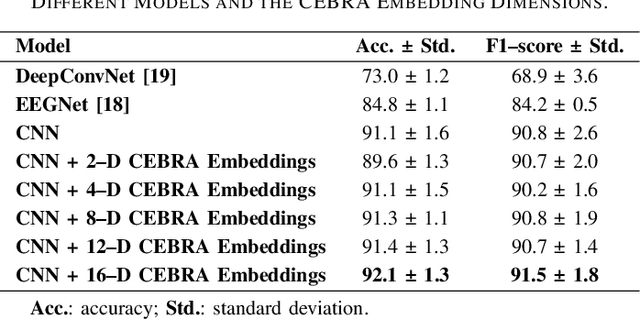

Towards Scalable Handwriting Communication via EEG Decoding and Latent Embedding Integration

Nov 14, 2024

Abstract: In recent years, brain-computer interfaces have made advances in decoding various motor-related tasks, including gesture recognition and movement classification, utilizing electroencephalogram (EEG) data. These developments are fundamental in exploring how neural signals can be interpreted to recognize specific physical actions. This study centers on a written alphabet classification task, where we aim to decode EEG signals associated with handwriting. To achieve this, we incorporate hand kinematics to guide the extraction of the consistent embeddings from high-dimensional neural recordings using auxiliary variables (CEBRA). These CEBRA embeddings, along with the EEG, are processed by a parallel convolutional neural network model that extracts features from both data sources simultaneously. The model classifies nine different handwritten characters, including symbols such as exclamation marks and commas, within the alphabet. We evaluate the model using a quantitative five-fold cross-validation approach and explore the structure of the embedding space through visualizations. Our approach achieves a classification accuracy of 91% for the nine-class task, demonstrating the feasibility of fine-grained handwriting decoding from EEG.
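The five-fold cross-validation mentioned in this abstract can be illustrated with a minimal, generic sketch. It is not the authors' code: the function names `five_fold_indices` and `cross_validated_accuracy`, the shuffling seed, and the `fit_predict` callback are all hypothetical, and the abstract does not say how trials were shuffled or split.

```python
import random


def five_fold_indices(n_samples, n_folds=5, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # assumption: trials are shuffled once
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
        for i in range(n_folds)
    ]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def cross_validated_accuracy(fit_predict, labels, n_folds=5, seed=0):
    """Average held-out accuracy over the folds.

    fit_predict(train_idx, test_idx) is a hypothetical callback that trains on
    the training trials and returns one predicted label per test trial.
    """
    accuracies = []
    for train_idx, test_idx in five_fold_indices(len(labels), n_folds, seed):
        predictions = fit_predict(train_idx, test_idx)
        correct = sum(p == labels[i] for p, i in zip(predictions, test_idx))
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / len(accuracies)
```

Each trial lands in exactly one test fold, so the reported figure is an average over five held-out subsets rather than a single train/test split.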

Enhanced Generative Adversarial Networks for Unseen Word Generation from EEG Signals

Nov 14, 2023

Abstract: Recent advances in brain-computer interface (BCI) technology, particularly those based on generative adversarial networks (GANs), have shown great promise for improving decoding performance. Within BCI, GANs are applied in many areas. They serve as a valuable tool for data augmentation, which can address the challenge of limited data availability, and for synthesis, effectively expanding the dataset and creating novel data formats, thus enhancing the robustness and adaptability of BCI systems. Research in speech-related paradigms has expanded significantly, with a critical impact on the advancement of assistive technologies and communication support for individuals with speech impairments. In this study, GANs were investigated, particularly for the BCI field, and applied to generate text from EEG signals. The GANs could generalize across all subjects and decode unseen words, indicating their ability to capture underlying speech patterns consistent across different individuals. The method has practical applications in neural signal-based speech recognition systems and communication aids for individuals with speech difficulties.
