Ji-Ha Park

Toward Practical BCI: A Real-time Wireless Imagined Speech EEG Decoding System

Nov 11, 2025

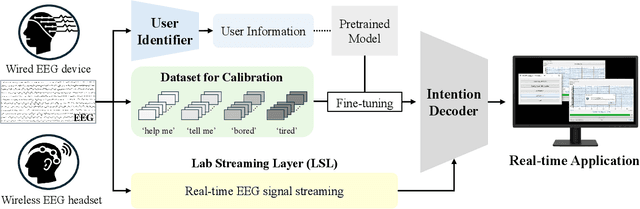

Abstract: Brain-computer interface (BCI) research, while promising, has largely been confined to static and fixed environments, limiting real-world applicability. To move towards practical BCI, we introduce a real-time wireless imagined speech electroencephalogram (EEG) decoding system designed for flexibility and everyday use. Our framework focuses on practicality, demonstrating extensibility beyond wired EEG devices to portable, wireless hardware. A user identification module recognizes the operator and provides a personalized, user-specific service. To achieve seamless, real-time operation, we utilize the lab streaming layer to manage the continuous streaming of live EEG signals to the personalized decoder. This end-to-end pipeline enables a functional real-time application capable of classifying user commands from imagined speech EEG signals, achieving an overall 4-class accuracy of 62.00 % on a wired device and 46.67 % on a portable wireless headset. This paper demonstrates a significant step towards truly practical and accessible BCI technology, establishing a clear direction for future research in robust, practical, and personalized neural interfaces.

Imagined Speech and Visual Imagery as Intuitive Paradigms for Brain-Computer Interfaces

Nov 14, 2024

Abstract: Recent advancements in brain-computer interface (BCI) technology have emphasized the promise of imagined speech and visual imagery as effective paradigms for intuitive communication. This study investigates the classification performance and brain connectivity patterns associated with these paradigms, focusing on decoding accuracy across selected word classes. Sixteen participants engaged in tasks involving thirteen imagined speech and visual imagery classes, revealing above-chance classification accuracy for both paradigms. Variability in classification accuracy across individual classes highlights the influence of sensory and motor associations in imagined speech and vivid visual associations in visual imagery. Connectivity analysis further demonstrated increased functional connectivity in language-related and sensory regions for imagined speech, whereas visual imagery activated spatial and visual processing networks. These findings suggest the potential of imagined speech and visual imagery as an intuitive and scalable paradigm for BCI communication when selecting optimal word classes. Further exploration of the decoding outcomes for these two paradigms could provide insights for practical BCI communication.

Dynamic Neural Communication: Convergence of Computer Vision and Brain-Computer Interface

Nov 14, 2024

Abstract: Interpreting human neural signals to decode static speech intentions such as text or images and dynamic speech intentions such as audio or video is showing great potential as an innovative communication tool. Human communication accompanies various features, such as articulatory movements, facial expressions, and internal speech, all of which are reflected in neural signals. However, most studies only generate short or fragmented outputs, while providing informative communication by leveraging various features from neural signals remains challenging. In this study, we introduce a dynamic neural communication method that leverages current computer vision and brain-computer interface technologies. Our approach captures the user's intentions from neural signals and decodes visemes in short time steps to produce dynamic visual outputs. The results demonstrate the potential to rapidly capture and reconstruct lip movements during natural speech attempts from human neural signals, enabling dynamic neural communication through the convergence of computer vision and brain-computer interface.

Brain-Driven Representation Learning Based on Diffusion Model

Nov 14, 2023

Abstract: Interpreting EEG signals linked to spoken language presents a complex challenge, given the data's intricate temporal and spatial attributes, as well as the various noise factors. Denoising diffusion probabilistic models (DDPMs), which have recently gained prominence in diverse areas for their capabilities in representation learning, are explored in our research as a means to address this issue. Using DDPMs in conjunction with a conditional autoencoder, our new approach considerably outperforms traditional machine learning algorithms and established baseline models in accuracy. Our results highlight the potential of DDPMs as a sophisticated computational method for the analysis of speech-related EEG signals. This could lead to significant advances in brain-computer interfaces tailored for spoken communication.
